记录:337

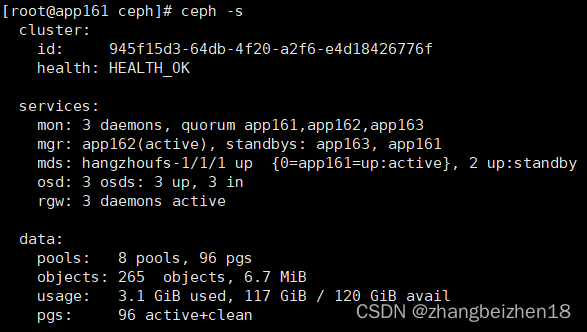

场景:在CentOS 7.9操作系统上,在ceph集群中,使用ceph命令查看ceph集群信息,以及mon、mgr、mds、osd、rgw等组件信息。

版本:

操作系统:CentOS 7.9

ceph版本:ceph-13.2.10

名词:

ceph:ceph集群的工具命令。

1.基础环境

在规划集群主机上需安装ceph-deploy、ceph、ceph-radosgw软件。

(1)集群主节点安装软件

安装命令:yum install -y ceph-deploy ceph-13.2.10

安装命令:yum install -y ceph-radosgw-13.2.10

解析:集群中主节点安装ceph-deploy、ceph、ceph-radosgw软件。

(2)集群从节点安装软件

安装命令:yum install -y ceph-13.2.10

安装命令:yum install -y ceph-radosgw-13.2.10

解析:集群中从节点安装ceph、ceph-radosgw软件。

2.命令应用

ceph命令,在集群主节点的/etc/ceph目录下使用。

(1)查看ceph版本

命令:ceph --version

解析:查的当前主机安装ceph的版本。

(2)查看集群状态

命令:ceph -s

解析:查看集群状态,使用频率高。

(3)查看集群实时状态

命令:ceph -w

解析:查看集群实时状态,使用命令时,控制台实时监控集群变化。

(4)查看mgr服务

命令:ceph mgr services

解析:命令会打印出:"dashboard": "https://app162:18443/";使用浏览器就能登录dashboard。

(5)查看mon 状态汇总信息

命令:ceph mon stat

解析:汇总mon状态。

(6)查看mds状态汇总信息

命令:ceph mds stat

解析:汇总mds状态。

(7)查看osd状态汇总信息

命令:ceph osd stat

解析:汇总osd状态。

(8)创建pool

命令:ceph osd pool create hz_data 16

解析:创建一个存储池,名称hz_data,分配16个pg。

(9)查看存储池

命令:ceph osd pool ls

解析:能看到存储列表。

(10)查看pool的pg数量

命令:ceph osd pool get hz_data pg_num

解析:查看pool的pg_num数量。

(11)设置pool的pg数量

命令:ceph osd pool set hz_data pg_num 18

解析:设置pool的pg_num数量。

(12)删除pool

命令:ceph osd pool delete hz_data hz_data --yes-i-really-really-mean-it

解析:删除pool时,pool的名称需要传两次。

(13)创建ceph文件系统

命令:ceph fs new hangzhoufs xihu_metadata xihu_data

解析:使用ceph fs new创建ceph文件系统;文件系统名称:hangzhoufs;存储池xihu_data和xihu_metadata。

(14)查ceph文件系统

命令:ceph fs ls

解析:查看ceph文件系统,打印文件系统名称和存储池。

(15)查ceph文件系统状态

命令:ceph fs status

解析:查ceph文件系统状态,打印文件系统的Pool的信息、类型等。

(16)删除ceph文件系统

命令:ceph fs rm hangzhoufs --yes-i-really-mean-it

解析:hangzhoufs是已创建的ceph文件系统名称。

(17)查看服务状态

命令:ceph service status

解析:查看服务状态,查看服务最后一次反应时间。

(18)查看节点quorum状态

命令:ceph quorum_status

解析:查看节点quorum状态。

(19)查看pg状态

命令:ceph pg stat

解析:查看pg状态;pg,placement group。

(20)查看pg清单

命令:ceph pg ls

解析:列出所有pg。

(21)查看osd磁盘信息

命令:ceph osd df

解析:打印osd磁盘信息,包括容量,可用空间,已经使用空间等。

3.命令帮助手册

(1)ceph帮助命令

命令:ceph --help

解析:查看ceph支持全部命令和选项,在实际工作中,查看这个手册应该是必备之选。

General usage:

==============

usage: ceph [-h] [-c CEPHCONF] [-i INPUT_FILE] [-o OUTPUT_FILE]

[--setuser SETUSER] [--setgroup SETGROUP] [--id CLIENT_ID]

[--name CLIENT_NAME] [--cluster CLUSTER]

[--admin-daemon ADMIN_SOCKET] [-s] [-w] [--watch-debug]

[--watch-info] [--watch-sec] [--watch-warn] [--watch-error]

[--watch-channel {cluster,audit,*}] [--version] [--verbose]

[--concise] [-f {json,json-pretty,xml,xml-pretty,plain}]

[--connect-timeout CLUSTER_TIMEOUT] [--block] [--period PERIOD]

Ceph administration tool

optional arguments:

-h, --help request mon help

-c CEPHCONF, --conf CEPHCONF

ceph configuration file

-i INPUT_FILE, --in-file INPUT_FILE

input file, or "-" for stdin

-o OUTPUT_FILE, --out-file OUTPUT_FILE

output file, or "-" for stdout

--setuser SETUSER set user file permission

--setgroup SETGROUP set group file permission

--id CLIENT_ID, --user CLIENT_ID

client id for authentication

--name CLIENT_NAME, -n CLIENT_NAME

client name for authentication

--cluster CLUSTER cluster name

--admin-daemon ADMIN_SOCKET

submit admin-socket commands ("help" for help

-s, --status show cluster status

-w, --watch watch live cluster changes

--watch-debug watch debug events

--watch-info watch info events

--watch-sec watch security events

--watch-warn watch warn events

--watch-error watch error events

--watch-channel {cluster,audit,*}

which log channel to follow when using -w/--watch. One

of ['cluster', 'audit', '*']

--version, -v display version

--verbose make verbose

--concise make less verbose

-f {json,json-pretty,xml,xml-pretty,plain}, --format {json,json-pretty,xml,xml-pretty,plain}

--connect-timeout CLUSTER_TIMEOUT

set a timeout for connecting to the cluster

--block block until completion (scrub and deep-scrub only)

--period PERIOD, -p PERIOD

polling period, default 1.0 second (for polling

commands only)

Local commands:

===============

ping <mon.id> Send simple presence/life test to a mon

<mon.id> may be 'mon.*' for all mons

daemon {type.id|path} <cmd>

Same as --admin-daemon, but auto-find admin socket

daemonperf {type.id | path} [stat-pats] [priority] [<interval>] [<count>]

daemonperf {type.id | path} list|ls [stat-pats] [priority]

Get selected perf stats from daemon/admin socket

Optional shell-glob comma-delim match string stat-pats

Optional selection priority (can abbreviate name):

critical, interesting, useful, noninteresting, debug

List shows a table of all available stats

Run <count> times (default forever),

once per <interval> seconds (default 1)

Monitor commands:

=================

auth add <entity> {<caps> [<caps>...]} add auth info for <entity> from input file, or random key

if no input is given, and/or any caps specified in the

command

auth caps <entity> <caps> [<caps>...] update caps for <name> from caps specified in the command

auth export {<entity>} write keyring for requested entity, or master keyring if

none given

auth get <entity> write keyring file with requested key

auth get-key <entity> display requested key

auth get-or-create <entity> {<caps> [<caps>...]} add auth info for <entity> from input file, or random key

if no input given, and/or any caps specified in the command

auth get-or-create-key <entity> {<caps> [<caps>...]} get, or add, key for <name> from system/caps pairs

specified in the command. If key already exists, any

given caps must match the existing caps for that key.

auth import auth import: read keyring file from -i <file>

auth ls list authentication state

auth print-key <entity> display requested key

auth print_key <entity> display requested key

auth rm <entity> remove all caps for <name>

balancer dump <plan> Show an optimization plan

balancer eval {<option>} Evaluate data distribution for the current cluster or

specific pool or specific plan

balancer eval-verbose {<option>} Evaluate data distribution for the current cluster or

specific pool or specific plan (verbosely)

balancer execute <plan> Execute an optimization plan

balancer ls List all plans

balancer mode none|crush-compat|upmap Set balancer mode

balancer off Disable automatic balancing

balancer on Enable automatic balancing

balancer optimize <plan> {<pools> [<pools>...]} Run optimizer to create a new plan

balancer reset Discard all optimization plans

balancer rm <plan> Discard an optimization plan

balancer show <plan> Show details of an optimization plan

balancer sleep <secs> Set balancer sleep interval

balancer status Show balancer status

config assimilate-conf Assimilate options from a conf, and return a new, minimal

conf file

config dump Show all configuration option(s)

config get <who> {<key>} Show configuration option(s) for an entity

config help <key> Describe a configuration option

config log {<int>} Show recent history of config changes

config reset <int> Revert configuration to previous state

config rm <who> <name> Clear a configuration option for one or more entities

config set <who> <name> <value> Set a configuration option for one or more entities

config show <who> {<key>} Show running configuration

config show-with-defaults <who> Show running configuration (including compiled-in defaults)

config-key dump {<key>} dump keys and values (with optional prefix)

config-key exists <key> check for <key>'s existence

config-key get <key> get <key>

config-key ls list keys

config-key rm <key> rm <key>

config-key set <key> {<val>} set <key> to value <val>

crash info <id> show crash dump metadata

crash json_report <hours> Crashes in the last <hours> hours

crash ls Show saved crash dumps

crash post Add a crash dump (use -i <jsonfile>)

crash prune <keep> Remove crashes older than <keep> days

crash rm <id> Remove a saved crash <id>

crash self-test Run a self test of the crash module

crash stat Summarize recorded crashes

dashboard create-self-signed-cert Create self signed certificate

dashboard get-enable-browsable-api Get the ENABLE_BROWSABLE_API option value

dashboard get-rest-requests-timeout Get the REST_REQUESTS_TIMEOUT option value

dashboard get-rgw-api-access-key Get the RGW_API_ACCESS_KEY option value

dashboard get-rgw-api-admin-resource Get the RGW_API_ADMIN_RESOURCE option value

dashboard get-rgw-api-host Get the RGW_API_HOST option value

dashboard get-rgw-api-port Get the RGW_API_PORT option value

dashboard get-rgw-api-scheme Get the RGW_API_SCHEME option value

dashboard get-rgw-api-secret-key Get the RGW_API_SECRET_KEY option value

dashboard get-rgw-api-ssl-verify Get the RGW_API_SSL_VERIFY option value

dashboard get-rgw-api-user-id Get the RGW_API_USER_ID option value

dashboard set-enable-browsable-api <value> Set the ENABLE_BROWSABLE_API option value

dashboard set-login-credentials <username> <password> Set the login credentials

dashboard set-rest-requests-timeout <int> Set the REST_REQUESTS_TIMEOUT option value

dashboard set-rgw-api-access-key <value> Set the RGW_API_ACCESS_KEY option value

dashboard set-rgw-api-admin-resource <value> Set the RGW_API_ADMIN_RESOURCE option value

dashboard set-rgw-api-host <value> Set the RGW_API_HOST option value

dashboard set-rgw-api-port <int> Set the RGW_API_PORT option value

dashboard set-rgw-api-scheme <value> Set the RGW_API_SCHEME option value

dashboard set-rgw-api-secret-key <value> Set the RGW_API_SECRET_KEY option value

dashboard set-rgw-api-ssl-verify <value> Set the RGW_API_SSL_VERIFY option value

dashboard set-rgw-api-user-id <value> Set the RGW_API_USER_ID option value

dashboard set-session-expire <int> Set the session expire timeout

df {detail} show cluster free space stats

features report of connected features

fs add_data_pool <fs_name> <pool> add data pool <pool>

fs authorize <filesystem> <entity> <caps> [<caps>...] add auth for <entity> to access file system <filesystem>

based on following directory and permissions pairs

fs dump {<int[0-]>} dump all CephFS status, optionally from epoch

fs flag set enable_multiple <val> {--yes-i-really-mean-it} Set a global CephFS flag

fs get <fs_name> get info about one filesystem

fs ls list filesystems

fs new <fs_name> <metadata> <data> {--force} {--allow- make new filesystem using named pools <metadata> and <data>

dangerous-metadata-overlay}

fs reset <fs_name> {--yes-i-really-mean-it} disaster recovery only: reset to a single-MDS map

fs rm <fs_name> {--yes-i-really-mean-it} disable the named filesystem

fs rm_data_pool <fs_name> <pool> remove data pool <pool>

fs set <fs_name> max_mds|max_file_size|allow_new_snaps| set fs parameter <var> to <val>

inline_data|cluster_down|allow_dirfrags|balancer|standby_

count_wanted|session_timeout|session_autoclose|down|

joinable|min_compat_client <val> {<confirm>}

fs set-default <fs_name> set the default to the named filesystem

fs status {<fs>} Show the status of a CephFS filesystem

fsid show cluster FSID/UUID

health {detail} show cluster health

heap dump|start_profiler|stop_profiler|release|stats show heap usage info (available only if compiled with

tcmalloc)

hello {<person_name>} Prints hello world to mgr.x.log

influx config-set <key> <value> Set a configuration value

influx config-show Show current configuration

influx self-test debug the module

influx send Force sending data to Influx

injectargs <injected_args> [<injected_args>...] inject config arguments into monitor

iostat Get IO rates

iostat self-test Run a self test the iostat module

log <logtext> [<logtext>...] log supplied text to the monitor log

log last {<int[1-]>} {debug|info|sec|warn|error} {*| print last few lines of the cluster log

cluster|audit}

mds compat rm_compat <int[0-]> remove compatible feature

mds compat rm_incompat <int[0-]> remove incompatible feature

mds compat show show mds compatibility settings

mds count-metadata <property> count MDSs by metadata field property

mds fail <role_or_gid> Mark MDS failed: trigger a failover if a standby is

available

mds metadata {<who>} fetch metadata for mds <role>

mds repaired <role> mark a damaged MDS rank as no longer damaged

mds rm <int[0-]> remove nonactive mds

mds rmfailed <role> {<confirm>} remove failed mds

mds set_state <int[0-]> <int[0-20]> set mds state of <gid> to <numeric-state>

mds stat show MDS status

mds versions check running versions of MDSs

mgr count-metadata <property> count ceph-mgr daemons by metadata field property

mgr dump {<int[0-]>} dump the latest MgrMap

mgr fail <who> treat the named manager daemon as failed

mgr metadata {<who>} dump metadata for all daemons or a specific daemon

mgr module disable <module> disable mgr module

mgr module enable <module> {--force} enable mgr module

mgr module ls list active mgr modules

mgr self-test background start <workload> Activate a background workload (one of command_spam, throw_

exception)

mgr self-test background stop Stop background workload if any is running

mgr self-test config get <key> Peek at a configuration value

mgr self-test config get_localized <key> Peek at a configuration value (localized variant)

mgr self-test remote Test inter-module calls

mgr self-test run Run mgr python interface tests

mgr services list service endpoints provided by mgr modules

mgr versions check running versions of ceph-mgr daemons

mon add <name> <IPaddr[:port]> add new monitor named <name> at <addr>

mon compact cause compaction of monitor's leveldb/rocksdb storage

mon count-metadata <property> count mons by metadata field property

mon dump {<int[0-]>} dump formatted monmap (optionally from epoch)

mon feature ls {--with-value} list available mon map features to be set/unset

mon feature set <feature_name> {--yes-i-really-mean-it} set provided feature on mon map

mon getmap {<int[0-]>} get monmap

mon metadata {<id>} fetch metadata for mon <id>

mon rm <name> remove monitor named <name>

mon scrub scrub the monitor stores

mon stat summarize monitor status

mon sync force {--yes-i-really-mean-it} {--i-know-what-i- force sync of and clear monitor store

am-doing}

mon versions check running versions of monitors

mon_status report status of monitors

node ls {all|osd|mon|mds|mgr} list all nodes in cluster [type]

osd add-nodown <ids> [<ids>...] mark osd(s) <id> [<id>...] as nodown, or use <all|any> to

mark all osds as nodown

osd add-noin <ids> [<ids>...] mark osd(s) <id> [<id>...] as noin, or use <all|any> to

mark all osds as noin

osd add-noout <ids> [<ids>...] mark osd(s) <id> [<id>...] as noout, or use <all|any> to

mark all osds as noout

osd add-noup <ids> [<ids>...] mark osd(s) <id> [<id>...] as noup, or use <all|any> to

mark all osds as noup

osd blacklist add|rm <EntityAddr> {<float[0.0-]>} add (optionally until <expire> seconds from now) or remove

<addr> from blacklist

osd blacklist clear clear all blacklisted clients

osd blacklist ls show blacklisted clients

osd blocked-by print histogram of which OSDs are blocking their peers

osd count-metadata <property> count OSDs by metadata field property

osd crush add <osdname (id|osd.id)> <float[0.0-]> <args> add or update crushmap position and weight for <name> with

[<args>...] <weight> and location <args>

osd crush add-bucket <name> <type> {<args> [<args>...]} add no-parent (probably root) crush bucket <name> of type

<type> to location <args>

osd crush class ls list all crush device classes

osd crush class ls-osd <class> list all osds belonging to the specific <class>

osd crush class rename <srcname> <dstname> rename crush device class <srcname> to <dstname>

osd crush create-or-move <osdname (id|osd.id)> <float[0.0- create entry or move existing entry for <name> <weight> at/

]> <args> [<args>...] to location <args>

osd crush dump dump crush map

osd crush get-tunable straw_calc_version get crush tunable <tunable>

osd crush link <name> <args> [<args>...] link existing entry for <name> under location <args>

osd crush ls <node> list items beneath a node in the CRUSH tree

osd crush move <name> <args> [<args>...] move existing entry for <name> to location <args>

osd crush rename-bucket <srcname> <dstname> rename bucket <srcname> to <dstname>

osd crush reweight <name> <float[0.0-]> change <name>'s weight to <weight> in crush map

osd crush reweight-all recalculate the weights for the tree to ensure they sum

correctly

osd crush reweight-subtree <name> <float[0.0-]> change all leaf items beneath <name> to <weight> in crush

map

osd crush rm <name> {<ancestor>} remove <name> from crush map (everywhere, or just at

<ancestor>)

osd crush rm-device-class <ids> [<ids>...] remove class of the osd(s) <id> [<id>...],or use <all|any>

to remove all.

osd crush rule create-erasure <name> {<profile>} create crush rule <name> for erasure coded pool created

with <profile> (default default)

osd crush rule create-replicated <name> <root> <type> create crush rule <name> for replicated pool to start from

{<class>} <root>, replicate across buckets of type <type>, using a

choose mode of <firstn|indep> (default firstn; indep best

for erasure pools)

osd crush rule create-simple <name> <root> <type> {firstn| create crush rule <name> to start from <root>, replicate

indep} across buckets of type <type>, using a choose mode of

<firstn|indep> (default firstn; indep best for erasure

pools)

osd crush rule dump {<name>} dump crush rule <name> (default all)

osd crush rule ls list crush rules

osd crush rule ls-by-class <class> list all crush rules that reference the same <class>

osd crush rule rename <srcname> <dstname> rename crush rule <srcname> to <dstname>

osd crush rule rm <name> remove crush rule <name>

osd crush set <osdname (id|osd.id)> <float[0.0-]> <args> update crushmap position and weight for <name> to <weight>

[<args>...] with location <args>

osd crush set {<int>} set crush map from input file

osd crush set-all-straw-buckets-to-straw2 convert all CRUSH current straw buckets to use the straw2

algorithm

osd crush set-device-class <class> <ids> [<ids>...] set the <class> of the osd(s) <id> [<id>...],or use <all|

any> to set all.

osd crush set-tunable straw_calc_version <int> set crush tunable <tunable> to <value>

osd crush show-tunables show current crush tunables

osd crush swap-bucket <source> <dest> {--yes-i-really-mean- swap existing bucket contents from (orphan) bucket <source>

it} and <target>

osd crush tree {--show-shadow} dump crush buckets and items in a tree view

osd crush tunables legacy|argonaut|bobtail|firefly|hammer| set crush tunables values to <profile>

jewel|optimal|default

osd crush unlink <name> {<ancestor>} unlink <name> from crush map (everywhere, or just at

<ancestor>)

osd crush weight-set create <poolname> flat|positional create a weight-set for a given pool

osd crush weight-set create-compat create a default backward-compatible weight-set

osd crush weight-set dump dump crush weight sets

osd crush weight-set ls list crush weight sets

osd crush weight-set reweight <poolname> <item> <float[0.0- set weight for an item (bucket or osd) in a pool's weight-

]> [<float[0.0-]>...] set

osd crush weight-set reweight-compat <item> <float[0.0-]> set weight for an item (bucket or osd) in the backward-

[<float[0.0-]>...] compatible weight-set

osd crush weight-set rm <poolname> remove the weight-set for a given pool

osd crush weight-set rm-compat remove the backward-compatible weight-set

osd deep-scrub <who> initiate deep scrub on osd <who>, or use <all|any> to deep

scrub all

osd destroy <osdname (id|osd.id)> {--yes-i-really-mean-it} mark osd as being destroyed. Keeps the ID intact (allowing

reuse), but removes cephx keys, config-key data and

lockbox keys, rendering data permanently unreadable.

osd df {plain|tree} show OSD utilization

osd down <ids> [<ids>...] set osd(s) <id> [<id>...] down, or use <any|all> to set all

osds down

osd dump {<int[0-]>} print summary of OSD map

osd erasure-code-profile get <name> get erasure code profile <name>

osd erasure-code-profile ls list all erasure code profiles

osd erasure-code-profile rm <name> remove erasure code profile <name>

osd erasure-code-profile set <name> {<profile> [<profile>.. create erasure code profile <name> with [<key[=value]> ...]

.]} pairs. Add a --force at the end to override an existing

profile (VERY DANGEROUS)

osd find <osdname (id|osd.id)> find osd <id> in the CRUSH map and show its location

osd force-create-pg <pgid> {--yes-i-really-mean-it} force creation of pg <pgid>

osd get-require-min-compat-client get the minimum client version we will maintain

compatibility with

osd getcrushmap {<int[0-]>} get CRUSH map

osd getmap {<int[0-]>} get OSD map

osd getmaxosd show largest OSD id

osd in <ids> [<ids>...] set osd(s) <id> [<id>...] in, can use <any|all> to

automatically set all previously out osds in

osd last-stat-seq <osdname (id|osd.id)> get the last pg stats sequence number reported for this osd

osd lost <osdname (id|osd.id)> {--yes-i-really-mean-it} mark osd as permanently lost. THIS DESTROYS DATA IF NO MORE

REPLICAS EXIST, BE CAREFUL

osd ls {<int[0-]>} show all OSD ids

osd ls-tree {<int[0-]>} <name> show OSD ids under bucket <name> in the CRUSH map

osd lspools {<int>} list pools

osd map <poolname> <objectname> {<nspace>} find pg for <object> in <pool> with [namespace]

osd metadata {<osdname (id|osd.id)>} fetch metadata for osd {id} (default all)

osd new <uuid> {<osdname (id|osd.id)>} Create a new OSD. If supplied, the `id` to be replaced

needs to exist and have been previously destroyed. Reads

secrets from JSON file via `-i <file>` (see man page).

osd ok-to-stop <ids> [<ids>...] check whether osd(s) can be safely stopped without reducing

immediate data availability

osd out <ids> [<ids>...] set osd(s) <id> [<id>...] out, or use <any|all> to set all

osds out

osd pause pause osd

osd perf print dump of OSD perf summary stats

osd pg-temp <pgid> {<osdname (id|osd.id)> [<osdname (id| set pg_temp mapping pgid:[<id> [<id>...]] (developers only)

osd.id)>...]}

osd pg-upmap <pgid> <osdname (id|osd.id)> [<osdname (id| set pg_upmap mapping <pgid>:[<id> [<id>...]] (developers

osd.id)>...] only)

osd pg-upmap-items <pgid> <osdname (id|osd.id)> [<osdname ( set pg_upmap_items mapping <pgid>:{<id> to <id>, [...]} (

id|osd.id)>...] developers only)

osd pool application disable <poolname> <app> {--yes-i- disables use of an application <app> on pool <poolname>

really-mean-it}

osd pool application enable <poolname> <app> {--yes-i- enable use of an application <app> [cephfs,rbd,rgw] on pool

really-mean-it} <poolname>

osd pool application get {<poolname>} {<app>} {<key>} get value of key <key> of application <app> on pool

<poolname>

osd pool application rm <poolname> <app> <key> removes application <app> metadata key <key> on pool

<poolname>

osd pool application set <poolname> <app> <key> <value> sets application <app> metadata key <key> to <value> on

pool <poolname>

osd pool create <poolname> <int[0-]> {<int[0-]>} create pool

{replicated|erasure} {<erasure_code_profile>} {<rule>}

{<int>}

osd pool get <poolname> size|min_size|pg_num|pgp_num|crush_ get pool parameter <var>

rule|hashpspool|nodelete|nopgchange|nosizechange|write_

fadvise_dontneed|noscrub|nodeep-scrub|hit_set_type|hit_

set_period|hit_set_count|hit_set_fpp|use_gmt_hitset|auid|

target_max_objects|target_max_bytes|cache_target_dirty_

ratio|cache_target_dirty_high_ratio|cache_target_full_

ratio|cache_min_flush_age|cache_min_evict_age|erasure_

code_profile|min_read_recency_for_promote|all|min_write_

recency_for_promote|fast_read|hit_set_grade_decay_rate|

hit_set_search_last_n|scrub_min_interval|scrub_max_

interval|deep_scrub_interval|recovery_priority|recovery_

op_priority|scrub_priority|compression_mode|compression_

algorithm|compression_required_ratio|compression_max_blob_

size|compression_min_blob_size|csum_type|csum_min_block|

csum_max_block|allow_ec_overwrites

osd pool get-quota <poolname> obtain object or byte limits for pool

osd pool ls {detail} list pools

osd pool mksnap <poolname> <snap> make snapshot <snap> in <pool>

osd pool rename <poolname> <poolname> rename <srcpool> to <destpool>

osd pool rm <poolname> {<poolname>} {<sure>} remove pool

osd pool rmsnap <poolname> <snap> remove snapshot <snap> from <pool>

osd pool set <poolname> size|min_size|pg_num|pgp_num|crush_ set pool parameter <var> to <val>

rule|hashpspool|nodelete|nopgchange|nosizechange|write_

fadvise_dontneed|noscrub|nodeep-scrub|hit_set_type|hit_

set_period|hit_set_count|hit_set_fpp|use_gmt_hitset|

target_max_bytes|target_max_objects|cache_target_dirty_

ratio|cache_target_dirty_high_ratio|cache_target_full_

ratio|cache_min_flush_age|cache_min_evict_age|auid|min_

read_recency_for_promote|min_write_recency_for_promote|

fast_read|hit_set_grade_decay_rate|hit_set_search_last_n|

scrub_min_interval|scrub_max_interval|deep_scrub_interval|

recovery_priority|recovery_op_priority|scrub_priority|

compression_mode|compression_algorithm|compression_

required_ratio|compression_max_blob_size|compression_min_

blob_size|csum_type|csum_min_block|csum_max_block|allow_

ec_overwrites <val> {--yes-i-really-mean-it}

osd pool set-quota <poolname> max_objects|max_bytes <val> set object or byte limit on pool

osd pool stats {<poolname>} obtain stats from all pools, or from specified pool

osd primary-affinity <osdname (id|osd.id)> <float[0.0-1.0]> adjust osd primary-affinity from 0.0 <= <weight> <= 1.0

osd primary-temp <pgid> <osdname (id|osd.id)> set primary_temp mapping pgid:<id>|-1 (developers only)

osd purge <osdname (id|osd.id)> {--yes-i-really-mean-it} purge all osd data from the monitors. Combines `osd destroy`

, `osd rm`, and `osd crush rm`.

osd purge-new <osdname (id|osd.id)> {--yes-i-really-mean- purge all traces of an OSD that was partially created but

it} never started

osd repair <who> initiate repair on osd <who>, or use <all|any> to repair all

osd require-osd-release luminous|mimic {--yes-i-really- set the minimum allowed OSD release to participate in the

mean-it} cluster

osd reweight <osdname (id|osd.id)> <float[0.0-1.0]> reweight osd to 0.0 < <weight> < 1.0

osd reweight-by-pg {<int>} {<float>} {<int>} {<poolname> reweight OSDs by PG distribution [overload-percentage-for-

[<poolname>...]} consideration, default 120]

osd reweight-by-utilization {<int>} {<float>} {<int>} {-- reweight OSDs by utilization [overload-percentage-for-

no-increasing} consideration, default 120]

osd reweightn <weights> reweight osds with {<id>: <weight>,...})

osd rm <ids> [<ids>...] remove osd(s) <id> [<id>...], or use <any|all> to remove

all osds

osd rm-nodown <ids> [<ids>...] allow osd(s) <id> [<id>...] to be marked down (if they are

currently marked as nodown), can use <all|any> to

automatically filter out all nodown osds

osd rm-noin <ids> [<ids>...] allow osd(s) <id> [<id>...] to be marked in (if they are

currently marked as noin), can use <all|any> to

automatically filter out all noin osds

osd rm-noout <ids> [<ids>...] allow osd(s) <id> [<id>...] to be marked out (if they are

currently marked as noout), can use <all|any> to

automatically filter out all noout osds

osd rm-noup <ids> [<ids>...] allow osd(s) <id> [<id>...] to be marked up (if they are

currently marked as noup), can use <all|any> to

automatically filter out all noup osds

osd rm-pg-upmap <pgid> clear pg_upmap mapping for <pgid> (developers only)

osd rm-pg-upmap-items <pgid> clear pg_upmap_items mapping for <pgid> (developers only)

osd safe-to-destroy <ids> [<ids>...] check whether osd(s) can be safely destroyed without

reducing data durability

osd scrub <who> initiate scrub on osd <who>, or use <all|any> to scrub all

osd set full|pause|noup|nodown|noout|noin|nobackfill| set <key>

norebalance|norecover|noscrub|nodeep-scrub|notieragent|

nosnaptrim|sortbitwise|recovery_deletes|require_jewel_

osds|require_kraken_osds|pglog_hardlimit {--yes-i-really-

mean-it}

osd set-backfillfull-ratio <float[0.0-1.0]> set usage ratio at which OSDs are marked too full to

backfill

osd set-full-ratio <float[0.0-1.0]> set usage ratio at which OSDs are marked full

osd set-nearfull-ratio <float[0.0-1.0]> set usage ratio at which OSDs are marked near-full

osd set-require-min-compat-client <version> {--yes-i- set the minimum client version we will maintain

really-mean-it} compatibility with

osd setcrushmap {<int>} set crush map from input file

osd setmaxosd <int[0-]> set new maximum osd value

osd smart get <osd_id> Get smart data for osd.id

osd stat print summary of OSD map

osd status {<bucket>} Show the status of OSDs within a bucket, or all

osd test-reweight-by-pg {<int>} {<float>} {<int>} dry run of reweight OSDs by PG distribution [overload-

{<poolname> [<poolname>...]} percentage-for-consideration, default 120]

osd test-reweight-by-utilization {<int>} {<float>} {<int>} dry run of reweight OSDs by utilization [overload-

{--no-increasing} percentage-for-consideration, default 120]

osd tier add <poolname> <poolname> {--force-nonempty} add the tier <tierpool> (the second one) to base pool

<pool> (the first one)

osd tier add-cache <poolname> <poolname> <int[0-]> add a cache <tierpool> (the second one) of size <size> to

existing pool <pool> (the first one)

osd tier cache-mode <poolname> none|writeback|forward| specify the caching mode for cache tier <pool>

readonly|readforward|proxy|readproxy {--yes-i-really-mean-

it}

osd tier rm <poolname> <poolname> remove the tier <tierpool> (the second one) from base pool

<pool> (the first one)

osd tier rm-overlay <poolname> remove the overlay pool for base pool <pool>

osd tier set-overlay <poolname> <poolname> set the overlay pool for base pool <pool> to be

<overlaypool>

osd tree {<int[0-]>} {up|down|in|out|destroyed [up|down|in| print OSD tree

out|destroyed...]}

osd tree-from {<int[0-]>} <bucket> {up|down|in|out| print OSD tree in bucket

destroyed [up|down|in|out|destroyed...]}

osd unpause unpause osd

osd unset full|pause|noup|nodown|noout|noin|nobackfill| unset <key>

norebalance|norecover|noscrub|nodeep-scrub|notieragent|

nosnaptrim

osd utilization get basic pg distribution stats

osd versions check running versions of OSDs

pg cancel-force-backfill <pgid> [<pgid>...] restore normal backfill priority of <pgid>

pg cancel-force-recovery <pgid> [<pgid>...] restore normal recovery priority of <pgid>

pg debug unfound_objects_exist|degraded_pgs_exist show debug info about pgs

pg deep-scrub <pgid> start deep-scrub on <pgid>

pg dump {all|summary|sum|delta|pools|osds|pgs|pgs_brief show human-readable versions of pg map (only 'all' valid

[all|summary|sum|delta|pools|osds|pgs|pgs_brief...]} with plain)

pg dump_json {all|summary|sum|pools|osds|pgs [all|summary| show human-readable version of pg map in json only

sum|pools|osds|pgs...]}

pg dump_pools_json show pg pools info in json only

pg dump_stuck {inactive|unclean|stale|undersized|degraded show information about stuck pgs

[inactive|unclean|stale|undersized|degraded...]} {<int>}

pg force-backfill <pgid> [<pgid>...] force backfill of <pgid> first

pg force-recovery <pgid> [<pgid>...] force recovery of <pgid> first

pg getmap get binary pg map to -o/stdout

pg ls {<int>} {<states> [<states>...]} list pg with specific pool, osd, state

pg ls-by-osd <osdname (id|osd.id)> {<int>} {<states> list pg on osd [osd]

[<states>...]}

pg ls-by-pool <poolstr> {<states> [<states>...]} list pg with pool = [poolname]

pg ls-by-primary <osdname (id|osd.id)> {<int>} {<states> list pg with primary = [osd]

[<states>...]}

pg map <pgid> show mapping of pg to osds

pg repair <pgid> start repair on <pgid>

pg scrub <pgid> start scrub on <pgid>

pg stat show placement group status.

prometheus file_sd_config Return file_sd compatible prometheus config for mgr cluster

prometheus self-test Run a self test on the prometheus module

quorum enter|exit enter or exit quorum

quorum_status report status of monitor quorum

report {<tags> [<tags>...]} report full status of cluster, optional title tag strings

restful create-key <key_name> Create an API key with this name

restful create-self-signed-cert Create localized self signed certificate

restful delete-key <key_name> Delete an API key with this name

restful list-keys List all API keys

restful restart Restart API server

service dump dump service map

service status dump service state

status show cluster status

telegraf config-set <key> <value> Set a configuration value

telegraf config-show Show current configuration

telegraf self-test debug the module

telegraf send Force sending data to Telegraf

telemetry config-set <key> <value> Set a configuration value

telemetry config-show Show current configuration

telemetry self-test Perform a self-test

telemetry send Force sending data to Ceph telemetry

telemetry show Show last report or report to be sent

tell <name (type.id)> <args> [<args>...] send a command to a specific daemon

time-sync-status show time sync status

version show mon daemon version

versions check running versions of ceph daemons

zabbix config-set <key> <value> Set a configuration value

zabbix config-show Show current configuration

zabbix self-test Run a self-test on the Zabbix module

zabbix send Force sending data to Zabbix以上,感谢。

2022年11月26日

![23. [Python GUI] PyQt5中的模型与视图框架-抽象视图基类QAbstractItemView](https://img-blog.csdnimg.cn/img_convert/5505c6d90f2c403d5f6388f6ad571837.png)

![[附源码]java毕业设计中医药系统论文2022](https://img-blog.csdnimg.cn/57faca948a704c83883a9c442fc7ced0.png)