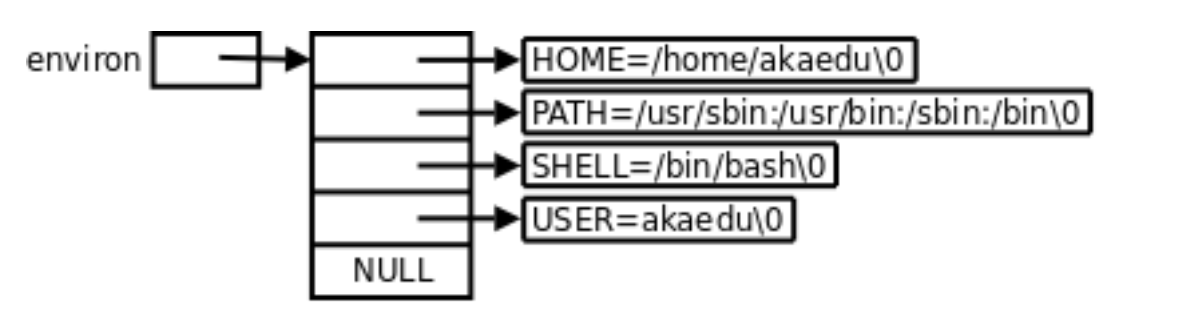

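PMD stands for Poll Mode Driver: a device driver that runs in user space and is built on a polling mechanism.

Leaving vfio aside, the structure of a PMD looks like this: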

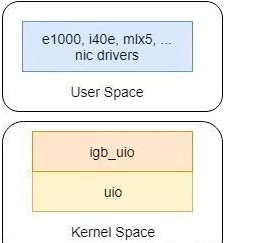

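Although a PMD implements the device driver in user space, it still relies on facilities provided by the kernel. The uio module is the kernel's framework for user-space drivers, and igb_uio is the kernel module shipped with the DPDK kit that talks to uio and binds the specified NIC.

When the DPDK script dpdk-devbind is used to bind a NIC, it talks to the kernel through sysfs and asks the kernel to match the NIC with the specified driver. Concretely, it either writes the driver name to /sys/bus/pci/devices/(pci id)/driver_override, or writes the PCI ID of the NIC to be bound to /sys/bus/pci/drivers/igb_uio (the driver name)/new_id. The former configures the device so that it selects a driver; the latter configures the driver so that it supports a new PCI device. According to the kernel documentation at https://www.kernel.org/doc/Documentation/ABI/testing/sysfs-bus-pci, writing new_id makes the driver probe for devices it can now support, whereas driver_override only records the preference and takes effect the next time the device is probed.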

Even so, the dpdk-devbind script also writes the pci id to /sys/bus/pci/drivers/igb_uio (the driver name)/bind to make sure the device really is bound.
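The sysfs writes described above can be sketched in C. This is only an illustration under stated assumptions, not the dpdk-devbind implementation: the helper names `sysfs_write` and `bind_pci_device` are made up here, and the `sysfs_root` parameter exists only so that the code can be tried against a scratch directory instead of the real /sys.

```c
#include <assert.h>
#include <stdio.h>

/* Write a string to a sysfs-style attribute file. Returns 0 on success. */
int sysfs_write(const char *path, const char *value)
{
    assert(path != NULL && value != NULL);

    FILE *f = fopen(path, "w");
    if (f == NULL)
        return -1;

    int ok = fputs(value, f) >= 0;
    /* Buffered data is flushed on close, so a failed write may show up here. */
    if (fclose(f) != 0)
        ok = 0;
    return ok ? 0 : -1;
}

/*
 * Hypothetical helper: bind the PCI device `bdf` (for example "0000:00:09.0")
 * to `driver`. `sysfs_root` is normally "/sys".
 */
int bind_pci_device(const char *sysfs_root, const char *bdf,
                    const char *driver)
{
    char path[512];

    /* Step 1: tell the device which driver it should accept. */
    snprintf(path, sizeof(path), "%s/bus/pci/devices/%s/driver_override",
             sysfs_root, bdf);
    if (sysfs_write(path, driver) != 0)
        return -1;

    /* Step 2: ask that driver to bind the device explicitly. */
    snprintf(path, sizeof(path), "%s/bus/pci/drivers/%s/bind",
             sysfs_root, driver);
    return sysfs_write(path, bdf);
}
```

A call such as `bind_pci_device("/sys", "0000:00:09.0", "igb_uio")` needs root privileges, and a device that is still bound to another driver has to be unbound first.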

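Once igb_uio has been bound to the device, the kernel calls the probe function of the struct pci_driver registered by igb_uio, namely igbuio_pci_probe.

That function calls uio_register_device to register the uio device.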

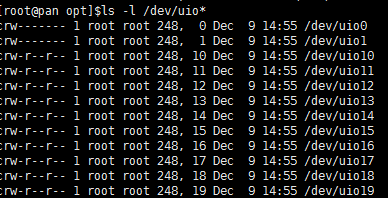

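Opening the uio device

From this point on the DPDK application layer can use the uio device. The DPDK application-layer code opens the uioX device in the function pci_uio_alloc_resource: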

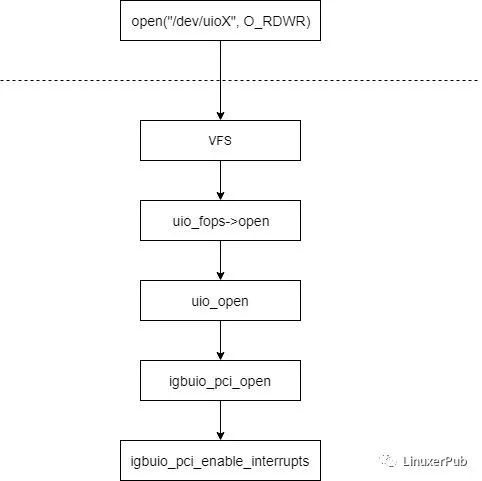

Opening the uio device runs uio_open in the kernel, which in turn calls the open function of igb_uio.

The default interrupt mode of igb_uio is RTE_INTR_MODE_MSIX. The key code in igbuio_pci_enable_interrupts is:

static int

igbuio_pci_enable_interrupts(struct rte_uio_pci_dev *udev)

{

int err = 0;

switch (igbuio_intr_mode_preferred) {

case RTE_INTR_MODE_MSIX:

/* Only 1 msi-x vector needed */

#ifndef HAVE_ALLOC_IRQ_VECTORS

msix_entry.entry = 0;

if (pci_enable_msix(udev->pdev, &msix_entry, 1) == 0) {

dev_dbg(&udev->pdev->dev, "using MSI-X");

udev->info.irq_flags = IRQF_NO_THREAD;

udev->info.irq = msix_entry.vector;

udev->mode = RTE_INTR_MODE_MSIX;

break;

}

#else

if (pci_alloc_irq_vectors(udev->pdev, 1, 1, PCI_IRQ_MSIX) == 1) {

dev_dbg(&udev->pdev->dev, "using MSI-X");

udev->info.irq_flags = IRQF_NO_THREAD;

udev->info.irq = pci_irq_vector(udev->pdev, 0);

udev->mode = RTE_INTR_MODE_MSIX;

break;

}

#endif

default:

dev_err(&udev->pdev->dev, "invalid IRQ mode %u",

igbuio_intr_mode_preferred);

udev->info.irq = UIO_IRQ_NONE;

err = -EINVAL;

}

if (udev->info.irq != UIO_IRQ_NONE)

err = request_irq(udev->info.irq, igbuio_pci_irqhandler,

udev->info.irq_flags, udev->info.name,

udev);

dev_info(&udev->pdev->dev, "uio device registered with irq %ld\n",

udev->info.irq);

return err;

}

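So opening the uio device makes igb_uio register an interrupt. A natural question at this point: isn't a PMD supposed to poll the device from user space? Why request an interrupt and register an interrupt handler at all? The reason is that even though the application layer implements the device driver through uio, some device events still have to be handled by the kernel, which then notifies the application layer. This is comparable to the top half and bottom half of an interrupt.

Top-half interrupt service routine: its main job is to deliver an event to user space, for example by waking up the tasks waiting on the idev->wait wait queue.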

/**

* This is interrupt handler which will check if the interrupt is for the right device.

* If yes, disable it here and will be enable later.

*/

static irqreturn_t

igbuio_pci_irqhandler(int irq, void *dev_id)

{

struct rte_uio_pci_dev *udev = (struct rte_uio_pci_dev *)dev_id;

struct uio_info *info = &udev->info;

/* Legacy mode need to mask in hardware */

if (udev->mode == RTE_INTR_MODE_LEGACY &&

!pci_check_and_mask_intx(udev->pdev))

return IRQ_NONE;

uio_event_notify(info);

/* Message signal mode, no share IRQ and automasked */

return IRQ_HANDLED;

}
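On the user-space side, the event raised by uio_event_notify is observed through the file descriptor of /dev/uioX: a blocking read of 4 bytes returns the interrupt count, and writing a 32-bit 1 asks the driver to re-enable the interrupt. The sketch below shows only that generic UIO convention; the function names `uio_wait_irq` and `uio_enable_irq` are assumptions made for illustration and are not DPDK APIs.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <stdint.h>
#include <unistd.h>

/*
 * Block until the UIO device behind `fd` reports an interrupt.
 * A read() of exactly 4 bytes yields the total event count so far.
 * Returns 0 and stores the count on success, -1 on error or short read.
 */
int uio_wait_irq(int fd, uint32_t *count)
{
    assert(count != NULL);

    ssize_t n = read(fd, count, sizeof(*count));
    return n == (ssize_t)sizeof(*count) ? 0 : -1;
}

/*
 * Ask the kernel driver to re-enable the interrupt after it was handled.
 * The 32-bit value written is passed to the driver's irqcontrol hook,
 * if the driver provides one.
 */
int uio_enable_irq(int fd)
{
    uint32_t on = 1;
    ssize_t n = write(fd, &on, sizeof(on));

    return n == (ssize_t)sizeof(on) ? 0 : -1;
}
```

Because the handler above masks the interrupt before notifying user space, a typical loop would call `uio_wait_irq`, handle the event, and then call `uio_enable_irq` before waiting again.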

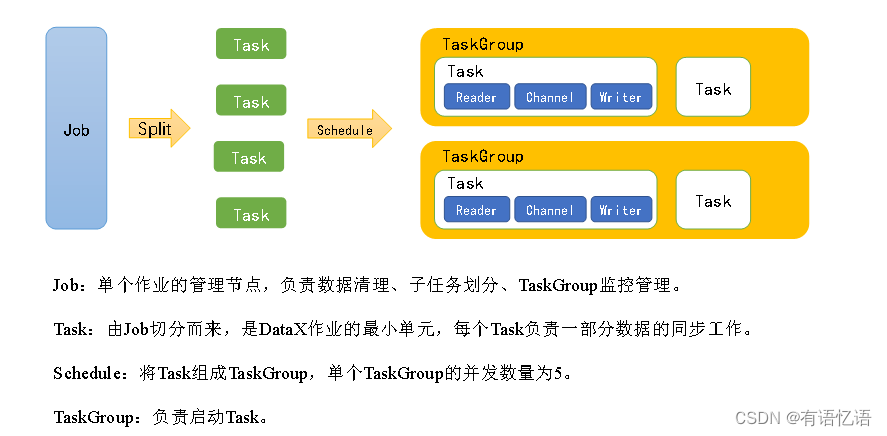

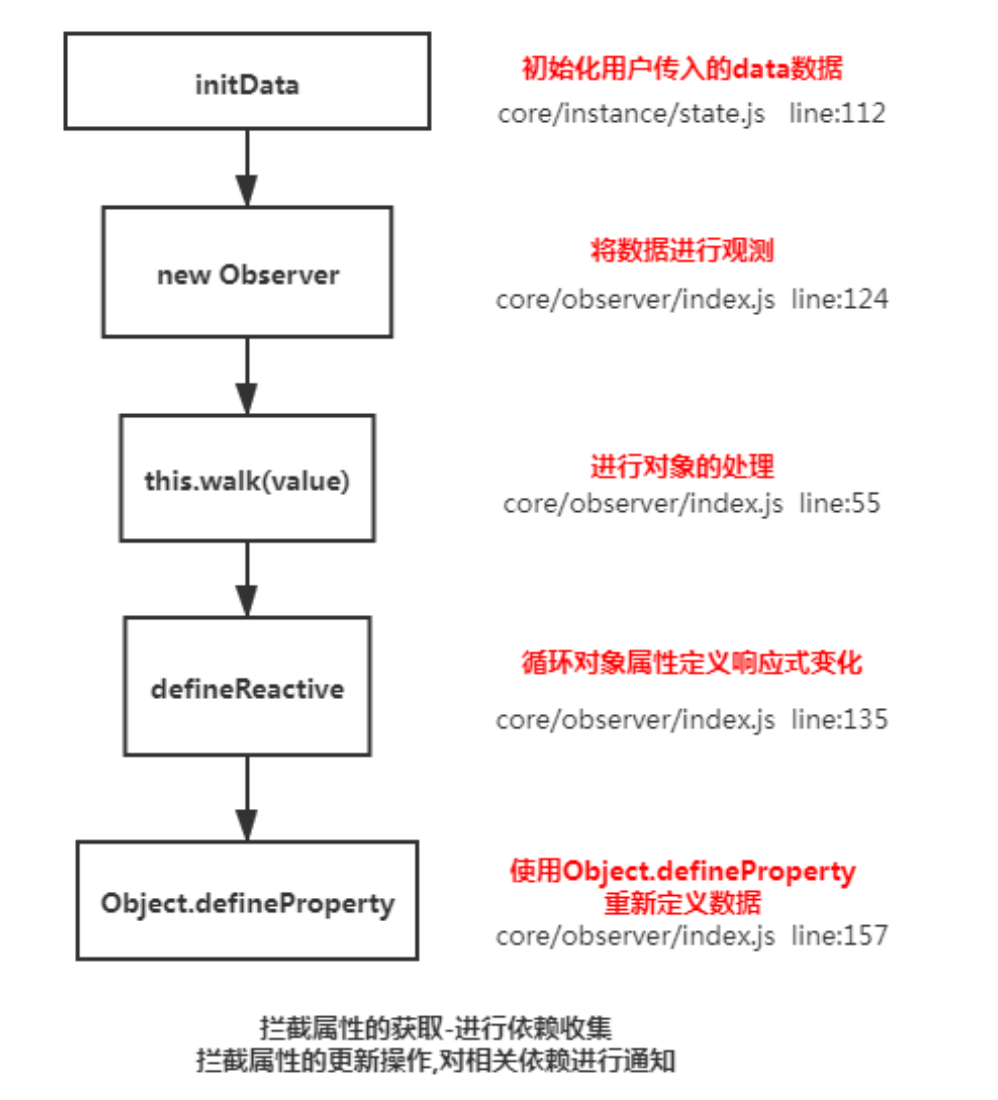

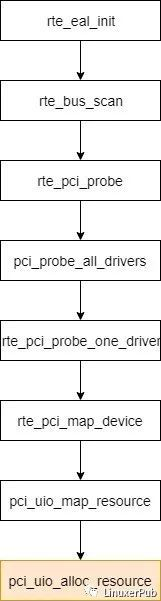

When a DPDK app starts, it performs EAL initialization.

The ixgbe driver is used as the example in the detailed walk-through below:


RTE_PMD_REGISTER_PCI(net_ixgbe, rte_ixgbe_pmd);

#define RTE_PMD_EXPORT_NAME(name, idx) \

static const char RTE_PMD_EXPORT_NAME_ARRAY(this_pmd_name, idx) \

__attribute__((used)) = RTE_STR(name)

/** Helper for PCI device registration from driver (eth, crypto) instance */

#define RTE_PMD_REGISTER_PCI(nm, pci_drv) \

RTE_INIT(pciinitfn_ ##nm) \

{\

(pci_drv).driver.name = RTE_STR(nm);\

rte_pci_register(&pci_drv); \

} \

RTE_PMD_EXPORT_NAME(nm, __COUNTER__)

/* register a driver */

void

rte_pci_register(struct rte_pci_driver *driver)

{

TAILQ_INSERT_TAIL(&rte_pci_bus.driver_list, driver, next);

driver->bus = &rte_pci_bus;

}

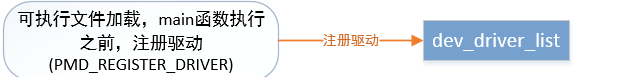

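RTE_PMD_REGISTER_PCI is a macro defined by DPDK. It relies on the "__attribute__((constructor))" mechanism provided by GNU C, so that driver registration completes before main runs.

This gives EAL the device driver type and the driver's initialization function.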
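The constructor-based registration can be reproduced in a few lines of plain C. The sketch below is a simplified stand-in, not DPDK code: `struct demo_driver`, `DEMO_REGISTER` and the other `demo_` names are hypothetical, and only the TAILQ macros from `<sys/queue.h>` and the GNU C constructor attribute are real.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/queue.h>

/* Hypothetical, simplified stand-in for struct rte_pci_driver. */
struct demo_driver {
    TAILQ_ENTRY(demo_driver) next;
    const char *name;
};

TAILQ_HEAD(demo_driver_list, demo_driver);

/* Global driver list, statically initialised so constructors can use it. */
static struct demo_driver_list demo_drivers =
    TAILQ_HEAD_INITIALIZER(demo_drivers);

void demo_register(struct demo_driver *drv)
{
    assert(drv != NULL);
    TAILQ_INSERT_TAIL(&demo_drivers, drv, next);
}

/* Expands to a constructor that names `drv` and registers it. */
#define DEMO_REGISTER(nm, drv)                                    \
    static void __attribute__((constructor)) demo_init_##nm(void) \
    {                                                             \
        (drv).name = #nm;                                         \
        demo_register(&(drv));                                    \
    }

static struct demo_driver demo_ixgbe;
static struct demo_driver demo_igb;

DEMO_REGISTER(net_ixgbe, demo_ixgbe)
DEMO_REGISTER(net_igb, demo_igb)

/* Count the drivers that are registered at the time of the call. */
size_t demo_driver_count(void)
{
    struct demo_driver *d;
    size_t n = 0;

    TAILQ_FOREACH(d, &demo_drivers, next)
        n++;
    return n;
}
```

By the time main starts, both constructors have already run, so `demo_driver_count()` reports two drivers without any explicit registration call in the application.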

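1. With the constructor attribute, pmd_driver_register() runs before main and hangs the pmd_igb_drv driver on the global dev_driver_list list.

2. The PCI devices of the system are scanned by reading /sys/bus/pci/devices/, initialized, and hung on pci_device_list sorted by PCI address.

3. rte_bus_probe ---> rte_eal_pci_probe_one_driver(): a PCI device and a PCI driver are matched by comparing the four PCI_ID fields vendor_id, device_id, subsystem_vendor_id and subsystem_device_id.

Once a PCI device and a PCI driver match, pci_map_device() is called to create the map resource for the device. pci_uio_map_resource() maps the PCI resources into the virtual address space of the process, so that the device can afterwards be driven directly through memory accesses.

4. rte_bus_probe ---> eth_ixgbe_pci_probe() calls rte_eth_dev_create to initialize the PCI device: a NIC device is allocated, space for its data is reserved in the global array rte_eth_dev_data[], and eth_ixgbe_dev_init is then run on it.

The NIC device is initialized at the same time, starting with its operation function table and its receive and transmit functions.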

/* Launch threads, called at application init(). */

int

rte_eal_init(int argc, char **argv)

{

int i, fctret, ret;

pthread_t thread_id;

static rte_atomic32_t run_once = RTE_ATOMIC32_INIT(0);

const char *p;

static char logid[PATH_MAX];

char cpuset[RTE_CPU_AFFINITY_STR_LEN];

char thread_name[RTE_MAX_THREAD_NAME_LEN];

/* checks if the machine is adequate */

if (!rte_cpu_is_supported()) {

rte_eal_init_alert("unsupported cpu type.");

rte_errno = ENOTSUP;

return -1;

}

/* the static local variable run_once makes sure this function only runs once */

if (!rte_atomic32_test_and_set(&run_once)) {

rte_eal_init_alert("already called initialization.");

rte_errno = EALREADY;

return -1;

}

//dpdk: EAL init args: -c 3 -n 4 --file-prefix vpp --master-lcore 0

p = strrchr(argv[0], '/');

strlcpy(logid, p ? p + 1 : argv[0], sizeof(logid));

thread_id = pthread_self(); /* thread ID of the main thread */

/* initialize struct internal_config */

eal_reset_internal_config(&internal_config);

/* set log level as early as possible */

eal_log_level_parse(argc, argv);

/* fill in the global struct lcore_config */

if (rte_eal_cpu_init() < 0) {

rte_eal_init_alert("Cannot detect lcores.");

rte_errno = ENOTSUP;

return -1;

}

fctret = eal_parse_args(argc, argv);

if (fctret < 0) {

rte_eal_init_alert("Invalid 'command line' arguments.");

rte_errno = EINVAL;

rte_atomic32_clear(&run_once);

return -1;

}

if (eal_plugins_init() < 0) { /* the EAL "-d" option names the shared libraries to load */

rte_eal_init_alert("Cannot init plugins\n");

rte_errno = EINVAL;

rte_atomic32_clear(&run_once);

return -1;

}

if (eal_option_device_parse()) {

rte_errno = ENODEV;

rte_atomic32_clear(&run_once);

return -1;

}

rte_config_init();

if (rte_eal_intr_init() < 0) {

rte_eal_init_alert("Cannot init interrupt-handling thread\n");

return -1;

}

/* Put mp channel init before bus scan so that we can init the vdev

* bus through mp channel in the secondary process before the bus scan.

*/

if (rte_mp_channel_init() < 0) {

rte_eal_init_alert("failed to init mp channel\n");

if (rte_eal_process_type() == RTE_PROC_PRIMARY) {

rte_errno = EFAULT;

return -1;

}

}

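if (rte_bus_scan()) { /* scan the buses for device information */

/* for every PCI address under "/sys/bus/pci/devices", read the PCI address, PCI id, number of VFs when SR-IOV is enabled, NUMA affinity, device address space, driver type, etc. */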

rte_eal_init_alert("Cannot scan the buses for devices\n");

rte_errno = ENODEV;

rte_atomic32_clear(&run_once);

return -1;

}

/* autodetect the iova mapping mode (default is iova_pa) */

rte_eal_get_configuration()->iova_mode = rte_bus_get_iommu_class();

/* Workaround for KNI which requires physical address to work */

if (rte_eal_get_configuration()->iova_mode == RTE_IOVA_VA &&

rte_eal_check_module("rte_kni") == 1) {

rte_eal_get_configuration()->iova_mode = RTE_IOVA_PA;

RTE_LOG(WARNING, EAL,

"Some devices want IOVA as VA but PA will be used because.. "

"KNI module inserted\n");

}

if (internal_config.no_hugetlbfs == 0) {

/* rte_config isn't initialized yet */

ret = internal_config.process_type == RTE_PROC_PRIMARY ?

eal_hugepage_info_init() :

eal_hugepage_info_read();

if (ret < 0) {

rte_eal_init_alert("Cannot get hugepage information.");

rte_errno = EACCES;

rte_atomic32_clear(&run_once);

return -1;

}

}

if (internal_config.memory == 0 && internal_config.force_sockets == 0) {

if (internal_config.no_hugetlbfs)

internal_config.memory = MEMSIZE_IF_NO_HUGE_PAGE;

}

if (internal_config.vmware_tsc_map == 1) {

#ifdef RTE_LIBRTE_EAL_VMWARE_TSC_MAP_SUPPORT

rte_cycles_vmware_tsc_map = 1;

RTE_LOG (DEBUG, EAL, "Using VMWARE TSC MAP, "

"you must have monitor_control.pseudo_perfctr = TRUE\n");

#else

RTE_LOG (WARNING, EAL, "Ignoring --vmware-tsc-map because "

"RTE_LIBRTE_EAL_VMWARE_TSC_MAP_SUPPORT is not set\n");

#endif

}

rte_srand(rte_rdtsc());

if (rte_eal_log_init(logid, internal_config.syslog_facility) < 0) {

rte_eal_init_alert("Cannot init logging.");

rte_errno = ENOMEM;

rte_atomic32_clear(&run_once);

return -1;

}

#ifdef VFIO_PRESENT

if (rte_eal_vfio_setup() < 0) {

rte_eal_init_alert("Cannot init VFIO\n");

rte_errno = EAGAIN;

rte_atomic32_clear(&run_once);

return -1;

}

#endif

/* in secondary processes, memory init may allocate additional fbarrays

* not present in primary processes, so to avoid any potential issues,

* initialize memzones first.

*/

if (rte_eal_memzone_init() < 0) {

rte_eal_init_alert("Cannot init memzone\n");

rte_errno = ENODEV;

return -1;

}

if (rte_eal_memory_init() < 0) {

rte_eal_init_alert("Cannot init memory\n");

rte_errno = ENOMEM;

return -1;

}

/* the directories are locked during eal_hugepage_info_init */

eal_hugedirs_unlock();

if (rte_eal_malloc_heap_init() < 0) {

rte_eal_init_alert("Cannot init malloc heap\n");

rte_errno = ENODEV;

return -1;

}

if (rte_eal_tailqs_init() < 0) {

rte_eal_init_alert("Cannot init tail queues for objects\n");

rte_errno = EFAULT;

return -1;

}

if (rte_eal_alarm_init() < 0) {

rte_eal_init_alert("Cannot init interrupt-handling thread\n");

/* rte_eal_alarm_init sets rte_errno on failure. */

return -1;

}

if (rte_eal_timer_init() < 0) {

rte_eal_init_alert("Cannot init HPET or TSC timers\n");

rte_errno = ENOTSUP;

return -1;

}

eal_check_mem_on_local_socket();

eal_thread_init_master(rte_config.master_lcore);

ret = eal_thread_dump_affinity(cpuset, sizeof(cpuset));

RTE_LOG(DEBUG, EAL, "Master lcore %u is ready (tid=%x;cpuset=[%s%s])\n",

rte_config.master_lcore, (int)thread_id, cpuset,

ret == 0 ? "" : "...");

RTE_LCORE_FOREACH_SLAVE(i) {

/*

* create communication pipes between master thread

* and children

*/

if (pipe(lcore_config[i].pipe_master2slave) < 0)

rte_panic("Cannot create pipe\n");

if (pipe(lcore_config[i].pipe_slave2master) < 0)

rte_panic("Cannot create pipe\n");

lcore_config[i].state = WAIT;

/* create a thread for each lcore */

ret = pthread_create(&lcore_config[i].thread_id, NULL,

eal_thread_loop, NULL);

if (ret != 0)

rte_panic("Cannot create thread\n");

/* Set thread_name for aid in debugging. */

snprintf(thread_name, sizeof(thread_name),

"lcore-slave-%d", i);

ret = rte_thread_setname(lcore_config[i].thread_id,

thread_name);

if (ret != 0)

RTE_LOG(DEBUG, EAL,

"Cannot set name for lcore thread\n");

}

/*

* Launch a dummy function on all slave lcores, so that master lcore

* knows they are all ready when this function returns.

*/

rte_eal_mp_remote_launch(sync_func, NULL, SKIP_MASTER);

rte_eal_mp_wait_lcore();

/* initialize services so vdevs register service during bus_probe. */

ret = rte_service_init();

if (ret) {

rte_eal_init_alert("rte_service_init() failed\n");

rte_errno = ENOEXEC;

return -1;

}

/* Probe all the buses and devices/drivers on them */

/* Every driver has registered itself through:

* void rte_pci_register(struct rte_pci_driver *driver)

* { TAILQ_INSERT_TAIL(&rte_pci_bus.driver_list, driver, next);

* driver->bus = &rte_pci_bus; }

*/

/* Walk the pci_device_list and pci_driver_list lists, bind pci_device to pci_driver according to PCI_ID,

* and call the probe function of pci_driver to initialize the PCI device. */

if (rte_bus_probe()) { /* for PCI: rte_pci_probe ---> pci_probe_all_drivers */

/* --TAILQ_FOREACH(p, &(rte_pci_bus.driver_list), next) ----> rte_pci_probe_one_driver */

/* ----rte_pci_map_device && dr->probe(dr, dev); */

/* once a PCI device and a PCI driver match, pci_map_device() creates the map resource for the device */

rte_eal_init_alert("Cannot probe devices\n");

rte_errno = ENOTSUP;

return -1;

}

#ifdef VFIO_PRESENT

/* Register mp action after probe() so that we got enough info */

if (rte_vfio_is_enabled("vfio") && vfio_mp_sync_setup() < 0)

return -1;

#endif

/* initialize default service/lcore mappings and start running. Ignore

* -ENOTSUP, as it indicates no service coremask passed to EAL.

*/

ret = rte_service_start_with_defaults();

if (ret < 0 && ret != -ENOTSUP) {

rte_errno = ENOEXEC;

return -1;

}

rte_eal_mcfg_complete();

return fctret;

}
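The run_once guard at the top of rte_eal_init can be illustrated with C11 atomics. This is a sketch of the pattern only, not the DPDK implementation: `demo_init` and `demo_init_failed` are hypothetical names, and `atomic_flag_test_and_set` returns the previous value of the flag, which is the opposite sense of the `rte_atomic32_test_and_set` call shown above.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

/*
 * Hypothetical init function: returns 0 for the first caller and -1 for
 * every later caller, even when several threads race to call it.
 */
int demo_init(atomic_flag *run_once)
{
    assert(run_once != NULL);

    /* test_and_set returns the previous value: false only for the winner. */
    if (atomic_flag_test_and_set(run_once))
        return -1;  /* already initialised */

    /* ... one-time setup would go here ... */
    return 0;
}

/* Clear the flag after a failed setup so that init can be retried. */
void demo_init_failed(atomic_flag *run_once)
{
    assert(run_once != NULL);
    atomic_flag_clear(run_once);
}
```

The second function mirrors the `rte_atomic32_clear(&run_once)` calls on several of the early error paths above, which allow the application to call the init function again after fixing its arguments.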

/*

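Read the files under /sys/bus/pci/devices/0000\:00\:09.0/uio/uio0/maps/map0/ to obtain the map resource of the UIO device, and record it in struct pci_map.

Check whether the PCI device and the UIO device have the same physical address on the memory bus. If they do, mmap a memory region on the /dev/uioID file and record it in pci_map->addr and rte_pci_device->mem_resource[].addr.

Record the resource information of all UIO devices in struct mapped_pci_resource and hang it on the global list uio_res_list.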

*/

/* Map pci device */

int

rte_pci_map_device(struct rte_pci_device *dev)

{

int ret = -1;

/* try mapping the NIC resources using VFIO if it exists */

switch (dev->kdrv) {

case RTE_KDRV_VFIO:

#ifdef VFIO_PRESENT

if (pci_vfio_is_enabled())

ret = pci_vfio_map_resource(dev);

#endif

break;

case RTE_KDRV_IGB_UIO:

case RTE_KDRV_UIO_GENERIC:

if (rte_eal_using_phys_addrs()) {

/* map resources for devices that use uio */

ret = pci_uio_map_resource(dev);

}

break;

default:

RTE_LOG(DEBUG, EAL,

" Not managed by a supported kernel driver, skipped\n");

ret = 1;

break;

}

return ret;

}

/* map the PCI resource of a PCI device in virtual memory */

int

pci_uio_map_resource(struct rte_pci_device *dev)

{

int i, map_idx = 0, ret;

uint64_t phaddr;

struct mapped_pci_resource *uio_res = NULL;

struct mapped_pci_res_list *uio_res_list =

RTE_TAILQ_CAST(rte_uio_tailq.head, mapped_pci_res_list);

dev->intr_handle.fd = -1;

dev->intr_handle.uio_cfg_fd = -1;

/* secondary processes - use already recorded details */

if (rte_eal_process_type() != RTE_PROC_PRIMARY)

return pci_uio_map_secondary(dev);

/* allocate uio resource: open the uio device to be managed */

ret = pci_uio_alloc_resource(dev, &uio_res);

if (ret)

return ret;

/* Map all BARs: DPDK also has to map the BARs of the PCI device into the application */

for (i = 0; i != PCI_MAX_RESOURCE; i++) {

/* skip empty BAR */

phaddr = dev->mem_resource[i].phys_addr;

if (phaddr == 0)

continue;

ret = pci_uio_map_resource_by_index(dev, i,

uio_res, map_idx);

if (ret)

goto error;

map_idx++;

}

uio_res->nb_maps = map_idx;

TAILQ_INSERT_TAIL(uio_res_list, uio_res, next);

return 0;

error:

for (i = 0; i < map_idx; i++) {

pci_unmap_resource(uio_res->maps[i].addr,

(size_t)uio_res->maps[i].size);

rte_free(uio_res->maps[i].path);

}

pci_uio_free_resource(dev, uio_res);

return -1;

}

int

pci_uio_alloc_resource(struct rte_pci_device *dev,

struct mapped_pci_resource **uio_res)

{

char devname[PATH_MAX]; /* contains the /dev/uioX */

struct rte_pci_addr *loc;

loc = &dev->addr;

snprintf(devname, sizeof(devname), "/dev/uio@pci:%u:%u:%u",

dev->addr.bus, dev->addr.devid, dev->addr.function);

if (access(devname, O_RDWR) < 0) {

RTE_LOG(WARNING, EAL, " "PCI_PRI_FMT" not managed by UIO driver, "

"skipping\n", loc->domain, loc->bus, loc->devid, loc->function);

return 1;

}

/* save fd if in primary process;

* this initializes the interrupt handle of the PCI device */

dev->intr_handle.fd = open(devname, O_RDWR);

if (dev->intr_handle.fd < 0) {

RTE_LOG(ERR, EAL, "Cannot open %s: %s\n",

devname, strerror(errno));

goto error;

}

dev->intr_handle.type = RTE_INTR_HANDLE_UIO;

/* allocate the mapping details for secondary processes*/

*uio_res = rte_zmalloc("UIO_RES", sizeof(**uio_res), 0);

if (*uio_res == NULL) {

RTE_LOG(ERR, EAL,

"%s(): cannot store uio mmap details\n", __func__);

goto error;

}

snprintf((*uio_res)->path, sizeof((*uio_res)->path), "%s", devname);

memcpy(&(*uio_res)->pci_addr, &dev->addr, sizeof((*uio_res)->pci_addr));

return 0;

error:

pci_uio_free_resource(dev, *uio_res);

return -1;

}
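Opening the device and mapping one of its regions from user space can be sketched as below. It shows the generic UIO convention, where region N is selected by an mmap offset of N times the page size; that is an assumption about plain UIO usage and not necessarily what pci_uio_map_resource_by_index does internally. The function names are hypothetical.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Open a UIO device node such as /dev/uio0 for reading and writing. */
int uio_open(const char *path)
{
    assert(path != NULL);
    return open(path, O_RDWR);
}

/*
 * Map region `map_idx` of an opened UIO device into this process.
 * UIO selects the region through the mmap offset: region N is requested
 * with offset N * page size. Returns NULL on failure.
 */
void *uio_map_region(int fd, int map_idx, size_t size)
{
    assert(size > 0);

    off_t offset = (off_t)map_idx * (off_t)sysconf(_SC_PAGESIZE);
    void *addr = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED,
                      fd, offset);

    return addr == MAP_FAILED ? NULL : addr;
}

/* Undo uio_map_region(). */
int uio_unmap_region(void *addr, size_t size)
{
    return munmap(addr, size);
}
```

The pointer returned by `uio_map_region` is what makes register access a plain memory access: a driver would cast it to a `volatile` pointer and read or write device registers at fixed offsets.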

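4. rte_bus_probe ---> eth_ixgbe_pci_probe() calls rte_eth_dev_create to initialize the PCI device: a NIC device is allocated, space for its data is reserved in the global array rte_eth_dev_data[], and eth_ixgbe_dev_init is then run on it.

The NIC device is initialized at the same time, starting with its operation function table and its receive and transmit functions.

The interrupt handler is registered: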

rte_intr_callback_register(&(pci_dev->intr_handle),

eth_igb_interrupt_handler, (void *)eth_dev);

int __rte_experimental

rte_eth_dev_create(struct rte_device *device, const char *name,

size_t priv_data_size,

ethdev_bus_specific_init ethdev_bus_specific_init,

void *bus_init_params,

ethdev_init_t ethdev_init, void *init_params)

{

struct rte_eth_dev *ethdev;

int retval;

RTE_FUNC_PTR_OR_ERR_RET(*ethdev_init, -EINVAL);

if (rte_eal_process_type() == RTE_PROC_PRIMARY) {

/* allocate a NIC device in the global array rte_eth_devices[] and reserve space for its data in the global array rte_eth_dev_data[] */

ethdev = rte_eth_dev_allocate(name);

if (!ethdev)

return -ENODEV;

if (priv_data_size) {

ethdev->data->dev_private = rte_zmalloc_socket(

name, priv_data_size, RTE_CACHE_LINE_SIZE,

device->numa_node);

if (!ethdev->data->dev_private) {

RTE_LOG(ERR, EAL, "failed to allocate private data");

retval = -ENOMEM;

goto data_alloc_failed;

}

}

} else {

ethdev = rte_eth_dev_attach_secondary(name);

if (!ethdev) {

RTE_LOG(ERR, EAL, "secondary process attach failed, "

"ethdev doesn't exist");

return -ENODEV;

}

}

ethdev->device = device;

if (ethdev_bus_specific_init) {

retval = ethdev_bus_specific_init(ethdev, bus_init_params);

if (retval) {

RTE_LOG(ERR, EAL,

"ethdev bus specific initialisation failed");

goto probe_failed;

}

}

retval = ethdev_init(ethdev, init_params);

if (retval) {

RTE_LOG(ERR, EAL, "ethdev initialisation failed");

goto probe_failed;

}

rte_eth_dev_probing_finish(ethdev);

return retval;

probe_failed:

/* free ports private data if primary process */

if (rte_eal_process_type() == RTE_PROC_PRIMARY)

rte_free(ethdev->data->dev_private);

data_alloc_failed:

rte_eth_dev_release_port(ethdev);

return retval;

}

/*

* This function is based on code in ixgbe_attach() in base/ixgbe.c.

* It returns 0 on success.

*/

static int

eth_ixgbe_dev_init(struct rte_eth_dev *eth_dev, void *init_params __rte_unused)

{

struct rte_pci_device *pci_dev = RTE_ETH_DEV_TO_PCI(eth_dev);

struct rte_intr_handle *intr_handle = &pci_dev->intr_handle;

struct ixgbe_hw *hw =

IXGBE_DEV_PRIVATE_TO_HW(eth_dev->data->dev_private);

struct ixgbe_vfta *shadow_vfta =

IXGBE_DEV_PRIVATE_TO_VFTA(eth_dev->data->dev_private);

struct ixgbe_hwstrip *hwstrip =

IXGBE_DEV_PRIVATE_TO_HWSTRIP_BITMAP(eth_dev->data->dev_private);

struct ixgbe_dcb_config *dcb_config =

IXGBE_DEV_PRIVATE_TO_DCB_CFG(eth_dev->data->dev_private);

struct ixgbe_filter_info *filter_info =

IXGBE_DEV_PRIVATE_TO_FILTER_INFO(eth_dev->data->dev_private);

struct ixgbe_bw_conf *bw_conf =

IXGBE_DEV_PRIVATE_TO_BW_CONF(eth_dev->data->dev_private);

uint32_t ctrl_ext;

uint16_t csum;

int diag, i;

PMD_INIT_FUNC_TRACE();

/* set the NIC's operation function table and its receive and transmit functions */

eth_dev->dev_ops = &ixgbe_eth_dev_ops;

eth_dev->rx_pkt_burst = &ixgbe_recv_pkts;

eth_dev->tx_pkt_burst = &ixgbe_xmit_pkts;

eth_dev->tx_pkt_prepare = &ixgbe_prep_pkts;

/*

* For secondary processes, we don't initialise any further as primary

* has already done this work. Only check we don't need a different

* RX and TX function.

*/

if (rte_eal_process_type() != RTE_PROC_PRIMARY) {

struct ixgbe_tx_queue *txq;

/* TX queue function in primary, set by last queue initialized

* Tx queue may not initialized by primary process

*/

if (eth_dev->data->tx_queues) {

txq = eth_dev->data->tx_queues[eth_dev->data->nb_tx_queues-1];

ixgbe_set_tx_function(eth_dev, txq);

} else {

/* Use default TX function if we get here */

PMD_INIT_LOG(NOTICE, "No TX queues configured yet. "

"Using default TX function.");

}

ixgbe_set_rx_function(eth_dev);

return 0;

}

rte_eth_copy_pci_info(eth_dev, pci_dev);

/* Vendor and Device ID need to be set before init of shared code */

hw->device_id = pci_dev->id.device_id;

hw->vendor_id = pci_dev->id.vendor_id;

hw->hw_addr = (void *)pci_dev->mem_resource[0].addr;

hw->allow_unsupported_sfp = 1;

/* Initialize the shared code (base driver) */

#ifdef RTE_LIBRTE_IXGBE_BYPASS

diag = ixgbe_bypass_init_shared_code(hw);

#else

diag = ixgbe_init_shared_code(hw);

#endif /* RTE_LIBRTE_IXGBE_BYPASS */

if (diag != IXGBE_SUCCESS) {

PMD_INIT_LOG(ERR, "Shared code init failed: %d", diag);

return -EIO;

}

/* pick up the PCI bus settings for reporting later */

ixgbe_get_bus_info(hw);

/* Unlock any pending hardware semaphore */

ixgbe_swfw_lock_reset(hw);

#ifdef RTE_LIBRTE_SECURITY

/* Initialize security_ctx only for primary process*/

if (ixgbe_ipsec_ctx_create(eth_dev))

return -ENOMEM;

#endif

/* Initialize DCB configuration*/

memset(dcb_config, 0, sizeof(struct ixgbe_dcb_config));

ixgbe_dcb_init(hw, dcb_config);

/* Get Hardware Flow Control setting */

hw->fc.requested_mode = ixgbe_fc_full;

hw->fc.current_mode = ixgbe_fc_full;

hw->fc.pause_time = IXGBE_FC_PAUSE;

for (i = 0; i < IXGBE_DCB_MAX_TRAFFIC_CLASS; i++) {

hw->fc.low_water[i] = IXGBE_FC_LO;

hw->fc.high_water[i] = IXGBE_FC_HI;

}

hw->fc.send_xon = 1;

/* Make sure we have a good EEPROM before we read from it */

diag = ixgbe_validate_eeprom_checksum(hw, &csum);

if (diag != IXGBE_SUCCESS) {

PMD_INIT_LOG(ERR, "The EEPROM checksum is not valid: %d", diag);

return -EIO;

}

#ifdef RTE_LIBRTE_IXGBE_BYPASS

diag = ixgbe_bypass_init_hw(hw);

#else

diag = ixgbe_init_hw(hw);

#endif /* RTE_LIBRTE_IXGBE_BYPASS */

/*

* Devices with copper phys will fail to initialise if ixgbe_init_hw()

* is called too soon after the kernel driver unbinding/binding occurs.

* The failure occurs in ixgbe_identify_phy_generic() for all devices,

* but for non-copper devices, ixgbe_identify_sfp_module_generic() is

* also called. See ixgbe_identify_phy_82599(). The reason for the

* failure is not known, and only occurs when virtualisation features

* are disabled in the bios. A delay of 100ms was found to be enough by

* trial-and-error, and is doubled to be safe.

*/

if (diag && (hw->mac.ops.get_media_type(hw) == ixgbe_media_type_copper)) {

rte_delay_ms(200);

diag = ixgbe_init_hw(hw);

}

if (diag == IXGBE_ERR_SFP_NOT_PRESENT)

diag = IXGBE_SUCCESS;

if (diag == IXGBE_ERR_EEPROM_VERSION) {

PMD_INIT_LOG(ERR, "This device is a pre-production adapter/"

"LOM. Please be aware there may be issues associated "

"with your hardware.");

PMD_INIT_LOG(ERR, "If you are experiencing problems "

"please contact your Intel or hardware representative "

"who provided you with this hardware.");

} else if (diag == IXGBE_ERR_SFP_NOT_SUPPORTED)

PMD_INIT_LOG(ERR, "Unsupported SFP+ Module");

if (diag) {

PMD_INIT_LOG(ERR, "Hardware Initialization Failure: %d", diag);

return -EIO;

}

/* Reset the hw statistics */

ixgbe_dev_stats_reset(eth_dev);

/* disable interrupt */

ixgbe_disable_intr(hw);

/* reset mappings for queue statistics hw counters*/

ixgbe_reset_qstat_mappings(hw);

/* Allocate memory for storing MAC addresses */

eth_dev->data->mac_addrs = rte_zmalloc("ixgbe", ETHER_ADDR_LEN *

hw->mac.num_rar_entries, 0);

if (eth_dev->data->mac_addrs == NULL) {

PMD_INIT_LOG(ERR,

"Failed to allocate %u bytes needed to store "

"MAC addresses",

ETHER_ADDR_LEN * hw->mac.num_rar_entries);

return -ENOMEM;

}

/* Copy the permanent MAC address */

ether_addr_copy((struct ether_addr *) hw->mac.perm_addr,

&eth_dev->data->mac_addrs[0]);

/* Allocate memory for storing hash filter MAC addresses */

eth_dev->data->hash_mac_addrs = rte_zmalloc("ixgbe", ETHER_ADDR_LEN *

IXGBE_VMDQ_NUM_UC_MAC, 0);

if (eth_dev->data->hash_mac_addrs == NULL) {

PMD_INIT_LOG(ERR,

"Failed to allocate %d bytes needed to store MAC addresses",

ETHER_ADDR_LEN * IXGBE_VMDQ_NUM_UC_MAC);

return -ENOMEM;

}

/* initialize the vfta */

memset(shadow_vfta, 0, sizeof(*shadow_vfta));

/* initialize the hw strip bitmap*/

memset(hwstrip, 0, sizeof(*hwstrip));

/* initialize PF if max_vfs not zero */

ixgbe_pf_host_init(eth_dev);

ctrl_ext = IXGBE_READ_REG(hw, IXGBE_CTRL_EXT);

/* let hardware know driver is loaded */

ctrl_ext |= IXGBE_CTRL_EXT_DRV_LOAD;

/* Set PF Reset Done bit so PF/VF Mail Ops can work */

ctrl_ext |= IXGBE_CTRL_EXT_PFRSTD;

IXGBE_WRITE_REG(hw, IXGBE_CTRL_EXT, ctrl_ext);

IXGBE_WRITE_FLUSH(hw);

if (ixgbe_is_sfp(hw) && hw->phy.sfp_type != ixgbe_sfp_type_not_present)

PMD_INIT_LOG(DEBUG, "MAC: %d, PHY: %d, SFP+: %d",

(int) hw->mac.type, (int) hw->phy.type,

(int) hw->phy.sfp_type);

else

PMD_INIT_LOG(DEBUG, "MAC: %d, PHY: %d",

(int) hw->mac.type, (int) hw->phy.type);

PMD_INIT_LOG(DEBUG, "port %d vendorID=0x%x deviceID=0x%x",

eth_dev->data->port_id, pci_dev->id.vendor_id,

pci_dev->id.device_id);

/* register the interrupt handler */

rte_intr_callback_register(intr_handle,

ixgbe_dev_interrupt_handler, eth_dev);

/* enable uio/vfio intr/eventfd mapping */

rte_intr_enable(intr_handle);

/* enable support intr */

ixgbe_enable_intr(eth_dev);

/* initialize filter info */

memset(filter_info, 0,

sizeof(struct ixgbe_filter_info));

/* initialize 5tuple filter list */

TAILQ_INIT(&filter_info->fivetuple_list);

/* initialize flow director filter list & hash */

ixgbe_fdir_filter_init(eth_dev);

/* initialize l2 tunnel filter list & hash */

ixgbe_l2_tn_filter_init(eth_dev);

/* initialize flow filter lists */

ixgbe_filterlist_init();

/* initialize bandwidth configuration info */

memset(bw_conf, 0, sizeof(struct ixgbe_bw_conf));

/* initialize Traffic Manager configuration */

ixgbe_tm_conf_init(eth_dev);

return 0;

}
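The effect of installing rx_pkt_burst during init is that the application can afterwards poll the device through a function pointer without entering the kernel. The sketch below imitates that with a fake device; every `demo_` name is hypothetical and the "hardware" is just a counter, so it shows the dispatch pattern only, not the ixgbe receive path.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

struct demo_dev;

/* Burst receive callback: store up to `n` packets, return how many. */
typedef uint16_t (*demo_rx_burst_t)(struct demo_dev *dev,
                                    uint32_t *pkts, uint16_t n);

/* Hypothetical, simplified stand-in for struct rte_eth_dev. */
struct demo_dev {
    demo_rx_burst_t rx_pkt_burst; /* installed by the driver's init */
    uint32_t pending;             /* packets waiting in the fake hardware */
    uint32_t next_seq;            /* sequence number of the next packet */
};

/* Fake driver receive function: never blocks, returns what is ready. */
uint16_t demo_recv_pkts(struct demo_dev *dev, uint32_t *pkts, uint16_t n)
{
    uint16_t got = 0;

    while (got < n && dev->pending > 0) {
        pkts[got++] = dev->next_seq++;
        dev->pending--;
    }
    return got;
}

/* Driver init: install the receive function and reset the fake hardware. */
void demo_dev_init(struct demo_dev *dev, uint32_t pending)
{
    assert(dev != NULL);
    dev->rx_pkt_burst = demo_recv_pkts;
    dev->pending = pending;
    dev->next_seq = 0;
}

/* Poll the device `rounds` times; return the total packets received. */
uint32_t demo_poll(struct demo_dev *dev, unsigned rounds)
{
    uint32_t pkts[4];
    uint32_t total = 0;

    assert(dev != NULL && dev->rx_pkt_burst != NULL);
    for (unsigned i = 0; i < rounds; i++)
        total += dev->rx_pkt_burst(dev, pkts, 4);
    return total;
}
```

A poll that finds nothing simply returns 0 and the loop tries again, which is the trade-off of poll mode: no interrupt latency on the data path, at the cost of a core that stays busy.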

Original article: https://www.cnblogs.com/codestack/p/15674071.html