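Preface: The last time I read a paper by the great Kaiming He was two years ago, when I was completely blown away by the design of ResNet. Two years on, the field has become so competitive that I have been left far behind.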

Momentum Contrast for Unsupervised Visual Representation Learning

Abstract

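We propose MoCo, a method for unsupervised representation learning. Viewing contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This makes it possible to build a large and consistent dictionary on the fly, which facilitates contrastive unsupervised learning. Under the common linear protocol (a linear classification head) on ImageNet classification, MoCo achieves competitive results, and the features it learns transfer well to downstream tasks: in 7 detection/segmentation tasks, MoCo outperforms its supervised pre-training counterpart, sometimes by large margins. This suggests that the gap between unsupervised and supervised representation learning in vision is largely closing.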

We present Momentum Contrast (MoCo) for unsupervised visual representation learning. From a perspective on contrastive learning as dictionary look-up, we build a dynamic dictionary with a queue and a moving-averaged encoder. This enables building a large and consistent dictionary on-the-fly that facilitates contrastive unsupervised learning. MoCo provides competitive results under the common linear protocol on ImageNet classification. More importantly, the representations learned by MoCo transfer well to downstream tasks. MoCo can outperform its supervised pre-training counterpart in 7 detection/segmentation tasks on PASCAL VOC, COCO, and other datasets, sometimes surpassing it by large margins. This suggests that the gap between unsupervised and supervised representation learning has been largely closed in many vision tasks.

Introduction

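Unsupervised learning has been very successful in NLP, but in computer vision supervised pre-training is still the mainstream, and unsupervised methods lag behind.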

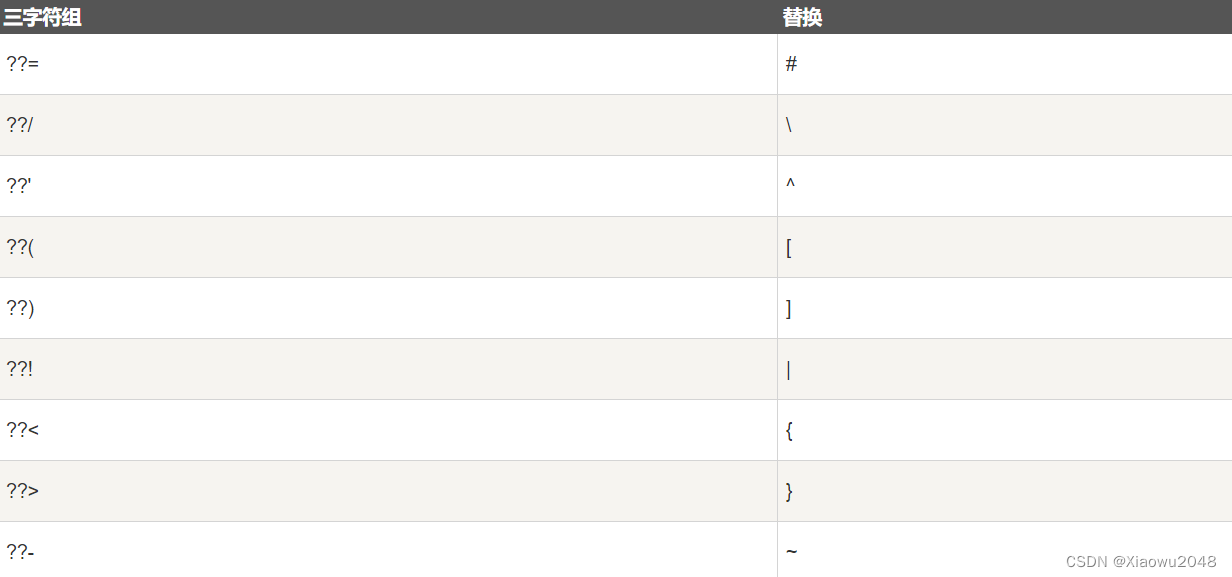

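The main reason is likely the difference in their respective signal spaces. Language tasks have a discrete signal space made up of words (or sub-word units), so one can build a dictionary of tokens on which unsupervised learning is based. Vision is harder in this respect: dictionary building has to start from a raw signal that lies in a continuous, high-dimensional space and, unlike words, is not structured for human communication.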

Unsupervised representation learning is highly successful in natural language processing, e.g., as shown by GPT and BERT [12]. But supervised pre-training is still dominant in computer vision, where unsupervised methods generally lag behind. The reason may stem from differences in their respective signal spaces. Language tasks have discrete signal spaces (words, sub-word units, etc.) for building tokenized dictionaries, on which unsupervised learning can be based. Computer vision, in contrast, further concerns dictionary building [54, 9, 5], as the raw signal is in a continuous, high-dimensional space and is not structured for human communication (e.g., unlike words).

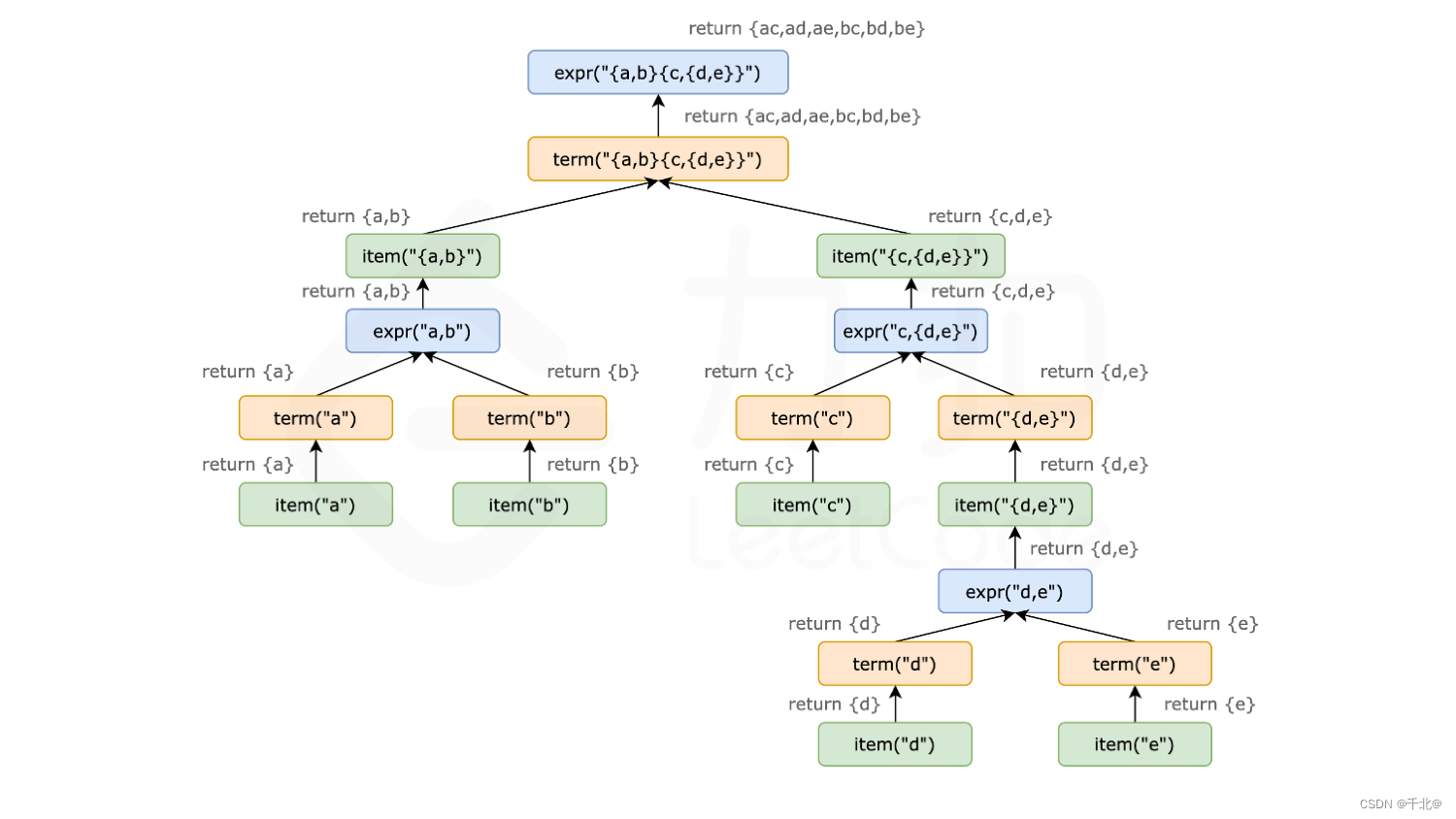

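Several recent studies have applied contrastive-learning approaches to unsupervised representation learning with promising results. Whatever their motivations, these methods can all be viewed as building dynamic dictionaries. The keys in the dictionary are sampled from the data (e.g., images or patches) and represented by an encoder network. Unsupervised learning trains this encoder to perform dictionary look-up: an encoded query should be similar to its matching key and dissimilar to the other keys. This learning can be formulated as minimizing a contrastive loss.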

Several recent studies [61, 46, 36, 66, 35, 56, 2] present promising results on unsupervised visual representation learning using approaches related to the contrastive loss [29]. Though driven by various motivations, these methods can be thought of as building dynamic dictionaries. The “keys” (tokens) in the dictionary are sampled from data (e.g., images or patches) and are represented by an encoder network. Unsupervised learning trains encoders to perform dictionary look-up: an encoded “query” should be similar to its matching key and dissimilar to others. Learning is formulated as minimizing a contrastive loss [29].
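To make the dictionary look-up view concrete, here is a minimal sketch under toy assumptions: hand-written 2-D vectors stand in for encoder outputs, similarity is the dot product of L2-normalized vectors, and the helper names `normalize` and `look_up` are hypothetical. The query "looks up" the key it is most similar to.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to unit L2 norm (assumed here, so the dot product becomes cosine similarity)."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def look_up(query, keys):
    """Return (index of the most similar key, list of similarities to every key)."""
    q = normalize(query)
    sims = [dot(q, normalize(k)) for k in keys]
    best = max(range(len(keys)), key=lambda i: sims[i])
    return best, sims


# Toy "encoded" keys; in a real system these would come from the key encoder.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
best, sims = look_up([0.9, 0.1], keys)
print(best)  # 0: the query is closest to the first key
```

A contrastive loss turns this hard argmax into a soft, trainable objective: it rewards high similarity to the matching key and low similarity to all the others.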

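From this perspective, we hypothesize that the dictionary should be (i) large and (ii) consistent as it evolves during training. Intuitively, a larger dictionary better samples the underlying continuous, high-dimensional visual space, while the keys in the dictionary should be encoded by the same (or a similar) encoder so that their comparisons to the query are consistent.

However, existing methods that use contrastive losses can be limited in one of these two aspects.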

From this perspective, we hypothesize that it is desirable to build dictionaries that are: (i) large and (ii) consistent as they evolve during training. Intuitively, a larger dictionary may better sample the underlying continuous, high dimensional visual space, while the keys in the dictionary should be represented by the same or similar encoder so that their comparisons to the query are consistent. However, existing methods that use contrastive losses can be limited in one of these two aspects (discussed later in context).

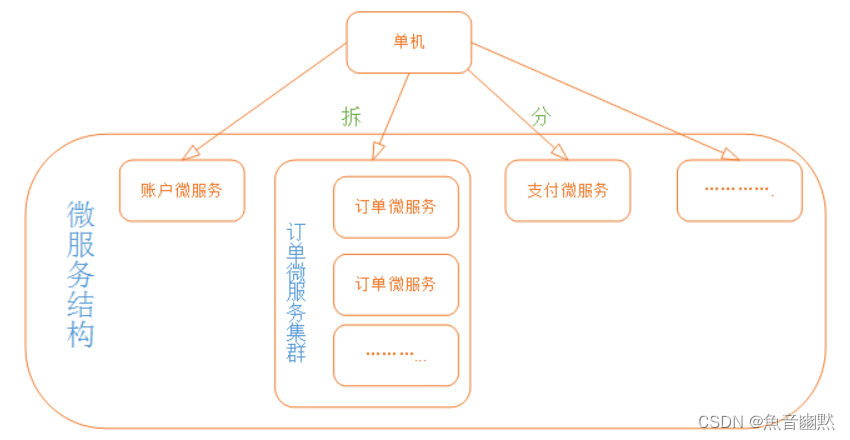

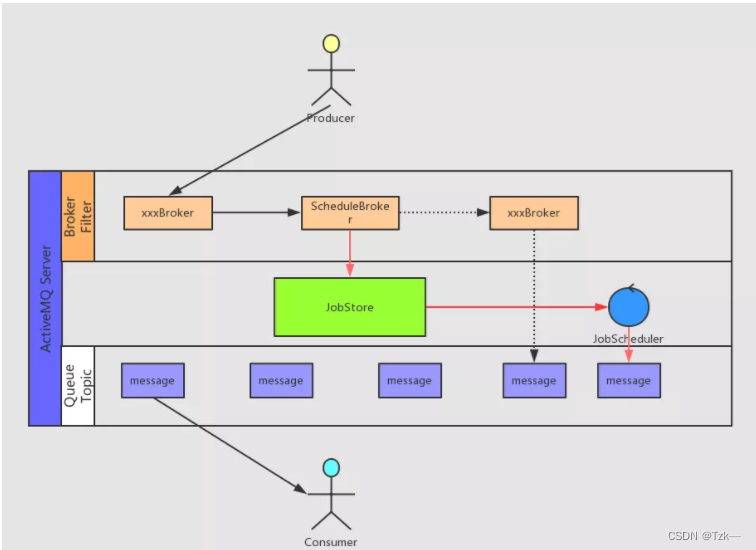

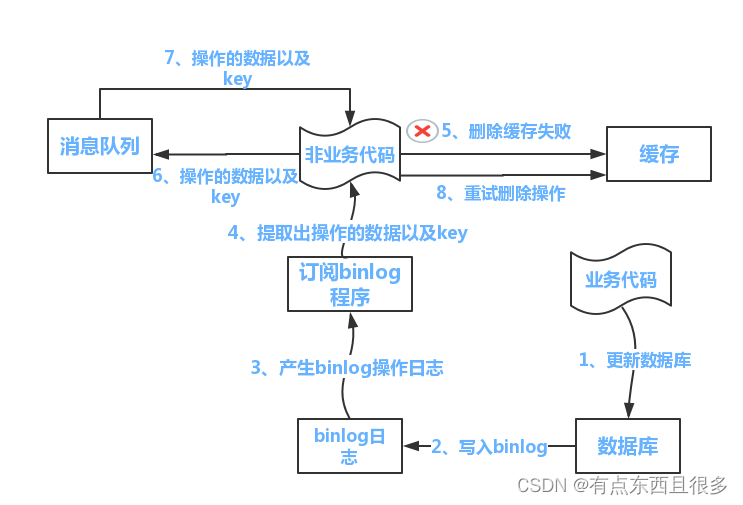

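We propose MoCo (Momentum Contrast), shown in Figure 1. We maintain the dictionary as a queue of data samples: the encoded keys of the newest mini-batch are enqueued, and the oldest keys are dequeued. Using a queue decouples the dictionary size from the mini-batch size, so the dictionary can be made much larger than the batch size the hardware can hold.

Second, since the keys in the dictionary come from the several preceding mini-batches, the key encoder has to evolve slowly. We achieve this with a momentum-based moving average of the query encoder, which keeps the keys in the queue consistent.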

We present Momentum Contrast (MoCo) as a way of building large and consistent dictionaries for unsupervised learning with a contrastive loss (Figure 1). We maintain the dictionary as a queue of data samples: the encoded representations of the current mini-batch are enqueued, and the oldest are dequeued. The queue decouples the dictionary size from the mini-batch size, allowing it to be large. Moreover, as the dictionary keys come from the preceding several mini-batches, a slowly progressing key encoder, implemented as a momentum-based moving average of the query encoder, is proposed to maintain consistency.
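The two ingredients above (a queue as the dictionary, and a slowly moving key encoder) can be sketched in a few lines. This is a minimal sketch, not the authors' code: `KeyQueue` and `momentum_update` are hypothetical names, encoder parameters are modeled as a flat list of floats, and the rule θ_k ← m·θ_k + (1 - m)·θ_q with default m = 0.999 follows the momentum update the paper specifies later.

```python
from collections import deque


class KeyQueue:
    """Hypothetical FIFO dictionary of encoded keys with a fixed maximum size."""

    def __init__(self, max_size):
        # deque(maxlen=...) silently drops items from the left (the oldest) when full.
        self.keys = deque(maxlen=max_size)

    def enqueue(self, batch_keys):
        """Enqueue the keys of the current mini-batch; the oldest keys are dequeued automatically."""
        self.keys.extend(batch_keys)

    def __len__(self):
        return len(self.keys)


def momentum_update(key_params, query_params, m=0.999):
    """Return theta_k <- m * theta_k + (1 - m) * theta_q, element-wise.

    Only the query encoder is trained by back-propagation; the key encoder
    just tracks it slowly, so keys from different mini-batches stay comparable.
    """
    return [m * k + (1.0 - m) * q for k, q in zip(key_params, query_params)]


# The dictionary size (4) is independent of the mini-batch size (3).
queue = KeyQueue(max_size=4)
queue.enqueue(["a1", "a2", "a3"])  # mini-batch 1
queue.enqueue(["b1", "b2", "b3"])  # mini-batch 2
print(list(queue.keys))  # ['a3', 'b1', 'b2', 'b3']

theta_k = momentum_update([1.0, 0.0], [0.0, 1.0], m=0.9)
print(theta_k)  # approximately [0.9, 0.1]
```

In a real training loop the keys would be tensors produced by the key encoder without gradients; strings stand in for them here only to make the FIFO order visible.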

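MoCo is a mechanism for building dynamic dictionaries for contrastive learning, and it can be used with various pretext tasks. In this paper, we follow a simple instance discrimination task: a query matches a key if they are encoded views (e.g., different crops) of the same image. With this pretext task, MoCo shows competitive results under the linear classification protocol on ImageNet.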

MoCo is a mechanism for building dynamic dictionaries for contrastive learning, and can be used with various pretext tasks. In this paper, we follow a simple instance discrimination task [61, 63, 2]: a query matches a key if they are encoded views (e.g., different crops) of the same image. Using this pretext task, MoCo shows competitive results under the common protocol of linear classification in the ImageNet dataset [11].
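As a toy illustration of the instance discrimination pretext task, the sketch below builds a query view and a key view of each image with a random crop, so that `queries[i]` and `keys[i]` form the positive pair and every other key is a negative. Assumptions: images are 1-D lists of numbers, random cropping is the only augmentation, and `random_crop`, `make_views` and `is_positive` are hypothetical helpers.

```python
import random


def random_crop(image, size, rng):
    """Hypothetical augmentation: a random contiguous window of length `size`."""
    start = rng.randrange(len(image) - size + 1)
    return image[start:start + size]


def make_views(images, size, rng):
    """Two independently cropped views per image: one becomes the query, one the key."""
    queries = [random_crop(img, size, rng) for img in images]
    keys = [random_crop(img, size, rng) for img in images]
    return queries, keys


def is_positive(query_index, key_index):
    """Instance discrimination: a query matches a key only if both come from the same image."""
    return query_index == key_index


rng = random.Random(0)
images = [list(range(0, 10)), list(range(100, 110))]
queries, keys = make_views(images, size=4, rng=rng)
for i in range(len(queries)):
    for j in range(len(keys)):
        print(i, j, "positive" if is_positive(i, j) else "negative")
```

No labels are needed: the "class" of each query is simply the identity of the image it was cropped from.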

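A main purpose of unsupervised learning is to pre-train representations that transfer to downstream tasks. We evaluate 7 downstream detection and segmentation tasks; on these datasets MoCo unsupervised pre-training surpasses its ImageNet supervised counterpart, in some cases by nontrivial margins. In the experiments we pre-train on ImageNet and on a one-billion-image Instagram set, showing that MoCo works well in a more real-world, billion-image-scale, relatively uncurated setting. Together, these results suggest that in vision, unsupervised pre-training can serve as an alternative to ImageNet supervised pre-training in many applications.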

A main purpose of unsupervised learning is to pre-train representations (i.e., features) that can be transferred to downstream tasks by fine-tuning. We show that in 7 downstream tasks related to detection or segmentation, MoCo unsupervised pre-training can surpass its ImageNet supervised counterpart, in some cases by nontrivial margins. In these experiments, we explore MoCo pre-trained on ImageNet or on a one-billion Instagram image set, demonstrating that MoCo can work well in a more real-world, billion image scale, and relatively uncurated scenario. These results show that MoCo largely closes the gap between unsupervised and supervised representation learning in many computer vision tasks, and can serve as an alternative to ImageNet supervised pre-training in several applications.

Related Work

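Unsupervised/self-supervised learning generally involves two aspects: pretext tasks and loss functions. "Pretext" means that solving the task is not the real goal; what we actually want is the good data representation learned while solving it.

Loss functions can often be studied independently of pretext tasks. MoCo focuses on the loss function. Below we discuss related work along these two aspects.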

Unsupervised/self-supervised learning methods generally involve two aspects: pretext tasks and loss functions. The term “pretext” implies that the task being solved is not of genuine interest, but is solved only for the true purpose of learning a good data representation. Loss functions can often be investigated independently of pretext tasks. MoCo focuses on the loss function aspect. Next we discuss related studies with respect to these two aspects.

Loss Functions

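A common loss function measures the difference between a model's prediction and a fixed target, e.g., reconstructing the input pixels with an L1 or L2 loss, or classifying the input into predefined categories with a cross-entropy or margin-based loss. Other alternatives, described next, are also possible.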

Loss functions. A common way of defining a loss function is to measure the difference between a model’s prediction and a fixed target, such as reconstructing the input pixels (e.g., auto-encoders) by L1 or L2 losses, or classifying the input into pre-defined categories (e.g., eight positions [13], color bins [64]) by cross-entropy or margin-based losses. Other alternatives, as described next, are also possible.
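For contrast with the contrastive losses discussed next, here is a minimal sketch of the two fixed-target losses mentioned above: pixel reconstruction with an L1 or L2 loss, and classification into predefined categories with cross-entropy. Plain Python lists stand in for images and logits, and averaging over elements is an assumption of this sketch.

```python
import math


def l1_loss(pred, target):
    """Mean absolute error between a reconstruction and the fixed input pixels."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def l2_loss(pred, target):
    """Mean squared error between a reconstruction and the fixed input pixels."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def cross_entropy(logits, label):
    """-log softmax(logits)[label] for a fixed, predefined class label."""
    m = max(logits)  # log-sum-exp trick for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]


pixels = [1.0, 1.0, 1.0]          # the fixed target
reconstruction = [1.0, 2.0, 3.0]  # the model's prediction
print(l1_loss(reconstruction, pixels))  # 1.0
print(l2_loss(reconstruction, pixels))  # 1.6666666666666667
print(cross_entropy([2.0, 0.0, 0.0], label=0))
```

In every case the target (the pixels or the class label) is fixed in advance and does not depend on the network.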

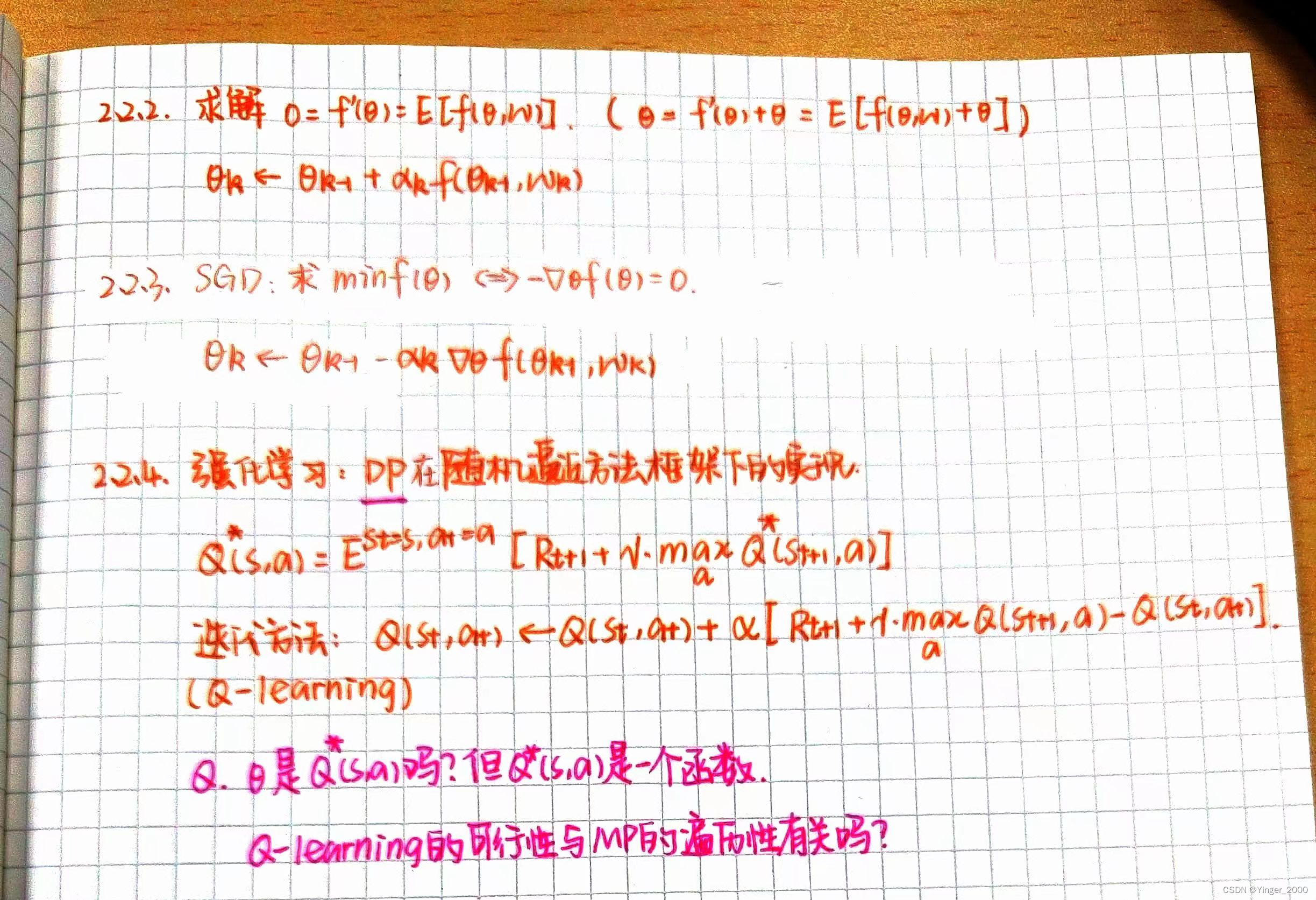

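Contrastive losses measure the similarity of sample pairs in a representation space. Instead of matching an input to a fixed target, a contrastive loss lets the target change on the fly during training, and the target can be defined by the representation a network computes. Contrastive learning is at the core of several recent unsupervised learning works, and the paper elaborates on it in Sec. 3.1.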

Contrastive losses [29] measure the similarities of sample pairs in a representation space. Instead of matching an input to a fixed target, in contrastive loss formulations the target can vary on-the-fly during training and can be defined in terms of the data representation computed by a network [29]. Contrastive learning is at the core of several recent works on unsupervised learning [61, 46, 36, 66, 35, 56, 2], which we elaborate on later in context (Sec. 3.1).
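To see how a contrastive loss differs, here is one classic pairwise form: similar pairs are pulled together, and dissimilar pairs are pushed apart until their distance exceeds a margin. This margin-based form is assumed to be representative of [29]; the toy linear `encode` and the other names are hypothetical. The point to notice is that both sides of the comparison are produced by the network, so the "target" moves as training changes the encoder.

```python
import math


def encode(x, weights):
    """Hypothetical toy encoder: a linear map, weights given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pair_contrastive_loss(z1, z2, similar, margin=1.0):
    """Margin-based loss on a pair of embeddings.

    similar pair:    0.5 * d^2                  (pull together)
    dissimilar pair: 0.5 * max(0, margin - d)^2 (push apart, up to the margin)
    """
    d = euclidean(z1, z2)
    if similar:
        return 0.5 * d ** 2
    return 0.5 * max(0.0, margin - d) ** 2


weights = [[1.0, 0.0], [0.0, 1.0]]  # identity map, for readability
z_a = encode([0.0, 0.0], weights)
z_b = encode([3.0, 4.0], weights)   # distance 5 from z_a
print(pair_contrastive_loss(z_a, z_b, similar=True))   # 12.5
print(pair_contrastive_loss(z_a, z_b, similar=False))  # 0.0 (already beyond the margin)
```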

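Adversarial losses measure the difference between probability distributions, and they are a widely successful technique for unsupervised data generation. Adversarial methods for representation learning have also been explored, and there are connections between generative adversarial networks and noise-contrastive estimation (NCE).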

Adversarial losses [24] measure the difference between probability distributions. It is a widely successful technique for unsupervised data generation. Adversarial methods for representation learning are explored in [15, 16]. There are relations (see [24]) between generative adversarial networks and noise-contrastive estimation (NCE) [28].

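Pretext tasks. Many pretext tasks have been proposed, for example recovering the input under some corruption, e.g., …

Other pretext tasks form pseudo-labels in some way, e.g., …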

Pretext tasks. A wide range of pretext tasks have been proposed. Examples include recovering the input under some corruption, e.g., denoising auto-encoders [58], context autoencoders [48], or cross-channel auto-encoders (colorization) [64, 65]. Some pretext tasks form pseudo-labels by, e.g., transformations of a single ("exemplar") image [17], patch orderings [13, 45], tracking [59] or segmenting objects [47] in videos, or clustering features [3, 4].

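Contrastive learning vs. pretext tasks. Various pretext tasks can be based on some form of contrastive loss. For example, the instance discrimination method is related to the exemplar-based task [17] and NCE. The pretext task in contrastive predictive coding (CPC) is a form of context auto-encoding [48], and in contrastive multiview coding (CMC) [56] it is related to colorization [64].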

Contrastive learning vs. pretext tasks. Various pretext tasks can be based on some form of contrastive loss functions. The instance discrimination method [61] is related to the exemplar-based task [17] and NCE [28]. The pretext task in contrastive predictive coding (CPC) [46] is a form of context auto-encoding [48], and in contrastive multiview coding (CMC) [56] it is related to colorization [64].

Method

3.1 Contrastive Learning as Dictionary Look-up

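Contrastive learning and its recent developments can be viewed as training an encoder for a dictionary look-up task, as described next.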

Contrastive learning [29], and its recent developments, can be thought of as training an encoder for a dictionary look-up task, as described next.

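Consider an encoded query q and a dictionary of encoded keys {k0, k1, k2, …}, exactly one of which (k+) matches q. A contrastive loss is low when q is similar to k+ and dissimilar to all the other (negative) keys; with dot-product similarity, the paper uses the InfoNCE form.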

Consider an encoded query q and a set of encoded samples {k0, k1, k2, …} that are the keys of a dictionary. Assume that there is a single key (denoted as k+) in the dictionary that q matches. A contrastive loss [29] is a function whose value is low when q is similar to its positive key k+ and dissimilar to all other keys (considered negative keys for q). With similarity measured by dot product, a form of a contrastive loss function, called InfoNCE [46], is considered in this paper:
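The InfoNCE loss can be sketched directly. Assuming the form L_q = -log[exp(q·k+/τ) / Σ_{i=0}^{K} exp(q·k_i/τ)], with the sum running over the positive key and K negative keys, it is the log loss of a (K+1)-way softmax classifier that tries to pick k+ out of the dictionary. This sketch uses plain lists for q and the keys; the default temperature τ = 0.07 is the value reported in the MoCo paper, and `info_nce` is a hypothetical name.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(q, k_pos, k_negs, tau=0.07):
    """InfoNCE for one query: -log softmax over [k_pos] + k_negs, evaluated at k_pos.

    The denominator sums over one positive and len(k_negs) negatives, i.e. a
    (K+1)-way classification whose correct class is the positive key.
    """
    logits = [dot(q, k_pos) / tau] + [dot(q, k) / tau for k in k_negs]
    m = max(logits)  # log-sum-exp trick for numerical stability
    log_denominator = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_denominator - logits[0]


q = [1.0, 0.0]
good = info_nce(q, k_pos=[1.0, 0.0], k_negs=[[0.0, 1.0], [-1.0, 0.0]])
bad = info_nce(q, k_pos=[-1.0, 0.0], k_negs=[[0.0, 1.0], [1.0, 0.0]])
print(good < bad)  # True: the loss is low when q matches its positive key
```

In MoCo the negatives k_negs would be the keys stored in the queue, which is why a large queue directly means more negatives per query.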