Abstract

We report on a series of experiments with convolutional neural networks (CNN) trained on top of pre-trained word vectors for sentence-level classification tasks. We show that a simple CNN with little hyperparameter tuning and static vectors achieves excellent results on multiple benchmarks. Learning task-specific vectors through fine-tuning offers further gains in performance. We additionally propose a simple modification to the architecture to allow for the use of both task-specific and static vectors. The CNN models discussed herein improve upon the state of the art on 4 out of 7 tasks, which include sentiment analysis and question classification.

- Task: sentence-level classification.

- Model: a simple CNN with little hyperparameter tuning and static vectors.

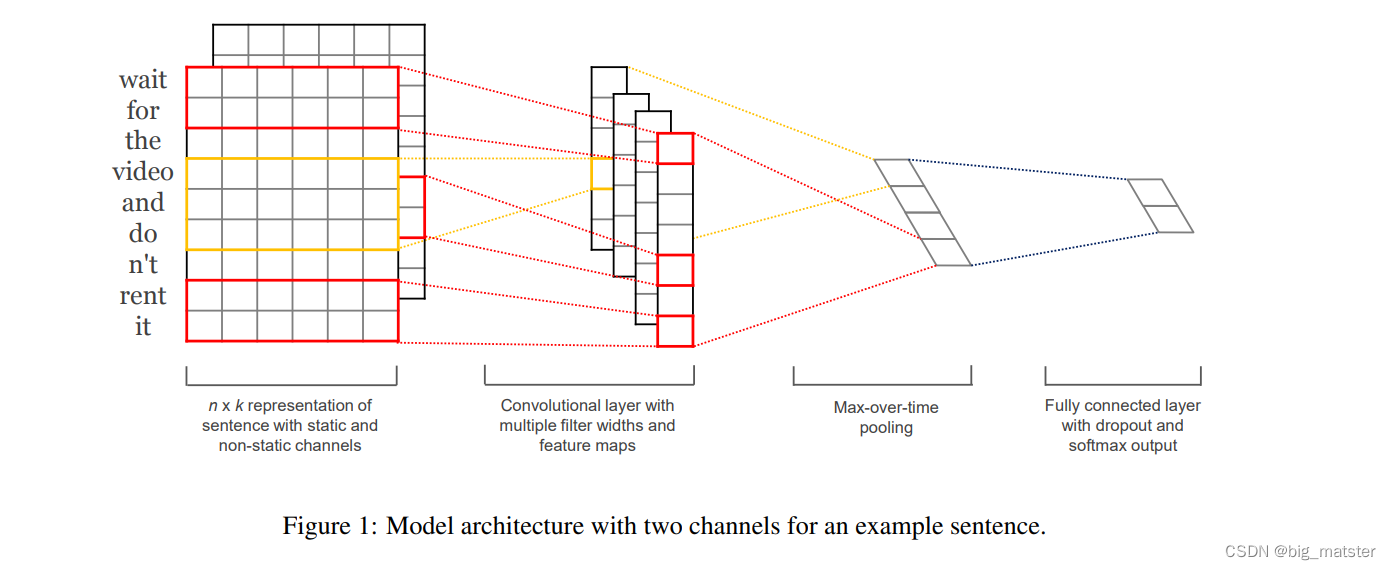

Model architecture

$x_i \in \mathbb{R}^k$: the $k$-dimensional word vector for the $i$-th word in the sentence.

A sentence of length $n$:

$$x_{1:n} = x_1 \otimes x_2 \otimes \cdots \otimes x_n$$

where $\otimes$ is the concatenation operator.

$x_{i:i+j}$: the concatenation of the words $x_i, x_{i+1}, \ldots, x_{i+j}$.

$w \in \mathbb{R}^{hk}$: the convolution filter, applied to a window of $h$ words.

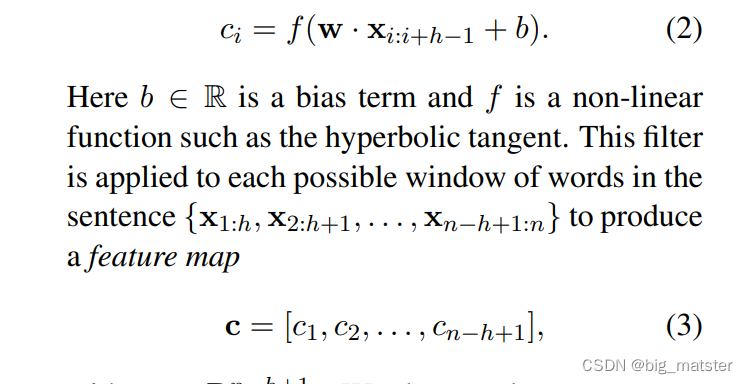

Convolution operation

A max-over-time pooling operation is applied to the feature map $c$:

$$\hat{c} = \max\{c\}$$
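The filter and pooling steps above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the `tanh` nonlinearity, the bias `b`, and the function names `conv_features` and `max_over_time` are choices made for this sketch and are not taken from these notes.

```python
import math

def conv_features(sentence, w, b=0.0):
    """Slide one filter over a sentence and return the feature map c.

    sentence: list of n word vectors, each a list of k floats
    w:        flat filter of length h*k, so h = len(w) // k
    b:        bias term (an assumption of this sketch)
    """
    k = len(sentence[0])
    h = len(w) // k
    features = []
    for i in range(len(sentence) - h + 1):
        # Concatenate h consecutive word vectors into one window of length h*k.
        window = [value for word in sentence[i:i + h] for value in word]
        dot = sum(wi * xi for wi, xi in zip(w, window))
        # tanh is an assumed nonlinearity for illustration.
        features.append(math.tanh(dot + b))
    return features

def max_over_time(features):
    """Keep only the largest feature produced by this filter."""
    return max(features)

# Two toy sentences of different lengths with k = 2, and one filter with h = 2.
short_sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
long_sentence = short_sentence + [[0.5, -0.5], [0.0, 0.0]]
w = [1.0, 0.0, 0.0, 1.0]

short_map = conv_features(short_sentence, w)
long_map = conv_features(long_sentence, w)
short_pooled = max_over_time(short_map)
long_pooled = max_over_time(long_map)
```

The two feature maps have different lengths (2 and 4 here), but pooling reduces each of them to a single number per filter, so the layers that follow see a fixed-size input.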

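Dropout is applied to the penultimate layer to prevent overfitting.

Max-over-time pooling also handles variable sentence lengths, since each filter yields a single feature regardless of how long the sentence is.

- the penultimate layer: the second-to-last layer, i.e. the one that feeds the softmax layer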

The pooled features are passed to a fully connected softmax layer, which outputs a probability distribution over the labels.
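A minimal sketch of the last two steps, dropout on the penultimate layer followed by a fully connected softmax layer, might look like the following. The names `dropout`, `softmax`, and `classify`, the toy weights, and the dropout rate `p=0.5` are all assumptions for illustration; how activations or weights are rescaled at test time is left out of this sketch.

```python
import math
import random

def dropout(z, p, rng):
    """Zero each unit independently with probability p (training time only)."""
    return [0.0 if rng.random() < p else value for value in z]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(z, W, b):
    """Fully connected layer (one weight row per class) followed by softmax."""
    scores = [sum(wj * zj for wj, zj in zip(row, z)) + bj
              for row, bj in zip(W, b)]
    return softmax(scores)

rng = random.Random(0)
z = [0.9, 0.1, 0.4]                       # pooled features, one per filter
W = [[1.0, -1.0, 0.5], [-0.5, 2.0, 0.0]]  # hypothetical weights for 2 classes
b = [0.0, 0.1]

probs_train = classify(dropout(z, 0.5, rng), W, b)  # with dropout
probs_eval = classify(z, W, b)                      # without dropout
```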

Datasets

- MR

- SST-1

- SST-2

- Subj

- TREC

- CR

- MPQA

Optimization algorithm

- Stochastic gradient descent with the Adadelta update rule

- Pre-trained word vectors: the publicly available word2vec vectors
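For reference, the Adadelta update rule can be sketched as below. The decay rate `rho=0.95` and `eps=1e-6` are commonly used defaults and are assumptions here, as is the toy objective $f(x) = x^2$; none of these values come from these notes.

```python
import math

def adadelta_step(params, grads, avg_sq_grad, avg_sq_step, rho=0.95, eps=1e-6):
    """Apply one Adadelta update.

    Returns (new_params, new_avg_sq_grad, new_avg_sq_step).
    """
    new_params, new_avg_sq_grad, new_avg_sq_step = [], [], []
    for x, g, eg2, edx2 in zip(params, grads, avg_sq_grad, avg_sq_step):
        # Running average of squared gradients.
        eg2 = rho * eg2 + (1 - rho) * g * g
        # Step scaled by the ratio of the two running RMS values.
        step = -math.sqrt(edx2 + eps) / math.sqrt(eg2 + eps) * g
        # Running average of squared steps.
        edx2 = rho * edx2 + (1 - rho) * step * step
        new_params.append(x + step)
        new_avg_sq_grad.append(eg2)
        new_avg_sq_step.append(edx2)
    return new_params, new_avg_sq_grad, new_avg_sq_step

# Toy usage: a few steps on f(x) = x^2, whose gradient is 2x.
params, eg2, edx2 = [3.0], [0.0], [0.0]
trajectory = [params[0]]
for _ in range(10):
    grads = [2.0 * p for p in params]
    params, eg2, edx2 = adadelta_step(params, grads, eg2, edx2)
    trajectory.append(params[0])
```

Note that no learning rate appears: the step size is derived from the two running averages, which is the main practical appeal of this update rule.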

Model variants

- CNN-rand

- CNN-static

- CNN-non-static

- CNN-multichannel
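One way to read the four variants is by how each word-vector channel is initialized and whether it is updated during training. The sketch below encodes that reading; the `Channel` class, the `VARIANTS` table, and the helper `trainable_channels` are hypothetical names, and the mapping reflects my understanding of the variant names together with the abstract (static vectors, fine-tuned task-specific vectors, or both), not details spelled out in these notes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Channel:
    pretrained: bool  # initialized from word2vec (True) or randomly (False)
    trainable: bool   # updated during training (True) or kept static (False)

# Hypothetical summary table: one list of embedding channels per variant.
VARIANTS = {
    "CNN-rand": [Channel(pretrained=False, trainable=True)],
    "CNN-static": [Channel(pretrained=True, trainable=False)],
    "CNN-non-static": [Channel(pretrained=True, trainable=True)],
    "CNN-multichannel": [
        Channel(pretrained=True, trainable=False),  # static channel
        Channel(pretrained=True, trainable=True),   # fine-tuned channel
    ],
}

def trainable_channels(name):
    """Count how many embedding channels a variant updates during training."""
    return sum(1 for channel in VARIANTS[name] if channel.trainable)

summary = {name: trainable_channels(name) for name in VARIANTS}
```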
