Original article #115, focused on personal growth and financial freedom, the logic of how the world works, and AI quantitative investing.

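Overfitting is very common when training models: the model performs well on the training set but poorly on the test set. Many measures are used in practice to reduce overfitting and improve the model's ability to generalize. One of the most common is to hold out part of the available data as a test set, i.e. split the data into X_train and X_test. X_train is used to train the model, and X_test is used to validate and tune it.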
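A minimal sketch of that train/test gap on synthetic data (not from the article). A 1-nearest-neighbour classifier memorises its training set, so when the labels are pure noise it scores perfectly in-sample while the held-out 20% stays near coin-flip accuracy. The data, the seed and the hand-written `predict_1nn` helper are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 5))
y = rng.integers(0, 2, size=1000)  # labels are pure noise: nothing to learn

# Hold out the last 20% of rows as the test set
n_train = int(len(X) * 0.8)
X_train, X_test = X[:n_train], X[n_train:]
y_train, y_test = y[:n_train], y[n_train:]


def predict_1nn(X_ref, y_ref, X_query):
    """Hypothetical helper: label of the closest reference row (squared Euclidean)."""
    d = ((X_query[:, None, :] - X_ref[None, :, :]) ** 2).sum(axis=2)
    return y_ref[d.argmin(axis=1)]


train_acc = (predict_1nn(X_train, y_train, X_train) == y_train).mean()
test_acc = (predict_1nn(X_train, y_train, X_test) == y_test).mean()
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

Each training row is its own nearest neighbour, so training accuracy is exactly 1.0; only the held-out rows reveal that the model learned nothing.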

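A machine-learning-driven quant strategy differs from a traditional one in that its trading signals come from a model, so it has to follow the machine-learning workflow. That workflow requires splitting the data into a training set and a test set: the model "learns" on the training set, and the trained strategy is evaluated on the test set.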

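Unlike the random sampling used in typical machine learning, we need to run a "continuous" backtest on the test set, so we cannot shuffle the whole dataset and draw a random subset. Instead, the data is cut by ratio into two consecutive time segments: the earlier one is the training set and the later one is the test set. For this reason we do not use sklearn's train_test_split function, and instead implement our own split function for financial time series.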
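A small illustration of why a shuffled split breaks a backtest, on synthetic daily data (the dates, seeds and 80/20 ratio are assumptions for illustration). After a random split, some training rows are dated later than some test rows, i.e. the model sees the "future"; a chronological split keeps every training date strictly before the test period. For reference, sklearn's train_test_split also accepts `shuffle=False`, which cuts by position without shuffling.

```python
import numpy as np
import pandas as pd

# 500 business days of synthetic returns
dates = pd.bdate_range("2015-01-01", periods=500)
df = pd.DataFrame({"ret": np.random.default_rng(1).normal(0, 0.01, len(dates))}, index=dates)
cut = int(len(df) * 0.8)

# Random split: what a shuffled train/test split does
perm = np.random.default_rng(2).permutation(len(df))
rand_train, rand_test = df.iloc[perm[:cut]], df.iloc[perm[cut:]]

# Chronological split: first 80% of rows train, last 20% test
chron_train, chron_test = df.iloc[:cut], df.iloc[cut:]

print(rand_train.index.max() > rand_test.index.min())    # training rows from the "future"
print(chron_train.index.max() < chron_test.index.min())  # training strictly precedes test
```

Both lines print True; the first is the look-ahead leakage that a continuous backtest cannot tolerate.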

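The most common approach is to split by date. For example, all data before 2017-01-01 becomes the training set used to fit the model, and all data from that day on becomes the test set, i.e. the period used for the backtest.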

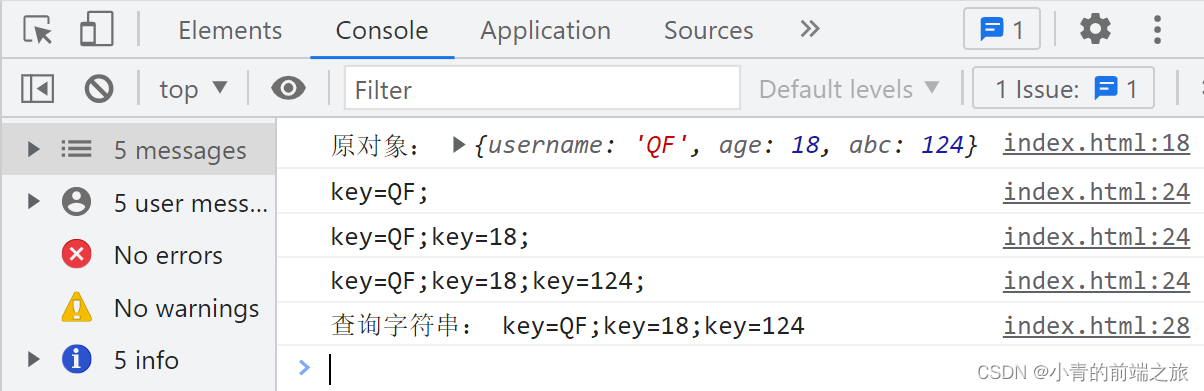

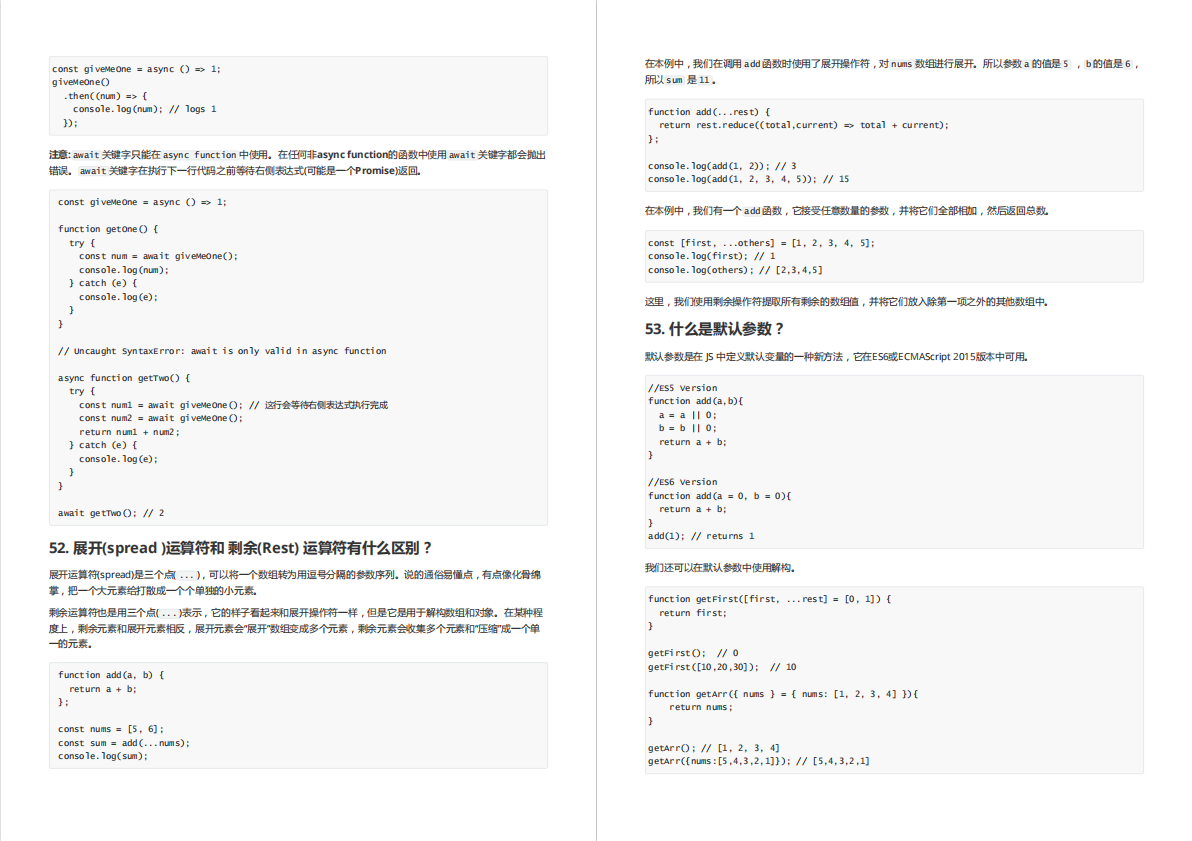

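The core code is below.

x_cols is the list of feature columns and y_col is the label column. If no split date is given, one is computed so that the training set covers the first 80% of the calendar span of the data.

The return values follow the same order as sklearn's train_test_split: X_train, X_test, Y_train, Y_test.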
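The 80% fallback is measured on the calendar span between the first and last date, not on the number of rows. A quick worked check of that arithmetic, using a stdlib-only copy of get_date_by_percent (math.trunc in place of np.trunc; the 2010 to 2020 range is an arbitrary example):

```python
import datetime as dt
import math


def get_date_by_percent(start_date, end_date, percent):
    # Number of calendar days in the span, scaled and truncated to whole days
    days = (end_date - start_date).days
    target_days = math.trunc(days * percent)
    return start_date + dt.timedelta(days=target_days)


start = dt.datetime(2010, 1, 1)
end = dt.datetime(2020, 1, 1)
print((end - start).days)                           # 3652
print(get_date_by_percent(start, end, 0.8).date())  # 2017-12-31
```

3652 × 0.8 = 2921.6, truncated to 2921 days after 2010-01-01, which lands on 2017-12-31. With gaps in the data, or several symbols per date, the share of rows before this date can differ from 80%.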

```python
import datetime as dt

import numpy as np

# Data loader from the author's own backtesting engine
from engine.datafeed.dataloader import Dataloader


class OneStepTimeSeriesSplit:
    """Generates (train_idx, test_idx) pairs for walk-forward validation.

    Assumes the index holds the dates and is named 'date'."""

    def __init__(self, n_splits=3, test_period_length=1, shuffle=False):
        self.n_splits = n_splits
        self.test_period_length = test_period_length
        self.shuffle = shuffle

    @staticmethod
    def chunks(l, n):
        # Yield successive chunks of length n
        for i in range(0, len(l), n):
            yield l[i:i + n]

    def split(self, X, y=None, groups=None):
        # The most recent n_splits * test_period_length unique dates, newest first
        unique_dates = (X.index
                        # .get_level_values('date')  # use this for a MultiIndex
                        .unique()
                        .sort_values(ascending=False)
                        [:self.n_splits * self.test_period_length])
        dates = X.reset_index()[['date']]
        for test_date in self.chunks(unique_dates, self.test_period_length):
            # Train on every row strictly before the test window
            train_idx = dates[dates['date'] < min(test_date)].index
            test_idx = dates[dates['date'].isin(test_date)].index
            if self.shuffle:
                # np.random.shuffle(list(train_idx)) only shuffled a temporary
                # copy; permutation returns a shuffled array we can keep
                train_idx = np.random.permutation(train_idx)
            yield train_idx, test_idx

    def get_n_splits(self, X=None, y=None, groups=None):
        return self.n_splits


def get_date_by_percent(start_date, end_date, percent):
    # Date lying `percent` of the way through the calendar span
    days = (end_date - start_date).days
    target_days = int(np.trunc(days * percent))
    return start_date + dt.timedelta(days=target_days)


def split_df(df, x_cols, y_col, split_date=None, split_ratio=0.8):
    if not split_date:
        # min/max rather than first/last row, in case the index is not sorted
        split_date = get_date_by_percent(df.index.min(), df.index.max(), split_ratio)

    input_data = df[x_cols]
    output_data = df[y_col]

    # Create training and test sets: rows before split_date train,
    # rows on or after split_date form the test (backtest) period
    X_train = input_data[input_data.index < split_date]
    X_test = input_data[input_data.index >= split_date]
    Y_train = output_data[output_data.index < split_date]
    Y_test = output_data[output_data.index >= split_date]
    return X_train, X_test, Y_train, Y_test


class Dataset:
    def __init__(self, symbols, feature_names, feature_fields, split_date,
                 label_name='label', label_field=None):
        self.feature_names = feature_names
        self.feature_fields = feature_fields
        self.split_date = split_date
        self.label_name = label_name
        # Default label: sign of the next bar's return
        self.label_field = 'Sign(Ref($close,-1)/$close -1)' if label_field is None else label_field

        names = self.feature_names + [self.label_name]
        fields = self.feature_fields + [self.label_field]
        loader = Dataloader()
        self.df = loader.load_one_df(symbols, names, fields)

    def get_split_dataset(self):
        return split_df(self.df, x_cols=self.feature_names, y_col=self.label_name,
                        split_date=self.split_date)

    def get_train_data(self):
        X_train, X_test, Y_train, Y_test = self.get_split_dataset()
        return X_train, Y_train

    def get_test_data(self):
        X_train, X_test, Y_train, Y_test = self.get_split_dataset()
        return X_test, Y_test

    def get_X_y_data(self):
        X = self.df[self.feature_names]
        y = self.df[self.label_name]
        return X, y


if __name__ == '__main__':
    codes = ['000300.SH', 'SPX']
    names = []
    fields = []
    fields += ["Corr($close/Ref($close,1), Log($volume/Ref($volume, 1)+1), 30)"]
    names += ["CORR30"]

    dataset = Dataset(codes, names, fields, split_date='2020-01-01')
    X_train, Y_train = dataset.get_train_data()
    print(X_train, Y_train)
```
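The walk-forward logic of OneStepTimeSeriesSplit is easier to see on a toy index. Below is a stripped-down, standalone sketch of the same idea (the function name `walk_forward_splits` and the toy dates are hypothetical): take the most recent n_splits × test_period_length unique dates, cut them into test windows, and train each window on every row dated strictly before it. It returns row positions, so repeated dates (several symbols on the same day) are handled.

```python
import numpy as np
import pandas as pd


def walk_forward_splits(dates, n_splits=3, test_period_length=1):
    """Hypothetical helper: yield (train_pos, test_pos) positional arrays, newest window first."""
    dates = pd.DatetimeIndex(dates)
    recent = dates.unique().sort_values(ascending=False)[: n_splits * test_period_length]
    for i in range(0, len(recent), test_period_length):
        test_dates = recent[i : i + test_period_length]
        train_pos = np.flatnonzero(dates < test_dates.min())  # strictly earlier rows
        test_pos = np.flatnonzero(dates.isin(test_dates))     # rows inside the window
        yield train_pos, test_pos


# Two symbols per day, four trading days
idx = pd.DatetimeIndex(["2020-01-01", "2020-01-01", "2020-01-02", "2020-01-02",
                        "2020-01-03", "2020-01-03", "2020-01-06", "2020-01-06"])
for train_pos, test_pos in walk_forward_splits(idx, n_splits=2, test_period_length=1):
    print(train_pos, test_pos)
# [0 1 2 3 4 5] [6 7]
# [0 1 2 3] [4 5]
```

Each test window only ever trains on the past, which is what makes the evaluation usable as a series of one-step-ahead backtests.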

Model training:

```python
# Dataset is the class defined above. ModelRunner comes from the author's
# engine; its import is not shown in the original.
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC

from engine.ml.boosting_models import gb_clf  # the author's gradient-boosting classifier

codes = ['000300.SH', '399006.SZ']
names = []
fields = []

# Correlation between the daily price ratio and the log volume ratio, 30 and 60 days
fields += ["Corr($close/Ref($close,1), Log($volume/Ref($volume, 1)+1), 30)"]
names += ["CORR30"]
fields += ["Corr($close/Ref($close,1), Log($volume/Ref($volume, 1)+1), 60)"]
names += ["CORR60"]
fields += ["Ref($close, 5)/$close"]
names += ["ROC5"]
fields += ["(2*$close-$high-$low)/$open"]
names += ['KSFT']
fields += ["($close-Min($low, 5))/(Max($high, 5)-Min($low, 5)+1e-12)"]
names += ["RSV5"]
fields += ["($high-$low)/$open"]
names += ['KLEN']
fields += ["$close"]
names += ['close']
# KF(...) is an operator of the author's engine (presumably a Kalman filter)
fields += ['KF(Slope($close,20))']
names += ['KF']
fields += ['$close/Ref($close,20)-1']
names += ['ROC_20']
fields += ['KF($ROC_20)']
names += ['KF_ROC_20']

dataset = Dataset(codes, names, fields, split_date='2020-01-01')

# Train and evaluate each model on the same chronological split
for model in [gb_clf, LogisticRegression(), RandomForestClassifier(), SVC()]:
    m = ModelRunner(model, dataset)
    m.fit()
    m.predict()
```
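ModelRunner belongs to the author's engine and its code is not shown. As a rough, hypothetical stand-in, the sketch below builds two of the listed features and the default label with pandas on synthetic prices, splits on 2020-01-01, and fits two of the sklearn models. It assumes Ref($close, n) means the close n rows earlier, so Ref($close, -1) looks one row ahead; the prices are a random walk, so the accuracies it prints say nothing about real markets.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression

# Synthetic random-walk closes on business days (placeholder for real data)
rng = np.random.default_rng(42)
dates = pd.bdate_range("2016-01-01", "2021-12-31")
close = pd.Series(100 * np.exp(np.cumsum(rng.normal(0, 0.01, len(dates)))), index=dates)

df = pd.DataFrame(index=dates)
df["ROC5"] = close.shift(5) / close                 # Ref($close, 5)/$close
df["ROC_20"] = close / close.shift(20) - 1          # $close/Ref($close,20)-1
df["label"] = np.sign(close.shift(-1) / close - 1)  # Sign(Ref($close,-1)/$close -1)
df = df.dropna()  # drops warm-up rows and the last row, whose label is unknown

# Chronological split on a fixed date, as in split_df
split_date = "2020-01-01"
train, test = df[df.index < split_date], df[df.index >= split_date]
features = ["ROC5", "ROC_20"]

# Hypothetical fit/predict loop standing in for ModelRunner
results = {}
for model in [LogisticRegression(), RandomForestClassifier(n_estimators=100, random_state=0)]:
    model.fit(train[features], train["label"])
    results[type(model).__name__] = model.score(test[features], test["label"])  # accuracy
print(results)
```

Every model is fitted on data before the split date only and scored on the later period, so comparisons between models stay free of look-ahead.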

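On this tabular data, the ensemble models still do considerably better than the traditional machine-learning models. On the same dataset the best performer was the random forest, followed by LightGBM (untuned version).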

More tomorrow.
