Contents

- Deep Learning
- Feedforward Neural Network
- Neuron
- Output Layer
- Optimization
- Regularization
- Topic Classification
- Language Models as Classifiers
- Word Embeddings
- Training a Feed-Forward Neural Network Language Model
- Feed-Forward Neural Network for POS Tagging
- Convolutional Networks
- Convolutional Networks for NLP

Feedforward Neural Networks Basics

Deep Learning

- A branch of machine learning
- Re-branded name for neural networks
- Deep: many layers are chained together in modern deep learning models
- Neural networks: historically inspired by the way computation works in the brain
  - Consist of computation units called neurons

Feedforward Neural Network

- Also called a multilayer perceptron
- Example architecture:
- Each arrow carries a weight, reflecting its importance
- Certain layers have non-linear activation functions

Neuron 神经元

-

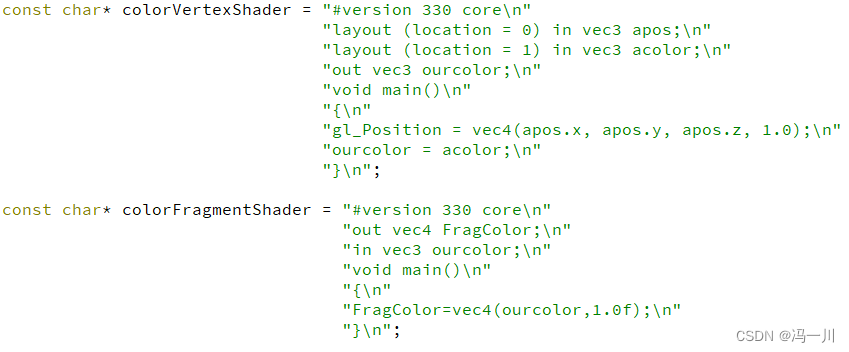

Each neuron is a function: 每个神经元是一个函数

- Given input

x, compute real-valueh: 给定输入x,计算实值h

- Scales input (with weights,

w) and adds offset (bias,b) 通过权重w缩放输入并添加偏移量(偏置,b) - Applies non-linear function, such as logistic sigmoid, hyperbolic sigmoid(tanh), or rectified linear unit 应用非线性函数,如逻辑sigmoid、双曲sigmoid(tanh)或修正线性单元

wandbare parameters of the modelw和b是模型的参数

- Given input

-

- A layer typically has several hidden units, each with its own weights w_i and bias term b_i
- The layer can be expressed using matrix and vector operations, h = f(Wx + b), where:
  - W is a matrix comprising the weight vectors, and b is a vector of all bias terms
  - f is the non-linear function, applied element-wise
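The neuron and hidden-layer computations above can be sketched in plain Python. This is a minimal illustration, assuming one neuron computes h = f(w·x + b) and a layer computes h = f(Wx + b) with f applied element-wise; the helper names (`neuron`, `layer`, `ACTIVATIONS`) and the example numbers are made up for illustration. It also checks that computing each hidden unit separately matches the matrix form.

```python
import math

def sigmoid(z):
    # Logistic sigmoid, written in a numerically stable two-branch form
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    # Rectified linear unit
    return max(0.0, z)

ACTIVATIONS = {"sigmoid": sigmoid, "tanh": math.tanh, "relu": relu}

def neuron(x, w, b, f=math.tanh):
    """One neuron: scale inputs by weights w, add bias b, apply non-linearity f."""
    return f(sum(wj * xj for wj, xj in zip(w, x)) + b)

def layer(x, W, b, f=math.tanh):
    """A layer of hidden units, h = f(Wx + b), with f applied element-wise.
    Row i of W is the weight vector w_i of unit i; b[i] is its bias b_i."""
    z = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [f(zi) for zi in z]

x = [1.0, -2.0, 0.5]
W = [[0.2, 0.1, -0.4],   # weights w_1 of hidden unit 1
     [-0.3, 0.5, 0.8]]   # weights w_2 of hidden unit 2
b = [0.1, -0.2]

for name, f in ACTIVATIONS.items():
    per_unit = [neuron(x, w_i, b_i, f) for w_i, b_i in zip(W, b)]
    matrix_form = layer(x, W, b, f)
    print(name, matrix_form, per_unit)
```

The per-unit and matrix versions compute the same numbers; the matrix form is what lets libraries run a whole layer as one matrix-vector product.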

Output Layer

- For binary classification problems: sigmoid activation function
- For multi-class classification problems: softmax activation function, which ensures the probabilities are greater than 0 and sum to 1
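A small sketch of the two output activations in plain Python (the function names are illustrative). The softmax subtracts the largest score before exponentiating, a standard trick that leaves the result unchanged but avoids overflow; the sigmoid uses a numerically stable two-branch form.

```python
import math

def sigmoid(z):
    """Binary classification: map one score to P(positive class) in (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def softmax(scores):
    """Multi-class classification: map a score vector to probabilities that are
    all > 0 and sum to 1. Subtracting the max first avoids overflow in exp."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

p_binary = sigmoid(2.0)
probs = softmax([2.0, 1.0, 0.1])
print(round(p_binary, 4), [round(p, 4) for p in probs])
```

For two classes with scores [z, 0], the softmax probability of the first class equals sigmoid(z), so the binary case can be seen as a special case of softmax.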

Optimization

- Consider how well the model fits the training data, in terms of the probability it assigns to the correct output
  - Want to maximize the total probability L of the correct outputs over the training data
  - Equivalently, minimize -log L with respect to the parameters
- Trained using gradient descent
  - Tools like TensorFlow, PyTorch, and DyNet use autodiff to compute gradients automatically
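To make the objective concrete, here is a minimal sketch assuming a one-feature logistic model (parameters `w`, `b`) on a made-up four-example dataset. It computes -log L, the negative log of the product of the probabilities assigned to the correct labels, and minimizes it with plain gradient descent. The gradient is derived by hand here; TensorFlow, PyTorch, or DyNet would obtain it via autodiff. The learning rate and number of steps are arbitrary choices.

```python
import math

def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# Hypothetical toy training data: (feature, correct label)
data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]

def neg_log_likelihood(w, b):
    """-log L, where L is the product of the probabilities assigned to the correct outputs."""
    total = 0.0
    for x, y in data:
        p = sigmoid(w * x + b)          # model's P(y = 1 | x)
        total -= math.log(p if y == 1 else 1.0 - p)
    return total

def gradients(w, b):
    """Hand-derived gradient of -log L with respect to (w, b)."""
    dw = db = 0.0
    for x, y in data:
        err = sigmoid(w * x + b) - y    # d(-log p_correct)/dz for the logistic model
        dw += err * x
        db += err
    return dw, db

w, b, lr = 0.0, 0.0, 0.1
initial_loss = neg_log_likelihood(w, b)
for _ in range(100):
    dw, db = gradients(w, b)
    w, b = w - lr * dw, b - lr * db     # gradient descent update
final_loss = neg_log_likelihood(w, b)
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}")
```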

Regularization

- Neural networks have many parameters, so the model overfits easily
  - Low bias, high variance
- Regularization is very important in neural networks
  - L1-norm: sum of absolute values of all parameters
  - L2-norm: sum of squares of all parameters (strictly, the squared L2-norm)
  - Dropout: randomly zero out some neurons of a layer
    - If the dropout rate = 0.1, roughly 10% of the neurons, chosen at random, now have 0 values
    - Can apply dropout to any layer, but in practice, mostly to the hidden layers
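The penalties and dropout can be sketched as follows. Assumptions: each penalty is added to the training loss scaled by a hypothetical strength `lam`, the data loss value is made up, and `dropout` drops each neuron independently with probability `rate`, so about 10% are zeroed on average rather than exactly 10%.

```python
import random

def l1_penalty(params):
    """Sum of absolute values of all parameters."""
    return sum(abs(p) for p in params)

def l2_penalty(params):
    """Sum of squares of all parameters (the squared L2-norm)."""
    return sum(p * p for p in params)

def dropout(activations, rate, rng):
    """Zero out each neuron independently with probability `rate` (training only).
    Many frameworks also rescale surviving values by 1 / (1 - rate) during training
    ("inverted dropout"); that rescaling is omitted here."""
    return [0.0 if rng.random() < rate else a for a in activations]

params = [0.5, -1.5, 2.0]
lam = 0.01          # hypothetical regularization strength
data_loss = 1.2     # hypothetical -log L on the training data
loss_l1 = data_loss + lam * l1_penalty(params)
loss_l2 = data_loss + lam * l2_penalty(params)

rng = random.Random(42)
hidden = [1.0] * 10000
dropped = dropout(hidden, 0.1, rng)
print(loss_l1, loss_l2, dropped.count(0.0) / len(dropped))
```

At prediction time dropout is switched off and all neurons are used.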

Applications in NLP

Topic Classification

- Given a document, classify it into a predefined set of topics, e.g. economy, politics, sports
- Input: bag-of-words
  - Example input:
- Training:
  - Architecture:
  - Hidden layers:
  - Randomly initialize W and b
  - E.g.:
    - Input:
    - Output: probability distribution over the topics
    - Loss: negative log probability of the true label
- Prediction:
  - E.g.:
    - Input:
    - Output:
    - Predicted class: the topic with the highest probability
- Potential improvements:
  - Use a bag of bigrams as input
  - Preprocess the text to lemmatize words and remove stopwords
  - Instead of raw counts, weight words using TF-IDF or binary indicators
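A minimal sketch of the topic classification pipeline, assuming a tiny made-up vocabulary and topic set, one tanh hidden layer, and small random initial weights. It builds a bag-of-words (or bag-of-bigrams) vector, runs a forward pass to a softmax over topics, computes the loss for a true label, and predicts the highest-probability topic. The `tf_idf` helper shows one common TF-IDF variant (raw count times log(N / df)); other weightings exist. Because the weights are untrained, the predicted topic here is arbitrary.

```python
import math
import random

def bag_of_ngrams(tokens, vocab, n=1):
    """Count vector over a fixed vocabulary of n-grams (n=1: bag-of-words, n=2: bag of bigrams)."""
    grams = [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    return [float(grams.count(g)) for g in vocab]

def tf_idf(count_vectors):
    """One TF-IDF variant: count * log(N / df), df = number of documents containing the term."""
    n_docs = len(count_vectors)
    df = [sum(1 for vec in count_vectors if vec[j] > 0) for j in range(len(count_vectors[0]))]
    return [[c * math.log(n_docs / df[j]) if df[j] else 0.0 for j, c in enumerate(vec)]
            for vec in count_vectors]

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def affine(M, v, bias):
    """Matrix-vector product plus bias: Mv + bias."""
    return [sum(m * x for m, x in zip(row, v)) + b for row, b in zip(M, bias)]

def forward(x, W1, b1, W2, b2):
    h = [math.tanh(z) for z in affine(W1, x, b1)]   # hidden layer
    return softmax(affine(W2, h, b2))               # distribution over topics

topics = ["economy", "politics", "sports"]              # hypothetical label set
vocab = ["market", "election", "goal", "vote", "team"]  # hypothetical vocabulary
rng = random.Random(0)
hidden = 4
# Randomly initialize W and b (small uniform weights, zero biases)
W1 = [[rng.uniform(-0.1, 0.1) for _ in vocab] for _ in range(hidden)]
b1 = [0.0] * hidden
W2 = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in topics]
b2 = [0.0] * len(topics)

x = bag_of_ngrams("the team scored a goal and another goal".split(), vocab)
y = forward(x, W1, b1, W2, b2)
loss = -math.log(y[topics.index("sports")])     # true label: sports
predicted = topics[max(range(len(topics)), key=lambda k: y[k])]
print(x, [round(p, 3) for p in y], round(loss, 3), predicted)
```

Training would repeatedly lower `loss` over many labelled documents with gradient descent; a bigram vocabulary or TF-IDF-weighted vectors can be fed to `forward` unchanged.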

Language Models as Classifiers

- Language models can be considered simple classifiers
  - E.g. a trigram model classifies the likely next word in a sequence, given the previous two words "salt" and "and"
- Feedforward neural network language model
  - Use a neural network as a classifier to model the probability of the next word given the previous words
  - Input features: the previous words
  - Output classes: the next word
  - Use word embeddings to represent each word
  - E.g. of word embeddings:
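To illustrate the classifier view of a count-based trigram model, this sketch estimates P(next word | "salt", "and") by maximum likelihood on a made-up corpus and picks the most probable word. Unseen contexts get an empty distribution, one face of the sparsity problem of count-based models.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus
corpus = "add salt and pepper . add salt and pepper . salt and vinegar .".split()

# Count trigrams: (w1, w2) -> Counter of the words that follow
counts = defaultdict(Counter)
for w1, w2, w3 in zip(corpus, corpus[1:], corpus[2:]):
    counts[(w1, w2)][w3] += 1

def next_word_distribution(w1, w2):
    """Maximum-likelihood estimate P(w | w1, w2) = count(w1 w2 w) / count(w1 w2 *)."""
    c = counts.get((w1, w2), Counter())
    total = sum(c.values())
    return {w: n / total for w, n in c.items()}

def classify_next_word(w1, w2):
    """Use the language model as a classifier: return the most probable next word,
    or None if the context was never seen (the sparsity problem)."""
    dist = next_word_distribution(w1, w2)
    return max(dist, key=dist.get) if dist else None

print(next_word_distribution("salt", "and"))
print(classify_next_word("salt", "and"))   # pepper
```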

Word Embeddings

- Map discrete word symbols to continuous vectors in a relatively low-dimensional space
- Word embeddings allow the model to capture similarity between words
- In the feed-forward neural network language model, the first layer is built from the input word embeddings, which are concatenated (as described in the next section)
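The sketch below illustrates, with an assumed vocabulary, dimension, and random values, how an embedding matrix W1 (d x |V|, one column per word) is used: looking up a word's embedding is the same as multiplying W1 by a one-hot vector, and multiplying W1 by a bag-of-words count vector yields the count-weighted sum of the words' embeddings.

```python
import random

vocab = ["a", "cow", "eats", "grass", "dog"]   # hypothetical vocabulary
index = {w: i for i, w in enumerate(vocab)}
d = 3                                          # embedding dimension (assumed)
rng = random.Random(0)

# Embedding matrix W1 of size d x |V|: column j is the embedding of vocab[j]
W1 = [[rng.uniform(-1.0, 1.0) for _ in vocab] for _ in range(d)]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def embed(word):
    """Embedding lookup: read column index[word] of W1."""
    return [row[index[word]] for row in W1]

def one_hot(word):
    v = [0.0] * len(vocab)
    v[index[word]] = 1.0
    return v

# Lookup is equivalent to multiplying W1 by a one-hot vector
lookup = embed("cow")
via_one_hot = matvec(W1, one_hot("cow"))

# W1 times a bag-of-words count vector = count-weighted sum of embeddings
bow = [1.0, 1.0, 1.0, 0.0, 0.0]   # counts for "a cow eats"
via_bow = matvec(W1, bow)
summed = [sum(vals) for vals in zip(embed("a"), embed("cow"), embed("eats"))]
```

In a trained model, W1 is learned so that words used in similar ways end up with nearby vectors; here the values are random and only the mechanics are shown.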

Training a Feed-Forward Neural Network Language Model

- E.g. predict the next word given the context "a cow eats"
- Look up the word embeddings (W1) for a, cow, and eats
- Concatenate them and feed them to the network
- The output layer gives the probability distribution over all words in the vocabulary
- Loss: the negative log probability of the correct next word
- Most parameters are in the word embeddings W1 (size = d × |V|) and the output embeddings W3 (size = |V| × d)
- Example architecture:
- Count-based n-gram models:
  - Cheap to train
  - Problems with sparsity and scaling to larger contexts
  - Don't adequately capture properties of words
- Advantages of the feed-forward neural network language model:
  - Automatically captures word properties, leading to more robust estimates
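A forward-pass sketch of the feed-forward language model training step, assuming one tanh hidden layer W2 over the concatenated context embeddings (size d x 3d, an assumption, since the text names only W1 and W3), a toy vocabulary, and random weights. It also counts parameters for illustrative sizes.

```python
import math
import random

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

vocab = ["a", "cow", "eats", "grass", "hay", "the"]   # hypothetical vocabulary
index = {w: i for i, w in enumerate(vocab)}
V, d, n_context = len(vocab), 4, 3                    # assumed sizes
rng = random.Random(1)

def rand(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

W1 = rand(d, V)              # input word embeddings, d x |V|
W2 = rand(d, n_context * d)  # hidden layer over the concatenated context (assumed)
W3 = rand(V, d)              # output embeddings, |V| x d
b2, b3 = [0.0] * d, [0.0] * V

def next_word_probs(context):
    # 1. Look up the embedding (a column of W1) of each context word
    embs = [[row[index[w]] for row in W1] for w in context]
    # 2. Concatenate them into one vector of length n_context * d
    x = [v for e in embs for v in e]
    # 3. Hidden layer, then softmax over the whole vocabulary
    h = [math.tanh(z + b) for z, b in zip(matvec(W2, x), b2)]
    return softmax([z + b for z, b in zip(matvec(W3, h), b3)])

probs = next_word_probs(["a", "cow", "eats"])
loss = -math.log(probs[index["grass"]])   # negative log prob of the correct next word

def param_counts(V, d, n_context=3):
    """Sizes of W1 (d x |V|), W2 (d x n_context*d, assumed) and W3 (|V| x d)."""
    return {"W1": d * V, "W2": d * n_context * d, "W3": V * d}

print(param_counts(10_000, 300))
```

With the illustrative sizes |V| = 10,000 and d = 300, W1 and W3 each hold 3,000,000 parameters while W2 holds 270,000, consistent with most parameters sitting in the embeddings.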

Feed-Forward Neural Network for POS Tagging

- POS tagging can also be framed as classification, e.g. classifying the likely POS tag for "eats"
- The feed-forward neural network language model architecture can be adapted to the task directly
- Inputs:
  - Recent words
  - Recent tags
- Outputs:
  - Current tag
- Frame as a neural network with:
  - 5 inputs: 3 word embeddings and 2 tag embeddings
  - 1 output: a vector of size |T| (the size of the POS tag set), using softmax
- Train to minimize the negative log probability of the correct tags
- Example architecture:
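A sketch of the tagger under explicit assumptions: the three words are the previous, current, and next word, the two tags are the two previous tags, a start symbol `<s>` pads the context, there is one tanh hidden layer, and all vocabularies, sizes, and weights are made up. The five embeddings are concatenated and mapped to a softmax over the |T| tags.

```python
import math
import random

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

words = ["<s>", "a", "cow", "eats", "grass"]   # hypothetical word vocabulary
tags = ["DT", "NN", "VBZ"]                     # hypothetical tag set T
d_word, d_tag, d_hidden = 4, 2, 5              # assumed sizes
rng = random.Random(0)

def rand(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

E_word = {w: [rng.uniform(-0.5, 0.5) for _ in range(d_word)] for w in words}
# Tag embeddings also cover the padding symbol "<s>" for the first positions
E_tag = {t: [rng.uniform(-0.5, 0.5) for _ in range(d_tag)] for t in tags + ["<s>"]}
W_hidden = rand(d_hidden, 3 * d_word + 2 * d_tag)   # over the 5 concatenated inputs
W_out = rand(len(tags), d_hidden)                   # output vector of size |T|

def tag_distribution(prev_word, word, next_word, tag_2, tag_1):
    """P(tag of `word` | 3 word embeddings, 2 previous-tag embeddings)."""
    x = E_word[prev_word] + E_word[word] + E_word[next_word] + E_tag[tag_2] + E_tag[tag_1]
    h = [math.tanh(z) for z in matvec(W_hidden, x)]
    return softmax(matvec(W_out, h))

# Classify the likely tag for "eats" in "a cow eats grass"
probs = tag_distribution("cow", "eats", "grass", "DT", "NN")
loss = -math.log(probs[tags.index("VBZ")])   # if the correct tag is VBZ
```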

Convolutional Networks

Convolutional Networks

- Commonly used in computer vision
- Identify indicative local predictors
- Combine them to produce a fixed-size representation

Convolutional Networks for NLP

- Sliding window over the sequence
- W = convolution filter (linear transformation + tanh)
- Max-pool to produce a fixed-size representation
- Example architecture:
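A minimal sketch of the sliding-window convolution with max-pooling described in this section, assuming word vectors of dimension d, windows of k consecutive words, and several filters, each a linear transformation plus tanh (sizes and random values are illustrative). It shows that the pooled output has one value per filter regardless of sequence length.

```python
import math
import random

def conv_maxpool(seq, W, b, k):
    """Slide a window of k word vectors over `seq` (needs len(seq) >= k); at each
    position, concatenate the window, apply each filter (linear transformation
    W, bias b, then tanh), and max-pool every filter's outputs over positions."""
    windows = [[v for vec in seq[i:i + k] for v in vec] for i in range(len(seq) - k + 1)]
    feats = [[math.tanh(sum(w * x for w, x in zip(row, win)) + bi) for row, bi in zip(W, b)]
             for win in windows]
    # Max over positions -> one value per filter, whatever the sequence length
    return [max(col) for col in zip(*feats)]

rng = random.Random(0)
d, k, n_filters = 3, 2, 4                      # assumed sizes
W = [[rng.uniform(-1, 1) for _ in range(k * d)] for _ in range(n_filters)]
b = [0.0] * n_filters

short_seq = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(3)]
long_seq = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(10)]
print(len(conv_maxpool(short_seq, W, b, k)), len(conv_maxpool(long_seq, W, b, k)))   # 4 4
```

The fixed-size pooled vector can then be fed to a feedforward output layer, for example a softmax classifier.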