Contents

- Introduction

- Class-wise self-knowledge distillation (CS-KD)

- Class-wise regularization

- Effects of class-wise regularization

- Experiments

- Classification accuracy

- References

Introduction

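- To alleviate overfitting, the authors propose Class-wise self-knowledge distillation (CS-KD). It performs self-distillation using the predicted class probabilities of other samples from the same class, so that the model produces more meaningful and more consistent predictions.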
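CS-KD needs, for each training sample $\mathbf x$, another sample $\mathbf x'$ of the same class. Below is a minimal plain-Python sketch of that pairing step. It assumes a simple per-sample random choice. `sample_class_pairs` is a hypothetical helper, not the sampler used in the official repository.

```python
import random
from collections import defaultdict


def sample_class_pairs(labels, seed=0):
    """Hypothetical helper: for each index i, pick a different index j with the same label.

    Returns a list of (i, j) pairs. If a class has only one sample, that sample is
    paired with itself (a choice made for this sketch only, not taken from the paper).
    """
    rng = random.Random(seed)
    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)

    pairs = []
    for i, y in enumerate(labels):
        # Candidates: other samples of the same class, excluding i itself.
        candidates = [j for j in by_class[y] if j != i]
        j = rng.choice(candidates) if candidates else i
        pairs.append((i, j))
    return pairs


labels = [0, 1, 0, 2, 1, 0]
for i, j in sample_class_pairs(labels):
    print(i, j, labels[i] == labels[j])
```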

Class-wise self-knowledge distillation (CS-KD)

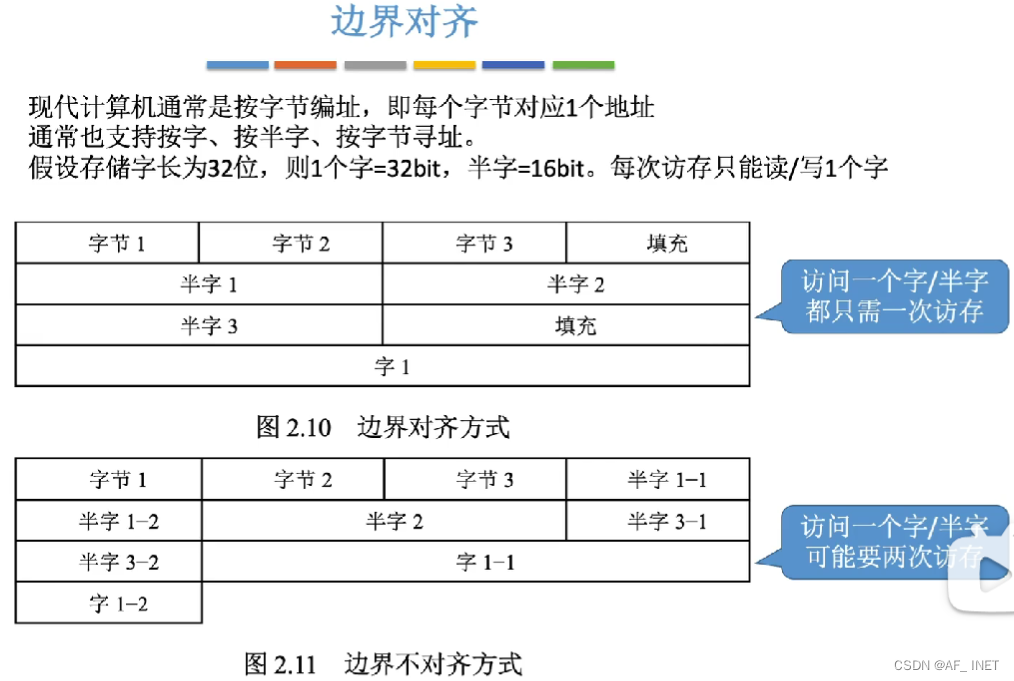

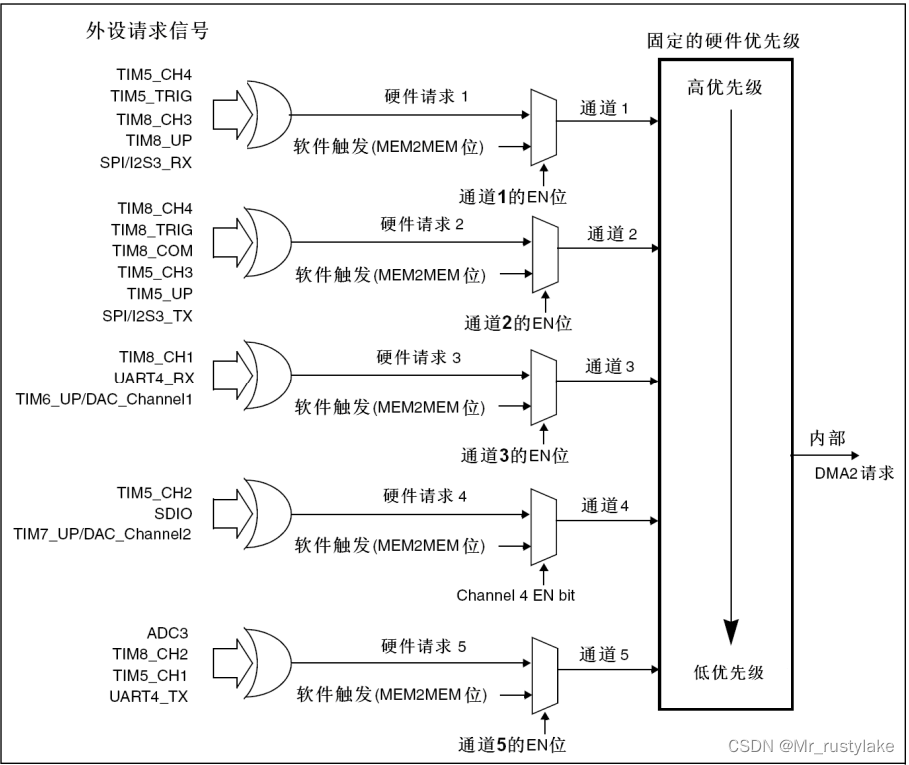

Class-wise regularization

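- Class-wise regularization loss. It forces the predicted class distributions of samples from the same class to be close to one another. This amounts to distilling the model's own dark knowledge (i.e., the knowledge on wrong predictions).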

Here, $\mathbf x, \mathbf x'$ are different samples belonging to the same class, and

$$P(y \mid \mathbf{x} ; \theta, T)=\frac{\exp \left(f_y(\mathbf{x} ; \theta) / T\right)}{\sum_{i=1}^C \exp \left(f_i(\mathbf{x} ; \theta) / T\right)},$$

where $f_i(\mathbf x;\theta)$ is the $i$-th logit, $C$ is the number of classes, and $T$ is the temperature parameter. Note that $\tilde \theta$ is a fixed copy of the parameters $\theta$. Gradients are not back-propagated through $\tilde \theta$ into the model parameters, which avoids model collapse (cf. Miyato et al.).
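A minimal sketch of the class-wise term for a single pair follows. It assumes the term is the KL divergence from the fixed-copy prediction on $\mathbf x'$ to the prediction on $\mathbf x$, $\mathcal L_{\text{cls}}(\mathbf x,\mathbf x';\theta,T)=\mathrm{KL}\big(P(y\mid\mathbf x';\tilde\theta,T)\,\|\,P(y\mid\mathbf x;\theta,T)\big)$, as we read it in Yun et al. (2020). The function names and logits are illustrative. Plain Python has no autodiff, so the stop-gradient on $\tilde\theta$ is only noted in a comment.

```python
import math


def softmax_with_temperature(logits, T):
    """P(y | x; theta, T): softmax over logits / T (max subtracted for stability)."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same C classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def class_wise_loss(logits_x, logits_x_prime, T):
    """Class-wise term: KL(P(y | x'; theta~, T) || P(y | x; theta, T))."""
    # Target from the other same-class sample x', produced by the fixed copy theta~.
    # In an autodiff framework this would be detached (stop-gradient) so that no
    # gradient flows back through theta~; plain Python floats carry no gradients.
    target = softmax_with_temperature(logits_x_prime, T)
    pred = softmax_with_temperature(logits_x, T)
    return kl_divergence(target, pred)


logits_x = [2.0, 0.5, -1.0]        # hypothetical logits for x
logits_x_prime = [1.5, 1.0, -0.5]  # hypothetical logits for x' (same class)
print(class_wise_loss(logits_x, logits_x_prime, T=4.0))
print(class_wise_loss(logits_x, logits_x, T=4.0))  # identical predictions -> 0.0
```

Raising $T$ flattens both distributions. The KL term then also compares the probabilities assigned to the wrong classes, not just the top-1 class.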

- Total training loss.
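As we read it in Yun et al. (2020), the total training loss adds the class-wise term to the usual cross-entropy:

$$\mathcal L_{\text{CS-KD}}(\mathbf x,\mathbf x',y;\theta,T)=\mathcal L_{\text{CE}}(\mathbf x,y;\theta)+\lambda_{\text{cls}}\cdot T^2\cdot \mathcal L_{\text{cls}}(\mathbf x,\mathbf x';\theta,T).$$

Here $\lambda_{\text{cls}}>0$ weights the regularizer, and the $T^2$ factor follows the convention of standard knowledge distillation. The sketch below computes this for a single pair under that assumption. The default values of `T` and `lambda_cls` are illustrative only.

```python
import math


def softmax(logits, T=1.0):
    """Softmax over logits / T, with the max subtracted for numerical stability."""
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cs_kd_loss(logits_x, logits_x_prime, y, T=4.0, lambda_cls=1.0):
    """CE(x, y) + lambda_cls * T**2 * KL(P(.|x'; theta~, T) || P(.|x; theta, T)).

    logits_x_prime stands for the output of the fixed copy theta~ on x'
    (it would be detached in an autodiff framework). The defaults for T and
    lambda_cls are illustrative, not values taken from the paper.
    """
    # Standard cross-entropy on x with the ordinary (T = 1) softmax.
    ce = -math.log(softmax(logits_x)[y])
    # Class-wise regularization term on temperature-softened distributions.
    target = softmax(logits_x_prime, T)
    pred = softmax(logits_x, T)
    kl = sum(p * math.log(p / q) for p, q in zip(target, pred) if p > 0)
    # T**2 keeps the soft term's gradient scale comparable across temperatures.
    return ce + lambda_cls * T ** 2 * kl


logits_x = [2.0, 0.5, -1.0]
logits_x_prime = [1.5, 1.0, -0.5]
print(cs_kd_loss(logits_x, logits_x_prime, y=0))
```

Gradients of the softened KL term shrink roughly as $1/T^2$. Multiplying by $T^2$ therefore keeps its contribution comparable to the cross-entropy when the temperature is tuned.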

Effects of class-wise regularization

- Reducing the intra-class variations.

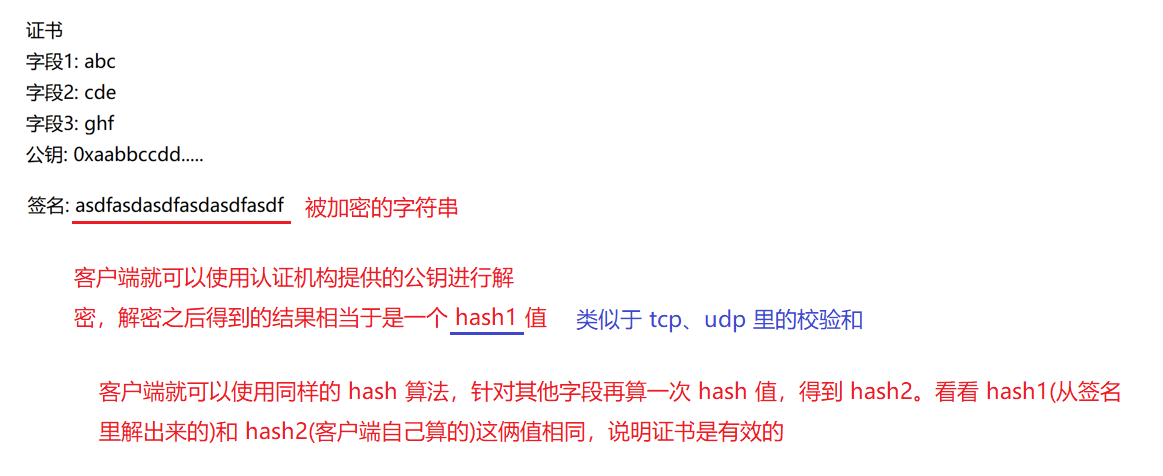

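- Preventing overconfident predictions. CS-KD avoids overconfident predictions by using the predicted class distributions of other samples from the same class as soft labels. These soft labels are more 'realistic' than those generated by ordinary label smoothing.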
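To make the "more realistic soft labels" point concrete, here is a minimal sketch comparing the two kinds of targets. It assumes standard label smoothing, $(1-\epsilon)\,\text{one-hot}(y)+\epsilon/C$, and takes the CS-KD target to be the temperature-softened prediction on another same-class sample. The logits, class meanings, `eps`, and `T` are made up for illustration.

```python
import math


def label_smoothing_target(y, num_classes, eps=0.1):
    """Standard label smoothing: (1 - eps) * one_hot(y) + eps / C."""
    return [(1.0 - eps) * (1.0 if c == y else 0.0) + eps / num_classes
            for c in range(num_classes)]


def class_wise_target(logits_x_prime, T=4.0):
    """CS-KD style soft label: softened prediction on another same-class sample x'."""
    scaled = [z / T for z in logits_x_prime]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 4-class example: true class 0, with class 1 visually similar to it.
ls = label_smoothing_target(y=0, num_classes=4)
cs = class_wise_target([3.0, 2.0, -1.0, -1.5])
print([round(p, 3) for p in ls])
print([round(p, 3) for p in cs])
```

Label smoothing spreads the same $\epsilon/C$ mass over every wrong class. The class-wise target instead puts more mass on the class the model finds similar (class 1 here), which is exactly the dark knowledge the regularizer distills.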

Experiments

Classification accuracy

- Comparison with output regularization methods.

- Comparison with self-distillation methods.

- Evaluation on large-scale datasets.

- Compatibility with other regularization methods.

- Ablation study.

(1) Feature embedding analysis.

(2) Hierarchical image classification.

- Calibration effects.

References

- Yun, Sukmin, et al. "Regularizing class-wise predictions via self-knowledge distillation." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

- Code: https://github.com/alinlab/cs-kd