Introduction:

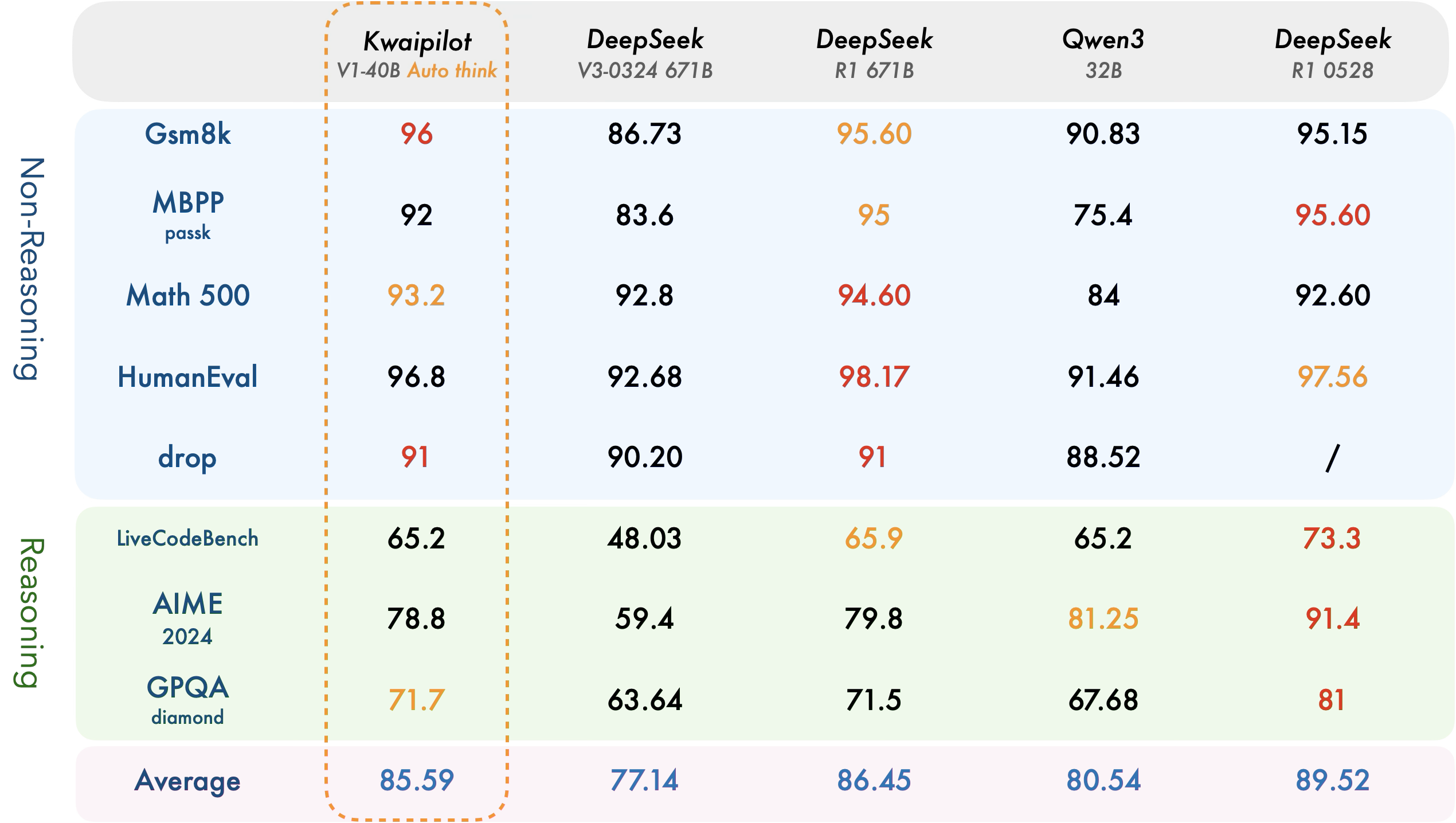

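Amid the rapid progress of artificial intelligence, the Kuaishou Kwaipilot team's release of KwaiCoder-AutoThink-preview marks a milestone: it is the first publicly released AutoThink large language model (LLM). The model combines thinking and non-thinking abilities in a single checkpoint, which improves both its overall performance and its adaptability.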

Product analysis:

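The core innovation of Kwaipilot/KwaiCoder-AutoThink-preview is that it dynamically adjusts its reasoning depth to the difficulty of the input. This capability rests on four key techniques: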

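- Auto Think: the model is trained on diverse pre-think data to predict task difficulty, so it can judge more accurately when deep reasoning is needed.

- Step-SRPO: a token-wise GRPO variant with process-level rewards. It makes reinforcement learning (RL) more stable and improves accuracy in both "think" and "no-think" scenarios.

- Agentic Data: automated chain-of-thought (CoT) cold-start data generation strengthens the reasoning model before RL, which makes overall performance more robust.

- KD + MTP (knowledge distillation plus multi-token prediction): one-teacher → multi-token-prediction distillation substantially cuts pre-training cost, making the model more efficient and more accessible.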
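
The list above describes Step-SRPO only as a token-wise GRPO variant with process-level rewards and gives no formula. As background, the sketch below shows the group-relative advantage normalization that standard GRPO is known for; it is not Step-SRPO itself. The function name, the `eps` parameter, the use of the population standard deviation, and the 0/1 rewards are all illustrative assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled response's reward against its own group.

    Standard GRPO samples several responses per prompt and scores each one
    relative to the group mean, instead of using a learned value function.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; an assumption made for this sketch
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical responses to one prompt: 1.0 if correct, 0.0 otherwise.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Correct responses receive advantages close to +1 and incorrect ones close to -1, so the policy update favors the better half of the group. A process-level variant would presumably assign rewards to intermediate steps rather than to the whole response, but the source does not say how.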

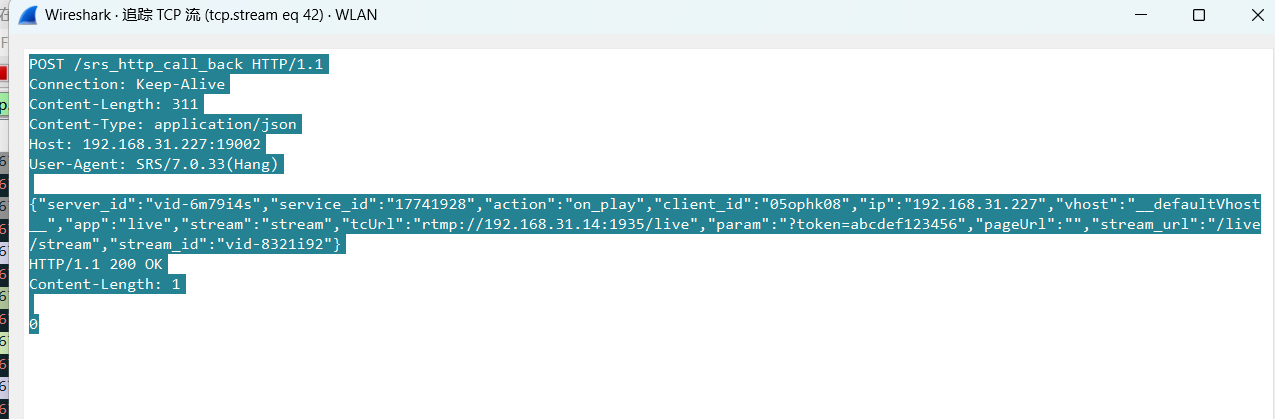

Practical application:

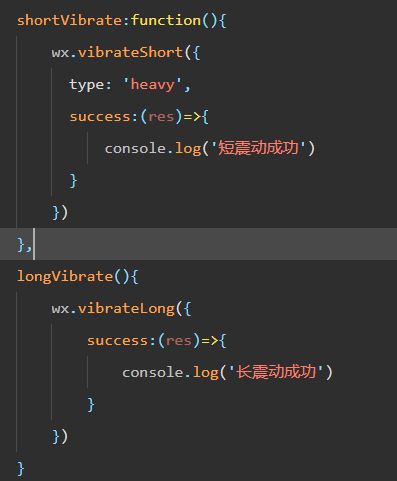

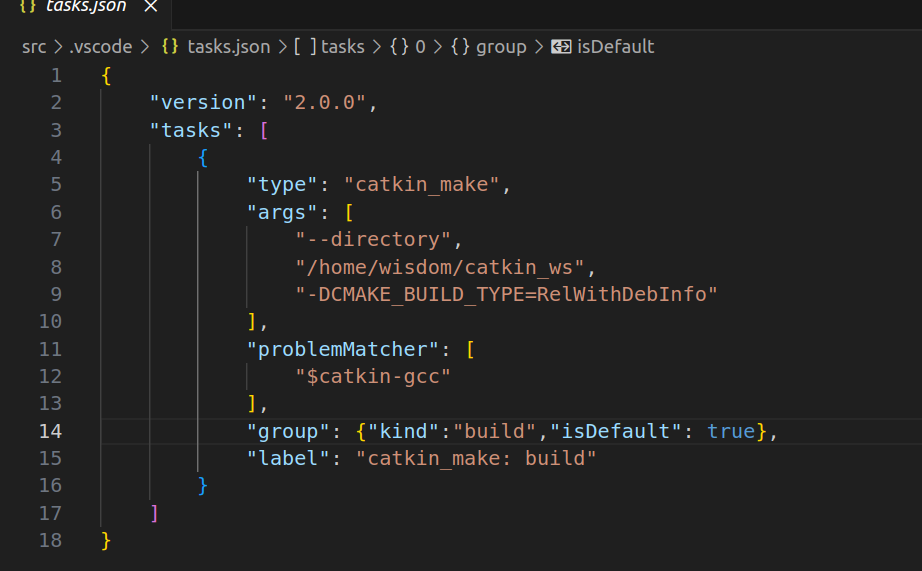

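Using the model is straightforward, as the quick-start snippet shows: import the required modules, load the model, and generate text from a prompt. The sample output shows the model adjusting its reasoning depth. It judges the prompt to be a simple definitional query, selects think-off mode, and gives a concise introduction to large language models (LLMs) without overcomplicating the answer.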

Quick start

from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "Kwaipilot/KwaiCoder-AutoThink-preview"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# prepare the model input
prompt = "Give me a short introduction to large language model."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=32768,
    temperature=0.6,
    top_p=0.9,
)
# keep only the newly generated tokens (drop the prompt tokens)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()
content = tokenizer.decode(output_ids, skip_special_tokens=True).strip("\n")

print("prompt:\n", prompt)
print("content:\n", content)

"""
prompt:
Give me a short introduction to large language model.
content:
<judge>
This is a definitional query seeking a basic explanation, which can be answered with straightforward factual recall or a concise summary. Requires think-off mode.
</judge>

<think off>
Large Language Models (LLMs) are advanced artificial intelligence systems designed to understand and generate human-like text. They are trained on vast amounts of data to learn grammar, facts, reasoning, and context. Key features include:

- **Scale**: Billions (or even trillions) of parameters, enabling complex pattern recognition.
- **Versatility**: Can perform tasks like answering questions, writing code, summarizing text, and more.
- **Adaptability**: Fine-tuned for specific uses (e.g., customer support, creative writing).

Examples include OpenAI's GPT, Google's Gemini, and Meta's Llama. While powerful, LLMs may occasionally hallucinate or rely on outdated information. They’re transforming industries by automating text-based tasks and enhancing human productivity.

Would you like a deeper dive into any aspect?
"""

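The sample output wraps the difficulty judgment in `<judge>...</judge>` and marks the chosen mode with `<think off>`. A downstream application would probably want to separate those parts from the answer itself. The following is a minimal parsing sketch under two assumptions: that the think-on case uses a matching `<think on>` tag (the source only shows `<think off>`), and that everything after the mode tag is the answer. `ParsedOutput` and `parse_autothink_output` are hypothetical names, and the sample string is an abbreviated version of the output above.

```python
import re
from dataclasses import dataclass
from typing import Optional

JUDGE_RE = re.compile(r"<judge>(.*?)</judge>", re.DOTALL)
# "<think on>" is an assumption; only "<think off>" appears in the sample output.
MODE_RE = re.compile(r"<think (on|off)>")


@dataclass
class ParsedOutput:
    judge: Optional[str]  # text of the difficulty judgment, if present
    mode: Optional[str]   # "on", "off", or None when no mode tag is found
    answer: str           # remaining text after the tags


def parse_autothink_output(content: str) -> ParsedOutput:
    judge_match = JUDGE_RE.search(content)
    # Look for the mode tag only after the judge block, if there is one.
    start = judge_match.end() if judge_match else 0
    mode_match = MODE_RE.search(content, start)
    # Fall back to the text after </judge>, or to the whole string.
    answer_start = mode_match.end() if mode_match else start
    return ParsedOutput(
        judge=judge_match.group(1).strip() if judge_match else None,
        mode=mode_match.group(1) if mode_match else None,
        answer=content[answer_start:].strip(),
    )


sample = (
    "<judge>\nThis is a definitional query. Requires think-off mode.\n</judge>\n\n"
    "<think off>\nLarge Language Models (LLMs) are advanced AI systems."
)
parsed = parse_autothink_output(sample)
print(parsed.mode)
print(parsed.answer)
```

Returning `None` for a missing tag rather than raising keeps the helper usable on outputs that do not follow the expected layout.
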
Strengths and limitations:

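Kwaipilot/KwaiCoder-AutoThink-preview offers several advantages, including higher accuracy, adaptability, and efficiency. Because it predicts task difficulty and adjusts its reasoning depth accordingly, it is a capable tool for a wide range of scenarios. Note, however, that the preview checkpoint may overthink or underthink on tasks outside its training distribution, so it should be used responsibly and its factual outputs should be verified.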
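
One simple way to watch for overthinking or underthinking is to compare the mode the model chose with the mode a human reviewer expected for a small set of prompts. The sketch below assumes the mode tag has the form `<think on>` or `<think off>` (only the latter appears in the source). The record keys and the two example outputs are hand-written illustrations, not real model outputs.

```python
import re

# "<think on>" is an assumption; the source only shows "<think off>".
MODE_RE = re.compile(r"<think (on|off)>")


def find_mode_mismatches(records):
    """Return records whose emitted mode differs from the reviewer's expectation.

    Each record is a dict with hypothetical keys: "prompt", "expected_mode"
    ("on" or "off", assigned by a human reviewer) and "output" (decoded text).
    """
    mismatches = []
    for record in records:
        match = MODE_RE.search(record["output"])
        actual = match.group(1) if match else None
        if actual != record["expected_mode"]:
            mismatches.append({**record, "actual_mode": actual})
    return mismatches


# Hand-written example records for illustration only.
records = [
    {"prompt": "Define an LLM.", "expected_mode": "off",
     "output": "<judge>Simple.</judge>\n<think off>\nAn LLM is ..."},
    {"prompt": "Prove the lemma.", "expected_mode": "on",
     "output": "<judge>Simple.</judge>\n<think off>\nThe lemma holds."},
]
mismatches = find_mode_mismatches(records)
for item in mismatches:
    print(item["prompt"], "expected", item["expected_mode"], "got", item["actual_mode"])
```

A mismatch only flags a case for review. Whether the answer itself is correct still has to be checked separately.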

Conclusion:

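Kwaipilot/KwaiCoder-AutoThink-preview is an important step in the development of AutoThink large language models. Its combination of thinking and non-thinking abilities with dynamic reasoning-depth adjustment makes it a versatile and efficient tool for a variety of language tasks. As the Kwaipilot team continues to refine it, the model can be expected to deliver stronger performance and find broader applications.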