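1. Background

In a previous CSDN blog post, "增加等IO状态的唤醒堆栈打印及缺页异常导致iowait分析", we further improved the stack printing for D-state and IO-wait events, added printing of the waker's stack, and analyzed a fairly typical case in which a page fault going through filemap_fault causes iowait.

In this post, we add more information for that page-fault-induced iowait scenario: we print which file the filemap_fault was for, including the file's absolute path.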

Chapter 2 below gives the source code and walks through it, and Chapter 3 shows the results.

2. Source code and analysis

2.1 Full source code

#include <linux/module.h>

#include <linux/capability.h>

#include <linux/sched.h>

#include <linux/uaccess.h>

#include <linux/proc_fs.h>

#include <linux/ctype.h>

#include <linux/seq_file.h>

#include <linux/poll.h>

#include <linux/types.h>

#include <linux/ioctl.h>

#include <linux/errno.h>

#include <linux/stddef.h>

#include <linux/lockdep.h>

#include <linux/kthread.h>

#include <linux/sched.h>

#include <linux/delay.h>

#include <linux/wait.h>

#include <linux/init.h>

#include <asm/atomic.h>

#include <trace/events/workqueue.h>

#include <linux/sched/clock.h>

#include <linux/string.h>

#include <linux/mm.h>

#include <linux/interrupt.h>

#include <linux/tracepoint.h>

#include <trace/events/osmonitor.h>

#include <trace/events/sched.h>

#include <trace/events/irq.h>

#include <trace/events/kmem.h>

#include <linux/ptrace.h>

#include <linux/uaccess.h>

#include <asm/processor.h>

#include <linux/sched/task_stack.h>

#include <linux/nmi.h>

#include <asm/apic.h>

#include <linux/version.h>

#include <linux/sched/mm.h>

#include <asm/irq_regs.h>

#include <linux/kallsyms.h>

#include <linux/kprobes.h>

#include <linux/stop_machine.h>

MODULE_LICENSE("GPL");

MODULE_AUTHOR("zhaoxin");

MODULE_DESCRIPTION("Module for monitoring D-state tasks.");

MODULE_VERSION("1.0");

#define IODELAY_TRACEPOINT_ENABLE

#define TEST_STACK_TRACE_ENTRIES 32

typedef unsigned int (*stack_trace_save_tsk_func)(struct task_struct *task,

unsigned long *store, unsigned int size,

unsigned int skipnr);

stack_trace_save_tsk_func _stack_trace_save_tsk;

typedef int (*get_cmdline_func)(struct task_struct *task, char *buffer, int buflen);

get_cmdline_func _get_cmdline_func;

#define TESTDIOMONITOR_SAMPLEDESC_SWDSTART "swDstart"

#define TESTDIOMONITOR_SAMPLEDESC_WADSTOP "waDstop"

#define TESTDIOMONITOR_SAMPLEDESC_SWDIOSTART "swDiostart"

#define TESTDIOMONITOR_SAMPLEDESC_WADIOSTOP "waDiostop"

#define TESTDIOMONITOR_SAMPLEDESC_DEXCEED "Dexceed"

#define TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED "Dioexceed"

#define TESTDIOMONITOR_SAMPLEDESC_IOEXCEED "Ioexceed"

#define TESTDIOMONITOR_SIMPLE

#ifdef TESTDIOMONITOR_SIMPLE

#define TESTDIOMONITOR_SIMPLE_THRESHOLDNS 0ull//5000000ull

#endif

// 1ms

//#define TESTDIOMONITOR_DEXCEED_THRESHOLD 1000ull//1000000ull

struct uclamp_bucket {

unsigned long value : bits_per(SCHED_CAPACITY_SCALE);

unsigned long tasks : BITS_PER_LONG - bits_per(SCHED_CAPACITY_SCALE);

};

struct uclamp_rq {

unsigned int value;

struct uclamp_bucket bucket[UCLAMP_BUCKETS];

};

/* CFS-related fields in a runqueue */

struct cfs_rq {

struct load_weight load;

unsigned int nr_running;

unsigned int h_nr_running; /* SCHED_{NORMAL,BATCH,IDLE} */

unsigned int idle_nr_running; /* SCHED_IDLE */

unsigned int idle_h_nr_running; /* SCHED_IDLE */

u64 exec_clock;

u64 min_vruntime;

#ifdef CONFIG_SCHED_CORE

unsigned int forceidle_seq;

u64 min_vruntime_fi;

#endif

#ifndef CONFIG_64BIT

u64 min_vruntime_copy;

#endif

struct rb_root_cached tasks_timeline;

/*

* 'curr' points to currently running entity on this cfs_rq.

* It is set to NULL otherwise (i.e when none are currently running).

*/

struct sched_entity *curr;

struct sched_entity *next;

struct sched_entity *last;

struct sched_entity *skip;

#ifdef CONFIG_SCHED_DEBUG

unsigned int nr_spread_over;

#endif

#ifdef CONFIG_SMP

/*

* CFS load tracking

*/

struct sched_avg avg;

#ifndef CONFIG_64BIT

u64 last_update_time_copy;

#endif

struct {

raw_spinlock_t lock ____cacheline_aligned;

int nr;

unsigned long load_avg;

unsigned long util_avg;

unsigned long runnable_avg;

} removed;

#ifdef CONFIG_FAIR_GROUP_SCHED

unsigned long tg_load_avg_contrib;

long propagate;

long prop_runnable_sum;

/*

* h_load = weight * f(tg)

*

* Where f(tg) is the recursive weight fraction assigned to

* this group.

*/

unsigned long h_load;

u64 last_h_load_update;

struct sched_entity *h_load_next;

#endif /* CONFIG_FAIR_GROUP_SCHED */

#endif /* CONFIG_SMP */

#ifdef CONFIG_FAIR_GROUP_SCHED

struct rq *rq; /* CPU runqueue to which this cfs_rq is attached */

/*

* leaf cfs_rqs are those that hold tasks (lowest schedulable entity in

* a hierarchy). Non-leaf lrqs hold other higher schedulable entities

* (like users, containers etc.)

*

* leaf_cfs_rq_list ties together list of leaf cfs_rq's in a CPU.

* This list is used during load balance.

*/

int on_list;

struct list_head leaf_cfs_rq_list;

struct task_group *tg; /* group that "owns" this runqueue */

/* Locally cached copy of our task_group's idle value */

int idle;

#ifdef CONFIG_CFS_BANDWIDTH

int runtime_enabled;

s64 runtime_remaining;

u64 throttled_pelt_idle;

#ifndef CONFIG_64BIT

u64 throttled_pelt_idle_copy;

#endif

u64 throttled_clock;

u64 throttled_clock_pelt;

u64 throttled_clock_pelt_time;

int throttled;

int throttle_count;

struct list_head throttled_list;

#ifdef CONFIG_SMP

struct list_head throttled_csd_list;

#endif

#endif /* CONFIG_CFS_BANDWIDTH */

#endif /* CONFIG_FAIR_GROUP_SCHED */

};

struct rt_prio_array {

DECLARE_BITMAP(bitmap, MAX_RT_PRIO+1); /* include 1 bit for delimiter */

struct list_head queue[MAX_RT_PRIO];

};

/* Real-Time classes' related field in a runqueue: */

struct rt_rq {

struct rt_prio_array active;

unsigned int rt_nr_running;

unsigned int rr_nr_running;

#if defined CONFIG_SMP || defined CONFIG_RT_GROUP_SCHED

struct {

int curr; /* highest queued rt task prio */

#ifdef CONFIG_SMP

int next; /* next highest */

#endif

} highest_prio;

#endif

#ifdef CONFIG_SMP

unsigned int rt_nr_migratory;

unsigned int rt_nr_total;

int overloaded;

struct plist_head pushable_tasks;

#endif /* CONFIG_SMP */

int rt_queued;

int rt_throttled;

u64 rt_time;

u64 rt_runtime;

/* Nests inside the rq lock: */

raw_spinlock_t rt_runtime_lock;

#ifdef CONFIG_RT_GROUP_SCHED

unsigned int rt_nr_boosted;

struct rq *rq;

struct task_group *tg;

#endif

};

/* Deadline class' related fields in a runqueue */

struct dl_rq {

/* runqueue is an rbtree, ordered by deadline */

struct rb_root_cached root;

unsigned int dl_nr_running;

#ifdef CONFIG_SMP

/*

* Deadline values of the currently executing and the

* earliest ready task on this rq. Caching these facilitates

* the decision whether or not a ready but not running task

* should migrate somewhere else.

*/

struct {

u64 curr;

u64 next;

} earliest_dl;

unsigned int dl_nr_migratory;

int overloaded;

/*

* Tasks on this rq that can be pushed away. They are kept in

* an rb-tree, ordered by tasks' deadlines, with caching

* of the leftmost (earliest deadline) element.

*/

struct rb_root_cached pushable_dl_tasks_root;

#else

struct dl_bw dl_bw;

#endif

/*

* "Active utilization" for this runqueue: increased when a

* task wakes up (becomes TASK_RUNNING) and decreased when a

* task blocks

*/

u64 running_bw;

/*

* Utilization of the tasks "assigned" to this runqueue (including

* the tasks that are in runqueue and the tasks that executed on this

* CPU and blocked). Increased when a task moves to this runqueue, and

* decreased when the task moves away (migrates, changes scheduling

* policy, or terminates).

* This is needed to compute the "inactive utilization" for the

* runqueue (inactive utilization = this_bw - running_bw).

*/

u64 this_bw;

u64 extra_bw;

/*

* Maximum available bandwidth for reclaiming by SCHED_FLAG_RECLAIM

* tasks of this rq. Used in calculation of reclaimable bandwidth(GRUB).

*/

u64 max_bw;

/*

* Inverse of the fraction of CPU utilization that can be reclaimed

* by the GRUB algorithm.

*/

u64 bw_ratio;

};

struct rq {

/* runqueue lock: */

raw_spinlock_t __lock;

/*

* nr_running and cpu_load should be in the same cacheline because

* remote CPUs use both these fields when doing load calculation.

*/

unsigned int nr_running;

#ifdef CONFIG_NUMA_BALANCING

unsigned int nr_numa_running;

unsigned int nr_preferred_running;

unsigned int numa_migrate_on;

#endif

#ifdef CONFIG_NO_HZ_COMMON

#ifdef CONFIG_SMP

unsigned long last_blocked_load_update_tick;

unsigned int has_blocked_load;

call_single_data_t nohz_csd;

#endif /* CONFIG_SMP */

unsigned int nohz_tick_stopped;

atomic_t nohz_flags;

#endif /* CONFIG_NO_HZ_COMMON */

#ifdef CONFIG_SMP

unsigned int ttwu_pending;

#endif

u64 nr_switches;

#ifdef CONFIG_UCLAMP_TASK

/* Utilization clamp values based on CPU's RUNNABLE tasks */

struct uclamp_rq uclamp[UCLAMP_CNT] ____cacheline_aligned;

unsigned int uclamp_flags;

#define UCLAMP_FLAG_IDLE 0x01

#endif

struct cfs_rq cfs;

struct rt_rq rt;

struct dl_rq dl;

#ifdef CONFIG_FAIR_GROUP_SCHED

/* list of leaf cfs_rq on this CPU: */

struct list_head leaf_cfs_rq_list;

struct list_head *tmp_alone_branch;

#endif /* CONFIG_FAIR_GROUP_SCHED */

/*

* This is part of a global counter where only the total sum

* over all CPUs matters. A task can increase this counter on

* one CPU and if it got migrated afterwards it may decrease

* it on another CPU. Always updated under the runqueue lock:

*/

unsigned int nr_uninterruptible;

struct task_struct __rcu *curr;

struct task_struct *idle;

struct task_struct *stop;

unsigned long next_balance;

struct mm_struct *prev_mm;

unsigned int clock_update_flags;

u64 clock;

/* Ensure that all clocks are in the same cache line */

u64 clock_task ____cacheline_aligned;

u64 clock_pelt;

unsigned long lost_idle_time;

atomic_t nr_iowait;

#ifdef CONFIG_SCHED_DEBUG

u64 last_seen_need_resched_ns;

int ticks_without_resched;

#endif

#ifdef CONFIG_MEMBARRIER

int membarrier_state;

#endif

#ifdef CONFIG_SMP

struct root_domain *rd;

struct sched_domain __rcu *sd;

unsigned long cpu_capacity;

unsigned long cpu_capacity_orig;

struct callback_head *balance_callback;

unsigned char nohz_idle_balance;

unsigned char idle_balance;

unsigned long misfit_task_load;

/* For active balancing */

int active_balance;

int push_cpu;

struct cpu_stop_work active_balance_work;

/* CPU of this runqueue: */

int cpu;

int online;

struct list_head cfs_tasks;

struct sched_avg avg_rt;

struct sched_avg avg_dl;

#ifdef CONFIG_HAVE_SCHED_AVG_IRQ

struct sched_avg avg_irq;

#endif

#ifdef CONFIG_SCHED_THERMAL_PRESSURE

struct sched_avg avg_thermal;

#endif

u64 idle_stamp;

u64 avg_idle;

unsigned long wake_stamp;

u64 wake_avg_idle;

/* This is used to determine avg_idle's max value */

u64 max_idle_balance_cost;

#ifdef CONFIG_HOTPLUG_CPU

struct rcuwait hotplug_wait;

#endif

#endif /* CONFIG_SMP */

#ifdef CONFIG_IRQ_TIME_ACCOUNTING

u64 prev_irq_time;

#endif

#ifdef CONFIG_PARAVIRT

u64 prev_steal_time;

#endif

#ifdef CONFIG_PARAVIRT_TIME_ACCOUNTING

u64 prev_steal_time_rq;

#endif

/* calc_load related fields */

unsigned long calc_load_update;

long calc_load_active;

#ifdef CONFIG_SCHED_HRTICK

#ifdef CONFIG_SMP

call_single_data_t hrtick_csd;

#endif

struct hrtimer hrtick_timer;

ktime_t hrtick_time;

#endif

#ifdef CONFIG_SCHEDSTATS

/* latency stats */

struct sched_info rq_sched_info;

unsigned long long rq_cpu_time;

/* could above be rq->cfs_rq.exec_clock + rq->rt_rq.rt_runtime ? */

/* sys_sched_yield() stats */

unsigned int yld_count;

/* schedule() stats */

unsigned int sched_count;

unsigned int sched_goidle;

/* try_to_wake_up() stats */

unsigned int ttwu_count;

unsigned int ttwu_local;

#endif

#ifdef CONFIG_CPU_IDLE

/* Must be inspected within a rcu lock section */

struct cpuidle_state *idle_state;

#endif

#ifdef CONFIG_SMP

unsigned int nr_pinned;

#endif

unsigned int push_busy;

struct cpu_stop_work push_work;

#ifdef CONFIG_SCHED_CORE

/* per rq */

struct rq *core;

struct task_struct *core_pick;

unsigned int core_enabled;

unsigned int core_sched_seq;

struct rb_root core_tree;

/* shared state -- careful with sched_core_cpu_deactivate() */

unsigned int core_task_seq;

unsigned int core_pick_seq;

unsigned long core_cookie;

unsigned int core_forceidle_count;

unsigned int core_forceidle_seq;

unsigned int core_forceidle_occupation;

u64 core_forceidle_start;

#endif

};

// runqueues (the symbol is not exported, so it is resolved at load time via kallsyms_lookup_name)

struct rq* _prq = NULL;

struct rq* my_cpu_rq(int i_cpu)

{

return per_cpu_ptr(_prq, i_cpu);

}

u64 my_rq_clock_task(void)

{

struct rq* prq = my_cpu_rq(smp_processor_id());

return prq->clock_task;

}

#define TESTDIOMONITOR_FILE_MAXLEN 1024

typedef struct testdiomonitor_sample {

struct timespec64 time;

int cpu;

int pid;

int tgid;

int ppid;

char comm[TASK_COMM_LEN];

char ppidcomm[TASK_COMM_LEN];

// 0 or 1

int bin_iowait;

/*

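* "swDstart" // recorded in sched_switch

* "waDstop" // recorded in sched_waking

* "swDiostart" // recorded in sched_switch

* "waDiostop" // recorded in sched_waking

* "Dexceed" // threshold exceeded, not iowait

* "Dioexceed" // threshold exceeded, iowait

* "Ioexceed" // threshold exceeded, reported by the iodelay_account tracepoint

*/

const char* desc;

u64 dtimens; // in nanoseconds: how long the task stayed in D state

u64 iowaittimens; // in nanoseconds: how long the task waited for IO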

int stackn;

void* parray_stack[TEST_STACK_TRACE_ENTRIES];

int wakercpu;

int wakerpid;

int wakertgid;

int wakerppid;

char wakercomm[TASK_COMM_LEN];

char wakerppidcomm[TASK_COMM_LEN];

int wakerstackn;

void* parray_wakerstack[TEST_STACK_TRACE_ENTRIES];

char filepath[TESTDIOMONITOR_FILE_MAXLEN];

u32 writedone; // 0 or 1

} testdiomonitor_sample;

#define TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT 8192

typedef struct testdiomonitor_sample_ringbuff {

testdiomonitor_sample* parray_sample;

volatile u64 wp; // Index is wp & (TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT - 1).

volatile u64 rp; // Index is rp & (TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT - 1).

u32 skipcount; // number of events dropped because the ring buffer was full; 0 means none were dropped

} testdiomonitor_sample_ringbuff;

#define TESTDIOMONITOR_LINEBUFF 1024

typedef struct testdiomonitor_env {

struct file* file;

char file_linebuff[TESTDIOMONITOR_LINEBUFF];

int headoffset;

loff_t file_pos;

testdiomonitor_sample_ringbuff ringbuff;

} testdiomonitor_env;

static testdiomonitor_env _env;

static struct delayed_work work_write_file;

static struct workqueue_struct *wq_write_file;

#define FILENAME "test_new.txt"

void init_file(void)

{

_env.file = filp_open(FILENAME, O_WRONLY | O_CREAT | O_TRUNC, 0644);

if (IS_ERR(_env.file)) {

_env.file = NULL;

}

}

void exit_file(void)

{

if (_env.file) {

filp_close(_env.file, NULL);

}

}

void testdiomonitor_write_file(char* i_pchar, int i_size)

{

if (_env.file) {

kernel_write(_env.file, i_pchar, i_size, &_env.file_pos);

}

}

void testdiomonitor_write_file_emptyline(void)

{

testdiomonitor_write_file("\n", strlen("\n"));

}

void testdiomonitor_file_oneline(const char* i_format, ...)

{

char* pcontent = &_env.file_linebuff[_env.headoffset];

va_list args;

va_start(args, i_format);

vsnprintf(pcontent, TESTDIOMONITOR_LINEBUFF - _env.headoffset, i_format, args);

va_end(args);

testdiomonitor_write_file(_env.file_linebuff, strlen(_env.file_linebuff));

}

void testdiomonitor_replace_null_with_space(char *str, int n) {

for (int i = 0; i < n - 1; i++) {

if (str[i] == '\0') {

str[i] = ' ';

}

}

}

void testdiomonitor_set_cmdline(char* i_pbuff, int i_buffsize, struct task_struct* i_ptask)

{

int ret = _get_cmdline_func(i_ptask, i_pbuff, i_buffsize);

if (ret <= 0) {

i_pbuff[0] = '\0';

return;

}

testdiomonitor_replace_null_with_space(i_pbuff, ret);

i_pbuff[ret - 1] = '\0';

}

void testdiomonitor_checkget_parentinfo_and_cmdline(testdiomonitor_sample* io_psample, struct task_struct* i_ptask)

{

struct task_struct* parent;

rcu_read_lock();

parent = rcu_dereference(i_ptask->real_parent);

io_psample->ppid = parent->pid;

strlcpy(io_psample->ppidcomm, parent->comm, TASK_COMM_LEN);

rcu_read_unlock();

}

void testdiomonitor_checkget_parentinfo_and_cmdline_waker(testdiomonitor_sample* io_psample, struct task_struct* i_ptask)

{

struct task_struct* parent;

rcu_read_lock();

parent = rcu_dereference(i_ptask->real_parent);

io_psample->wakerppid = parent->pid;

strlcpy(io_psample->wakerppidcomm, parent->comm, TASK_COMM_LEN);

rcu_read_unlock();

}

#define TESTDIOMONITOR_COMMANDLINE_MAX 128

static void write_file(struct work_struct *w)

{

ssize_t ret;

u32 index;

testdiomonitor_sample* psample;

struct tm t;

char timestr[64];

char exceedstr[64];

char temp_commandline[TESTDIOMONITOR_COMMANDLINE_MAX];

struct pid* pid_struct;

struct task_struct* ptask;

int stacki;

while (_env.ringbuff.rp != _env.ringbuff.wp) {

index = (_env.ringbuff.rp & (TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT - 1));

psample = &_env.ringbuff.parray_sample[index];

if (psample->writedone != 1) {

break;

}

testdiomonitor_write_file_emptyline();

_env.headoffset = sprintf(_env.file_linebuff, "[%llu][%s] ", _env.ringbuff.rp, psample->desc);

// The +8h offset hard-codes UTC+8 for the printed timestamp.

time64_to_tm(psample->time.tv_sec + 8 * 60 * 60, 0, &t);

snprintf(timestr, 64, "%04ld-%02d-%02d-%02d_%02d_%02d.%09ld",

1900 + t.tm_year, t.tm_mon + 1, t.tm_mday, t.tm_hour, t.tm_min, t.tm_sec, psample->time.tv_nsec);

if (psample->desc == TESTDIOMONITOR_SAMPLEDESC_DEXCEED) {

snprintf(exceedstr, 64, "dtimens[%llu]", psample->dtimens);

}

else if (psample->desc == TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED) {

snprintf(exceedstr, 64, "iowaittimens[%llu]", psample->iowaittimens);

}

else if (psample->desc == TESTDIOMONITOR_SAMPLEDESC_IOEXCEED) {

snprintf(exceedstr, 64, "delayacct_iowaittimens[%llu]", psample->iowaittimens);

}

else {

exceedstr[0] = '\0';

}

if (psample->desc == TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED) {

testdiomonitor_file_oneline("begin...time[%s]wakercpu[%d]desc[%s]%s\n",

timestr, psample->wakercpu, psample->desc, "wakerDioexceed");

testdiomonitor_file_oneline("wakertgid[%d]wakerpid[%d]wakercomm[%s]wakerppid[%d]wakerppidcomm[%s]\n",

psample->wakertgid, psample->wakerpid, psample->wakercomm, psample->wakerppid, psample->wakerppidcomm);

pid_struct = find_get_pid(psample->wakerpid);

if (pid_struct) {

ptask = get_pid_task(pid_struct, PIDTYPE_PID);

if (ptask) {

testdiomonitor_set_cmdline(temp_commandline, TESTDIOMONITOR_COMMANDLINE_MAX, ptask);

put_task_struct(ptask);

}

else {

temp_commandline[0] = '\0';

}

put_pid(pid_struct);

}

else {

temp_commandline[0] = '\0';

}

testdiomonitor_file_oneline("wakercommandline[%s]\n", temp_commandline);

pid_struct = find_get_pid(psample->wakerppid);

if (pid_struct) {

ptask = get_pid_task(pid_struct, PIDTYPE_PID);

if (ptask) {

testdiomonitor_set_cmdline(temp_commandline, TESTDIOMONITOR_COMMANDLINE_MAX, ptask);

put_task_struct(ptask);

}

else {

temp_commandline[0] = '\0';

}

put_pid(pid_struct);

}

else {

temp_commandline[0] = '\0';

}

testdiomonitor_file_oneline("wakerppid_commandline[%s]\n", temp_commandline);

testdiomonitor_file_oneline("stack[%d]:\n", psample->wakerstackn);

for (stacki = 0; stacki < psample->wakerstackn; stacki++) {

testdiomonitor_file_oneline("%*c%pS\n", 5, ' ', (void *)psample->parray_wakerstack[stacki]);

}

testdiomonitor_file_oneline("cpu[%d]desc[%s]%s\n",

psample->cpu, psample->desc, exceedstr);

}

else {

testdiomonitor_file_oneline("begin...time[%s]cpu[%d]desc[%s]%s\n",

timestr, psample->cpu, psample->desc, exceedstr);

}

testdiomonitor_file_oneline("tgid[%d]pid[%d]comm[%s]ppid[%d]ppidcomm[%s]\n",

psample->tgid, psample->pid, psample->comm, psample->ppid, psample->ppidcomm);

pid_struct = find_get_pid(psample->pid);

if (pid_struct) {

ptask = get_pid_task(pid_struct, PIDTYPE_PID);

if (ptask) {

testdiomonitor_set_cmdline(temp_commandline, TESTDIOMONITOR_COMMANDLINE_MAX, ptask);

put_task_struct(ptask);

}

else {

temp_commandline[0] = '\0';

}

put_pid(pid_struct);

}

else {

temp_commandline[0] = '\0';

}

testdiomonitor_file_oneline("commandline[%s]\n", temp_commandline);

pid_struct = find_get_pid(psample->ppid);

if (pid_struct) {

ptask = get_pid_task(pid_struct, PIDTYPE_PID);

if (ptask) {

testdiomonitor_set_cmdline(temp_commandline, TESTDIOMONITOR_COMMANDLINE_MAX, ptask);

put_task_struct(ptask);

}

else {

temp_commandline[0] = '\0';

}

put_pid(pid_struct);

}

else {

temp_commandline[0] = '\0';

}

testdiomonitor_file_oneline("ppid_commandline[%s]\n", temp_commandline);

testdiomonitor_file_oneline("filepath[%s]\n", psample->filepath);

testdiomonitor_file_oneline("stack[%d]:\n", psample->stackn);

for (stacki = 0; stacki < psample->stackn; stacki++) {

testdiomonitor_file_oneline("%*c%pS\n", 5, ' ', (void *)psample->parray_stack[stacki]);

}

testdiomonitor_write_file_emptyline();

psample->writedone = 0;

_env.ringbuff.rp ++;

}

queue_delayed_work_on(nr_cpu_ids - 1, wq_write_file,

&work_write_file, 1);

}

static void init_write_file(void)

{

init_file();

wq_write_file = alloc_workqueue("testdiomonitor_write_file", WQ_MEM_RECLAIM, 0);

INIT_DELAYED_WORK(&work_write_file, write_file);

queue_delayed_work_on(nr_cpu_ids - 1, wq_write_file,

&work_write_file, 3);

}

static void exit_write_file(void)

{

cancel_delayed_work_sync(&work_write_file);

destroy_workqueue(wq_write_file);

exit_file();

}

void init_testdiomonitor_sample_ringbuff(void)

{


_env.ringbuff.parray_sample = kvzalloc(sizeof(testdiomonitor_sample) * TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT, GFP_KERNEL);

}

void exit_testdiomonitor_sample_ringbuff(void)

{

kvfree(_env.ringbuff.parray_sample);

}

testdiomonitor_sample* testdiomonitor_get_psample(void)

{

u64 windex_raw, windex_raw_old;

u32 windex;

while (1) {

windex_raw = _env.ringbuff.wp;

if (windex_raw - _env.ringbuff.rp >= (u64)(TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT)) {

_env.ringbuff.skipcount ++;

return NULL;

}

// atomic64_cmpxchg() returns the old value; the slot is ours only if it still equals windex_raw

windex_raw_old = atomic64_cmpxchg((atomic64_t*)&_env.ringbuff.wp,

windex_raw, windex_raw + 1);

if (windex_raw_old == windex_raw) {

break;

}

}

windex = (u32)(windex_raw & (u64)(TESTDIOMONITOR_SAMPLE_RINGBUFF_MAXCOUNT - 1));

return &_env.ringbuff.parray_sample[windex];

}

static u64 _magic_number = 0xABCDEFull;

void* _dl_sched_class = NULL;

int get_file_dir_by_folio(struct folio *i_fo, char* i_path, int i_len);

void testdiomonitor_add_sample(const char* i_desc, struct task_struct* i_task, u64 i_timens)

{

testdiomonitor_sample* psample = testdiomonitor_get_psample();

if (!psample) {

return;

}

ktime_get_real_ts64(&psample->time);

psample->cpu = task_cpu(i_task);

psample->pid = i_task->pid;

psample->tgid = i_task->tgid;

strlcpy(psample->comm, i_task->comm, TASK_COMM_LEN);

testdiomonitor_checkget_parentinfo_and_cmdline(psample, i_task);

psample->bin_iowait = i_task->in_iowait;

psample->desc = i_desc;

if (i_desc == TESTDIOMONITOR_SAMPLEDESC_DEXCEED) {

psample->dtimens = i_timens;

}

else if (i_desc == TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED || i_desc == TESTDIOMONITOR_SAMPLEDESC_IOEXCEED) {

psample->iowaittimens = i_timens;

}

psample->stackn = _stack_trace_save_tsk(i_task, (unsigned long*)psample->parray_stack, TEST_STACK_TRACE_ENTRIES, 0);

if (i_desc == TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED) {

psample->wakercpu = smp_processor_id();

psample->wakerpid = current->pid;

psample->wakertgid = current->tgid;

strlcpy(psample->wakercomm, current->comm, TASK_COMM_LEN);

testdiomonitor_checkget_parentinfo_and_cmdline_waker(psample, current);

psample->wakerstackn = _stack_trace_save_tsk(current, (unsigned long*)psample->parray_wakerstack, TEST_STACK_TRACE_ENTRIES, 0);

psample->filepath[0] = '\0';

// _dl_sched_class already holds the address of dl_sched_class, so compare against it directly.

if (i_task->sched_class != _dl_sched_class) {

if (i_task->dl.dl_runtime == _magic_number) {

//if (sched_clock() - i_task->dl.dl_deadline >= TESTDIOMONITOR_SIMPLE_THRESHOLDNS)

{

//printk("__folio_lock_killable wait %llu ns\n", sched_clock() - current->dl.dl_deadline);

//dump_stack();

if (get_file_dir_by_folio((struct folio*)i_task->dl.dl_period, psample->filepath, TESTDIOMONITOR_FILE_MAXLEN) < 0) {

//printk("get_file_dir_by_folio fail!\n");

}

}

// Clear the marker on the task that set it (the wakee), not on the waker.

i_task->dl.dl_runtime = 0;

}

}

}

psample->writedone = 1;

}

static void cb_sched_switch(void *i_data, bool i_preempt,

struct task_struct *i_prev,

struct task_struct *i_next,

unsigned int i_prev_state)

{

#ifndef TESTDIOMONITOR_SIMPLE

void* parray_stack[TEST_STACK_TRACE_ENTRIES];

int num_stack;

int stacki;

if (i_prev_state == TASK_UNINTERRUPTIBLE) {

if (i_prev->in_iowait) {

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_SWDIOSTART, i_prev, 0);

}

else {

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_SWDSTART, i_prev, 0);

}

}

else if (i_prev->in_iowait) {

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_SWDIOSTART, i_prev, 0);

}

#endif

}

const char* getstatstr_bystate(u32 i_state) {

switch (i_state) {

case TASK_RUNNING:

return "TASK_RUNNING";

case TASK_INTERRUPTIBLE:

return "TASK_INTERRUPTIBLE";

case TASK_UNINTERRUPTIBLE:

return "TASK_UNINTERRUPTIBLE";

default:

return "other";

}

}

static void cb_sched_waking(void *i_data, struct task_struct *i_p) {

if (i_p->__state == TASK_UNINTERRUPTIBLE) {

//u64 currns = my_rq_clock_task();

struct rq* prq = my_cpu_rq(task_cpu(i_p));

u64 currns = prq->clock_task;

u64 local_c = local_clock();

int cpuid = smp_processor_id();

if (i_p->in_iowait) {

#ifndef TESTDIOMONITOR_SIMPLE

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_WADIOSTOP, i_p, 0);

#endif

#ifdef TESTDIOMONITOR_SIMPLE

if (currns - i_p->se.exec_start >= TESTDIOMONITOR_SIMPLE_THRESHOLDNS)

#endif

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED, i_p, currns - i_p->se.exec_start);

#ifndef TESTDIOMONITOR_SIMPLE

if (i_p->se.exec_start > currns)

{

//if (task_cpu(i_p) == cpuid)

{

printk("comm[%s]pid[%d]exec_start[%llu]currns[%llu]local_clock[%llu]last_cpu[%d]cpuid[%d]\n",

i_p->comm, i_p->pid, i_p->se.exec_start, currns, local_c, task_cpu(i_p), cpuid);

}

}

// if (printk_ratelimit()) {

// printk("waking dump_stack[D]:\n");

// dump_stack();

// }

#endif

}

#ifndef TESTDIOMONITOR_SIMPLE

else {

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_WADSTOP, i_p, 0);

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_DEXCEED, i_p, my_rq_clock_task() - i_p->se.exec_start);

if (i_p->se.exec_start > currns)

{

//if (task_cpu(i_p) == cpuid)

{

printk("comm[%s]pid[%d]exec_start[%llu]currns[%llu]local_clock[%llu]last_cpu[%d]cpuid[%d]\n",

i_p->comm, i_p->pid, i_p->se.exec_start, currns, local_c, task_cpu(i_p), cpuid);

}

}

}

#endif

}

else if (i_p->in_iowait) {

struct rq* prq = my_cpu_rq(task_cpu(i_p));

u64 currns = prq->clock_task;

u64 local_c = local_clock();

int cpuid = smp_processor_id();

//if (printk_ratelimit())

// {

// printk("i_p->__state=[%u][%s]\n", i_p->__state, getstatstr_bystate(i_p->__state));

// printk("waking dump_stack[K]:\n");

// dump_stack();

// }

#ifndef TESTDIOMONITOR_SIMPLE

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_WADIOSTOP, i_p, 0);

#endif

#ifdef TESTDIOMONITOR_SIMPLE

if (currns - i_p->se.exec_start >= TESTDIOMONITOR_SIMPLE_THRESHOLDNS)

#endif

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED, i_p, currns - i_p->se.exec_start);

}

}

static void cb_iodelay_account(void *i_data, struct task_struct *i_curr,

unsigned long long i_delta)

{

#ifdef TESTDIOMONITOR_SIMPLE

if (i_delta >= TESTDIOMONITOR_SIMPLE_THRESHOLDNS)

#endif

testdiomonitor_add_sample(TESTDIOMONITOR_SAMPLEDESC_IOEXCEED, i_curr, i_delta);

}

struct kern_tracepoint {

void *callback;

struct tracepoint *ptr;

bool bregister;

};

static void clear_kern_tracepoint(struct kern_tracepoint *tp)

{

if (tp->bregister) {

tracepoint_probe_unregister(tp->ptr, tp->callback, NULL);

}

}

#define INIT_KERN_TRACEPOINT(tracepoint_name) \

static struct kern_tracepoint mykern_##tracepoint_name = {.callback = NULL, .ptr = NULL, .bregister = false};

#define TRACEPOINT_CHECK_AND_SET(tracepoint_name) \

static void tracepoint_name##_tracepoint_check_and_set(struct tracepoint *tp, void *priv) \

{ \

if (!strcmp(#tracepoint_name, tp->name)) \

{ \

((struct kern_tracepoint *)priv)->ptr = tp; \

return; \

} \

}

INIT_KERN_TRACEPOINT(sched_switch)

TRACEPOINT_CHECK_AND_SET(sched_switch)

INIT_KERN_TRACEPOINT(sched_waking)

TRACEPOINT_CHECK_AND_SET(sched_waking)

#ifdef IODELAY_TRACEPOINT_ENABLE

INIT_KERN_TRACEPOINT(iodelay_account)

TRACEPOINT_CHECK_AND_SET(iodelay_account)

#endif

typedef unsigned long (*kallsyms_lookup_name_func)(const char *name);

kallsyms_lookup_name_func _kallsyms_lookup_name_func;

void* get_func_by_symbol_name_kallsyms_lookup_name(void)

{

int ret;

void* pfunc = NULL;

struct kprobe kp;

memset(&kp, 0, sizeof(kp));

kp.symbol_name = "kallsyms_lookup_name";

kp.pre_handler = NULL;

kp.addr = NULL; // set explicitly for emphasis: the lookup goes through symbol_name

ret = register_kprobe(&kp);

if (ret < 0) {

printk("register_kprobe fail!\n");

return NULL;

}

printk("register_kprobe succeed!\n");

pfunc = (void*)kp.addr;

unregister_kprobe(&kp);

return pfunc;

}

void* get_func_by_symbol_name(const char* i_symbol)

{

if (_kallsyms_lookup_name_func == NULL) {

return NULL;

}

return (void*)_kallsyms_lookup_name_func(i_symbol);

}

enum behavior {

EXCLUSIVE, /* Hold ref to page and take the bit when woken, like

* __folio_lock() waiting on then setting PG_locked.

*/

SHARED, /* Hold ref to page and check the bit when woken, like

* folio_wait_writeback() waiting on PG_writeback.

*/

DROP, /* Drop ref to page before wait, no check when woken,

* like folio_put_wait_locked() on PG_locked.

*/

};

int kprobecb_folio_lock_killable_pre(struct kprobe* i_k, struct pt_regs* i_p)

{

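// Deadline tasks are skipped because the dl.* fields are reused below as scratch storage.

if (current->sched_class != _dl_sched_class) {

// x86-64 calling convention: the first four arguments arrive in rdi, rsi, rdx, rcx.

struct folio *fo = (struct folio*) i_p->di;

int bit_nr = (int)i_p->si;

int state = (int)i_p->dx;

enum behavior beh = (enum behavior)i_p->cx;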

if (bit_nr != PG_locked

|| state != TASK_KILLABLE

|| beh != EXCLUSIVE) {

return 0;

}

current->dl.dl_runtime = _magic_number;

current->dl.dl_deadline = sched_clock();

current->dl.dl_period = (u64)fo;

}

return 0;

}

int getfullpath(struct inode *inode,char* i_buffer,int i_len)

{

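struct dentry *dentry;

char *buffer, *path;

//printk("inode = %ld\n", inode->i_ino);

// This runs from the sched_waking tracepoint, i.e. in atomic context,

// so the scratch page must be allocated without sleeping.

buffer = (char *)__get_free_page(GFP_ATOMIC);

if (!buffer)

return -ENOMEM;

//spin_lock(&inode->i_lock);

hlist_for_each_entry(dentry, &inode->i_dentry, d_u.d_alias) {

path = dentry_path_raw(dentry, buffer, PAGE_SIZE);

if (IS_ERR(path)){

continue;

}

// If the inode has several aliases, the last valid one wins.

strlcpy(i_buffer, path, i_len);

//printk("dentry name = %s , path = %s", dentry->d_name.name, path);

}

//spin_unlock(&inode->i_lock);

// Free the scratch page exactly once, on every path out of the loop.

free_page((unsigned long)buffer);

return 0;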

}

int get_file_dir_by_folio(struct folio *i_fo, char* i_path, int i_len)

{

if (i_fo->mapping) {

struct inode *inode = i_fo->mapping->host;

if (inode) {

// struct dentry *dentry = inode->i_dentry;

// if (!dentry) {

// return -1;

// }

{

//char path_buf[256];

int ret = 0;

if ((ret = getfullpath(inode, i_path, i_len)) < 0) {

return ret;

}

// struct path path;

// //dentry_lock(dentry);

// path.dentry = dentry;

// path.mnt = dget(dentry->d_sb->s_root);

// if (dentry_path_raw(dentry, path_buf, sizeof(path_buf)) >= 0) {

// pr_info("File path: %s\n", path_buf);

// }

//dentry_unlock(dentry);

}

return 0;

}

}

return -1;

}

struct kprobe _kp1;

void kprobecb_folio_lock_killable_post(struct kprobe *p, struct pt_regs *regs,

unsigned long flags)

{

// if (current->sched_class != &_dl_sched_class) {

// if (current->dl.dl_runtime == _magic_number) {

// if (sched_clock() - current->dl.dl_deadline >= TESTDIOMONITOR_SIMPLE_THRESHOLDNS) {

// //printk("__folio_lock_killable wait %llu ns\n", sched_clock() - current->dl.dl_deadline);

// //dump_stack();

// if (get_file_dir_by_folio((struct folio*)current->dl.dl_period) < 0) {

// printk("get_file_dir_by_folio fail!\n");

// }

// }

// current->dl.dl_runtime = 0;

// }

// }

}

int kprobe_register_func_folio_lock_killable(void)

{

int ret;

memset(&_kp1, 0, sizeof(_kp1));

_kp1.symbol_name = "folio_wait_bit_common";

_kp1.pre_handler = kprobecb_folio_lock_killable_pre;

_kp1.post_handler = kprobecb_folio_lock_killable_post;

ret = register_kprobe(&_kp1);

if (ret < 0) {

printk("register_kprobe fail!\n");

return -1;

}

printk("register_kprobe success!\n");

return 0;

}

void kprobe_unregister_func_folio_lock_killable(void)

{

unregister_kprobe(&_kp1);

}

static int __init testdiomonitor_init(void)

{

//printk("offset of mmap_lock in mm_struct [%d]\n", offsetof(struct mm_struct, mmap_lock));

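_kallsyms_lookup_name_func = get_func_by_symbol_name_kallsyms_lookup_name();

/* Guard against a failed lookup before calling through the pointer below. */

if (_kallsyms_lookup_name_func == NULL) {

printk(KERN_ERR "get_func_by_symbol_name_kallsyms_lookup_name failed!\n");

return -1;

}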

_dl_sched_class = (void*)_kallsyms_lookup_name_func("dl_sched_class");

if (_dl_sched_class == NULL) {

printk(KERN_ERR "get_func_by_symbol_name _dl_sched_class failed!\n");

return -1;

}

_prq = get_func_by_symbol_name("runqueues");

if (_prq == NULL) {

printk(KERN_ERR "get_func_by_symbol_name runqueues failed!\n");

return -1;

}

init_testdiomonitor_sample_ringbuff();

init_write_file();

_stack_trace_save_tsk = get_func_by_symbol_name("stack_trace_save_tsk");

if (_stack_trace_save_tsk == NULL) {

printk(KERN_ERR "get_func_by_symbol_name stack_trace_save_tsk failed!\n");

return -1;

}

_get_cmdline_func = get_func_by_symbol_name("get_cmdline");

if (_get_cmdline_func == NULL) {

printk(KERN_ERR "get_func_by_symbol_name get_cmdline failed!\n");

return -1;

}

mykern_sched_switch.callback = cb_sched_switch;

for_each_kernel_tracepoint(sched_switch_tracepoint_check_and_set, &mykern_sched_switch);

if (!mykern_sched_switch.ptr) {

printk(KERN_ERR "mykern_sched_switch register failed!\n");

return -1;

}

else {

printk(KERN_INFO "mykern_sched_switch register succeeded!\n");

}

tracepoint_probe_register(mykern_sched_switch.ptr, mykern_sched_switch.callback, NULL);

mykern_sched_switch.bregister = 1;

mykern_sched_waking.callback = cb_sched_waking;

for_each_kernel_tracepoint(sched_waking_tracepoint_check_and_set, &mykern_sched_waking);

if (!mykern_sched_waking.ptr) {

printk(KERN_ERR "mykern_sched_waking register failed!\n");

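/* sched_switch is already registered: unhook it so no callback outlives the failed load. */

clear_kern_tracepoint(&mykern_sched_switch);

tracepoint_synchronize_unregister();

return -1;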

}

else {

printk(KERN_INFO "mykern_sched_waking register succeeded!\n");

}

tracepoint_probe_register(mykern_sched_waking.ptr, mykern_sched_waking.callback, NULL);

mykern_sched_waking.bregister = 1;

#ifdef IODELAY_TRACEPOINT_ENABLE

mykern_iodelay_account.callback = cb_iodelay_account;

for_each_kernel_tracepoint(iodelay_account_tracepoint_check_and_set, &mykern_iodelay_account);

if (!mykern_iodelay_account.ptr) {

printk(KERN_ERR "mykern_iodelay_account register failed!\n");

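/* sched_switch and sched_waking are already registered: unhook both before failing. */

clear_kern_tracepoint(&mykern_sched_switch);

clear_kern_tracepoint(&mykern_sched_waking);

tracepoint_synchronize_unregister();

return -1;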

}

else {

printk(KERN_INFO "mykern_iodelay_account register succeeded!\n");

}

tracepoint_probe_register(mykern_iodelay_account.ptr, mykern_iodelay_account.callback, NULL);

mykern_iodelay_account.bregister = 1;

#endif

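if (kprobe_register_func_folio_lock_killable() < 0) {

/* Without the kprobe no folio is ever recorded; undo everything, mirroring testdiomonitor_exit(). */

clear_kern_tracepoint(&mykern_sched_switch);

clear_kern_tracepoint(&mykern_sched_waking);

#ifdef IODELAY_TRACEPOINT_ENABLE

clear_kern_tracepoint(&mykern_iodelay_account);

#endif

tracepoint_synchronize_unregister();

exit_write_file();

exit_testdiomonitor_sample_ringbuff();

return -1;

}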

return 0;

}

static void __exit testdiomonitor_exit(void)

{

kprobe_unregister_func_folio_lock_killable();

clear_kern_tracepoint(&mykern_sched_switch);

clear_kern_tracepoint(&mykern_sched_waking);

#ifdef IODELAY_TRACEPOINT_ENABLE

clear_kern_tracepoint(&mykern_iodelay_account);

#endif

tracepoint_synchronize_unregister();

exit_write_file();

exit_testdiomonitor_sample_ringbuff();

}

module_init(testdiomonitor_init);

module_exit(testdiomonitor_exit);

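2.2 Source Code Analysis

2.2.1 Adding the filepath variable (the absolute path of the file behind the page fault) and the main logic

A filepath field is added to the sample information:

The print routine now prints filepath as well: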

![]()

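filepath is set when the sample type is TESTDIOMONITOR_SAMPLEDESC_DIOEXCEED:

The value is passed along by borrowing a few task_struct fields that only the deadline scheduler uses. These fields normally sit idle; they come into play only for tasks that are explicitly assigned to the deadline scheduler. More details and experiments on the deadline scheduler are in section 2.1.1 of the earlier post "Reporting 'timely' scheduling time-slice timeout events with a kernel module, without modifying the kernel image" (CSDN blog).

In this logic, the deadline scheduler's fields hold the address of the folio involved in the page fault (stored in dl.dl_period), plus an auxiliary magic number written to dl_runtime. The magic number confirms that it was our logic that modified these fields, and it is cleared once the folio pointer has been consumed. Naturally, we must first check whether the task's scheduling class is the deadline class, and leave the fields untouched if it is.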
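The stash-and-consume protocol described above can be modeled in plain userspace C. This is only a sketch under stated assumptions: struct fake_task, struct fake_dl_entity, STASH_MAGIC, stash_folio and take_folio are hypothetical names invented for illustration, and the real module operates on current->dl inside the kernel rather than on a stand-in structure.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the two sched_dl_entity fields the post borrows. */
struct fake_dl_entity {
    uint64_t dl_runtime; /* holds the magic number while a folio is stashed */
    uint64_t dl_period;  /* holds the folio address */
};

/* Hypothetical stand-in for task_struct. */
struct fake_task {
    int is_deadline_class;     /* models sched_class == &dl_sched_class */
    struct fake_dl_entity dl;
};

/* Hypothetical magic value; any value the scheduler would not write works. */
#define STASH_MAGIC 0x44494f4d4f4e0001ULL

/* Record the folio address. Refuses to touch a real deadline task. */
static int stash_folio(struct fake_task *t, const void *folio)
{
    assert(t != NULL);
    if (t->is_deadline_class)
        return -1; /* the fields are live scheduler state: hands off */
    t->dl.dl_period = (uint64_t)(uintptr_t)folio;
    t->dl.dl_runtime = STASH_MAGIC;
    return 0;
}

/* Fetch the folio address once, then clear the magic number. */
static const void *take_folio(struct fake_task *t)
{
    const void *folio;

    assert(t != NULL);
    if (t->is_deadline_class || t->dl.dl_runtime != STASH_MAGIC)
        return NULL; /* nothing was stashed by our logic */
    folio = (const void *)(uintptr_t)t->dl.dl_period;
    t->dl.dl_runtime = 0; /* consumed: a second take returns NULL */
    return folio;
}
```

Checking the magic number before reading dl_period is what keeps a stale or unrelated value from being treated as a folio pointer.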

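Section 2.2.2 below explains how the kernel module captures the folio pointer. Section 2.2.3 then walks through the get_file_dir_by_folio function shown in the figure above and how it maps the folio pointer to the file's absolute path.

2.2.2 Capturing the folio pointer from the kernel module

We use a kprobe to intercept folio_wait_bit_common just before it executes and read the arguments passed in:

The first parameter of folio_wait_bit_common is the folio pointer:

Why probe folio_wait_bit_common rather than __folio_lock_killable, which section 3.2 of the earlier post "Adding wake-up stack printing for IO-wait states and analyzing iowait caused by page faults" (CSDN blog) showed is always reached on this path? Although __folio_lock_killable is exported as a symbol, it is not always invoked through a real function call: in the filemap_fault call chain we captured, it is inlined. So the kprobe has to go on folio_wait_bit_common, the function that actually shows up in the stack.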

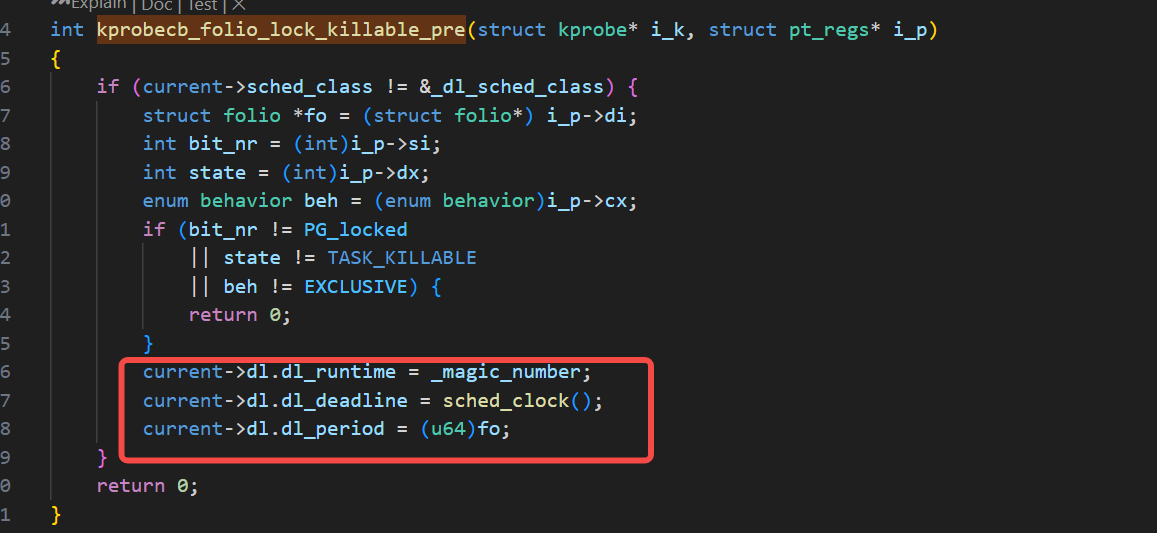

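In the kprobe's pre_handler callback, if the current task is not in the deadline scheduling class, we run the logic that records the folio pointer. Before recording, some checks are made on the four arguments passed to folio_wait_bit_common, which are obtained as shown below:

Based on these four arguments, we filter for the __folio_lock_killable case that filemap_fault must go through, as shown below:

If the check passes, the recording step runs:

The folio pointer is recorded in dl.dl_period, and a magic number is written as well: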
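As a rough illustration of the argument decoding and filtering steps, here is a userspace sketch. It assumes x86-64, where the first four integer arguments arrive in rdi, rsi, rdx and rcx, and it assumes that __folio_lock_killable passes PG_locked, TASK_KILLABLE and EXCLUSIVE to folio_wait_bit_common. The numeric values below mirror recent kernel sources but should be verified against your own tree. struct fake_regs, struct wait_args, decode_args and is_folio_lock_killable are hypothetical names, not the module's actual code.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical subset of x86-64 pt_regs: the registers that carry the
 * first four integer arguments under the System V calling convention. */
struct fake_regs {
    unsigned long di, si, dx, cx;
};

/* Assumed values; check them against the kernel you are building for. */
#define SKETCH_PG_LOCKED     0      /* first entry of enum pageflags */
#define SKETCH_TASK_KILLABLE 0x0102 /* TASK_WAKEKILL | TASK_UNINTERRUPTIBLE */
#define SKETCH_EXCLUSIVE     0      /* first entry of enum behavior */

/* Decoded view of folio_wait_bit_common(folio, bit_nr, state, behavior). */
struct wait_args {
    const void *folio;
    int bit_nr;
    int state;
    int behavior;
};

/* Pull the four arguments out of the register snapshot. */
static struct wait_args decode_args(const struct fake_regs *regs)
{
    struct wait_args a;

    assert(regs != 0);
    a.folio = (const void *)(uintptr_t)regs->di;
    a.bit_nr = (int)regs->si;
    a.state = (int)regs->dx;
    a.behavior = (int)regs->cx;
    return a;
}

/* True only for the argument pattern of the killable folio-lock path. */
static int is_folio_lock_killable(const struct wait_args *a)
{
    assert(a != 0);
    return a->bit_nr == SKETCH_PG_LOCKED &&
           a->state == SKETCH_TASK_KILLABLE &&
           a->behavior == SKETCH_EXCLUSIVE;
}
```

Filtering on all three values matters because other callers reach the same function with different arguments, for example an uninterruptible lock or a shared wait.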

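2.2.3 Finding the file's absolute path from the folio pointer

In the code, the get_file_dir_by_folio function shown below implements "finding the file's absolute path from the folio pointer":

It calls getfullpath, shown below:

The two key pieces of logic boxed in the figure above are the hlist_for_each_entry traversal and the call to dentry_path_raw, which returns the dentry's full path within the super_block it belongs to.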

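It is worth explaining the concepts of file, fd, inode, dentry, and super_block here:

file and fd are both tightly bound to a process;

inode is the concept of a file on disk and is independent of any particular process;

dentry is what the kernel uses to manage the file path structure. One dentry records one path component (one directory level), and chaining dentries together yields a complete path within a super_block;

super_block comes into being when a file system is mounted: each mounted file system instance has a super_block. super_blocks are layered as well: a dentry belongs to one super_block, and the root dentry of that super_block may in turn sit under another super_block.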
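One consequence of this layering is that a path resolved within a super_block is relative to that file system's root, so for a file on a non-root mount the mount point would still have to be prepended to obtain the path a user sees. The sketch below only illustrates that string relationship. join_mount_path is a hypothetical helper, and the module in this post does not perform this step.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Join a mount point with a path that is relative to the file system root.
 * Returns 0 on success, -1 if the result does not fit in out. */
static int join_mount_path(const char *mnt, const char *rel,
                           char *out, size_t outlen)
{
    int n;

    assert(mnt != NULL && rel != NULL && out != NULL);
    /* The root mount adds nothing: rel already begins with '/'. */
    if (strcmp(mnt, "/") == 0)
        mnt = "";
    n = snprintf(out, outlen, "%s%s", mnt, rel);
    return (n < 0 || (size_t)n >= outlen) ? -1 : 0;
}
```

For a file system mounted at a hypothetical /data, the relative path /logs/a.txt would be joined into /data/logs/a.txt, while a path on the root file system is returned unchanged.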

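The hlist_for_each_entry traversal in the figure above is needed because one inode may correspond to several dentries in the file system (hard links, for example), so they have to be walked. The code shown is fairly rough: its purpose is to connect and demonstrate the concepts and logic, not to be a rigorous implementation. A rigorous implementation would need some further rework.

dentry_path_raw is implemented as follows:

The __dentry_path function that dentry_path_raw calls is implemented as follows:

The implementation above clearly shows that it walks upward through the parent dentries.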
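The parent walk can be modeled in userspace to make the idea concrete. This is a simplified sketch, not the kernel's implementation: struct fake_dentry and fake_dentry_path are hypothetical names, locking and rename races are ignored, and NULL is returned where the kernel would return an error pointer. Like the kernel, it treats a dentry whose parent is itself as the root and fills the buffer from the end backward.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, stripped-down dentry: one path component plus its parent.
 * The root dentry points to itself, as in the kernel. */
struct fake_dentry {
    const char *name;
    const struct fake_dentry *parent;
};

/* Build the path by walking toward the root and prepending each component.
 * Returns a pointer inside buf, or NULL if buf is too small. */
static char *fake_dentry_path(const struct fake_dentry *d,
                              char *buf, size_t buflen)
{
    char *p;

    assert(d != NULL && buf != NULL);
    if (buflen < 2)
        return NULL;
    p = buf + buflen;
    *--p = '\0'; /* the string is assembled right to left */

    if (d->parent == d) { /* the root itself is just "/" */
        *--p = '/';
        return p;
    }
    while (d->parent != d) {
        size_t n = strlen(d->name);

        if ((size_t)(p - buf) < n + 1)
            return NULL; /* no room for "/name" */
        p -= n;
        memcpy(p, d->name, n);
        *--p = '/';
        d = d->parent; /* step up one directory level */
    }
    return p;
}
```

Writing from the end of the buffer means each component is copied exactly once, with no need to know the total path length in advance.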

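3. Results

Below are the results. As described in chapter 3 of the post "Adding wake-up stack printing for IO-wait states and analyzing iowait caused by page faults" (CSDN blog), the file being read from disk on a page fault may be a data file, the program binary itself, a shared library (.so), and so on.

The figure below shows a filemap_fault on the program binary:

The figure below shows the shared library (.so) case:

The figure below shows the case of reading a file: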