声明:该爬虫只可用于提高自己学习、工作效率,请勿用于非法用途,否则后果自负

功能概述:

- 根据待爬文章url(文章id)批量保存文章到本地;

- 支持将文中图片下载到本地指定文件夹;

- 多线程爬取;

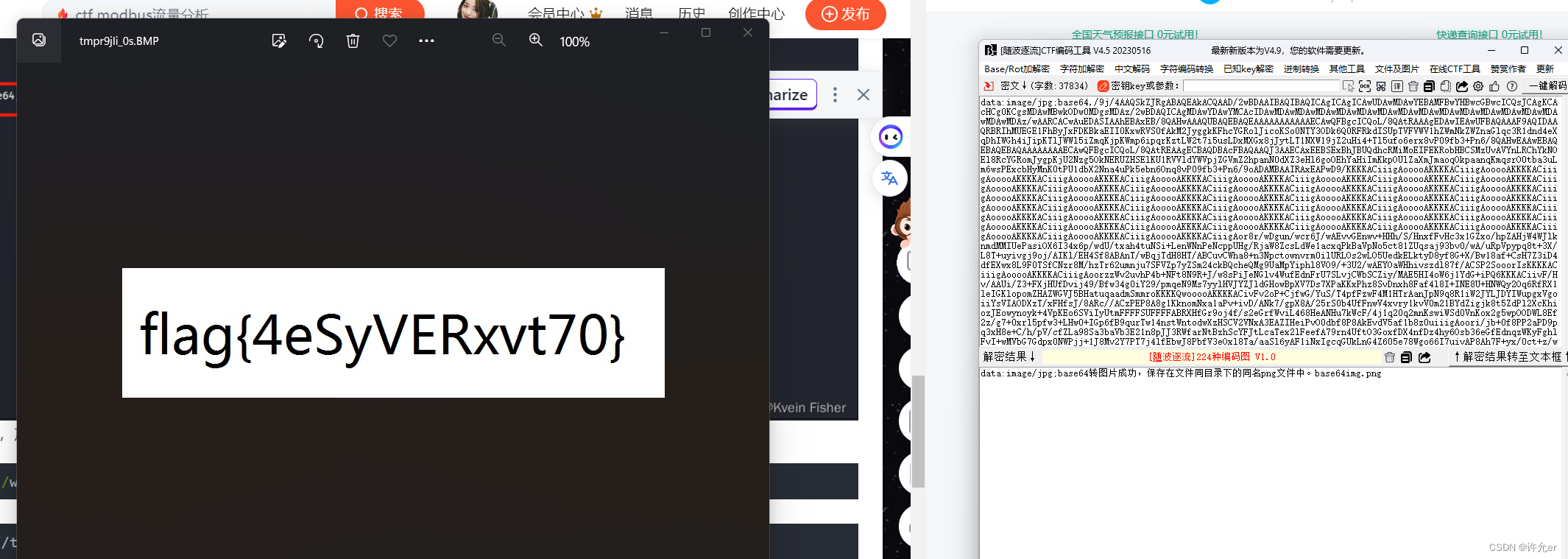

1.爬取效果展示

本次示例爬取的链接地址:

https://blog.csdn.net/m0_68111267/article/details/132574687

原文效果:

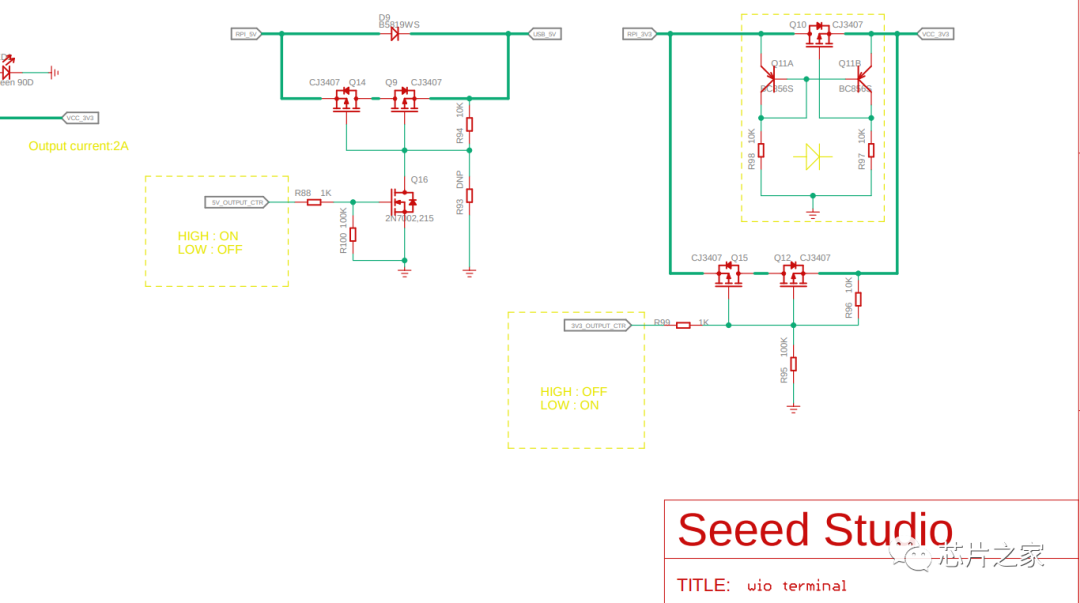

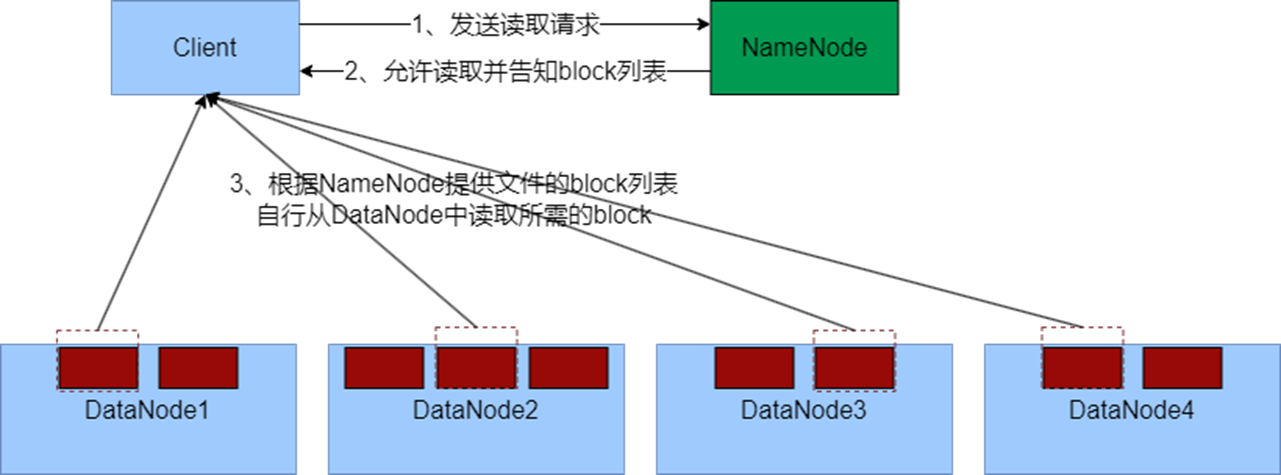

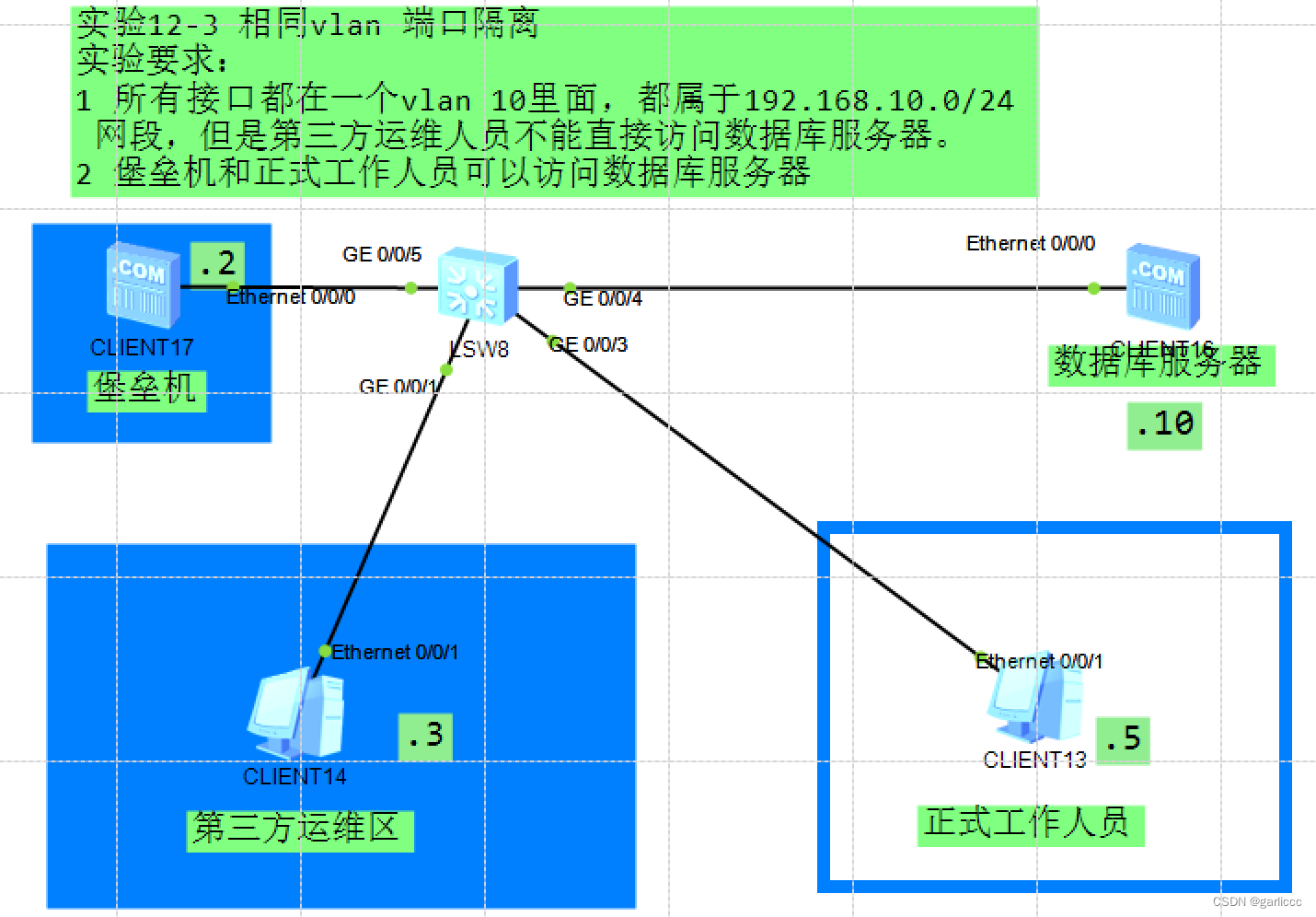

爬取效果:

文件列表:

2.编写代码

爬虫使用scrapy框架编写,分布式、多线程

2.1编写Items

class ArticleItem(scrapy.Item):

id = scrapy.Field() # ID

title = scrapy.Field()

html = scrapy.Field() # html

class ImgDownloadItem(scrapy.Item):

img_src = scrapy.Field()

img_name = scrapy.Field()

image_urls = scrapy.Field()

class LinkIdsItem(scrapy.Item):

id = scrapy.Field()

2.2添加管道

class ArticlePipeline():

def open_spider(self, spider):

if spider.name == 'csdnSpider':

data_dir = os.path.join(settings.DATA_URI)

#判断文件夹存放的位置是否存在,不存在则新建文件夹

if not os.path.exists(data_dir):

os.makedirs(data_dir)

self.data_dir = data_dir

def close_spider(self, spider): # 在关闭一个spider的时候自动运行

pass

# if spider.name == 'csdnSpider':

# self.file.close()

def process_item(self, item, spider):

try:

if spider.name == 'csdnSpider' and item['key'] == 'article':

info = item['info']

id = info['id']

title = info['title']

html = info['html']

f = open(self.data_dir + '/{}.html'.format(title),

'w',

encoding="utf-8")

f.write(html)

f.close()

except BaseException as e:

print("Article错误在这里>>>>>>>>>>>>>", e, "<<<<<<<<<<<<<错误在这里")

return item

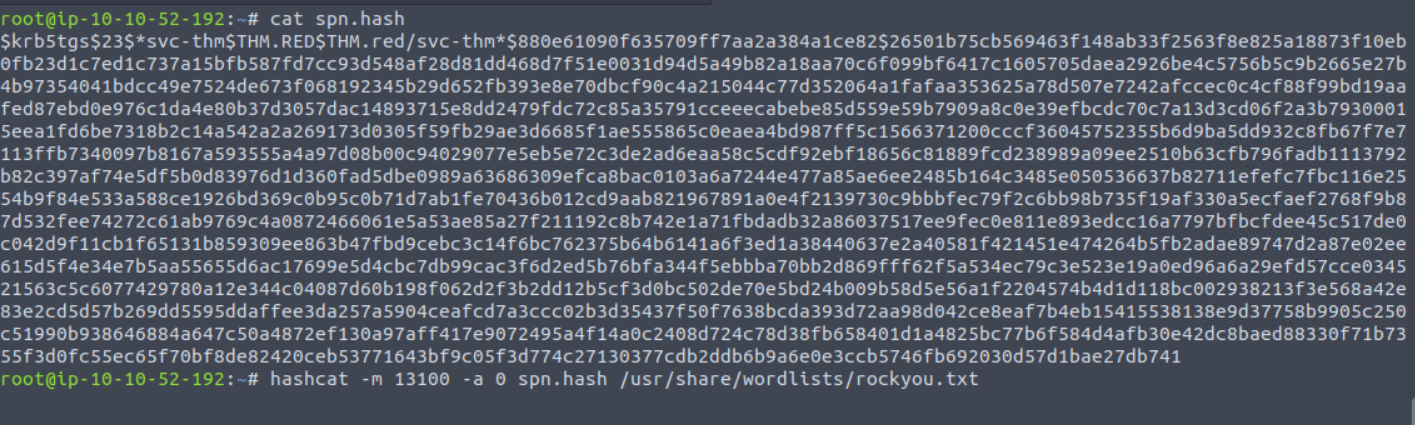

2.3添加配置

2.4添加解析器

...

def parse(self, response):

html = response.body

a_id = response.meta['a_id']

soup = BeautifulSoup(html, 'html.parser')

[element.extract() for element in soup('script')]

[element.extract() for element in soup.select("head style")]

[element.extract() for element in soup.select("html > link")]

# 删除style中包含隐藏的标签

[

element.extract() for element in soup.find_all(

style=re.compile(r'.*display:none.*?'))

]

...

3.获取完整源码

项目说明文档

爱学习的小伙伴,本次案例的完整源码,已上传微信公众号“一个努力奔跑的snail”,后台回复“csdn”即可获取。

源码地址:

https://pan.baidu.com/s/1uLBoygwQGTSCAjlwm13mog?pwd=****

提取码: ****

![[杂谈]-快速了解直接内存访问 (DMA)](https://img-blog.csdnimg.cn/c35f0532cc6942a0b3a4b48e2338633f.webp#pic_center)