ReplicaSet and Deployment

Preface

The original article reads better on Yuque: 《198 ReplicaSet和Deployment》 · 语雀

1、ReplicaSet

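Suppose a single Pod is serving production traffic. Consider two situations we might run into:

- A marketing campaign is a big success and site traffic suddenly spikes

- The node running the Pod fails, so the Pod can no longer serve requests

The first case is fairly manageable: before the campaign we can estimate the expected traffic, start a few extra Pod replicas ahead of time, and kill the surplus Pods once the campaign ends. It is tedious, but it works.

In the second case, we might get flooded with alerts in the middle of the night saying the service is down, get up, open a laptop, and start a new Pod on another node. That solves the problem too.

But if we handle all of this by hand, we are back in the slash-and-burn era. It would be much better to have a tool that manages Pods for us automatically: when a Pod dies, it starts a replacement on a suitable node, and neither situation above needs manual intervention.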

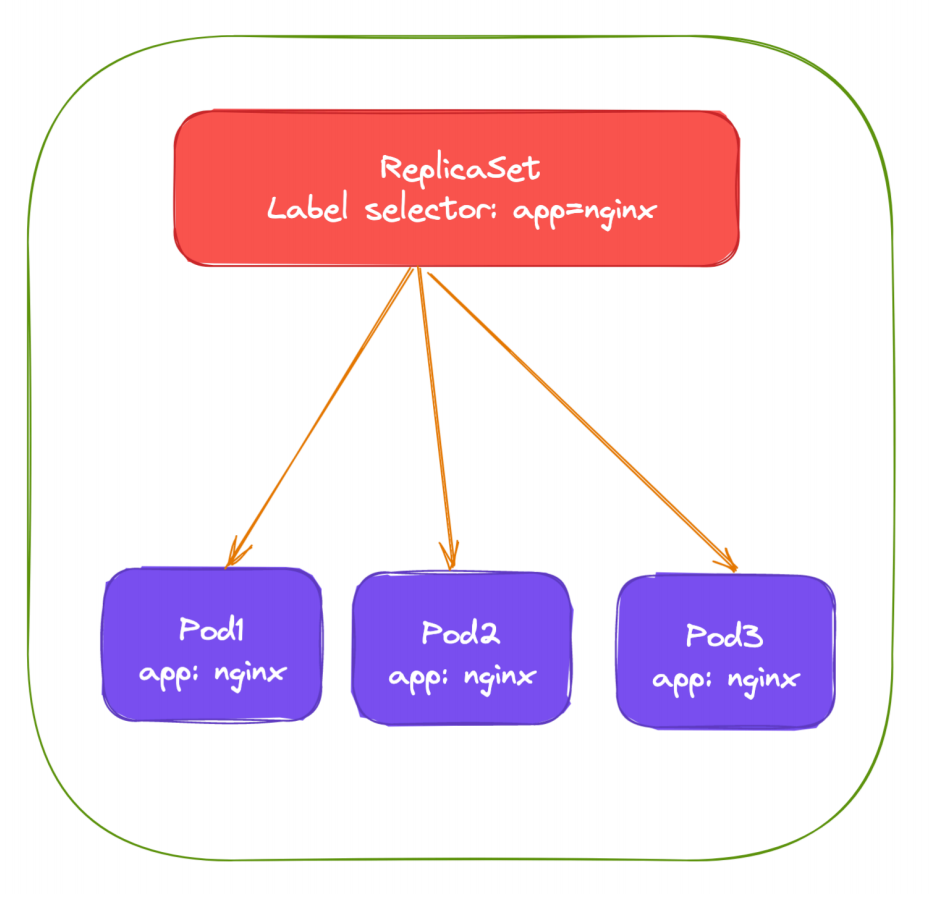

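This is exactly what the ReplicaSet resource object does. The main job of a ReplicaSet (RS) is to keep a set of Pod replicas running, guaranteeing that a given number of Pods are running in the cluster. The ReplicaSet controller continuously watches the state of the Pods it controls. When a Pod fails and the count drops, or when the count rises above the desired value, it triggers reconciliation so that the number of replicas always matches the desired number.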
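The reconciliation idea can be sketched as a toy model in Python. This is only an illustration under simplifying assumptions, not the real controller: the class `ReplicaSetModel`, its `reconcile` method, and the numeric Pod name suffixes are hypothetical names invented for this sketch.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ReplicaSetModel:
    """Toy stand-in for a ReplicaSet: a desired count plus the Pods it owns."""
    name: str
    replicas: int
    pods: list = field(default_factory=list)
    _ids: count = field(default_factory=count, repr=False)

    def reconcile(self):
        """Create or delete Pods until the actual count equals the desired count."""
        actions = []
        while len(self.pods) < self.replicas:
            pod = f"{self.name}-{next(self._ids)}"
            self.pods.append(pod)
            actions.append(("create", pod))
        while len(self.pods) > self.replicas:
            actions.append(("delete", self.pods.pop()))
        return actions


rs = ReplicaSetModel(name="nginx-rs", replicas=3)
print(rs.reconcile())            # three "create" actions
rs.pods.remove("nginx-rs-1")     # simulate a Pod failure
print(rs.reconcile())            # one "create" to get back to 3
rs.replicas = 2                  # lower the desired count
print(rs.reconcile())            # one "delete"
```

The model only compares the actual count with the desired count and acts on the difference, which is the core of the declarative approach used throughout the lab that follows.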

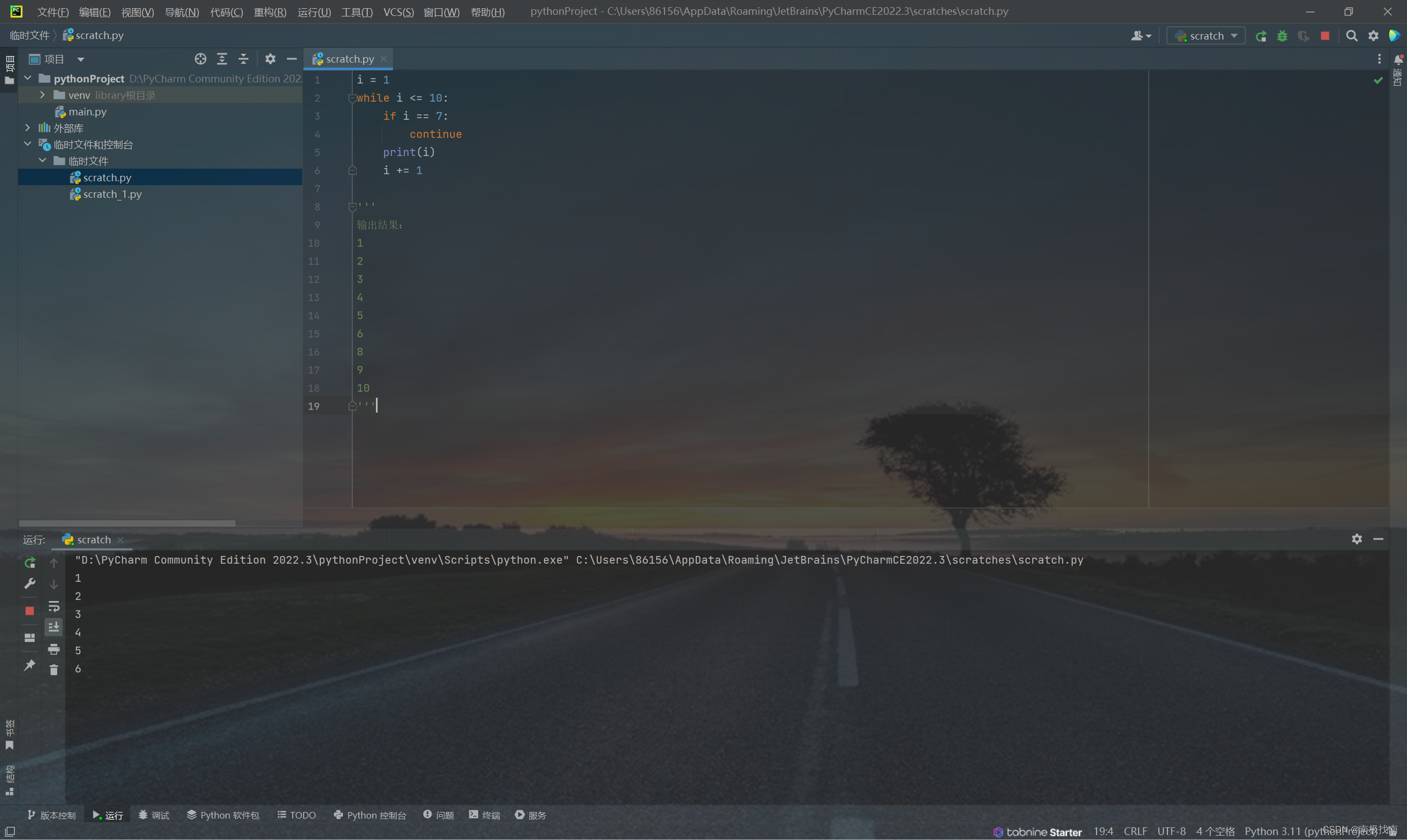

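💘 Hands-on: ReplicaSet test, 2022.12.14 (tested successfully)

- Lab environment

1. Windows 10 with VMware Workstation virtual machines
2. Kubernetes cluster: 3 CentOS 7.6 (1810) virtual machines, 2 master nodes and 1 worker node; k8s version: v1.20; container runtime: containerd v1.6.10

- Lab software (none)

- Write the resource manifest

As with Pods, we describe a ReplicaSet resource object in a YAML file. The following is a typical ReplicaSet definition: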

    # nginx-rs.yaml
    apiVersion: apps/v1
    kind: ReplicaSet
    metadata:
      name: nginx-rs
      namespace: default
    spec:
      replicas: 3 # desired number of Pod replicas, defaults to 1
      selector: # Label Selector, must match the labels in the Pod template
        matchLabels:
          app: nginx
      template: # Pod template
        metadata: # Pod names are generated automatically from the RS name
          labels:
            app: nginx # must include the labels listed under matchLabels above
        spec:
          containers:
          - name: nginx
            image: nginx:latest # avoid the latest tag in production
            ports:
            - containerPort: 80

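The structure of this YAML file looks much like the Pod definitions we wrote earlier, with the usual apiVersion, kind, and metadata. The spec describes the ReplicaSet itself and contains 3 important fields:

- replicas: the desired number of Pod replicas

- selector: the Label Selector used to match the Pods to control; it must agree with the labels in the Pod template below

- template: the Pod template, which is just the Pod definition we wrote before, embedded in the ReplicaSet as a template.

The Pod template is an important concept, because most of the controllers covered later use a Pod template to define the Pods they manage. We will also meet templates for other kinds of objects, such as Volume templates.

That is an ordinary ReplicaSet manifest. The ReplicaSet controller uses the Label Selector defined in it to find Pod objects in the cluster: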

- Create the resource object above:

    [root@master1 ~]#kubectl apply -f nginx-rs.yaml
    replicaset.apps/nginx-rs created
    [root@master1 ~]#kubectl get rs
    NAME       DESIRED   CURRENT   READY   AGE
    nginx-rs   3         3         3       46s

Listing the RS shows its current state, including the DESIRED, CURRENT, and READY values.

- Once it is created, list the Pods with the following command:

    [root@master1 ~]#kubectl get po -l app=nginx
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-rs-7btxg   1/1     Running   0          82s
    nginx-rs-gfhzx   1/1     Running   0          82s
    nginx-rs-v8rrb   1/1     Running   0          82s

- There are now 3 Pods, the 3 replicas declared in the RS. Delete one of them:

    [root@master1 ~]#kubectl delete pod nginx-rs-v8rrb
    pod "nginx-rs-v8rrb" deleted

Then list the Pods again:

    [root@master1 ~]#kubectl get po -l app=nginx
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-rs-7btxg   1/1     Running   0          2m28s
    nginx-rs-gfhzx   1/1     Running   0          2m28s
    nginx-rs-xxplf   1/1     Running   0          28s

A new Pod has appeared. This is the work of the ReplicaSet controller described above: the YAML file declares 3 replicas, and deleting one left only 2. The controller noticed that the number of Pods it controls no longer matched the desired 3, so it started a new Pod to restore 3 replicas. This is the reconciliation process mentioned earlier.

- The RS description also shows the related events:

[root@master1 ~]#kubectl describe rs nginx-rs

Name: nginx-rs

Namespace: default

Selector: app=nginx

Labels: <none>

Annotations: <none>

Replicas: 3 current / 3 desired

Pods Status: 3 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 3m31s replicaset-controller Created pod: nginx-rs-v8rrb

Normal SuccessfulCreate 3m31s replicaset-controller Created pod: nginx-rs-7btxg

Normal SuccessfulCreate 3m31s replicaset-controller Created pod: nginx-rs-gfhzx

Normal SuccessfulCreate 91s replicaset-controller Created pod: nginx-rs-xxplf

[root@master1 ~]#

The ReplicaSet controller created 3 Pods at first, and after we deleted one it created another, which matches the description above.

- Now change the number of Pod replicas in the RS resource to 2 (spec.replicas=2) and update the resource object:

    [root@master1 ~]#vim nginx-rs.yaml
    ……
    spec:
      replicas: 2 # change the number of Pod replicas to 2
      selector:
        matchLabels:
          app: nginx
    ……

[root@master1 ~]#kubectl apply -f nginx-rs.yaml

replicaset.apps/nginx-rs configured

[root@master1 ~]#

[root@master1 ~]#kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-rs 2 2 2 5m38s

[root@master1 ~]#kubectl describe rs nginx-rs

Name: nginx-rs

Namespace: default

Selector: app=nginx

Labels: <none>

Annotations: <none>

Replicas: 2 current / 2 desired

Pods Status: 2 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 5m43s replicaset-controller Created pod: nginx-rs-v8rrb

Normal SuccessfulCreate 5m43s replicaset-controller Created pod: nginx-rs-7btxg

Normal SuccessfulCreate 5m43s replicaset-controller Created pod: nginx-rs-gfhzx

Normal SuccessfulCreate 3m43s replicaset-controller Created pod: nginx-rs-xxplf

Normal SuccessfulDelete 19s replicaset-controller Deleted pod: nginx-rs-xxplf

[root@master1 ~]#

Once the ReplicaSet controller saw that the declared replica count had changed to 2, it deleted one Pod so that the actual count again matches the desired count:

    [root@master1 ~]#kubectl get po -l app=nginx
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-rs-7btxg   1/1     Running   0          6m12s
    nginx-rs-gfhzx   1/1     Running   0          6m12s

- Describing any one of the Pods shows which controller owns it:

    [root@master1 ~]#kubectl get po -l app=nginx
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-rs-7btxg   1/1     Running   0          6m50s
    nginx-rs-gfhzx   1/1     Running   0          6m50s
    [root@master1 ~]#kubectl describe pod nginx-rs-gfhzx
    Name:         nginx-rs-gfhzx
    Namespace:    default
    Priority:     0
    Node:         node2/172.29.9.53
    Start Time:   Mon, 08 Nov 2021 21:30:03 +0800
    Labels:       app=nginx
    Annotations:  <none>
    Status:       Running
    IP:           10.244.2.13
    IPs:
      IP:           10.244.2.13
    Controlled By:  ReplicaSet/nginx-rs # this Pod is controlled by ReplicaSet/nginx-rs
    ……
    [root@master1 ~]#

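- In addition, every Pod owned by a ReplicaSet has a metadata.ownerReferences pointer to that ReplicaSet, marking it as the Pod's owner. The cluster's garbage collector mainly uses this reference to clean up Pod objects that have lost their owner. ownerReferences is much like a foreign key in a database. Export the Pod's resource description to see it: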

[root@master1 ~]#kubectl get po -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-rs-7btxg 1/1 Running 0 8m48s

nginx-rs-gfhzx 1/1 Running 0 8m48s

[root@master1 ~]#kubectl edit pod nginx-rs-gfhzx

or

[root@master1 ~]#kubectl get po nginx-rs-gfhzx -oyaml

      ownerReferences:
      - apiVersion: apps/v1
        blockOwnerDeletion: true
        controller: true
        kind: ReplicaSet
        name: nginx-rs
        uid: e1b9d63b-6be5-4a9f-b565-1d8eea92c727

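The Pod has a metadata.ownerReferences field pointing to the ReplicaSet resource object. To remove the Pods for good, delete the RS object (running kubectl delete -f nginx-rs.yaml has the same effect) or set its replica count to 0:

    [root@master1 ~]#kubectl delete rs nginx-rs
    replicaset.apps "nginx-rs" deleted
    [root@master1 ~]#kubectl get rs,pod
    No resources found in default namespace.
    [root@master1 ~]#

That covers the basic use of ReplicaSet objects.

- Note: changing the image version in nginx-rs.yaml and applying it does not change the image of the Pods that are already running. In other words, an RS does not support updating the application.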

# Check the image version the application is currently running

[root@master1 ~]#kubectl get po -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-rs-dfkqr 1/1 Running 0 38s

nginx-rs-ml7gc 1/1 Running 0 38s

nginx-rs-p2p6q 1/1 Running 0 38s

[root@master1 ~]#kubectl get po nginx-rs-dfkqr -oyaml

……

spec:

containers:

- image: nginx:latest

……

[root@master1 ~]#kubectl get rs nginx-rs -oyaml

……

spec:

containers:

- image: nginx:latest

……

    #Perform an application update
    [root@master1 ~]#vim nginx-rs.yaml
    ……
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9 # changed to 1.7.9
    ……

Then run apply:

[root@master1 ~]#kubectl apply -f nginx-rs.yaml

replicaset.apps/nginx-rs configured

#Observe the result

#The Pods were not recreated, and their nginx image version did not change

[root@master1 ~]#kubectl get po -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-rs-dfkqr 1/1 Running 0 4m55s

nginx-rs-ml7gc 1/1 Running 0 4m55s

nginx-rs-p2p6q 1/1 Running 0 4m55s

[root@master1 ~]#kubectl get po nginx-rs-dfkqr -oyaml

……

spec:

containers:

- image: nginx:latest

……

[root@master1 ~]#kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-rs 3 3 3 5m52s

#The image version in the Pod template of the exported RS manifest has changed.

[root@master1 ~]#kubectl get rs -oyaml

……

spec:

containers:

- image: nginx:1.7.9

……

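That completes the lab. 😘

Replication Controller (for reference only)

Replication Controller, RC for short, has almost the same functionality as RS; RS is an improvement on RC. The only difference today is that RC supports only equality-based selectors (env=dev or environment!=qa), while RS also supports set-based selectors (version in (v1.0, v2.0)), which is convenient for complex operations.

For example, if we used an RC for the resource above, the selector would look like this:

    selector:
      app: nginx

RC supports only equality matching on labels, while the Label Selector in RS supports two forms, matchLabels and matchExpressions: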

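    selector:
      matchLabels:
        app: nginx
    ---
    selector:
      matchExpressions: # this selector requires the Pod to carry a label named app
      - key: app
        operator: In
        values: # and the label value must be nginx
        - nginx

In short, RS is the next-generation RC, so from now on we use RS instead of RC; their functionality is the same. In practice we do not use RS directly either, but a higher-level resource object such as Deployment. Note that matchLabels is the more commonly used form.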
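To make the two selector forms concrete, here is a minimal Python sketch of how a selector could be evaluated against a Pod's labels. The function name `matches` and the dictionary layout are assumptions made for this illustration; the operators In, NotIn, Exists, and DoesNotExist are the ones Kubernetes label selectors define.

```python
def matches(selector, labels):
    """Return True if the labels satisfy a matchLabels/matchExpressions selector."""
    # matchLabels: every listed key must be present with exactly that value.
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    # matchExpressions: every expression must hold (they are ANDed together).
    for expr in selector.get("matchExpressions", []):
        key, op, values = expr["key"], expr["operator"], expr.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and labels.get(key) in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True


by_labels = {"matchLabels": {"app": "nginx"}}
by_expr = {"matchExpressions": [{"key": "app", "operator": "In", "values": ["nginx"]}]}

print(matches(by_labels, {"app": "nginx"}))   # True
print(matches(by_expr, {"app": "nginx"}))     # True
print(matches(by_expr, {"app": "redis"}))     # False
```

For a single label, the two forms select the same Pods, which is why matchLabels is usually enough.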

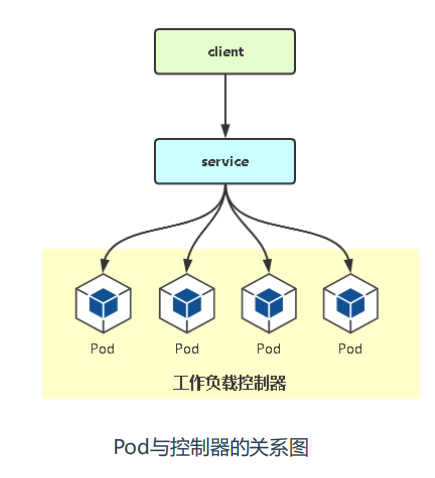

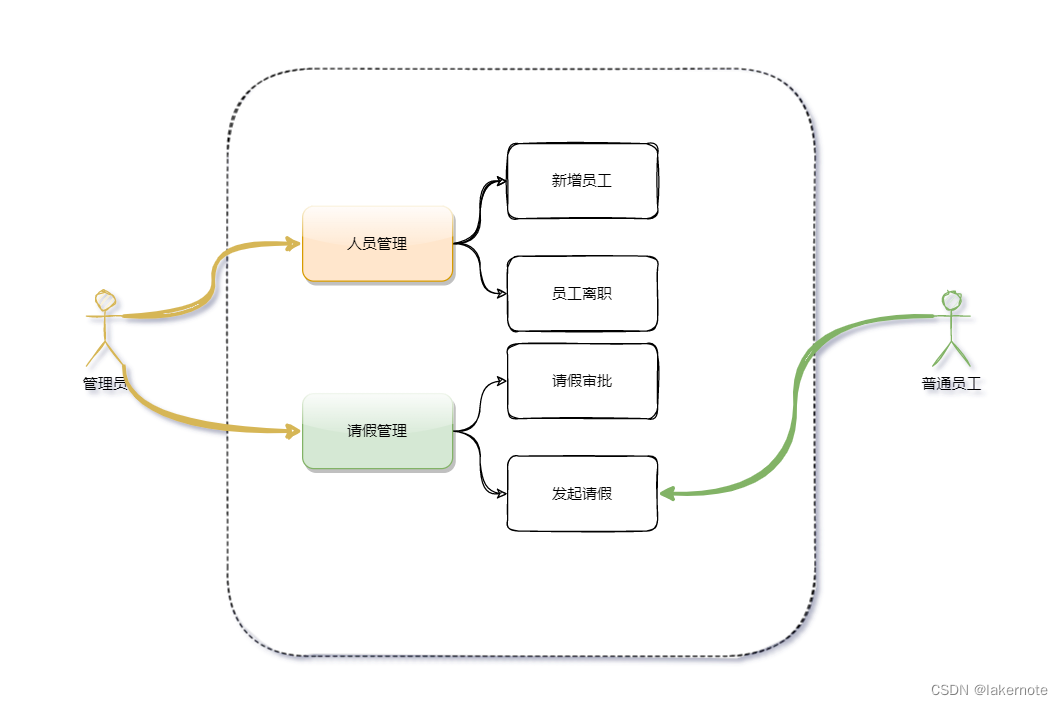

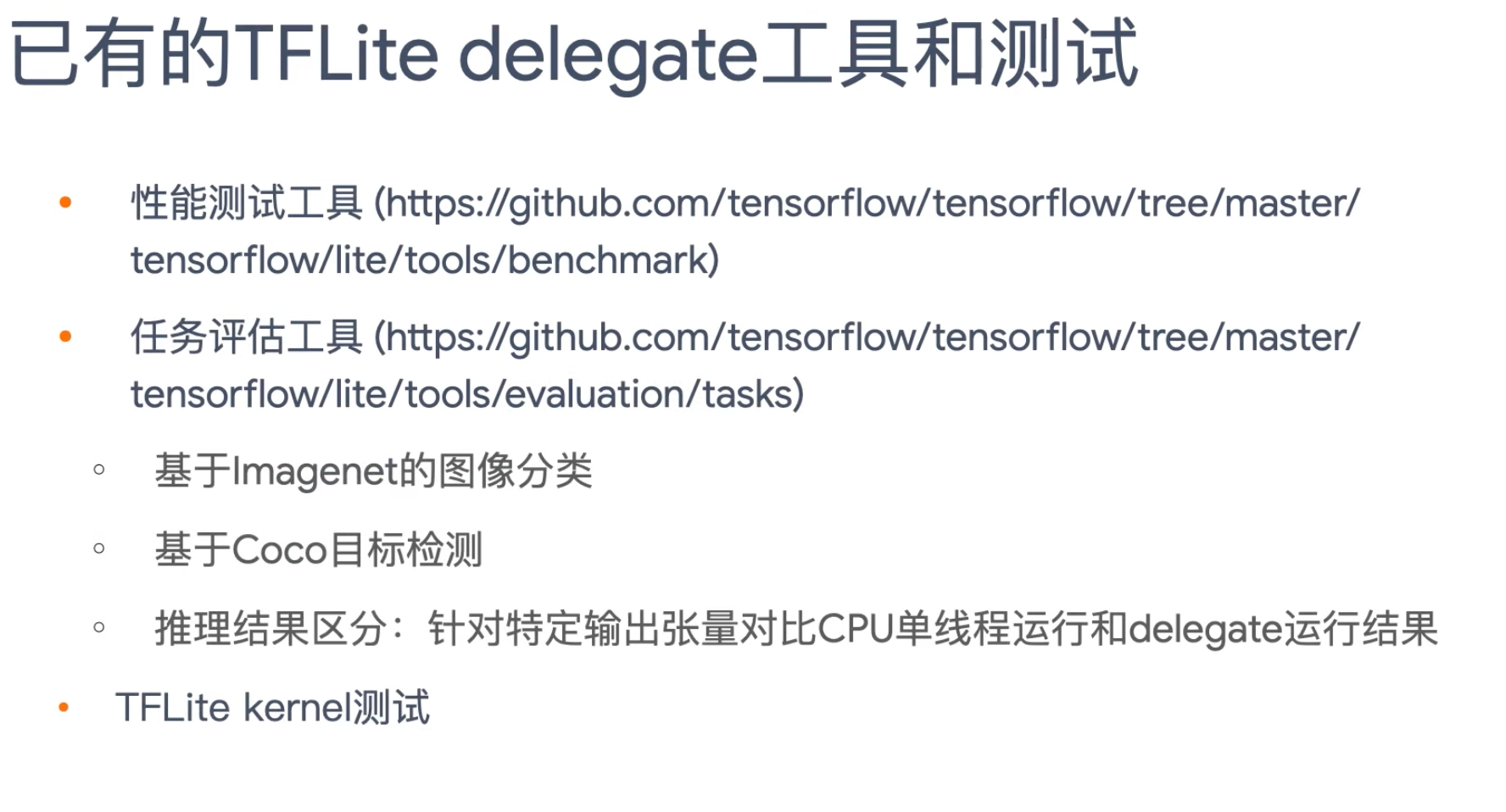

2、Deployment

前面我们学习了 ReplicaSet 控制器,了解到该控制器是用来维护集群中运行的 Pod 数量的,但是往往在实际操作的时候,我们反而不会去直接使用 RS,而是会使用更上层的控制器,比如我们今天要学习的主角 Deployment。Deployment 一个非常重要的功能就是实现了 Pod 的滚动更新,比如我们应用要更新,我们只需要更新我们的容器镜像,然后修改 Deployment 里面的 Pod 模板镜像,那么 Deployment 就会用滚动更新(Rolling Update)的方式来升级现在的 Pod,这个能力是非常重要的。因为对于线上的服务我们需要做到不中断服务,所以滚动更新就成了必须的一个功能。而 Deployment 这个能力的实现,依赖的就是上节课我们学习的ReplicaSet 这个资源对象,实际上我们可以通俗的理解就是每个Deployment 就对应集群中的一次部署,这样就更好理解了。

Deployment是最常用的K8s工作负载控制器(Workload Controllers),是K8s的一个抽象概念,用于更高级层次对象,部署和管理Pod,其他控制器还有DaemonSet、StatefulSet等。

Deployment的主要功能:

- 管理Pod和ReplicaSet

- 具有上线部署、副本设定、滚动升级、回滚等功能

- 提供声明式更新,例如只更新一个新的Image

应用场景:网站、API、微服务

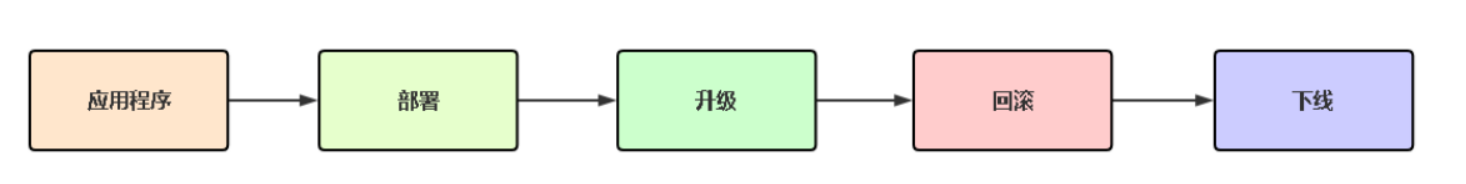

deployment应用生命周期管理流程:

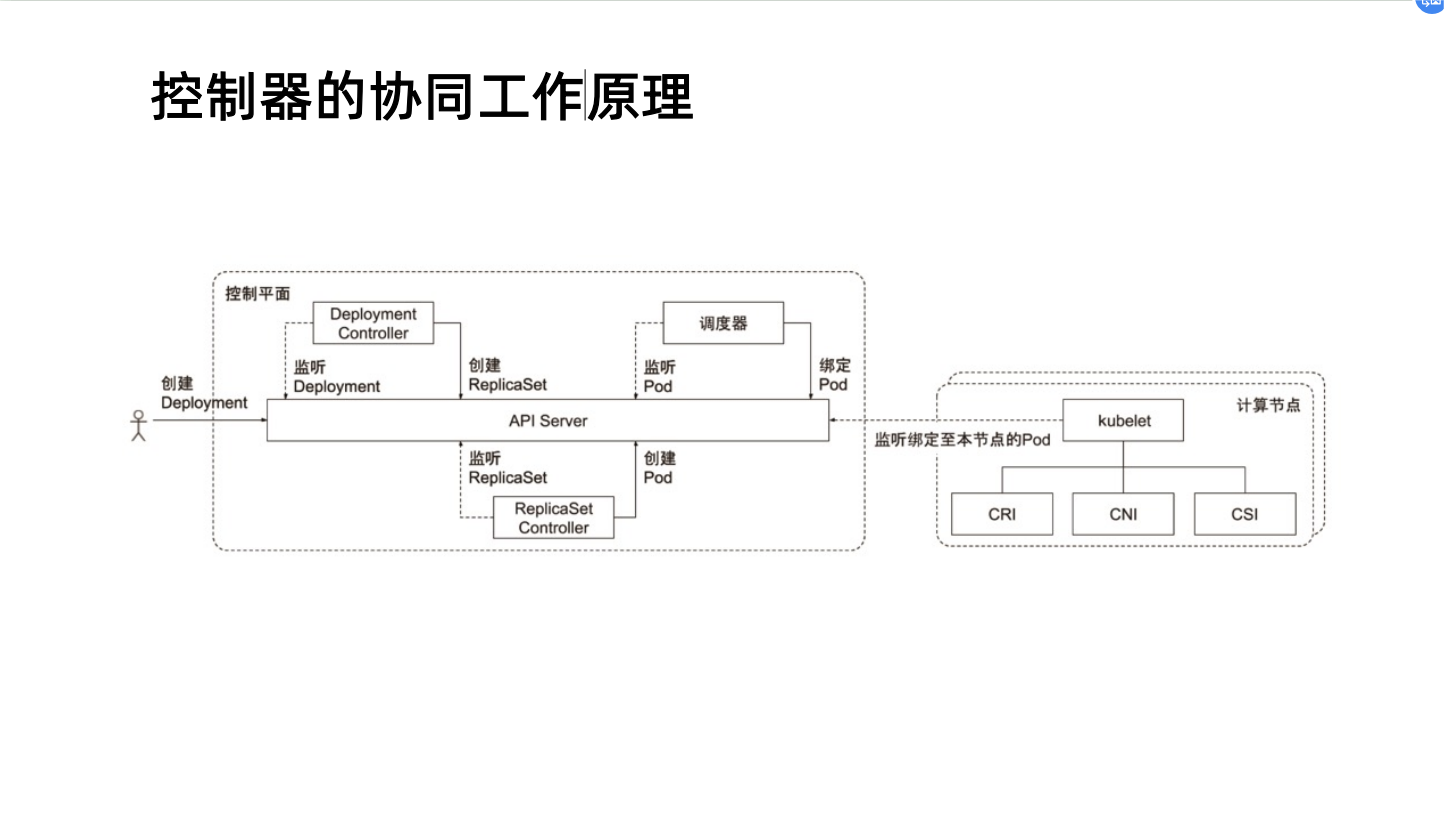

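How the controllers work together

💘 Hands-on: Deployment horizontal scaling and rolling update test, 2022.12.15 (tested successfully)

- Lab environment

1. Windows 10 with VMware Workstation virtual machines
2. Kubernetes cluster: 3 CentOS 7.6 (1810) virtual machines, 2 master nodes and 1 worker node; k8s version: v1.20; container runtime: containerd v1.6.10

- Lab software (none)

- The format of a Deployment resource is almost identical to that of a ReplicaSet. The following is a typical Deployment manifest: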

    # nginx-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
      namespace: default
      labels: # this label only tags the Deployment object itself; it is optional, since no higher-level controller selects a Deployment
        role: deploy
    spec:
      replicas: 3 # desired number of Pod replicas, defaults to 1
      selector: # Label Selector, must match the labels in the Pod template
        matchLabels: # matchExpressions can be used here as well
          app: nginx
      template: # Pod template
        metadata:
          labels:
            app: nginx # must include the labels listed under matchLabels above
        spec:
          containers:
          - name: nginx
            image: nginx:latest # avoid the latest tag in production
            ports:
            - containerPort: 80

- The only change is the kind, which is now Deployment. Create the resource object:

    [root@master1 ~]#kubectl apply -f nginx-deploy.yaml
    deployment.apps/nginx-deploy created
    [root@master1 ~]#kubectl get deployments.apps
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deploy   3/3     3            3           23s

- After creation, check the Pod status:

    [root@master1 ~]#kubectl get po
    NAME                           READY   STATUS    RESTARTS   AGE
    nginx-deploy-cd55c47f5-gwrb5   1/1     Running   0          48s
    nginx-deploy-cd55c47f5-h6spx   1/1     Running   0          48s
    nginx-deploy-cd55c47f5-x5mgl   1/1     Running   0          48s

So far this looks no different from the RS: the replica count is maintained according to spec.replicas.

- Describe any one of the Pods:

[root@master1 ~]#kubectl describe po nginx-deploy-cd55c47f5-gwrb5

Name: nginx-deploy-cd55c47f5-gwrb5

Namespace: default

Priority: 0

Service Account: default

Node: node1/172.29.9.62

Start Time: Thu, 15 Dec 2022 07:24:20 +0800

Labels: app=nginx

pod-template-hash=cd55c47f5

Annotations: <none>

Status: Running

IP: 10.244.1.16

IPs:

IP: 10.244.1.16

Controlled By: ReplicaSet/nginx-deploy-cd55c47f5

……

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 103s default-scheduler Successfully assigned default/nginx-deploy-cd55c47f5-gwrb5 to node1

Normal Pulling 102s kubelet Pulling image "nginx:latest"

Normal Pulled 87s kubelet Successfully pulled image "nginx:latest" in 15.23603838s

Normal Created 87s kubelet Created container nginx

Normal Started 87s kubelet Started container nginx

Look closely at this line: Controlled By: ReplicaSet/nginx-deploy-cd55c47f5. It says the controller of this Pod is a ReplicaSet object. But we created a Deployment, so why is the Pod controlled by an RS?

- Let's look at the details of that RS object:

[root@master1 ~]#kubectl describe rs nginx-deploy-cd55c47f5

Name: nginx-deploy-cd55c47f5

Namespace: default

Selector: app=nginx,pod-template-hash=cd55c47f5

Labels: app=nginx

pod-template-hash=cd55c47f5

Annotations: deployment.kubernetes.io/desired-replicas: 3

deployment.kubernetes.io/max-replicas: 4

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/nginx-deploy

Replicas: 3 current / 3 desired

Pods Status: 3 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=nginx

pod-template-hash=cd55c47f5

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 3m41s replicaset-controller Created pod: nginx-deploy-cd55c47f5-gwrb5

Normal SuccessfulCreate 3m41s replicaset-controller Created pod: nginx-deploy-cd55c47f5-x5mgl

Normal SuccessfulCreate 3m41s replicaset-controller Created pod: nginx-deploy-cd55c47f5-h6spx

It contains this line: Controlled By: Deployment/nginx-deploy. In other words, the RS that controls our Pods is itself controlled by our Deployment.

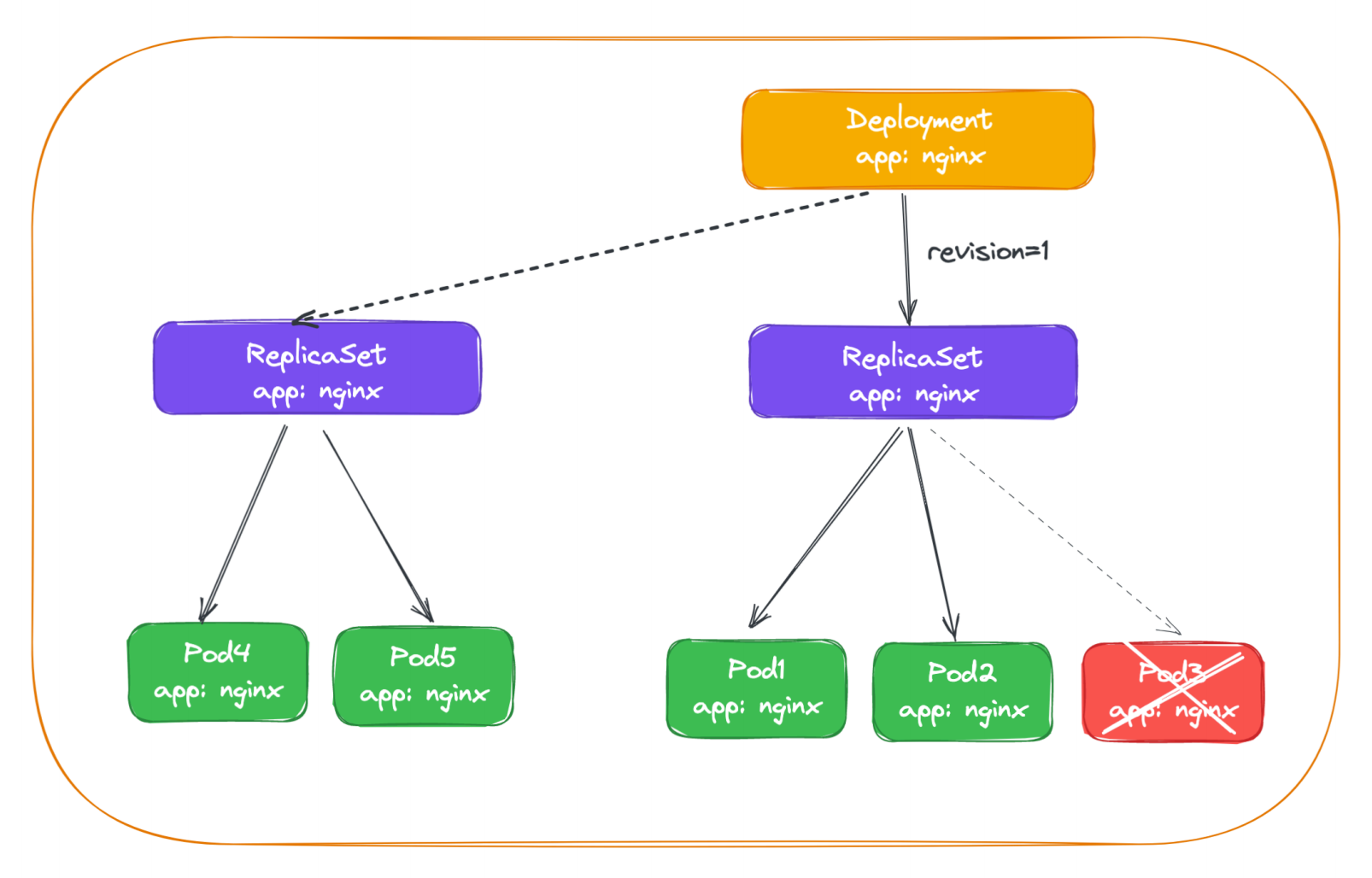

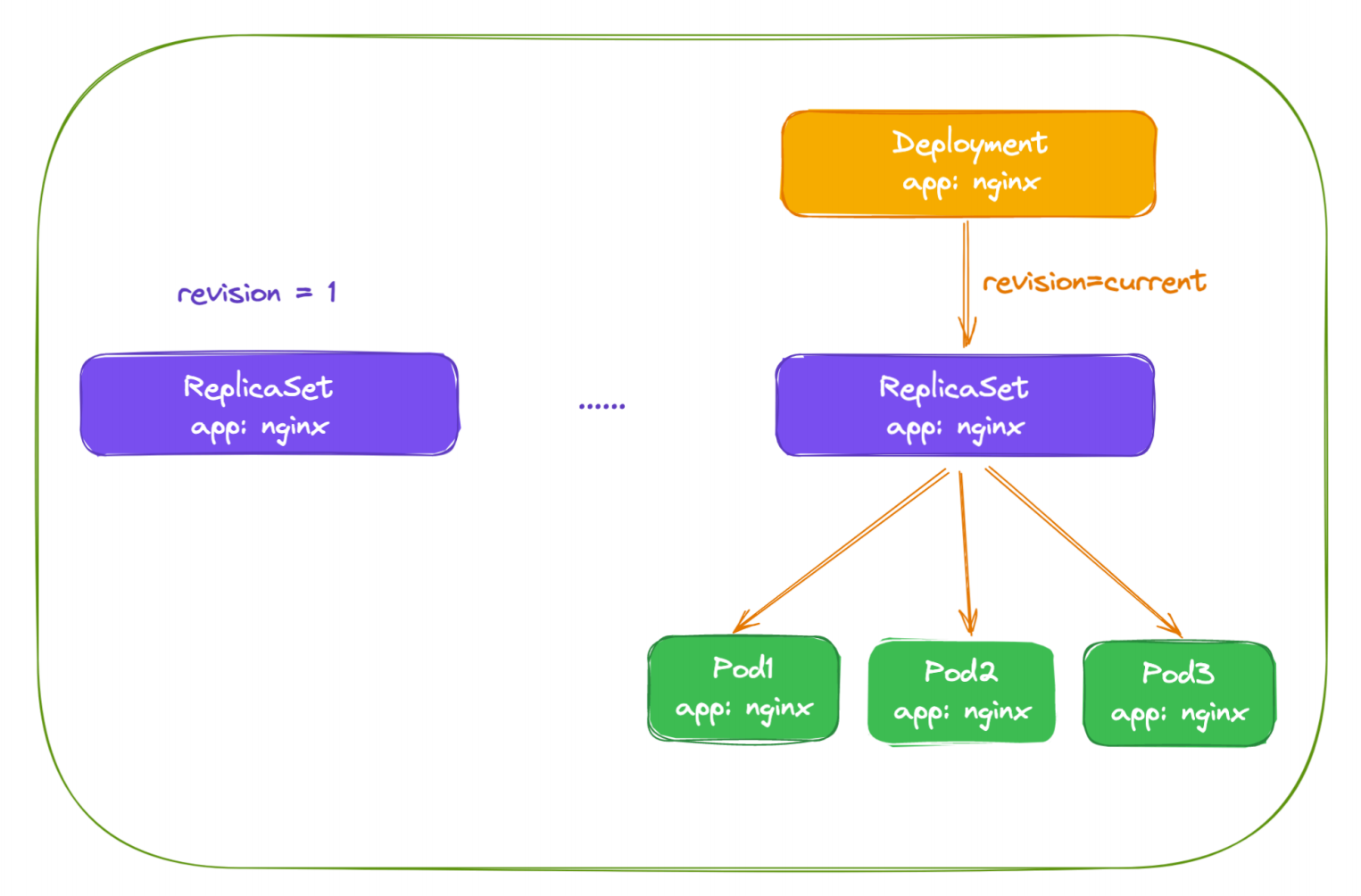

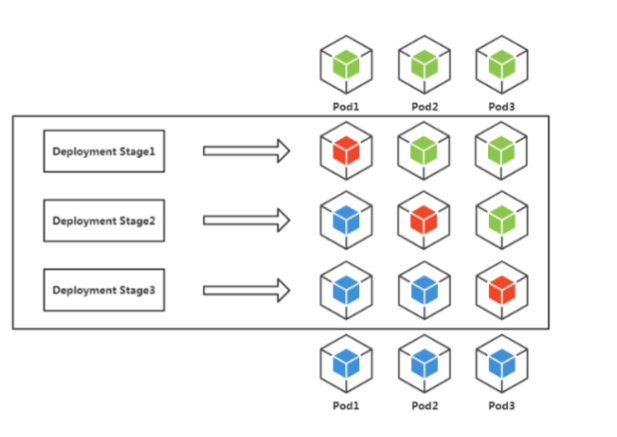

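- The following figure shows the relationship between Pod, ReplicaSet, and Deployment:

The figure makes it clear that a Deployment with 3 replicas, its ReplicaSet, and the Pods form a chain of control, layer by layer. The ReplicaSet still keeps the number of Pods at the specified value. This is why restartPolicy=Always is the only value allowed for containers in a Deployment: the containers must always stay in the Running state so that the ReplicaSet can adjust the number of Pods unambiguously. The Deployment, in turn, implements horizontal scaling and rolling updates by managing the number and properties of ReplicaSets.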

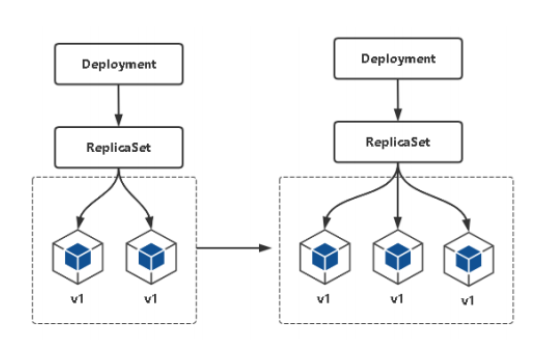

1.Horizontal scaling

Horizontal scaling is simple, because the ReplicaSet already implements it: the Deployment controller only has to change the Pod replica count of the ReplicaSet it controls. For example, if we raise the replica count to 4, the ReplicaSet behind the Deployment automatically creates one new Pod, and the workload is scaled out.

- First check the current replica counts of the Deployment and the RS:

    [root@master1 ~]#kubectl get deploy
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deploy   3/3     3            3           9m48s   # 3 replicas
    [root@master1 ~]#kubectl get rs
    NAME                     DESIRED   CURRENT   READY   AGE
    nginx-deploy-cd55c47f5   3         3         3       9m50s   # 3 replicas

The Deployment and its RS report the same number of replicas.

- The kubectl scale command performs the scaling:

Scale out the Pods

    [root@master1 ~]#kubectl scale deployment nginx-deploy --replicas=4
    deployment.apps/nginx-deploy scaled

- After scaling, check the current RS object

    [root@master1 ~]#kubectl get rs
    NAME                     DESIRED   CURRENT   READY   AGE
    nginx-deploy-cd55c47f5   4         4         3       9m
    [root@master1 ~]#kubectl get rs
    NAME                     DESIRED   CURRENT   READY   AGE
    nginx-deploy-cd55c47f5   4         4         4       11m
    [root@master1 ~]#kubectl get deploy
    NAME           READY   UP-TO-DATE   AVAILABLE   AGE
    nginx-deploy   4/4     4            4           11m
    [root@master1 ~]#kubectl get po
    NAME                           READY   STATUS    RESTARTS   AGE
    nginx-deploy-cd55c47f5-gwrb5   1/1     Running   0          11m
    nginx-deploy-cd55c47f5-h6spx   1/1     Running   0          11m
    nginx-deploy-cd55c47f5-vt427   1/1     Running   0          40s
    nginx-deploy-cd55c47f5-x5mgl   1/1     Running   0          11m

In the first listing the desired Pod count is already 4, but the new Pod is not ready yet, so READY is still 3. The second listing shows all 4 Pods ready.

- Also look at the RS details:

[root@master1 ~]#kubectl describe rs nginx-deploy-cd55c47f5

Name: nginx-deploy-cd55c47f5

Namespace: default

Selector: app=nginx,pod-template-hash=cd55c47f5

Labels: app=nginx

pod-template-hash=cd55c47f5

Annotations: deployment.kubernetes.io/desired-replicas: 4

deployment.kubernetes.io/max-replicas: 5

deployment.kubernetes.io/revision: 1

Controlled By: Deployment/nginx-deploy

Replicas: 4 current / 4 desired

Pods Status: 4 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: app=nginx

pod-template-hash=cd55c47f5

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 13m replicaset-controller Created pod: nginx-deploy-cd55c47f5-gwrb5

Normal SuccessfulCreate 13m replicaset-controller Created pod: nginx-deploy-cd55c47f5-x5mgl

Normal SuccessfulCreate 13m replicaset-controller Created pod: nginx-deploy-cd55c47f5-h6spx

Normal SuccessfulCreate 3m2s replicaset-controller Created pod: nginx-deploy-cd55c47f5-vt427

The ReplicaSet controller added one new Pod.

- The events of the Deployment resource object also show that the scale-out completed:

[root@master1 ~]#kubectl describe deploy nginx-deploy

Name: nginx-deploy

Namespace: default

CreationTimestamp: Thu, 15 Dec 2022 07:24:20 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=nginx

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-deploy-cd55c47f5 (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 14m deployment-controller Scaled up replica set nginx-deploy-cd55c47f5 to 3

Normal ScalingReplicaSet 3m58s deployment-controller Scaled up replica set nginx-deploy-cd55c47f5 to 4 from 3

- Note: horizontal scaling is not an upgrade, so the revision does not change

Scaling out or in does not create a new RS. A new RS is created, and a new revision recorded, only when a change such as a new image triggers a rolling upgrade.

scale up/down

    [root@master1 ~]#kubectl get po,rs,deploy
    NAME                               READY   STATUS    RESTARTS   AGE
    pod/nginx-deploy-cd55c47f5-gwrb5   1/1     Running   0          16m
    pod/nginx-deploy-cd55c47f5-h6spx   1/1     Running   0          16m
    pod/nginx-deploy-cd55c47f5-vt427   1/1     Running   0          5m49s
    pod/nginx-deploy-cd55c47f5-x5mgl   1/1     Running   0          16m
    NAME                                     DESIRED   CURRENT   READY   AGE
    replicaset.apps/nginx-deploy-cd55c47f5   4         4         4       16m
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nginx-deploy   4/4     4            4           16m
    [root@master1 ~]#kubectl rollout history deployment nginx-deploy
    deployment.apps/nginx-deploy
    REVISION  CHANGE-CAUSE
    1         <none>
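One way to see why scaling keeps the same RS is that the RS name carries a pod-template-hash suffix, which depends on the Pod template and not on the replica count. The Python sketch below illustrates that idea only. The helper names `template_hash` and `replicaset_name` are hypothetical, and SHA-256 over JSON is a stand-in chosen for the sketch; the real controller uses its own hashing scheme and produces different values.

```python
import hashlib
import json


def template_hash(pod_template):
    """Illustrative stand-in for pod-template-hash: a short digest of the template."""
    encoded = json.dumps(pod_template, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()[:10]


def replicaset_name(deployment):
    """Name of the ReplicaSet a Deployment would use for its current template."""
    return f"{deployment['name']}-{template_hash(deployment['template'])}"


deploy = {
    "name": "nginx-deploy",
    "replicas": 3,
    "template": {"labels": {"app": "nginx"}, "image": "nginx:latest"},
}
before = replicaset_name(deploy)

deploy["replicas"] = 4                    # scaling: the template is untouched
after_scale = replicaset_name(deploy)

deploy["template"]["image"] = "nginx:1.7.9"   # template change: a new RS is needed
after_update = replicaset_name(deploy)

print(before == after_scale)    # True, the same RS is reused
print(before == after_update)   # False, a new RS name appears
```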

Next, let's look at Deployment rolling updates.

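2.Rolling update

Rolling upgrade: the default K8s strategy for upgrading Pods. New-version Pods gradually replace old-version Pods, giving a zero-downtime release that users do not notice.

Pods are replaced a few at a time, not all at once.

A Deployment maintains ReplicaSet controllers underneath. We do not operate on them directly; they are managed by the Deployment as its private controllers.

If horizontal scaling were all we needed, there would be no reason to design the Deployment resource at all. Its most prominent feature is support for rolling updates.

- First look at the Deployment upgrade strategy: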

[root@master1 ~]#kubectl explain deploy.spec.strategy

KIND: Deployment

VERSION: apps/v1

RESOURCE: strategy <Object>

DESCRIPTION:

The deployment strategy to use to replace existing pods with new ones.

DeploymentStrategy describes how to replace existing pods with new ones.

FIELDS:

rollingUpdate <Object>

Rolling update config params. Present only if DeploymentStrategyType =

RollingUpdate.

type <string>

Type of deployment. Can be "Recreate" or "RollingUpdate". Default is

RollingUpdate. #the default upgrade strategy is RollingUpdate (rolling upgrade)

- First, change the default rolling upgrade strategy to Recreate and the image in the Pod template to nginx:1.7.9, and observe the effect:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
      namespace: default
      labels: # this label only tags the Deployment object itself
        role: deploy
    spec:
      replicas: 4 # desired number of Pod replicas
      strategy:
        type: Recreate
      selector: # label selector
        matchLabels:
          app: nginx
          test: course
      template: # Pod template
        metadata:
          labels: # must agree with the selector above
            app: nginx
            test: course
        spec:
          containers:
          - name: nginx
            image: nginx:1.7.9
            ports:
            - containerPort: 80

- Before testing, check the current environment once more:

    [root@master1 ~]#kubectl get po,deploy,rs
    NAME                           READY   STATUS    RESTARTS   AGE
    nginx-deploy-fd46765d4-8nzmp   1/1     Running   0          9m8s
    nginx-deploy-fd46765d4-9rzqt   1/1     Running   0          9m8s
    nginx-deploy-fd46765d4-ckdhw   1/1     Running   0          9m8s
    nginx-deploy-fd46765d4-tdkjv   1/1     Running   0          2m38s
    NAME                           READY   UP-TO-DATE   AVAILABLE   AGE
    deployment.apps/nginx-deploy   4/4     4            4           3m15s
    NAME                                     DESIRED   CURRENT   READY   AGE
    replicaset.apps/nginx-deploy-fd46765d4   4         4         4       3m15s
    [root@master1 ~]#kubectl rollout history deployment nginx-deploy
    deployment.apps/nginx-deploy
    REVISION  CHANGE-CAUSE
    1         <none>

- Open another terminal and use --watch to monitor how the Pods change:

    [root@master1 ~]#kubectl get po --watch
    NAME                           READY   STATUS    RESTARTS   AGE
    nginx-deploy-fd46765d4-8nzmp   1/1     Running   0          9m34s
    nginx-deploy-fd46765d4-9rzqt   1/1     Running   0          9m34s
    nginx-deploy-fd46765d4-ckdhw   1/1     Running   0          9m34s
    nginx-deploy-fd46765d4-tdkjv   1/1     Running   0          3m4s
    -- test Recreate----

- Apply the updated manifest:

    [root@master1 ~]#kubectl apply -f nginx-deploy.yaml
    deployment.apps/nginx-deploy configured

- Now look at what happened in the terminal watching the Pods:

Recreate means everything is recreated: all old Pods are deleted first, and then new-version Pods are created from the new image.

[root@master1 ~]#kubectl get po --watch

NAME READY STATUS RESTARTS AGE

nginx-deploy-fd46765d4-8nzmp 1/1 Running 0 9m34s

nginx-deploy-fd46765d4-9rzqt 1/1 Running 0 9m34s

nginx-deploy-fd46765d4-ckdhw 1/1 Running 0 9m34s

nginx-deploy-fd46765d4-tdkjv 1/1 Running 0 3m4s

-- test Recreate----

nginx-deploy-fd46765d4-tdkjv 1/1 Terminating 0 3m25s

nginx-deploy-fd46765d4-8nzmp 1/1 Terminating 0 9m55s

nginx-deploy-fd46765d4-ckdhw 1/1 Terminating 0 9m55s

nginx-deploy-fd46765d4-9rzqt 1/1 Terminating 0 9m55s

nginx-deploy-fd46765d4-ckdhw 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-ckdhw 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-ckdhw 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-9rzqt 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-9rzqt 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-9rzqt 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-tdkjv 0/1 Terminating 0 3m26s

nginx-deploy-fd46765d4-tdkjv 0/1 Terminating 0 3m26s

nginx-deploy-fd46765d4-tdkjv 0/1 Terminating 0 3m26s

nginx-deploy-fd46765d4-8nzmp 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-8nzmp 0/1 Terminating 0 9m56s

nginx-deploy-fd46765d4-8nzmp 0/1 Terminating 0 9m56s #all the old Pods are deleted together

nginx-deploy-6c5ff87cf-4f229 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-4f229 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-w2csq 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-p866j 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-w2csq 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-ttm2v 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-p866j 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-ttm2v 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-p866j 0/1 ContainerCreating 0 0s

nginx-deploy-6c5ff87cf-4f229 0/1 ContainerCreating 0 1s

nginx-deploy-6c5ff87cf-w2csq 0/1 ContainerCreating 0 2s

nginx-deploy-6c5ff87cf-ttm2v 0/1 ContainerCreating 0 2s

nginx-deploy-6c5ff87cf-ttm2v 1/1 Running 0 4s

nginx-deploy-6c5ff87cf-p866j 1/1 Running 0 17s

nginx-deploy-6c5ff87cf-w2csq 1/1 Running 0 19s

nginx-deploy-6c5ff87cf-4f229 1/1 Running 0 34s

[root@master1 ~]#kubectl get deploy,rs,po

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deploy 4/4 4 4 11m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deploy-6c5ff87cf 4 4 4 91s #current RS

replicaset.apps/nginx-deploy-fd46765d4 0 0 0 11m

NAME READY STATUS RESTARTS AGE

pod/nginx-deploy-6c5ff87cf-4f229 1/1 Running 0 91s

pod/nginx-deploy-6c5ff87cf-p866j 1/1 Running 0 91s

pod/nginx-deploy-6c5ff87cf-ttm2v 1/1 Running 0 91s

pod/nginx-deploy-6c5ff87cf-w2csq 1/1 Running 0 91s

[root@master1 ~]#

[root@master1 ~]#kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

2 <none> #current RS

#Check whether the revision of the current RS is 2:

[root@master1 ~]#kubectl describe rs nginx-deploy-6c5ff87cf |grep revision

deployment.kubernetes.io/revision: 2

#Now look at the events of the nginx-deploy Deployment:

[root@master1 ~]#kubectl describe deployments.apps |tail -8

NewReplicaSet: nginx-deploy-6c5ff87cf (4/4 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 12m deployment-controller Scaled up replica set nginx-deploy-fd46765d4 to 3

Normal ScalingReplicaSet 5m46s deployment-controller Scaled up replica set nginx-deploy-fd46765d4 to 4

Normal ScalingReplicaSet 2m21s deployment-controller Scaled down replica set nginx-deploy-fd46765d4 to 0

Normal ScalingReplicaSet 2m20s deployment-controller Scaled up replica set nginx-deploy-6c5ff87cf to 4

That verifies the Recreate strategy. Next we verify the RollingUpdate strategy.

- First look at the options RollingUpdate accepts:

[root@master1 ~]#kubectl explain deploy.spec.strategy.rollingUpdate

KIND: Deployment

VERSION: apps/v1

RESOURCE: rollingUpdate <Object>

DESCRIPTION:

Rolling update config params. Present only if DeploymentStrategyType =

RollingUpdate.

Spec to control the desired behavior of rolling update.

FIELDS:

maxSurge <string>

The maximum number of pods that can be scheduled above the desired number

of pods. Value can be an absolute number (ex: 5) or a percentage of desired

pods (ex: 10%). This can not be 0 if MaxUnavailable is 0. Absolute number

is calculated from percentage by rounding up. Defaults to 25%. Example:

when this is set to 30%, the new ReplicaSet can be scaled up immediately

when the rolling update starts, such that the total number of old and new

pods do not exceed 130% of desired pods. Once old pods have been killed,

new ReplicaSet can be scaled up further, ensuring that total number of pods

running at any time during the update is at most 130% of desired pods.

maxUnavailable <string>

The maximum number of pods that can be unavailable during the update. Value

can be an absolute number (ex: 5) or a percentage of desired pods (ex:

10%). Absolute number is calculated from percentage by rounding down. This

can not be 0 if MaxSurge is 0. Defaults to 25%. Example: when this is set

to 30%, the old ReplicaSet can be scaled down to 70% of desired pods

immediately when the rolling update starts. Once new pods are ready, old

ReplicaSet can be scaled down further, followed by scaling up the new

ReplicaSet, ensuring that the total number of pods available at all times

during the update is at least 70% of desired pods.

- Now set the upgrade strategy to RollingUpdate, which is also the default strategy. At the same time, change the nginx image tag to latest.

    #nginx-deploy.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-deploy
      namespace: default
      labels: # this label only tags the Deployment object itself
        role: deploy
    spec:
      replicas: 4 # desired number of Pod replicas
      minReadySeconds: 5
      strategy:
        type: RollingUpdate # rolling update strategy, the default
        rollingUpdate:
          maxUnavailable: 1 # maximum number of unavailable Pods
          maxSurge: 1
      selector: # label selector
        matchLabels:
          app: nginx
          test: course
      template: # Pod template
        metadata:
          labels: # must agree with the selector above
            app: nginx
            test: course
        spec:
          containers:
          - name: nginx
            image: nginx:latest # image tag changed to latest for this test
            ports:
            - containerPort: 80

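Compared with before, besides changing the image we also specified the update strategy:

    minReadySeconds: 5
    strategy:
      type: RollingUpdate
      rollingUpdate:
        maxSurge: 1
        maxUnavailable: 1

- minReadySeconds: the minimum number of seconds a newly created Pod must stay ready, without any of its containers crashing, before Kubernetes counts it as available and continues the upgrade. The default is 0, in which case a Pod is considered available as soon as it is ready; in some extreme cases that can leave the service misbehaving.

- type=RollingUpdate: sets the update strategy to rolling update. The value can be Recreate or RollingUpdate. Recreate recreates everything; the default is RollingUpdate.

- maxSurge: the maximum number of Pods that may exist above the configured replica count during the upgrade. For example, with maxSurge=1 and replicas=5, Kubernetes starts one new Pod before deleting an old one, and at most 5+1 Pods exist at any point in the upgrade.

- maxUnavailable: the maximum number of Pods that may be unable to serve during the upgrade. When maxSurge is 0, this value cannot be 0. For example, maxUnavailable=1 means at most 1 Pod is unavailable at any point in the upgrade.

maxSurge and maxUnavailable cannot both be 0. Otherwise, once the number of Pod replicas matches the desired count, there would be no room to change anything and the rolling update could not proceed.

Important:

During a rolling upgrade, a new Pod may be created first or an old Pod may be deleted first, depending on the configured maxSurge and maxUnavailable. Both values may also be greater than 1.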
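The rounding rules quoted from kubectl explain (maxSurge rounds up, maxUnavailable rounds down) can be checked with a small Python sketch. The helper names `resolve` and `rollout_bounds` are hypothetical and exist only for this illustration; the results agree with the deployment.kubernetes.io/max-replicas annotations seen earlier (4 for 3 replicas, 5 for 4 replicas, with the 25% defaults).

```python
def resolve(value, replicas, round_up):
    """Turn an absolute number or a percentage string such as "25%" into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer arithmetic avoids floating-point surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rollout_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return the Pod-count limits that hold during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)               # rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounds down
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return {"max_total": replicas + surge, "min_available": replicas - unavailable}


print(rollout_bounds(3))         # {'max_total': 4, 'min_available': 3}
print(rollout_bounds(4))         # {'max_total': 5, 'min_available': 3}
print(rollout_bounds(4, 1, 1))   # {'max_total': 5, 'min_available': 3}
```

With 3 replicas and the defaults, 25% of 3 is 0.75, so maxSurge rounds up to 1 while maxUnavailable rounds down to 0, and the rollout has to create a new Pod before it may remove an old one.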

- Confirm the lab environment once more before this test:

[root@master1 ~]#kubectl get deploy,rs,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-deploy 4/4 4 4 17m

NAME DESIRED CURRENT READY AGE

replicaset.apps/nginx-deploy-6c5ff87cf 4 4 4 4m50s

replicaset.apps/nginx-deploy-fd46765d4 0 0 0 17m

NAME READY STATUS RESTARTS AGE

nginx-deploy-6c5ff87cf-4f229 1/1 Running 0 3m14s

nginx-deploy-6c5ff87cf-p866j 1/1 Running 0 3m14s

nginx-deploy-6c5ff87cf-ttm2v 1/1 Running 0 3m14s

nginx-deploy-6c5ff87cf-w2csq 1/1 Running 0 3m14s

[root@master1 ~]#

[root@master1 ~]#kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

2 <none>

[root@master1 ~]#

[root@master1 ~]#kubectl describe rs nginx-deploy-6c5ff87cf|grep revision

deployment.kubernetes.io/revision: 2

[root@master1 ~]#

[root@master1 ~]#kubectl describe po nginx-deploy-6c5ff87cf-4f229 |grep Image

Image: nginx:1.7.9

Image ID: sha256:35d28df486f6150fa3174367499d1eb01f22f5a410afe4b9581ac0e0e58b3eaf

[root@master1 ~]#

- As before, open another window and use --watch to monitor how the Pods change:

    [root@master1 ~]#kubectl get po --watch
    NAME                           READY   STATUS    RESTARTS   AGE
    nginx-deploy-6c5ff87cf-4f229   1/1     Running   0          4m17s
    nginx-deploy-6c5ff87cf-p866j   1/1     Running   0          4m17s
    nginx-deploy-6c5ff87cf-ttm2v   1/1     Running   0          4m17s
    nginx-deploy-6c5ff87cf-w2csq   1/1     Running   0          4m17s
    ---- test Roollingupdate-----

- Now update the Deployment resource object directly:

    [root@master1 ~]#kubectl apply -f nginx-deploy.yaml
    deployment.apps/nginx-deploy configured

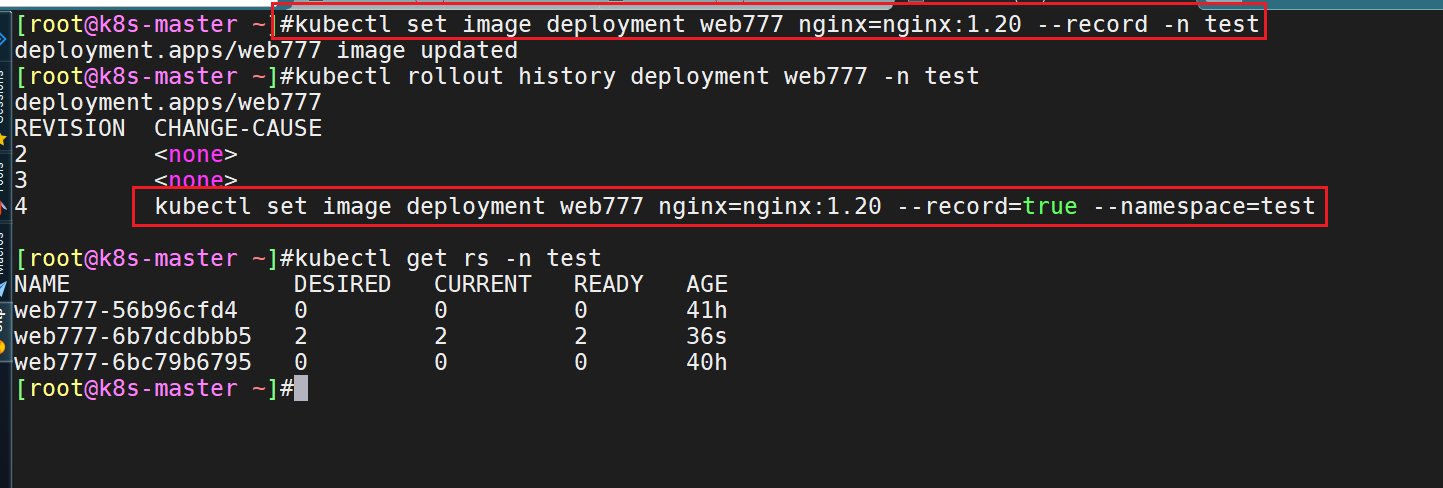

The record flag: we can add an extra --record flag to record the command run for each operation, which makes later review easier.

Note: in this test the command was recorded only when the image was updated from the command line; updates applied from a .yaml file left CHANGE-CAUSE as <none>.

In practice this approach is not very convenient either.

    [root@k8s-master ~]#kubectl set image deployment web777 nginx=nginx:1.20 --record -n test

Rollback

    #Rollback (restore a working version after a failed upgrade)
    kubectl rollout history deployment/web # list the release history
    kubectl rollout undo deployment/web # roll back to the previous revision
    kubectl rollout undo deployment/web --to-revision=2 # roll back to a specific revision
    #Note: a rollback redeploys the state of an earlier rollout, that is, all of the configuration of that revision

Notes: the native K8s rollback is of limited use, because it is hard to see what the previous revision actually contained. Large companies generally build their own version-control module. Two ways to improve on it:

1. Write a shell script (that maps each rollback revision number to its version)
2. If you are building a platform, store and query this information in a MySQL database

- After the update, we can run the kubectl rollout status command to check the state of this rolling update:

First look at the subcommands rollout accepts:

    [root@master1 ~]#kubectl rollout --help
    Manage the rollout of a resource.
     Valid resource types include:
      *  deployments
      *  daemonsets
      *  statefulsets
    Examples:
      # Rollback to the previous deployment
      kubectl rollout undo deployment/abc
      # Check the rollout status of a daemonset
      kubectl rollout status daemonset/foo
    Available Commands:
      history     View rollout history
      pause       Mark the provided resource as paused
      restart     Restart a resource
      resume      Resume a paused resource
      status      Show the status of the rollout
      undo        Undo a previous rollout
    Usage:
      kubectl rollout SUBCOMMAND [options]
    Use "kubectl <command> --help" for more information about a given command.
    Use "kubectl options" for a list of global command-line options (applies to all commands).
    [root@master1 ~]#

- At this point two Pods have already been updated. During a rolling update we can pause the rollout with the following command:

    [root@master1 ~]#kubectl rollout pause deployment/nginx-deploy
    deployment.apps/nginx-deploy paused

The rolling update is now paused. Look at the Deployment details:

[root@master1 ~]#kubectl describe deployments.apps nginx-deploy

Name: nginx-deploy

Namespace: default

CreationTimestamp: Sat, 13 Nov 2021 10:41:09 +0800

Labels: role=deploy

Annotations: deployment.kubernetes.io/revision: 3

Selector: app=nginx,test=course

Replicas: 4 desired | 2 updated | 5 total | 5 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 5

RollingUpdateStrategy: 1 max unavailable, 1 max surge

Pod Template:

Labels: app=nginx

test=course

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing Unknown DeploymentPaused

OldReplicaSets: nginx-deploy-6c5ff87cf (3/3 replicas created)

NewReplicaSet: nginx-deploy-595b8954f7 (2/2 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 15m deployment-controller Scaled up replica set nginx-deploy-fd46765d4 to 3

Normal ScalingReplicaSet 9m8s deployment-controller Scaled up replica set nginx-deploy-fd46765d4 to 4

Normal ScalingReplicaSet 5m43s deployment-controller Scaled down replica set nginx-deploy-fd46765d4 to 0

Normal ScalingReplicaSet 5m42s deployment-controller Scaled up replica set nginx-deploy-6c5ff87cf to 4

Normal ScalingReplicaSet 41s deployment-controller Scaled up replica set nginx-deploy-595b8954f7 to 1

Normal ScalingReplicaSet 41s deployment-controller Scaled down replica set nginx-deploy-6c5ff87cf to 3

Normal ScalingReplicaSet 41s deployment-controller Scaled up replica set nginx-deploy-595b8954f7 to 2

我们仔细观察 Events 事件区域的变化,上面我们用 kubectl scale 命令将 Pod 副本调整到了 4,现在我们更新的时候是不是声明又变成 3 了,所以 Deployment 控制器首先是将之前控制的 nginx-deploy-85ff79dd56 这个 RS 资源对象进行缩容操作,然后滚动更新开始了,可以发现 Deployment 为一个新的 nginx-deploy-5b7b9ccb95 RS 资源对象首先新建了一个新的 Pod,然后将之前的 RS 对象缩容到 2 了,再然后新的 RS 对象扩容到 2,后面由于我们暂停滚动升级了,所以没有后续的事件了,大家有看明白这个过程吧?这个过程就是滚动更新的过程,启动一个新的 Pod,杀掉一个旧的 Pod,然后再启动一个新的 Pod,这样滚动更新下去,直到全都变成新的 Pod,这个时候系统中应该存在 4 个 Pod,因为我们设置的策略maxSurge=1,所以在升级过程中是允许的,而且是两个新的 Pod,两个旧的 Pod:

[root@master1 ~]#kubectl get po -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deploy-595b8954f7-sp5nj 1/1 Running 0 86s

nginx-deploy-595b8954f7-z6pn4 1/1 Running 0 86s

nginx-deploy-6c5ff87cf-p866j 1/1 Running 0 6m27s

nginx-deploy-6c5ff87cf-ttm2v 1/1 Running 0 6m27s

nginx-deploy-6c5ff87cf-w2csq 1/1 Running 0 6m27s

The Deployment status reflects the same Pod state:

[root@master1 ~]#kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 5/4 2 5 16m
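As a rough sketch, the rolling-update settings reported by kubectl describe above (4 desired replicas, MinReadySeconds 5, 1 max surge, 1 max unavailable) would correspond to a Deployment spec fragment like the one below. The field names are the standard apps/v1 Deployment fields, but the fragment is reconstructed from the describe output rather than copied from the original manifest, so treat it as an assumption:

```yaml
# Fragment of a Deployment spec; values are taken from the describe output above.
spec:
  replicas: 4
  minReadySeconds: 5        # a new Pod must stay ready for 5s before it counts as available
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1           # upper bound during the update: 4 + 1 = 5 Pods in total
      maxUnavailable: 1     # lower bound during the update: 4 - 1 = 3 Pods available
```

With these bounds, the paused state of 2 new Pods plus 3 old Pods (5 in total) stays within the allowed range.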

- At this point we can use kubectl rollout resume to resume the rolling update:

[root@master1 ~]#kubectl rollout resume deployment nginx-deploy

deployment.apps/nginx-deploy resumed

[root@master1 ~]#

[root@master1 ~]#kubectl rollout status deployment nginx-deploy

deployment "nginx-deploy" successfully rolled out

[root@master1 ~]#

The output above confirms that the rolling update has completed. We can check the resource status again:

[root@master1 ~]#kubectl get po -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deploy-595b8954f7-p2qht 1/1 Running 0 68s

nginx-deploy-595b8954f7-qw6gz 1/1 Running 0 68s

nginx-deploy-595b8954f7-sp5nj 1/1 Running 0 3m25s

nginx-deploy-595b8954f7-z6pn4 1/1 Running 0 3m25s

[root@master1 ~]#kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 4/4 4 4 18m

[root@master1 ~]#

If we now list the ReplicaSet objects, we find three of them:

[root@master1 ~]#kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-595b8954f7 4 4 4 3m43s

nginx-deploy-6c5ff87cf 0 0 0 8m44s

nginx-deploy-fd46765d4 0 0 0 18m

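As shown above, the ReplicaSet we used before the rolling update now has 0 Pod replicas, while the ReplicaSet created by the update has 4. We can export the previous ReplicaSet object to inspect it: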

[root@master1 ~]#kubectl get rs nginx-deploy-6c5ff87cf -oyaml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  annotations:
    deployment.kubernetes.io/desired-replicas: "4"
    deployment.kubernetes.io/max-replicas: "5"
    deployment.kubernetes.io/revision: "2"
  creationTimestamp: "2021-11-13T02:51:05Z"
  generation: 4
  labels:
    app: nginx
    pod-template-hash: 6c5ff87cf
    test: course
  name: nginx-deploy-6c5ff87cf
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: Deployment
    name: nginx-deploy
    uid: ac7c0147-2ed9-4e61-91fa-b4bfdf185564
  resourceVersion: "319487"
  uid: d3455813-e6eb-480d-b88b-d4761d16c131
spec:
  replicas: 0
  selector:
    matchLabels:
      app: nginx
      pod-template-hash: 6c5ff87cf
      test: course
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: nginx
        pod-template-hash: 6c5ff87cf
        test: course
    spec:
      containers:
      - image: nginx:1.7.9
        imagePullPolicy: Always
        name: nginx
        ports:
        - containerPort: 80
          protocol: TCP
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
status:
  observedGeneration: 4
  replicas: 0 # replicas is 0

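Look closely at this object: apart from replicas now being 0, its description is the same as before the update. What does that suggest? Because this ReplicaSet record still exists, we can roll back, and not only to the previous version but to any earlier revision that is still retained.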

- How are these versions defined? We can list them with the rollout history command:

[root@master1 ~]#kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

2 <none>

3 <none>

[root@master1 ~]#
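The CHANGE-CAUSE column shows <none> because no reason has been recorded for any revision. kubectl rollout history fills that column from the kubernetes.io/change-cause annotation, so one option is to set the annotation in the Deployment manifest before applying a change. A minimal sketch, in which the message text is purely illustrative and only the metadata part of the manifest is shown:

```yaml
# Metadata fragment only; the rest of the Deployment manifest stays unchanged.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  annotations:
    # Hypothetical message; write whatever describes this change.
    kubernetes.io/change-cause: "update image to nginx:1.7.9"
```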

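The revisions recorded in rollout history map one-to-one to ReplicaSets. If we manually delete a ReplicaSet, the corresponding rollout history entry is deleted too, which means you can no longer roll back to that revision. We can also view the details of a single revision: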

[root@master1 ~]#kubectl rollout history deployment nginx-deploy --revision=2

deployment.apps/nginx-deploy with revision #2

Pod Template:

Labels: app=nginx

pod-template-hash=6c5ff87cf

test=course

Containers:

nginx:

Image: nginx:1.7.9

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

[root@master1 ~]#

- Let's first check what the current revision is:

[root@master1 ~]#kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

2 <none>

3 <none>

[root@master1 ~]#kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-deploy-595b8954f7-p2qht 1/1 Running 0 5m51s

nginx-deploy-595b8954f7-qw6gz 1/1 Running 0 5m51s

nginx-deploy-595b8954f7-sp5nj 1/1 Running 0 8m8s

nginx-deploy-595b8954f7-z6pn4 1/1 Running 0 8m8s

[root@master1 ~]#kubectl describe rs nginx-deploy-595b8954f7 |grep revision

deployment.kubernetes.io/revision: 3 # the current revision is 3

[root@master1 ~]#

- To roll back directly to the version just before the current one, we can use the following command:

➜ ~ kubectl rollout undo deployment nginx-deploy

- We can also roll back to a specific revision:

➜ ~ kubectl rollout undo deployment nginx-deploy --to-revision=1

deployment "nginx-deploy" rolled back

- In this run we roll back to revision 1:

[root@master1 ~]#kubectl rollout undo deployment nginx-deploy --to-revision=1

deployment.apps/nginx-deploy rolled back

- While the rollback is in progress we can watch its status in the same way:

[root@master1 ~]#kubectl rollout status deployment nginx-deploy

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 2 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 3 out of 4 new replicas have been updated...

Waiting for deployment "nginx-deploy" rollout to finish: 1 old replicas are pending termination...

Waiting for deployment "nginx-deploy" rollout to finish: 3 of 4 updated replicas are available...

Waiting for deployment "nginx-deploy" rollout to finish: 3 of 4 updated replicas are available...

deployment "nginx-deploy" successfully rolled out

[root@master1 ~]#

- If we now check the ReplicaSets, we can see that the Pod replicas are back in the earlier ReplicaSet (nginx-deploy-fd46765d4):

[root@master1 ~]#kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-595b8954f7 0 0 0 18m

nginx-deploy-6c5ff87cf 0 0 0 23m

nginx-deploy-fd46765d4 4 4 4 33m

[root@master1 ~]#

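- Note, however, that a rollback does not reuse the old revision number. The revision always increases, so what was revision 1 is now recorded as revision 4:

[root@master1 ~]#kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

2 <none>

3 <none>

4 <none>

[root@master1 ~]#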

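Retaining old revisions

In very early Kubernetes versions, the entire rolling-update history (that is, every old ReplicaSet object) was kept by default. In most cases there is no need to keep every version, since they are all stored in etcd. We can set the spec.revisionHistoryLimit field to control how many history records are retained. In current versions this value defaults to 10; set the field explicitly if you want to keep more revisions.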

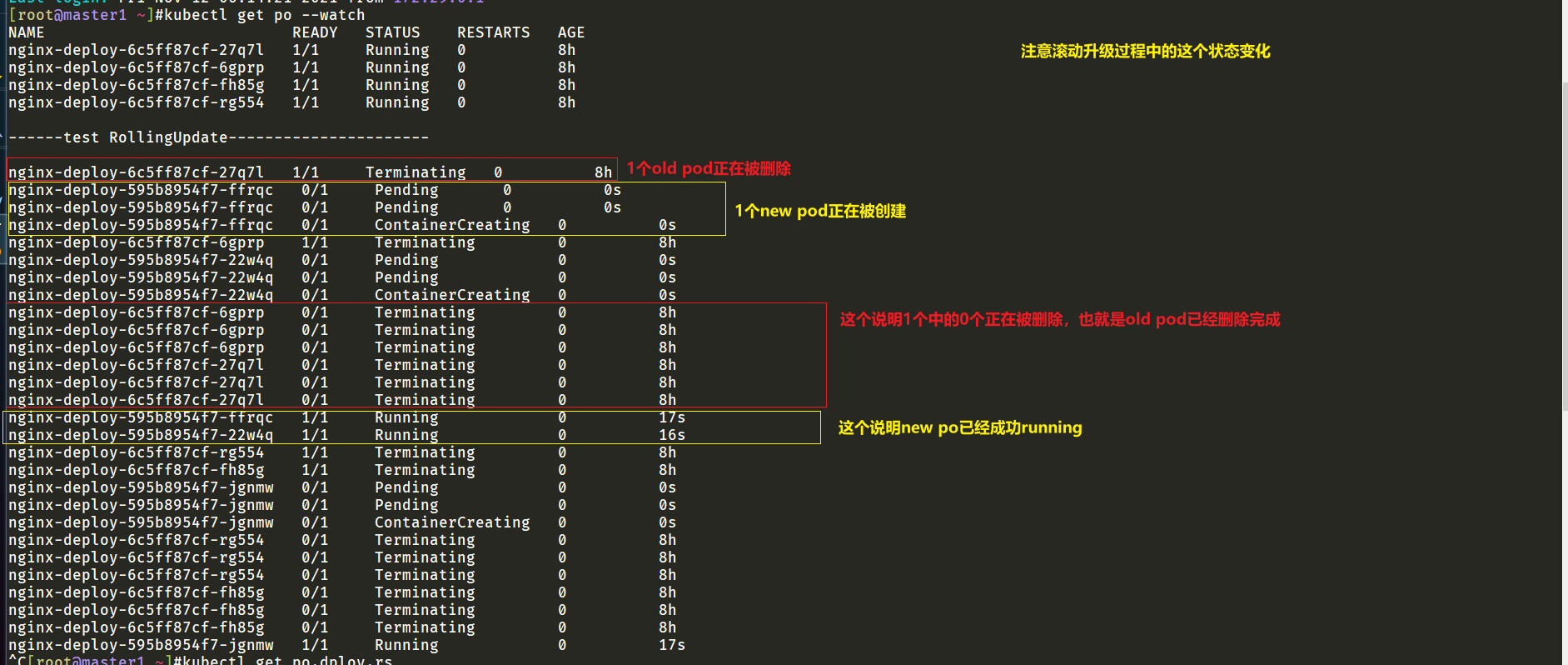
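As a small sketch of the field described above, the fragment below caps the retained history for the Deployment. The value 5 is only an illustration, not something taken from the original manifest:

```yaml
# Fragment of a Deployment spec; only the history-related field is of interest here.
spec:
  replicas: 4
  revisionHistoryLimit: 5   # illustrative value: keep at most 5 old ReplicaSets (default is 10)
```

Keep in mind that a revision whose ReplicaSet has been cleaned up can no longer be a rollback target, so setting this field to 0 effectively disables rollback.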

- Let's look at what the watch shows:

[root@master1 ~]#kubectl get po --watch

NAME READY STATUS RESTARTS AGE

nginx-deploy-6c5ff87cf-4f229 1/1 Running 0 4m17s

nginx-deploy-6c5ff87cf-p866j 1/1 Running 0 4m17s

nginx-deploy-6c5ff87cf-ttm2v 1/1 Running 0 4m17s

nginx-deploy-6c5ff87cf-w2csq 1/1 Running 0 4m17s

---- test RollingUpdate -----

nginx-deploy-595b8954f7-z6pn4 0/1 Pending 0 0s

nginx-deploy-595b8954f7-z6pn4 0/1 Pending 0 0s

nginx-deploy-6c5ff87cf-4f229 1/1 Terminating 0 5m1s

nginx-deploy-595b8954f7-z6pn4 0/1 ContainerCreating 0 0s

nginx-deploy-595b8954f7-sp5nj 0/1 Pending 0 0s

nginx-deploy-595b8954f7-sp5nj 0/1 Pending 0 0s

nginx-deploy-595b8954f7-sp5nj 0/1 ContainerCreating 0 0s

nginx-deploy-6c5ff87cf-4f229 0/1 Terminating 0 5m2s

nginx-deploy-6c5ff87cf-4f229 0/1 Terminating 0 5m2s

nginx-deploy-6c5ff87cf-4f229 0/1 Terminating 0 5m2s

nginx-deploy-595b8954f7-sp5nj 1/1 Running 0 2s

nginx-deploy-595b8954f7-z6pn4 1/1 Running 0 17s

nginx-deploy-6c5ff87cf-ttm2v 1/1 Terminating 0 7m18s

nginx-deploy-6c5ff87cf-w2csq 1/1 Terminating 0 7m18s

nginx-deploy-595b8954f7-qw6gz 0/1 Pending 0 0s

nginx-deploy-595b8954f7-qw6gz 0/1 Pending 0 0s

nginx-deploy-595b8954f7-p2qht 0/1 Pending 0 0s

nginx-deploy-595b8954f7-p2qht 0/1 Pending 0 0s

nginx-deploy-595b8954f7-qw6gz 0/1 ContainerCreating 0 0s

nginx-deploy-595b8954f7-p2qht 0/1 ContainerCreating 0 0s

nginx-deploy-6c5ff87cf-ttm2v 0/1 Terminating 0 7m19s

nginx-deploy-6c5ff87cf-ttm2v 0/1 Terminating 0 7m19s

nginx-deploy-6c5ff87cf-ttm2v 0/1 Terminating 0 7m19s

nginx-deploy-6c5ff87cf-w2csq 0/1 Terminating 0 7m19s

nginx-deploy-6c5ff87cf-w2csq 0/1 Terminating 0 7m19s

nginx-deploy-6c5ff87cf-w2csq 0/1 Terminating 0 7m19s

nginx-deploy-595b8954f7-p2qht 1/1 Running 0 16s

nginx-deploy-595b8954f7-qw6gz 1/1 Running 0 17s

nginx-deploy-6c5ff87cf-p866j 1/1 Terminating 0 7m40s

nginx-deploy-6c5ff87cf-p866j 0/1 Terminating 0 7m40s

nginx-deploy-6c5ff87cf-p866j 0/1 Terminating 0 7m40s

nginx-deploy-6c5ff87cf-p866j 0/1 Terminating 0 7m40s

- The kubectl describe deploy nginx-deploy command actually shows the rolling-update process more clearly:

[root@master1 ~]#kubectl describe deployments.apps nginx-deploy

Name: nginx-deploy

Namespace: default

CreationTimestamp: Thu, 11 Nov 2021 22:04:31 +0800

Labels: role=deploy

Annotations: deployment.kubernetes.io/revision: 3

Selector: app=nginx,test=course

Replicas: 3 desired | 3 updated | 3 total | 3 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 5

RollingUpdateStrategy: 1 max unavailable, 1 max surge

Pod Template:

Labels: app=nginx

test=course

Containers:

nginx:

Image: nginx:latest

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-deploy-595b8954f7 (3/3 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 23m deployment-controller Scaled down replica set nginx-deploy-6c5ff87cf to 3

Normal ScalingReplicaSet 23m deployment-controller Scaled up replica set nginx-deploy-595b8954f7 to 1

Normal ScalingReplicaSet 23m deployment-controller Scaled down replica set nginx-deploy-6c5ff87cf to 2

Normal ScalingReplicaSet 23m deployment-controller Scaled up replica set nginx-deploy-595b8954f7 to 2

Normal ScalingReplicaSet 22m deployment-controller Scaled down replica set nginx-deploy-6c5ff87cf to 0

Normal ScalingReplicaSet 22m deployment-controller Scaled up replica set nginx-deploy-595b8954f7 to 3 # End result: the deployment-controller sets the old RS's replica count to 0 and the new RS's replica count to the desired number

[root@master1 ~]#

[root@master1 ~]#kubectl get rs

NAME DESIRED CURRENT READY AGE

nginx-deploy-595b8954f7 3 3 3 25m

nginx-deploy-6c5ff87cf 0 0 0 9h

nginx-deploy-fd46765d4 0 0 0 9h

[root@master1 ~]#

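Test complete. 😘

Rolling-update caveats

If we roll out an update while the application is still serving external traffic, the Pods being terminated may still be handling requests, so a rolling update can interrupt in-flight business requests.

So how can we solve this "interruption" problem?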

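- Use the preStop hook mentioned earlier, which lets us run something before the container is stopped. For nginx, for example, we can perform a graceful shutdown: stop accepting new requests, finish the ones already in progress, and only then stop the Pod. For production applications we almost always add this kind of graceful exit in preStop.

- Alternatively, simply sleep in preStop so that in-flight requests and connections have enough time to complete. A plain sleep in preStop works too.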
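The sleep-in-preStop approach described above could look roughly like the fragment below. This is a minimal sketch, not the author's manifest: the 10-second sleep is an illustrative value, the container name and image are taken from the outputs earlier in this article, and the command assumes the image provides /bin/sh:

```yaml
# Fragment of a Deployment spec showing only the graceful-shutdown parts.
spec:
  template:
    spec:
      terminationGracePeriodSeconds: 30   # must be longer than the preStop sleep
      containers:
      - name: nginx
        image: nginx:1.7.9
        lifecycle:
          preStop:
            exec:
              # Illustrative delay: give in-flight requests time to finish before the container is stopped.
              command: ["/bin/sh", "-c", "sleep 10"]
```

The preStop hook runs before the container receives its termination signal, and its duration counts against terminationGracePeriodSeconds, so the sleep should stay well below that limit.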

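- Application upgrade (three ways to update the image; each one automatically triggers a rolling update)

1. kubectl apply -f xxx.yaml (recommended)

2. kubectl set image deployment/web nginx=nginx:1.17

3. kubectl edit deployment/web # opens the object in the system editor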

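About me

The principles of my blog:

- Clean layout, concise language;

- Documents that work as manuals: detailed steps, no hidden pitfalls, source code provided;

- Every hands-on document here has been tested successfully by me. If you have questions while following along, feel free to contact me and I will help you solve them. Let's improve together!

🍀 WeChat QR code

x2675263825 (舍得), qq:2675263825.

🍀 WeChat official account

《云原生架构师实战》

🍀 Yuque

彦 · 语雀

语雀博客 · 语雀 《语雀博客》

🍀 Blog

www.onlyyou520.com

🍀 csdn

一念一生~one的博客_CSDN博客-k8s,Linux,git领域博主

🍀 Zhihu

一个人 - 知乎

Finally

That's all for this post. Thank you for reading, and I hope every day is a happy and meaningful one for you. See you next time!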
