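⭐ About the author: second-year undergraduate majoring in Network Engineering, continuously learning Java and working to publish high-quality articles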

⭐ Author homepage: @逐梦苍穹

⭐ Column: Artificial Intelligence

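Contents

- 1. Introduction

- 2. Prompts

- 2.1 Prompt templates

- 2.1.1 Creating a prompt template

- 2.1.2 Chat prompt templates

- 2.1.3 Few-shot prompt templates

- 2.1.4 Formatting template output

- 2.1.5 Template formats

- 2.1.6 Types of MessagePromptTemplate

- 2.1.7 Partial prompt templates

- 2.1.8 Composition

- 2.1.9 Validating templates

- 2.2 example_selectors

- 2.2.1 Custom example selectors

- 2.2.2 Selecting by length

- 2.2.3 Selecting by similarity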

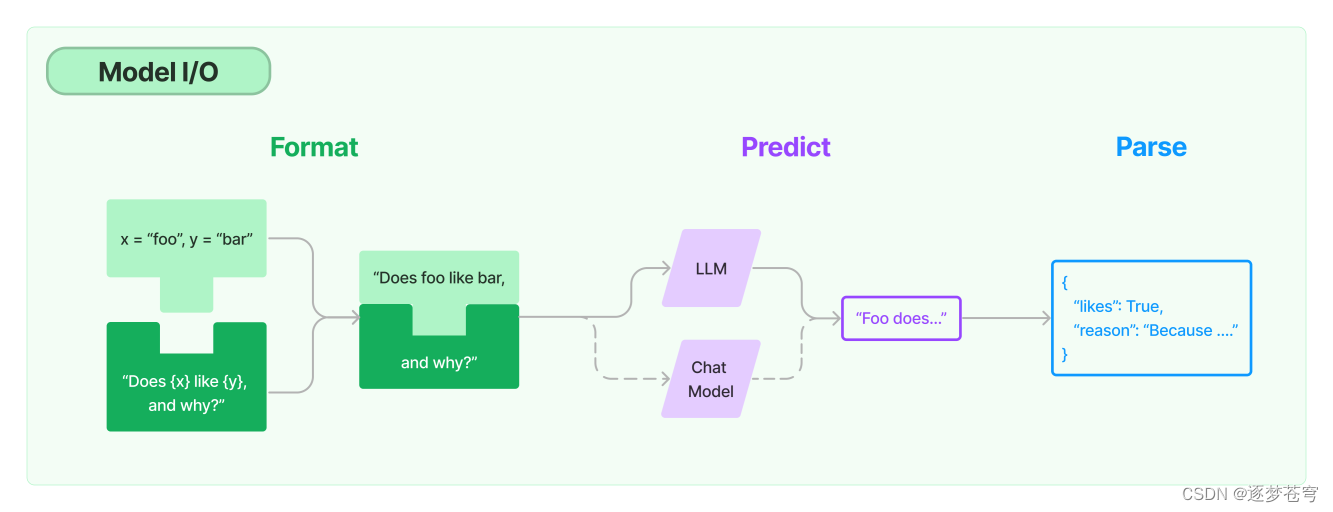

1. Introduction

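The core elements of any language model application are the model's inputs and outputs. LangChain provides the building blocks for interfacing with any language model.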

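● Prompts (prompts): templatize, dynamically select, and manage model inputs

● Language models (models): call language models through common interfaces

● Output parsers (output_parsers): extract information from model outputs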

2. Prompts

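The new way of programming models is through prompts. A prompt is the input passed to the model, and it is usually built from several components. LangChain provides several classes and functions that make constructing and working with prompts easy.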

● Prompt templates: parameterize model inputs

● Example selectors: dynamically select which examples to include in a prompt

2.1 Prompt templates

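Language models take text as input; that text is commonly called a prompt.

Typically, a prompt is not just a hard-coded string but a combination of a template, some examples, and user input.

LangChain provides several classes and functions that make it easy to build and work with prompts.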

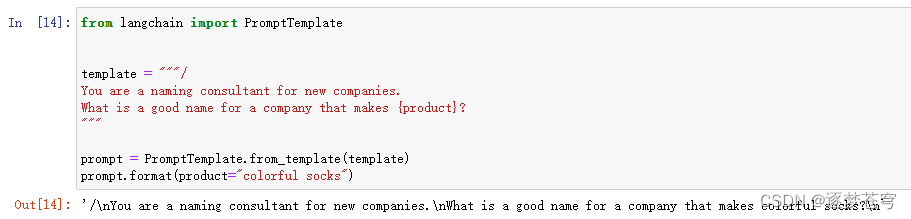

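What is a prompt template?

A prompt template is a reproducible way to generate a prompt. It contains a text string (the "template") that can take a set of parameters from the end user and generate a prompt.

A prompt template can contain:

● instructions to the language model,

● a set of few-shot examples to help the language model generate a better response,

● a question to the language model.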
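The three kinds of content listed above can be combined with plain string formatting. The sketch below uses a hypothetical `build_prompt` helper, which is not a LangChain function. It shows how an instruction, a few examples, and a new question end up in a single prompt string:

```python
from typing import Dict, List


def build_prompt(instruction: str, examples: List[Dict[str, str]], question: str) -> str:
    """Assemble instruction + few-shot examples + question into one prompt (hypothetical helper)."""
    # Render each few-shot example as a Q/A pair, one per line.
    shots = "\n".join(f"Q: {ex['q']}\nA: {ex['a']}" for ex in examples)
    # Put the new question last and leave the answer blank for the model to complete.
    return f"{instruction}\n\n{shots}\n\nQ: {question}\nA:"


prompt = build_prompt(
    "Give the antonym of each word.",
    [{"q": "happy", "a": "sad"}, {"q": "tall", "a": "short"}],
    "big",
)
print(prompt)
```

A prompt template generalizes this by keeping the fixed text in one reusable string and letting only the parameters vary between calls.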

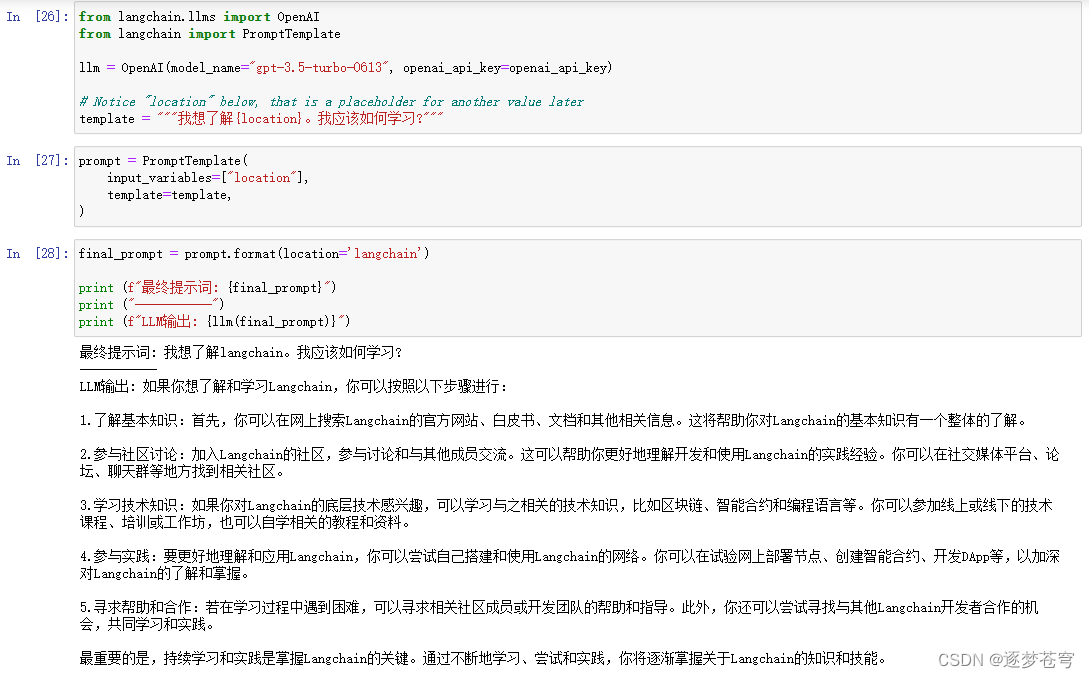

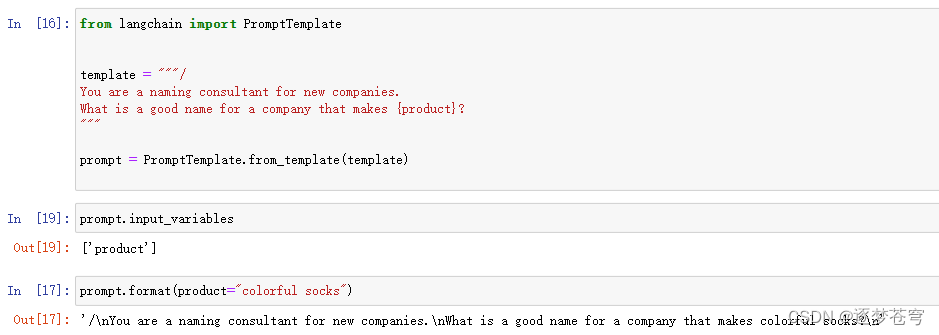

A simple example:

from langchain import PromptTemplate

# The backslash after the opening quotes prevents a leading newline in the template
template = """\
You are a naming consultant for new companies.
What is a good name for a company that makes {product}?
"""

prompt = PromptTemplate.from_template(template)
prompt.format(product="colorful socks")

2.1.1 Creating a prompt template

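You can create simple hard-coded prompts with the PromptTemplate class. A prompt template can take any number of input variables and can be formatted to produce a prompt.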

from langchain import PromptTemplate

# An example prompt with no input variables

no_input_prompt = PromptTemplate(input_variables=[], template="Tell me a joke.")

no_input_prompt.format()

# -> "Tell me a joke."

# An example prompt with one input variable

one_input_prompt = PromptTemplate(input_variables=["adjective"], template="Tell me a {adjective} joke.")

one_input_prompt.format(adjective="funny")

# -> "Tell me a funny joke."

# An example prompt with multiple input variables

multiple_input_prompt = PromptTemplate(

input_variables=["adjective", "content"],

template="Tell me a {adjective} joke about {content}."

)

multiple_input_prompt.format(adjective="funny", content="chickens")

# -> "Tell me a funny joke about chickens."

If you don't want to specify input_variables manually, you can also create a PromptTemplate with the from_template class method. LangChain then infers input_variables automatically from the template you pass in.

template = "Tell me a {adjective} joke about {content}."

prompt_template = PromptTemplate.from_template(template)

prompt_template.input_variables

# -> ['adjective', 'content']

prompt_template.format(adjective="funny", content="chickens")

# -> Tell me a funny joke about chickens.
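One way to infer the variables of an f-string-style template is Python's `string.Formatter().parse`, which splits a template into literal text and field names. The helper below is a hypothetical sketch of that idea, not LangChain's implementation. It does not treat attribute or index fields such as `{obj.attr}` specially:

```python
from string import Formatter
from typing import List


def infer_input_variables(template: str) -> List[str]:
    """Return the sorted, de-duplicated {placeholder} names in an f-string-style template."""
    # Formatter().parse yields (literal_text, field_name, format_spec, conversion) tuples;
    # field_name is None for pure literal text (including escaped {{ }}) and "" for a bare {}.
    names = {field for _, field, _, _ in Formatter().parse(template) if field}
    return sorted(names)


print(infer_input_variables("Tell me a {adjective} joke about {content}."))
# -> ['adjective', 'content']
```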

2.1.2 Chat prompt templates

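Chat models take a list of chat messages as input; this list is commonly called a prompt. These chat messages differ from a raw string (which you would pass to an LLM) because every message is associated with a role.

For example, in OpenAI's Chat Completions API, a chat message can be associated with the AI, human, or system role. The model is expected to follow instructions in system messages more closely.

LangChain provides several prompt templates that make it easier to construct and work with prompts. When querying chat models, you are encouraged to use these chat-specific prompt templates to make full use of the underlying chat model.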

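from langchain.prompts import (
    ChatPromptTemplate,
    PromptTemplate,
    SystemMessagePromptTemplate,
    AIMessagePromptTemplate,
    HumanMessagePromptTemplate,
)
from langchain.schema import (
    AIMessage,
    HumanMessage,
    SystemMessage
)

# Build the translation chat prompt that the formatting examples in 2.1.4 refer to as chat_prompt
template = "You are a helpful assistant that translates {input_language} to {output_language}."
system_message_prompt = SystemMessagePromptTemplate.from_template(template)
human_message_prompt = HumanMessagePromptTemplate.from_template("{text}")
chat_prompt = ChatPromptTemplate.from_messages([system_message_prompt, human_message_prompt])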
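What distinguishes a chat prompt from a plain string is that every message keeps its role. The stdlib-only sketch below models that idea with a hypothetical `Message` dataclass and a `format_chat` helper. Neither is part of LangChain. The sketch fills the same translation template used in 2.1.4:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Message:
    role: str      # e.g. "system", "human", "ai"
    content: str


def format_chat(templates: List[Tuple[str, str]], **kwargs: str) -> List[Message]:
    """Fill each (role, template) pair; the role stays attached to the filled text."""
    # str.format ignores keyword arguments a template does not use.
    return [Message(role, template.format(**kwargs)) for role, template in templates]


chat_template = [
    ("system", "You are a helpful assistant that translates {input_language} to {output_language}."),
    ("human", "{text}"),
]
messages = format_chat(chat_template, input_language="English",
                       output_language="French", text="I love programming.")
for m in messages:
    print(m.role, "->", m.content)
```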

2.1.3 Few-shot prompt templates

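A few-shot prompt template can be built from either a set of examples or an example selector object.

Use case

In this tutorial, we configure few-shot examples for self-ask with search.

Using an example set

Create the example set

First, create a list of few-shot examples. Each example should be a dictionary whose keys are the input variables and whose values are the values for those input variables.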

from langchain.prompts.few_shot import FewShotPromptTemplate

from langchain.prompts.prompt import PromptTemplate

examples = [

{

"question": "Who lived longer, Muhammad Ali or Alan Turing?",

"answer":

"""

Are follow up questions needed here: Yes.

Follow up: How old was Muhammad Ali when he died?

Intermediate answer: Muhammad Ali was 74 years old when he died.

Follow up: How old was Alan Turing when he died?

Intermediate answer: Alan Turing was 41 years old when he died.

So the final answer is: Muhammad Ali

"""

},

{

"question": "When was the founder of craigslist born?",

"answer":

"""

Are follow up questions needed here: Yes.

Follow up: Who was the founder of craigslist?

Intermediate answer: Craigslist was founded by Craig Newmark.

Follow up: When was Craig Newmark born?

Intermediate answer: Craig Newmark was born on December 6, 1952.

So the final answer is: December 6, 1952

"""

},

{

"question": "Who was the maternal grandfather of George Washington?",

"answer":

"""

Are follow up questions needed here: Yes.

Follow up: Who was the mother of George Washington?

Intermediate answer: The mother of George Washington was Mary Ball Washington.

Follow up: Who was the father of Mary Ball Washington?

Intermediate answer: The father of Mary Ball Washington was Joseph Ball.

So the final answer is: Joseph Ball

"""

},

{

"question": "Are both the directors of Jaws and Casino Royale from the same country?",

"answer":

"""

Are follow up questions needed here: Yes.

Follow up: Who is the director of Jaws?

Intermediate Answer: The director of Jaws is Steven Spielberg.

Follow up: Where is Steven Spielberg from?

Intermediate Answer: The United States.

Follow up: Who is the director of Casino Royale?

Intermediate Answer: The director of Casino Royale is Martin Campbell.

Follow up: Where is Martin Campbell from?

Intermediate Answer: New Zealand.

So the final answer is: No

"""

}

]

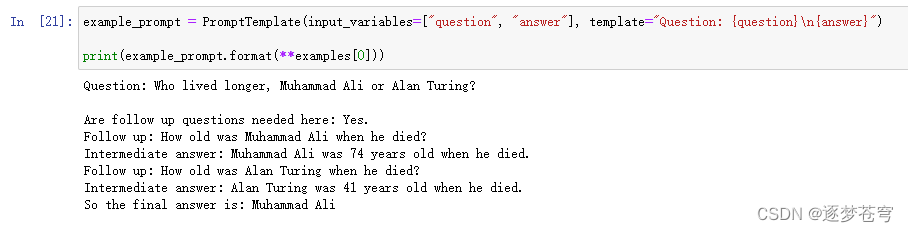

Create a formatter for the few-shot examples

Configure a formatter that renders the few-shot examples as a string. This formatter should be a PromptTemplate object.

example_prompt = PromptTemplate(input_variables=["question", "answer"], template="Question: {question}\n{answer}")

print(example_prompt.format(**examples[0]))

Feed the examples and the formatter to FewShotPromptTemplate

Finally, create a FewShotPromptTemplate object. It takes the few-shot examples and the formatter for those examples.

prompt = FewShotPromptTemplate(

examples=examples,

example_prompt=example_prompt,

suffix="Question: {input}",

input_variables=["input"]

)

print(prompt.format(input="Who was the father of Mary Ball Washington?"))

Question: Who lived longer, Muhammad Ali or Alan Turing?

Are follow up questions needed here: Yes.

Follow up: How old was Muhammad Ali when he died?

Intermediate answer: Muhammad Ali was 74 years old when he died.

Follow up: How old was Alan Turing when he died?

Intermediate answer: Alan Turing was 41 years old when he died.

So the final answer is: Muhammad Ali

Question: When was the founder of craigslist born?

Are follow up questions needed here: Yes.

Follow up: Who was the founder of craigslist?

Intermediate answer: Craigslist was founded by Craig Newmark.

Follow up: When was Craig Newmark born?

Intermediate answer: Craig Newmark was born on December 6, 1952.

So the final answer is: December 6, 1952

Question: Who was the maternal grandfather of George Washington?

Are follow up questions needed here: Yes.

Follow up: Who was the mother of George Washington?

Intermediate answer: The mother of George Washington was Mary Ball Washington.

Follow up: Who was the father of Mary Ball Washington?

Intermediate answer: The father of Mary Ball Washington was Joseph Ball.

So the final answer is: Joseph Ball

Question: Are both the directors of Jaws and Casino Royale from the same country?

Are follow up questions needed here: Yes.

Follow up: Who is the director of Jaws?

Intermediate Answer: The director of Jaws is Steven Spielberg.

Follow up: Where is Steven Spielberg from?

Intermediate Answer: The United States.

Follow up: Who is the director of Casino Royale?

Intermediate Answer: The director of Casino Royale is Martin Campbell.

Follow up: Where is Martin Campbell from?

Intermediate Answer: New Zealand.

So the final answer is: No

Question: Who was the father of Mary Ball Washington?
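Conceptually, formatting a FewShotPromptTemplate renders every example with example_prompt and then appends the suffix, filled with the user input. The sketch below uses a hypothetical plain-Python helper; `format_few_shot` is not LangChain's API. It is shortened to two examples with abbreviated answers, and it assumes the pieces are joined by a blank line:

```python
from typing import Dict, List


def format_few_shot(examples: List[Dict[str, str]], example_template: str,
                    suffix: str, prefix: str = "", separator: str = "\n\n",
                    **kwargs: str) -> str:
    """Render an optional prefix, every example, then the suffix filled with the user input."""
    pieces = [prefix] if prefix else []
    pieces += [example_template.format(**ex) for ex in examples]
    pieces.append(suffix.format(**kwargs))
    return separator.join(pieces)


examples = [
    {"question": "Who lived longer, Muhammad Ali or Alan Turing?",
     "answer": "So the final answer is: Muhammad Ali"},
    {"question": "When was the founder of craigslist born?",
     "answer": "So the final answer is: December 6, 1952"},
]
prompt = format_few_shot(examples, "Question: {question}\n{answer}", "Question: {input}",
                         input="Who was the father of Mary Ball Washington?")
print(prompt)
```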

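Using an example selector

Feed the examples into an ExampleSelector

We reuse the example set and the formatter from the previous section. However, instead of feeding the examples directly to the FewShotPromptTemplate object, we feed them to an ExampleSelector object.

In this tutorial, we use the SemanticSimilarityExampleSelector class. It selects few-shot examples based on their similarity to the input. It uses an embedding model to compute the similarity between the input and the few-shot examples, and a vector store to perform the nearest-neighbor search.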

from langchain.prompts.example_selector import SemanticSimilarityExampleSelector

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

example_selector = SemanticSimilarityExampleSelector.from_examples(

# This is the list of examples available to select from.

examples,

# This is the embedding class used to produce embeddings which are used to measure semantic similarity.

OpenAIEmbeddings(),

# This is the VectorStore class that is used to store the embeddings and do a similarity search over.

Chroma,

# This is the number of examples to produce.

k=1

)

# Select the most similar example to the input.
question = "Who was the father of Mary Ball Washington?"
selected_examples = example_selector.select_examples({"question": question})
print(f"Examples most similar to the input: {question}")
for example in selected_examples:
    print("\n")
    for k, v in example.items():
        print(f"{k}: {v}")

Running Chroma using direct local API.

Using DuckDB in-memory for database. Data will be transient.

Examples most similar to the input: Who was the father of Mary Ball Washington?

question: Who was the maternal grandfather of George Washington?

answer:

Are follow up questions needed here: Yes.

Follow up: Who was the mother of George Washington?

Intermediate answer: The mother of George Washington was Mary Ball Washington.

Follow up: Who was the father of Mary Ball Washington?

Intermediate answer: The father of Mary Ball Washington was Joseph Ball.

So the final answer is: Joseph Ball
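The selection step above boils down to three moves: embed everything, rank by cosine similarity, and keep the top k. The sketch below replaces OpenAIEmbeddings and Chroma with a toy bag-of-words vector and an in-memory sort. `embed`, `cosine` and `select_most_similar` are hypothetical helpers, and word counts are only a crude stand-in for real semantic embeddings. With the four questions from the example set, it also picks the George Washington question:

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(u: Counter, v: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * v[word] for word, count in u.items())  # missing words count as 0
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_most_similar(examples: List[Dict[str, str]], query: str,
                        key: str, k: int = 1) -> List[Dict[str, str]]:
    """Return the k examples whose `key` field is most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(examples, key=lambda ex: cosine(query_vec, embed(ex[key])), reverse=True)
    return ranked[:k]


examples = [
    {"question": "Who lived longer, Muhammad Ali or Alan Turing?"},
    {"question": "When was the founder of craigslist born?"},
    {"question": "Who was the maternal grandfather of George Washington?"},
    {"question": "Are both the directors of Jaws and Casino Royale from the same country?"},
]
best = select_most_similar(examples, "Who was the father of Mary Ball Washington?", key="question")
print(best[0]["question"])
```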

Feed the example selector to FewShotPromptTemplate

Finally, create a FewShotPromptTemplate object. It takes the example selector and the formatter for the few-shot examples.

prompt = FewShotPromptTemplate(

example_selector=example_selector,

example_prompt=example_prompt,

suffix="Question: {input}",

input_variables=["input"]

)

print(prompt.format(input="Who was the father of Mary Ball Washington?"))

Question: Who was the maternal grandfather of George Washington?

Are follow up questions needed here: Yes.

Follow up: Who was the mother of George Washington?

Intermediate answer: The mother of George Washington was Mary Ball Washington.

Follow up: Who was the father of Mary Ball Washington?

Intermediate answer: The father of Mary Ball Washington was Joseph Ball.

So the final answer is: Joseph Ball

Question: Who was the father of Mary Ball Washington?

2.1.4 Formatting template output

The output of chat_prompt's format methods can be used as a string, as a list of messages, or as a ChatPromptValue.

As a string:

output = chat_prompt.format(input_language="English",output_language="French", text="I love programming.")

output

'System: You are a helpful assistant that translates English to French.\nHuman: I love programming.'

# or alternatively

output_2 = chat_prompt.format_prompt(input_language="English", output_language="French", text="I love programming.").to_string()

assert output == output_2

As a ChatPromptValue:

chat_prompt.format_prompt(input_language="English", output_language="French", text="I love programming.")

ChatPromptValue(messages=[SystemMessage(content='You are a helpful assistant that translates English to French.', additional_kwargs={}), HumanMessage(content='I love programming.', additional_kwargs={})])

As a list of message objects:

chat_prompt.format_prompt(input_language="English", output_language="French", text="I love programming.").to_messages()

[SystemMessage(content='You are a helpful assistant that translates English to French.', additional_kwargs={}),

HumanMessage(content='I love programming.', additional_kwargs={})

]
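The three output forms differ only in how the same formatted messages are presented. A minimal sketch, assuming a hypothetical `PromptValueSketch` class (not LangChain's ChatPromptValue), shows a single object that can give chat models a message list and give plain LLMs one string in the "Role: content" layout printed above:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    role: str      # display name, e.g. "System" or "Human"
    content: str


@dataclass
class PromptValueSketch:
    """Hypothetical stand-in for a formatted chat prompt (not LangChain's ChatPromptValue)."""
    messages: List[Message]

    def to_messages(self) -> List[Message]:
        # Chat models consume the structured list directly.
        return list(self.messages)

    def to_string(self) -> str:
        # Plain LLMs need one string: prefix each message with its role, one per line.
        return "\n".join(f"{m.role}: {m.content}" for m in self.messages)


value = PromptValueSketch([
    Message("System", "You are a helpful assistant that translates English to French."),
    Message("Human", "I love programming."),
])
print(value.to_string())
```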

2.1.5 Template formats

By default, PromptTemplate treats the template you provide as a Python f-string. You can specify a different template format with the template_format argument:

# Make sure jinja2 is installed before running this

jinja2_template = "Tell me a {{ adjective }} joke about {{ content }}"

prompt_template = PromptTemplate.from_template(template=jinja2_template, template_format="jinja2")

prompt_template.format(adjective="funny", content="chickens")

# -> Tell me a funny joke about chickens.

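At the time of writing, PromptTemplate supports only the jinja2 and f-string template formats. If you would like to use another template format, feel free to open an issue on the GitHub page.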
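The two formats differ in placeholder syntax: f-strings use `{name}` and Jinja2 uses `{{ name }}`. The toy `render` function below is a hypothetical illustration. Its "jinja2" branch only performs plain `{{ name }}` substitution with a regular expression. It does not support Jinja2 filters, loops or conditionals, so use the real jinja2 package for anything beyond that:

```python
import re


def render(template: str, template_format: str = "f-string", **kwargs: str) -> str:
    """Fill a template written in one of two placeholder syntaxes (toy version)."""
    if template_format == "f-string":
        # Single braces: {name}
        return template.format(**kwargs)
    if template_format == "jinja2":
        # Only plain {{ name }} substitution; real Jinja2 is far richer.
        return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: str(kwargs[m.group(1)]), template)
    raise ValueError(f"Unsupported template format: {template_format}")


print(render("Tell me a {adjective} joke about {content}.", adjective="funny", content="chickens"))
print(render("Tell me a {{ adjective }} joke about {{ content }}", "jinja2",
             adjective="funny", content="chickens"))
```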

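2.1.6 Types of MessagePromptTemplate

LangChain provides different types of MessagePromptTemplate. The most commonly used are AIMessagePromptTemplate, SystemMessagePromptTemplate and HumanMessagePromptTemplate, which create an AI message, a system message and a human message, respectively.

However, when a chat model supports messages with arbitrary roles, you can use ChatMessagePromptTemplate, which lets you specify the role name.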

from langchain.prompts import ChatMessagePromptTemplate

prompt = "May the {subject} be with you"

chat_message_prompt = ChatMessagePromptTemplate.from_template(role="Jedi", template=prompt)

chat_message_prompt.format(subject="force")

ChatMessage(content='May the force be with you', additional_kwargs={}, role='Jedi')

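LangChain also provides MessagesPlaceholder, which gives you full control over which messages are rendered during formatting. This is useful when you are unsure which role to use for a message prompt template, or when you want to insert a list of messages during formatting.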

from langchain.prompts import MessagesPlaceholder

human_prompt = "Summarize our conversation so far in {word_count} words."

human_message_template = HumanMessagePromptTemplate.from_template(human_prompt)

chat_prompt = ChatPromptTemplate.from_messages([MessagesPlaceholder(variable_name="conversation"), human_message_template])

human_message = HumanMessage(content="What is the best way to learn programming?")

ai_message = AIMessage(content="""\

1. Choose a programming language: Decide on a programming language that you want to learn.

2. Start with the basics: Familiarize yourself with the basic programming concepts such as variables, data types and control structures.

3. Practice, practice, practice: The best way to learn programming is through hands-on experience\

""")

chat_prompt.format_prompt(conversation=[human_message, ai_message], word_count="10").to_messages()

[HumanMessage(content='What is the best way to learn programming?', additional_kwargs={}),

AIMessage(content='1. Choose a programming language: Decide on a programming language \

that you want to learn. \n\n2. Start with the basics: Familiarize yourself \

with the basic programming concepts such as variables, data types and control \

structures.\n\n3. Practice, practice, practice: The best way to learn programming\

is through hands-on experience', additional_kwargs={}),

HumanMessage(content='Summarize our conversation so far in 10 words.', additional_kwargs={})

]
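The placeholder idea can be sketched without LangChain. Here a template is a list of parts. Each part is either a fixed (role, template) pair or a slot that gets replaced by a whole list of messages at format time. `Placeholder` and `format_messages` are hypothetical names. Messages are plain (role, content) tuples, so any role string, for example "Jedi", passes through unchanged:

```python
from typing import List, Sequence, Tuple, Union

Message = Tuple[str, str]  # (role, content); roles are free-form strings such as "human", "ai" or "Jedi"


class Placeholder:
    """Hypothetical marker for a slot that is filled with a whole list of messages."""

    def __init__(self, variable_name: str) -> None:
        self.variable_name = variable_name


def format_messages(parts: Sequence[Union[Placeholder, Message]], **kwargs) -> List[Message]:
    """Expand placeholders into message lists and fill the (role, template) pairs."""
    out: List[Message] = []
    for part in parts:
        if isinstance(part, Placeholder):
            # Splice in the caller's messages unchanged, whatever roles they carry.
            out.extend(kwargs[part.variable_name])
        else:
            role, template = part
            out.append((role, template.format(**kwargs)))
    return out


history = [
    ("human", "What is the best way to learn programming?"),
    ("ai", "Practice, practice, practice."),
]
messages = format_messages(
    [Placeholder("conversation"), ("human", "Summarize our conversation so far in {word_count} words.")],
    conversation=history,
    word_count="10",
)
for role, content in messages:
    print(f"{role}: {content}")
```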

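2.1.7 Partial prompt templates (partial)

As with other methods, it can make sense to "partial" a prompt template: you pass in a subset of the required values to create a new prompt template that expects only the remaining values.

LangChain supports this in two ways:

● partial formatting with string values;

● partial formatting with functions that return string values.

These two approaches serve different use cases. The examples below explain the motivation for each and show how to do it in LangChain.

Partial with strings

A common reason to partially fill a prompt template is that you get some variables before others. For example, suppose a prompt template requires two variables, foo and bar. If you get the value of foo early in a chain but the value of bar only later, waiting until both values are in the same place before passing them to the prompt template can be cumbersome. Instead, you can partial the prompt template with the value of foo, pass the partial prompt template along, and use only that. Here is an example: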

from langchain.prompts import PromptTemplate

prompt = PromptTemplate(template="{foo}{bar}", input_variables=["foo", "bar"])
partial_prompt = prompt.partial(foo="foo")
print(partial_prompt.format(bar="baz"))
# -> foobaz

You can also initialize the prompt with the partial variables already filled in.

prompt = PromptTemplate(template="{foo}{bar}", input_variables=["bar"], partial_variables={"foo": "foo"})

print(prompt.format(bar="baz"))

#foobaz
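Partial formatting with a string value is the same idea as binding arguments ahead of time. As a stdlib analogy (not how LangChain implements `partial`), `functools.partial` can pre-fill `foo` on `str.format`:

```python
from functools import partial

template = "{foo}{bar}"
# Bind foo now; bar is supplied later, when it becomes available.
partial_format = partial(template.format, foo="foo")
print(partial_format(bar="baz"))  # -> foobaz
```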

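Partial with functions

The other common use is to partial with a function. Use this when you know you always want to fetch a variable in the same way. A typical example is a date or time. Suppose you have a prompt that should always contain the current date. You cannot hard-code the date in the prompt, and passing it along with the other input variables is tedious. In that case, partialing the prompt with a function that always returns the current date is very convenient.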

from datetime import datetime

def _get_datetime():
    now = datetime.now()
    return now.strftime("%m/%d/%Y, %H:%M:%S")

prompt = PromptTemplate(
    template="Tell me a {adjective} joke about the day {date}",
    input_variables=["adjective", "date"]
)
partial_prompt = prompt.partial(date=_get_datetime)
print(partial_prompt.format(adjective="funny"))
# -> Tell me a funny joke about the day 02/27/2023, 22:15:16  (timestamp depends on when you run it)

You can also initialize the prompt with the partial variables, which often makes more sense in this workflow.

prompt = PromptTemplate(
    template="Tell me a {adjective} joke about the day {date}",
    input_variables=["adjective"],
    partial_variables={"date": _get_datetime}
)
print(prompt.format(adjective="funny"))
# -> Tell me a funny joke about the day 02/27/2023, 22:15:16  (timestamp depends on when you run it)
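The key difference from string partials is that a callable is evaluated each time the prompt is formatted, not once when it is partialed. The following is a minimal sketch that assumes a hypothetical `format_with_partials` helper, which calls any callable partial value at format time:

```python
from datetime import datetime
from typing import Callable, Dict, Union

PartialValue = Union[str, Callable[[], str]]


def format_with_partials(template: str, partials: Dict[str, PartialValue], **kwargs: str) -> str:
    """Fill the template; callable partial values are called anew on every format (toy sketch)."""
    resolved = {name: value() if callable(value) else value for name, value in partials.items()}
    return template.format(**resolved, **kwargs)


def current_date() -> str:
    """Hypothetical helper returning today's date as MM/DD/YYYY."""
    return datetime.now().strftime("%m/%d/%Y")


print(format_with_partials(
    "Tell me a {adjective} joke about the day {date}",
    {"date": current_date},
    adjective="funny",
))
```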

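2.1.8 Composition (prompt_composition)

This section shows how to compose multiple prompts together, which is useful when you want to reuse parts of prompts. You can do this with a PipelinePromptTemplate, which consists of two main parts:

● Final prompt: the final prompt that is returned.

● Pipeline prompts: a list of tuples, each consisting of a string name and a prompt template. Each prompt template is formatted and then passed to later prompt templates as a variable with the same name.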

from langchain.prompts.pipeline import PipelinePromptTemplate

from langchain.prompts.prompt import PromptTemplate

full_template = """{introduction}

{example}

{start}"""

full_prompt = PromptTemplate.from_template(full_template)

introduction_template = """You are impersonating {person}."""

introduction_prompt = PromptTemplate.from_template(introduction_template)

example_template = """Here's an example of an interaction:

Q: {example_q}

A: {example_a}"""

example_prompt = PromptTemplate.from_template(example_template)

start_template = """Now, do this for real!

Q: {input}

A:"""

start_prompt = PromptTemplate.from_template(start_template)

input_prompts = [

("introduction", introduction_prompt),

("example", example_prompt),

("start", start_prompt)

]

pipeline_prompt = PipelinePromptTemplate(final_prompt=full_prompt, pipeline_prompts=input_prompts)

pipeline_prompt.input_variables

#['example_a', 'person', 'example_q', 'input']

print(pipeline_prompt.format(
    person="Elon Musk",
    example_q="What's your favorite car?",
    example_a="Tesla",
    input="What's your favorite social media site?"
))

# Output:

You are impersonating Elon Musk.

Here's an example of an interaction:

Q: What's your favorite car?

A: Tesla

Now, do this for real!

Q: What's your favorite social media site?

A:
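The pipeline above can be sketched in plain Python. Format each stage in order, store its output under the stage's name, then format the final template. The caller only supplies variables that no stage produces. `format_pipeline` and `pipeline_input_variables` are hypothetical helpers. This sketch returns the variables sorted, whereas the order LangChain reports above may differ:

```python
from string import Formatter
from typing import List, Set, Tuple


def variables(template: str) -> Set[str]:
    """Names of the {placeholders} in an f-string-style template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}


def pipeline_input_variables(final: str, pipeline: List[Tuple[str, str]]) -> List[str]:
    """Variables the caller must supply: everything used anywhere, minus names the stages produce."""
    produced = {name for name, _ in pipeline}
    needed = variables(final)
    for _, template in pipeline:
        needed |= variables(template)
    return sorted(needed - produced)


def format_pipeline(final: str, pipeline: List[Tuple[str, str]], **kwargs: str) -> str:
    values = dict(kwargs)
    for name, template in pipeline:
        # Each stage is formatted in order; its output becomes a variable for later stages.
        values[name] = template.format(**values)
    return final.format(**values)


final = "{introduction}\n\n{example}\n\n{start}"
stages = [
    ("introduction", "You are impersonating {person}."),
    ("example", "Here's an example of an interaction:\nQ: {example_q}\nA: {example_a}"),
    ("start", "Now, do this for real!\nQ: {input}\nA:"),
]
print(pipeline_input_variables(final, stages))
result = format_pipeline(final, stages, person="Elon Musk", example_q="What's your favorite car?",
                         example_a="Tesla", input="What's your favorite social media site?")
print(result)
```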

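2.1.9 Validating templates (validate_template)

In the LangChain version used in this article, PromptTemplate validates the template string by default. It checks whether input_variables matches the variables defined in template. You can disable this behavior by setting validate_template to False. Newer LangChain releases may no longer validate by default.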

template = "I am learning langchain because {reason}."

prompt_template = PromptTemplate(template=template,

input_variables=["reason", "foo"]) # ValueError due to extra variables

prompt_template = PromptTemplate(template=template,

input_variables=["reason", "foo"],

validate_template=False) # No error
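The check described above amounts to comparing two sets of names. Below is a minimal sketch with a hypothetical `validate_template` function, not LangChain's internal code. It uses `string.Formatter().parse` to find the placeholders and treats both undeclared placeholders and unused declared variables as errors:

```python
from string import Formatter
from typing import List


def validate_template(template: str, input_variables: List[str]) -> None:
    """Raise ValueError unless the declared variables match the template's placeholders."""
    found = {field for _, field, _, _ in Formatter().parse(template) if field}
    declared = set(input_variables)
    if found != declared:
        missing = sorted(found - declared)   # used in the template but not declared
        extra = sorted(declared - found)     # declared but never used
        raise ValueError(f"Template variables mismatch: missing={missing}, extra={extra}")


template = "I am learning langchain because {reason}."
validate_template(template, ["reason"])  # passes silently
try:
    validate_template(template, ["reason", "foo"])
except ValueError as err:
    print(err)  # -> Template variables mismatch: missing=[], extra=['foo']
```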

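2.2 Example selectors (example_selectors)

If you have a large number of examples, you may need to choose which ones to include in the prompt. Example selectors are the classes responsible for doing this.

The base interface is defined as follows: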

from abc import ABC, abstractmethod
from typing import Dict, List

class BaseExampleSelector(ABC):
    """Interface for selecting examples to include in prompts."""

    @abstractmethod
    def select_examples(self, input_variables: Dict[str, str]) -> List[dict]:
        """Select which examples to use based on the inputs."""

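The only method it needs to expose is select_examples. This method takes the input variables and returns a list of examples. Each concrete implementation decides for itself how those examples are selected.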

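2.2.1 Custom example selectors (ExampleSelector)

In this tutorial, we create a custom example selector that picks examples from a given list of examples.

An ExampleSelector must implement two methods:

● an add_example method, which takes an example and adds it to the ExampleSelector;

● a select_examples method, which takes input variables (meant to be the user input) and returns a list of examples to use in a few-shot prompt.

Let's implement a custom ExampleSelector that simply selects two examples at random.

Note: LangChain's documentation lists the example selector implementations it currently supports.

Implementing the custom example selector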

from langchain.prompts.example_selector.base import BaseExampleSelector
from typing import Dict, List
import numpy as np

class CustomExampleSelector(BaseExampleSelector):
    def __init__(self, examples: List[Dict[str, str]]):
        self.examples = examples

    def add_example(self, example: Dict[str, str]) -> None:
        """Add new example to store for a key."""
        self.examples.append(example)

    def select_examples(self, input_variables: Dict[str, str]) -> List[dict]:
        """Select which examples to use based on the inputs."""
        # Pick two distinct examples at random; NumPy returns them as an object array
        return np.random.choice(self.examples, size=2, replace=False)

Using the custom example selector

examples = [

{"foo": "1"},

{"foo": "2"},

{"foo": "3"}

]

# Initialize example selector.

example_selector = CustomExampleSelector(examples)

# Select examples

example_selector.select_examples({"foo": "foo"})

# -> array([{'foo': '2'}, {'foo': '3'}], dtype=object)

# Add new example to the set of examples

example_selector.add_example({"foo": "4"})

example_selector.examples

# -> [{'foo': '1'}, {'foo': '2'}, {'foo': '3'}, {'foo': '4'}]

# Select examples

example_selector.select_examples({"foo": "foo"})

# -> array([{'foo': '1'}, {'foo': '4'}], dtype=object)
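Because the selector above is random, its output changes on every call. For tests or reproducible prompts, a deterministic selector can be more practical. The hypothetical `AlternatingExampleSelector` below exposes the same two methods but keeps every other example. It does not subclass LangChain's `BaseExampleSelector`, so it runs without LangChain installed:

```python
from typing import Dict, List


class AlternatingExampleSelector:
    """Hypothetical selector exposing add_example/select_examples; keeps every other example."""

    def __init__(self, examples: List[Dict[str, str]]) -> None:
        self.examples = list(examples)  # copy so the caller's list is not mutated

    def add_example(self, example: Dict[str, str]) -> None:
        self.examples.append(example)

    def select_examples(self, input_variables: Dict[str, str]) -> List[Dict[str, str]]:
        # The inputs are ignored; positions 0, 2, 4, ... are returned in order.
        return self.examples[::2]


selector = AlternatingExampleSelector([{"foo": "1"}, {"foo": "2"}, {"foo": "3"}])
print(selector.select_examples({"foo": "foo"}))  # -> [{'foo': '1'}, {'foo': '3'}]
selector.add_example({"foo": "4"})
selector.add_example({"foo": "5"})
print(selector.select_examples({"foo": "foo"}))  # -> [{'foo': '1'}, {'foo': '3'}, {'foo': '5'}]
```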

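2.2.2 Selecting by length (length_based)

This example selector chooses which examples to use based on length. It is useful when you are worried that the prompt you build could exceed the length of the context window. For longer inputs it includes fewer examples; for shorter inputs it includes more.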

from langchain.prompts import PromptTemplate

from langchain.prompts import FewShotPromptTemplate

from langchain.prompts.example_selector import LengthBasedExampleSelector

# These are a lot of examples of a pretend task of creating antonyms.

examples = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
    {"input": "energetic", "output": "lethargic"},
    {"input": "sunny", "output": "gloomy"},
    {"input": "windy", "output": "calm"},
]

example_prompt = PromptTemplate(

input_variables=["input", "output"],

template="Input: {input}\nOutput: {output}",

)

example_selector = LengthBasedExampleSelector(

# These are the examples it has available to choose from.

examples=examples,

# This is the PromptTemplate being used to format the examples.

example_prompt=example_prompt,

# This is the maximum length that the formatted examples should be.

# Length is measured by the get_text_length function below.

max_length=25,

# This is the function used to get the length of a string, which is used

# to determine which examples to include. It is commented out because

# it is provided as a default value if none is specified.

# get_text_length: Callable[[str], int] = lambda x: len(re.split("\n| ", x))

)

dynamic_prompt = FewShotPromptTemplate(

# We provide an ExampleSelector instead of examples.

example_selector=example_selector,

example_prompt=example_prompt,

prefix="Give the antonym of every input",

suffix="Input: {adjective}\nOutput:",

input_variables=["adjective"],

)

# An example with small input, so it selects all examples.

print(dynamic_prompt.format(adjective="big"))

Give the antonym of every input

Input: happy

Output: sad

Input: tall

Output: short

Input: energetic

Output: lethargic

Input: sunny

Output: gloomy

Input: windy

Output: calm

Input: big

Output:

# An example with long input, so it selects only one example.

long_string = "big and huge and massive and large and gigantic and tall and much much much much much bigger than everything else"

print(dynamic_prompt.format(adjective=long_string))

Give the antonym of every input

Input: happy

Output: sad

Input: big and huge and massive and large and gigantic and tall and much much much much much bigger than everything else

Output:

# You can add an example to an example selector as well.

new_example = {"input": "big", "output": "small"}

dynamic_prompt.example_selector.add_example(new_example)

print(dynamic_prompt.format(adjective="enthusiastic"))

Give the antonym of every input

Input: happy

Output: sad

Input: tall

Output: short

Input: energetic

Output: lethargic

Input: sunny

Output: gloomy

Input: windy

Output: calm

Input: big

Output: small

Input: enthusiastic

Output:
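The numbers above follow from a simple greedy budget. Each formatted example costs as many words as it contains, and examples are added in order until the next one would go over `max_length` minus the length of the input. The sketch below reconstructs that rule in plain Python. It is modeled on the behavior shown here rather than copied from LangChain, and `word_count` and `select_by_length` are hypothetical names. It reproduces the three cases above: 5 examples for "big", 1 for the long string, and 6 for "enthusiastic" once a sixth example is added:

```python
import re
from typing import Dict, List


def word_count(text: str) -> int:
    """Count 'words' by splitting on newlines and spaces, like the commented-out default above."""
    return len(re.split("\n| ", text))


def select_by_length(examples: List[Dict[str, str]], example_template: str,
                     user_input: str, max_length: int) -> List[Dict[str, str]]:
    """Keep examples in order until the next one would push the total past max_length."""
    remaining = max_length - word_count(user_input)
    selected: List[Dict[str, str]] = []
    for example in examples:
        cost = word_count(example_template.format(**example))
        if remaining - cost < 0:
            break  # this example no longer fits; later examples are not tried
        selected.append(example)
        remaining -= cost
    return selected


TEMPLATE = "Input: {input}\nOutput: {output}"
antonyms = [
    {"input": "happy", "output": "sad"},
    {"input": "tall", "output": "short"},
    {"input": "energetic", "output": "lethargic"},
    {"input": "sunny", "output": "gloomy"},
    {"input": "windy", "output": "calm"},
]
long_string = ("big and huge and massive and large and gigantic and tall and "
               "much much much much much bigger than everything else")
print(len(select_by_length(antonyms, TEMPLATE, "big", 25)))        # -> 5
print(len(select_by_length(antonyms, TEMPLATE, long_string, 25)))  # -> 1
```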

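2.2.3 Selecting by similarity (similarity)

This object selects examples based on their similarity to the input. It does so by finding the examples whose embeddings have the greatest cosine similarity with the embedding of the input.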
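Written out, the selection criterion described above is the following. Here $\mathbf{q}$ is the embedding of the input and $\mathbf{e}_i$ is the embedding of example $i$; this notation is introduced only for illustration. With `k=1`, the selector returns the single example $i^{*}$. With a larger `k`, it returns the $k$ highest-scoring examples.

$$
\cos(\mathbf{q}, \mathbf{e}_i) = \frac{\mathbf{q} \cdot \mathbf{e}_i}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{e}_i \rVert},
\qquad
i^{*} = \arg\max_{i} \, \cos(\mathbf{q}, \mathbf{e}_i)
$$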

from langchain.prompts.example_selector import SemanticSimilarityExampleSelector

from langchain.vectorstores import Chroma

from langchain.embeddings import OpenAIEmbeddings

from langchain.prompts import FewShotPromptTemplate, PromptTemplate

example_prompt = PromptTemplate(

input_variables=["input", "output"],

template="Input: {input}\nOutput: {output}",

)

# These are a lot of examples of a pretend task of creating antonyms.

examples = [

{"input": "happy", "output": "sad"},

{"input": "tall", "output": "short"},

{"input": "energetic", "output": "lethargic"},

{"input": "sunny", "output": "gloomy"},

{"input": "windy", "output": "calm"},

]

example_selector = SemanticSimilarityExampleSelector.from_examples(

# This is the list of examples available to select from.

examples,

# This is the embedding class used to produce embeddings which are used to measure semantic similarity.

OpenAIEmbeddings(),

# This is the VectorStore class that is used to store the embeddings and do a similarity search over.

Chroma,

# This is the number of examples to produce.

k=1

)

similar_prompt = FewShotPromptTemplate(

# We provide an ExampleSelector instead of examples.

example_selector=example_selector,

example_prompt=example_prompt,

prefix="Give the antonym of every input",

suffix="Input: {adjective}\nOutput:",

input_variables=["adjective"],

)

Running Chroma using direct local API.

Using DuckDB in-memory for database. Data will be transient.

# Input is a feeling, so should select the happy/sad example

print(similar_prompt.format(adjective="worried"))

Give the antonym of every input

Input: happy

Output: sad

Input: worried

Output:

# Input is a measurement, so should select the tall/short example

print(similar_prompt.format(adjective="fat"))

Give the antonym of every input

Input: happy

Output: sad

Input: fat

Output:

# You can add new examples to the SemanticSimilarityExampleSelector as well

similar_prompt.example_selector.add_example({"input": "enthusiastic", "output": "apathetic"})

print(similar_prompt.format(adjective="joyful"))

Give the antonym of every input

Input: happy

Output: sad

Input: joyful

Output: