Probability Computation Algorithms

Direct Computation

Given a model $\lambda=(A,B,\pi)$ and an observation sequence $O=(o_1,o_2,\cdots,o_T)$, the task is to compute the probability $P(O|\lambda)$ that $O$ occurs. The most direct approach applies the probability formulas as they stand: enumerate every possible state sequence $I=(i_1,i_2,\cdots,i_T)$ of length $T$, compute the joint probability $P(O,I|\lambda)$ of each state sequence $I$ with the observation sequence $O$, and then sum over all possible state sequences to obtain $P(O|\lambda)$.

The probability of a state sequence $I=(i_1,i_2,\cdots,i_T)$ is

$$
\begin{aligned}
P(I|\lambda) &= P(i_1,i_2,\cdots,i_T|\lambda) \\
&= P(i_1|\lambda) \prod_{t=2}^T P(i_t|i_1,\cdots,i_{t-1},\lambda) & \text{chain rule of the joint distribution} \\
&= P(i_1|\lambda) \prod_{t=2}^T P(i_t|i_{t-1},\lambda) & \text{homogeneous Markov assumption} \\
&= \pi_{i_1} \prod_{t=2}^T a_{i_{t-1},i_t} \\
&= \pi_{i_1}a_{i_1,i_2}a_{i_2,i_3}\cdots a_{i_{T-1},i_T}
\end{aligned} \tag{10.10}
$$

In words, this is the product of the probability that the initial distribution generates the first state $i_1$, the probability of moving from $i_1$ to the second state $i_2$, and so on up to the transition into the $T$-th state $i_T$.

For a fixed state sequence $I=(i_1,i_2,\cdots,i_T)$, the probability of the observation sequence $O=(o_1,o_2,\cdots,o_T)$ is:

$$
\begin{aligned}
P(O|I,\lambda) &= P(o_1,o_2,\cdots,o_T|i_1,i_2,\cdots,i_T,\lambda) \\
&= P(o_T|o_1,o_2,\cdots,o_{T-1},i_1,i_2,\cdots,i_T,\lambda) P(o_1,o_2,\cdots,o_{T-1}|i_1,i_2,\cdots,i_T,\lambda) \\
&= P(o_T|i_T,\lambda) P(o_1,o_2,\cdots,o_{T-1}|i_1,i_2,\cdots,i_T,\lambda) & \text{observation independence assumption} \\
&= P(o_T|i_T,\lambda) P(o_{T-1}|o_1,o_2,\cdots,o_{T-2},i_1,i_2,\cdots,i_T,\lambda) P(o_1,o_2,\cdots,o_{T-2}|i_1,i_2,\cdots,i_T,\lambda) \\
&= P(o_T|i_T,\lambda) P(o_{T-1}|i_{T-1},\lambda) P(o_1,o_2,\cdots,o_{T-2}|i_1,i_2,\cdots,i_T,\lambda) \\
&= \prod_{t=1}^T P(o_t|i_t,\lambda) \\
&= \prod_{t=1}^T b_{i_t}(o_t) \\
&= b_{i_1}(o_1)b_{i_2}(o_2)\cdots b_{i_T}(o_T)
\end{aligned} \tag{10.11}
$$

This is the product of the probabilities that state $i_1$ emits observation $o_1$, state $i_2$ emits observation $o_2$, and so on until state $i_T$ emits observation $o_T$.

The probability that $O$ and $I$ occur together is then:

$$
\begin{aligned}
P(O,I|\lambda) &= P(O|I,\lambda) P(I|\lambda) \\
&= b_{i_1}(o_1)b_{i_2}(o_2)\cdots b_{i_T}(o_T) \, \pi_{i_1}a_{i_1,i_2}a_{i_2,i_3}\cdots a_{i_{T-1},i_T} \\
&= \pi_{i_1} b_{i_1}(o_1)a_{i_1,i_2}b_{i_2}(o_2)\cdots a_{i_{T-1},i_T}b_{i_T}(o_T)
\end{aligned} \tag{10.12}
$$

The expression above covers only one state sequence $I$. Summing over all possible state sequences $I$ gives the probability $P(O|\lambda)$ of the observation sequence $O$:

$$
\begin{aligned}
P(O|\lambda) &= \sum_I P(O,I|\lambda) \\
&= \sum_I P(O|I,\lambda) P(I|\lambda) \\
&= \sum_{i_1,i_2,\cdots,i_T} \pi_{i_1} b_{i_1}(o_1)a_{i_1,i_2}b_{i_2}(o_2)\cdots a_{i_{T-1},i_T}b_{i_T}(o_T)
\end{aligned} \tag{10.13}
$$

However, evaluating this formula directly is extremely expensive. The sum $\sum_{i_1,i_2,\cdots,i_T}$ ranges over $N^T$ state sequences (the sequence has length $T$ and each position can take $N$ values), and computing one term $\pi_{i_1} b_{i_1}(o_1)a_{i_1,i_2}b_{i_2}(o_2)\cdots a_{i_{T-1},i_T}b_{i_T}(o_T)$ takes $O(T)$ time, so the overall time complexity is of order $O(TN^T)$. Even $N=5$ and $T=100$ already give $5^{100}\approx 7.9\times 10^{69}$ state sequences, so this algorithm is not feasible in practice.
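The direct computation can be sketched in a few lines of Python, which also makes the $N^T$ enumeration explicit. This is only an illustrative sketch: the toy model (3 states, 2 observation symbols, $T=3$) and the names `joint_prob` and `brute_force_prob` are assumptions made for the example, not part of the text above.

```python
from itertools import product

# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]      # A[i][j] = a_{ij}, transition q_i -> q_j
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]           # B[i][k] = b_i(k), state q_i emits symbol k
pi = [0.2, 0.4, 0.4]       # initial state distribution
O = [0, 1, 0]              # observation sequence as symbol indices


def joint_prob(O, I, A, B, pi):
    """P(O, I | lambda) for one state sequence I, following (10.12)."""
    p = pi[I[0]] * B[I[0]][O[0]]
    for t in range(1, len(O)):
        p *= A[I[t - 1]][I[t]] * B[I[t]][O[t]]
    return p


def brute_force_prob(O, A, B, pi):
    """P(O | lambda) as a sum over all N**T state sequences, following (10.13)."""
    N, T = len(pi), len(O)
    return sum(joint_prob(O, I, A, B, pi)
               for I in product(range(N), repeat=T))


p_direct = brute_force_prob(O, A, B, pi)
print(p_direct)  # sums 3**3 = 27 terms
```

With $N=3$ and $T=3$ the loop visits only 27 sequences, but the count grows as $N^T$, which is why this version is useful only as a reference for checking faster algorithms on tiny inputs.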

The following sections introduce an efficient way to compute the observation sequence probability $P(O|\lambda)$: the forward-backward algorithm.

Forward Algorithm

Definition 10.2 (forward probability) Given a hidden Markov model $\lambda$, the forward probability is the probability that the partial observation sequence up to time $t$ is $o_1,o_2,\cdots,o_t$ and the state at time $t$ is $q_i$. It is written

$$
\alpha_t(i) = P(o_1,o_2,\cdots,o_t,i_t=q_i|\lambda) \tag{10.14}
$$

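In this notation, the subscript $t$ of $\alpha$ says how much of the observation sequence has been seen, and the argument $i$ says which state the model is in at time $t$.

From this definition it is clear that $\alpha_t$ can be expressed in terms of $\alpha_{t-1}$ from the previous time step, so the forward probabilities $\alpha_t(i)$ and the observation sequence probability $P(O|\lambda)$ can be computed recursively.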

Algorithm 10.2 (forward algorithm for the observation sequence probability)

Input: a hidden Markov model $\lambda$ and an observation sequence $O$;

Output: the observation sequence probability $P(O|\lambda)$.

(1) Initialization

$$
\alpha_1(i) = \pi_i b_i(o_1), \quad i=1,2,\cdots,N \tag{10.15}
$$

At $t=1$ only the observation $o_1$ has been seen, so $\alpha_1(i)$ is the joint probability of the initial state $i_1=q_i$ and the observation $o_1$: the probability $\pi_i$ that the initial state is $q_i$, multiplied by the probability $b_i(o_1)$ that state $q_i$ emits the observation $o_1$.

(2) Recursion  For $t=1,2,\cdots,T-1$,

compute the forward probability that the partial observation sequence up to time $t+1$ is $o_1,o_2,\cdots,o_t,o_{t+1}$ and the state at time $t+1$ is $q_i$.

The derivation is as follows:

$$
\begin{aligned}
\alpha_{t+1}(i) &= P(o_1,o_2,\cdots,o_t,o_{t+1},i_{t+1}=q_i|\lambda) \\
&= \sum_{j=1}^N P(o_1,o_2,\cdots,o_t,o_{t+1},i_t=q_j,i_{t+1}=q_i|\lambda) & \text{introduce } i_t=q_j \\
&= \sum_{j=1}^N P(o_{t+1}|o_1,o_2,\cdots,o_t,i_t=q_j,i_{t+1}=q_i,\lambda) P(o_1,o_2,\cdots,o_t,i_t=q_j,i_{t+1}=q_i|\lambda) \\
&= \sum_{j=1}^N P(o_{t+1}|i_{t+1}=q_i,\lambda) P(o_1,o_2,\cdots,o_t,i_t=q_j,i_{t+1}=q_i|\lambda) & \text{observation independence assumption} \\
&= \sum_{j=1}^N P(o_{t+1}|i_{t+1}=q_i,\lambda) P(i_{t+1}=q_i|o_1,o_2,\cdots,o_t,i_t=q_j,\lambda) P(o_1,o_2,\cdots,o_t,i_t=q_j|\lambda) \\
&= \sum_{j=1}^N P(o_{t+1}|i_{t+1}=q_i,\lambda) P(i_{t+1}=q_i|i_t=q_j,\lambda) P(o_1,o_2,\cdots,o_t,i_t=q_j|\lambda) & \text{homogeneous Markov assumption} \\
&= \left[ \sum_{j=1}^N P(i_{t+1}=q_i|i_t=q_j,\lambda) P(o_1,o_2,\cdots,o_t,i_t=q_j|\lambda) \right] P(o_{t+1}|i_{t+1}=q_i,\lambda) \\
&= \left[ \sum_{j=1}^N a_{ji} \alpha_t(j) \right] b_i(o_{t+1})
\end{aligned}
$$

Rearranging the last line gives the form used in the book:

$$
\alpha_{t+1}(i) = \left[\sum_{j=1}^N \alpha_t(j) a_{ji}\right] b_i(o_{t+1}), \quad i=1,2,\cdots,N \tag{10.16}
$$

(3) Termination

Since

$$
\alpha_T(i) = P(o_1,o_2,\cdots,o_T,i_T=q_i|\lambda)
$$

we have

$$
P(O|\lambda) = \sum_{i=1}^N P(O,i_T=q_i|\lambda) = \sum_{i=1}^N \alpha_T(i) \tag{10.17}
$$

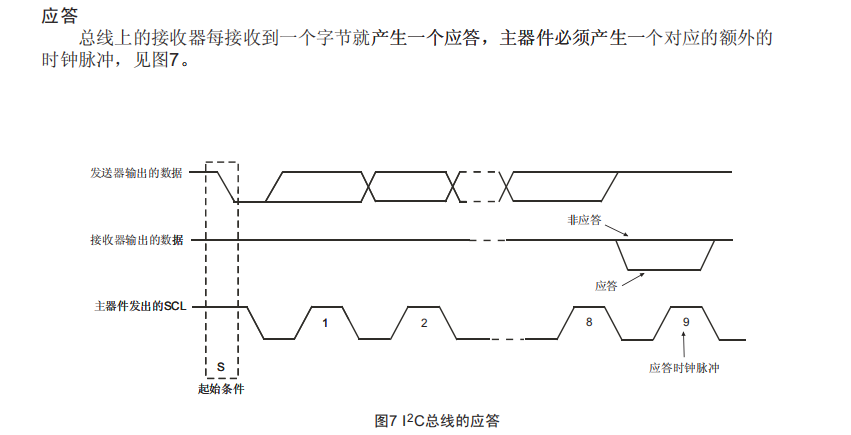

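As Figure 10.2 shows, the forward algorithm computes $P(O|\lambda)$ recursively by exploiting the path structure of the state sequences. It is efficient because it stores the forward probabilities from the previous step and reuses them, which avoids repeated computation, and then propagates them along the path structure to the whole sequence to obtain $P(O|\lambda)$. Computing $P(O|\lambda)$ with forward probabilities therefore costs $O(N^2T)$, rather than the $O(TN^T)$ of direct computation.

At each time step $t$, every state combines the forward probabilities of the $N$ states from the previous time step $t-1$, which takes $N$ multiplications. There are $N$ states per time step, so one step costs $N^2$, and with $T$ time steps the total is $O(N^2T)$.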
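Steps (1) to (3) of Algorithm 10.2 translate almost line by line into code. The sketch below assumes a small toy model with illustrative numbers and uses 0-based indices, so `alpha[t][i]` holds $\alpha_{t+1}(i)$ in the notation of the text; the function name `forward` is a choice made for the example.

```python
# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]      # A[j][i] = a_{ji}, transition q_j -> q_i
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]           # B[i][k] = b_i(k)
pi = [0.2, 0.4, 0.4]
O = [0, 1, 0]


def forward(O, A, B, pi):
    """Return (alpha, P(O | lambda)) using the forward recursion."""
    N, T = len(pi), len(O)
    # (1) Initialization, formula (10.15).
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    # (2) Recursion, formula (10.16): N sums of N terms per time step.
    for t in range(1, T):
        prev = alpha[-1]
        alpha.append([
            sum(prev[j] * A[j][i] for j in range(N)) * B[i][O[t]]
            for i in range(N)
        ])
    # (3) Termination, formula (10.17).
    return alpha, sum(alpha[-1])


alpha, p_forward = forward(O, A, B, pi)
print(alpha[0])   # forward probabilities at the first time step
print(p_forward)
```

The two nested loops over states inside a loop over time steps are where the $O(N^2T)$ cost comes from. For long sequences the raw products underflow in floating point, so practical implementations usually rescale each `alpha[t]` or work with logarithms; that refinement is left out here.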

Backward Algorithm

Definition 10.3 (backward probability) Given a hidden Markov model $\lambda$, the backward probability is the probability that, conditioned on the state at time $t$ being $q_i$, the partial observation sequence from $t+1$ to $T$ is $o_{t+1},o_{t+2},\cdots,o_T$. It is written

$$
\beta_t(i) = P(o_{t+1},o_{t+2},\cdots,o_T|i_t=q_i,\lambda) \tag{10.18}
$$

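This differs slightly from the forward probability: the hidden state $i_t=q_i$ is now a condition, and the quantity is the probability of the future observations $o_{t+1:T}$ given that state. The recursion is derived below as well.

In the same way, the backward probabilities $\beta_t(i)$ and the observation sequence probability $P(O|\lambda)$ can be obtained recursively.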

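Algorithm 10.3 (backward algorithm for the observation sequence probability)

Input: a hidden Markov model $\lambda$ and an observation sequence $O$;

Output: the observation sequence probability $P(O|\lambda)$.

(1)

$$
\beta_T(i) = 1, \quad i=1,2,\cdots,N \tag{10.19}
$$

The initial value is $1$: at time $T$ there are no future observations left, so the backward probability is set to $1$ for every state.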

(2) For $t=T-1,T-2,\cdots,1$

We want to express $\beta_t(i)$ in terms of $\beta_{t+1}(j) = P(o_{t+2},\cdots,o_T|i_{t+1}=q_j,\lambda)$.

$$
\begin{aligned}
\beta_t(i) &= P(o_{t+1},o_{t+2},\cdots,o_T|i_t=q_i,\lambda) \\
&= \sum_{j=1}^N P(o_{t+1},o_{t+2},\cdots,o_T,i_{t+1}=q_j|i_t=q_i,\lambda) \\
&= \sum_{j=1}^N P(o_{t+1},o_{t+2},\cdots,o_T|i_{t+1}=q_j,i_t=q_i,\lambda) P(i_{t+1}=q_j|i_t=q_i,\lambda) \\
&= \sum_{j=1}^N P(o_{t+1},o_{t+2},\cdots,o_T|i_{t+1}=q_j,\lambda) P(i_{t+1}=q_j|i_t=q_i,\lambda) & \text{blocking (d-separation)} \\
&= \sum_{j=1}^N P(o_{t+1}|o_{t+2},\cdots,o_T,i_{t+1}=q_j,\lambda) P(o_{t+2},\cdots,o_T|i_{t+1}=q_j,\lambda) P(i_{t+1}=q_j|i_t=q_i,\lambda) \\
&= \sum_{j=1}^N P(o_{t+1}|i_{t+1}=q_j,\lambda) P(o_{t+2},\cdots,o_T|i_{t+1}=q_j,\lambda) P(i_{t+1}=q_j|i_t=q_i,\lambda) & \text{observation independence assumption} \\
&= \sum_{j=1}^N b_j(o_{t+1}) \beta_{t+1}(j) a_{ij} \\
&= \sum_{j=1}^N a_{ij} b_j(o_{t+1}) \beta_{t+1}(j), \quad i=1,2,\cdots,N
\end{aligned} \tag{10.20}
$$

(3)

$$
\begin{aligned}
P(O|\lambda) &= P(o_1,\cdots,o_T|\lambda) \\
&= \sum_{i=1}^N P(o_1,\cdots,o_T,i_1=q_i|\lambda) \\
&= \sum_{i=1}^N P(o_1,\cdots,o_T|i_1=q_i,\lambda) P(i_1=q_i|\lambda) \\
&= \sum_{i=1}^N P(o_1|o_2,\cdots,o_T,i_1=q_i,\lambda) P(o_2,\cdots,o_T|i_1=q_i,\lambda) P(i_1=q_i|\lambda) \\
&= \sum_{i=1}^N P(o_1|i_1=q_i,\lambda) P(o_2,\cdots,o_T|i_1=q_i,\lambda) P(i_1=q_i|\lambda) & \text{observation independence assumption} \\
&= \sum_{i=1}^N b_i(o_1) \beta_1(i) \pi_i \\
&= \sum_{i=1}^N \pi_i b_i(o_1) \beta_1(i)
\end{aligned} \tag{10.21}
$$
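The three steps of Algorithm 10.3 can be sketched the same way as the forward pass. The toy model and the function name `backward` are assumptions made for the example; indices are 0-based, so `beta[t][i]` holds $\beta_{t+1}(i)$ in the notation of the text.

```python
# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]      # A[i][j] = a_{ij}
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]           # B[j][k] = b_j(k)
pi = [0.2, 0.4, 0.4]
O = [0, 1, 0]


def backward(O, A, B, pi):
    """Return (beta, P(O | lambda)) using the backward recursion."""
    N, T = len(pi), len(O)
    # (1) Initialization, formula (10.19): beta at the last time step is 1.
    beta = [[1.0] * N for _ in range(T)]
    # (2) Recursion, formula (10.20), running from the end towards the start.
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][O[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    # (3) Termination, formula (10.21).
    p = sum(pi[i] * B[i][O[0]] * beta[0][i] for i in range(N))
    return beta, p


beta, p_backward = backward(O, A, B, pi)
print(beta[0])    # backward probabilities at the first time step
print(p_backward)
```

The cost is again $O(N^2T)$, and the result should agree with the forward algorithm on the same model up to floating-point rounding, which makes the pair a convenient cross-check.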

Using the definitions of the forward and backward probabilities, the observation sequence probability $P(O|\lambda)$ can be written in the unified form

$$
P(O|\lambda) = \sum_{i=1}^N \sum_{j=1}^N \alpha_t(i) a_{ij} b_j(o_{t+1}) \beta_{t+1}(j), \quad t=1,2,\cdots,T-1 \tag{10.22}
$$

Where does this formula come from? We derive it as well. For brevity, the fixed parameter $\lambda$ is omitted.

$$
\begin{aligned}
P(O) &= P(o_1,\cdots,o_T) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_T,i_t=q_i,i_{t+1}=q_j) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_t,o_{t+2},\cdots,o_T,i_t=q_i,i_{t+1}=q_j) P(o_{t+1}|o_1,\cdots,o_t,o_{t+2},\cdots,o_T,i_t=q_i,i_{t+1}=q_j) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_t,o_{t+2},\cdots,o_T,i_t=q_i,i_{t+1}=q_j) P(o_{t+1}|i_{t+1}=q_j) & \text{observation independence assumption} \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_t,o_{t+2},\cdots,o_T,i_t=q_i,i_{t+1}=q_j) b_j(o_{t+1}) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_t,i_t=q_i,i_{t+1}=q_j) P(o_{t+2},\cdots,o_T|o_1,\cdots,o_t,i_t=q_i,i_{t+1}=q_j) b_j(o_{t+1}) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(o_1,\cdots,o_t,i_t=q_i,i_{t+1}=q_j) P(o_{t+2},\cdots,o_T|i_{t+1}=q_j) b_j(o_{t+1}) & \text{d-separation} \\
&= \sum_{i=1}^N \sum_{j=1}^N P(i_{t+1}=q_j|o_1,\cdots,o_t,i_t=q_i) P(o_1,\cdots,o_t,i_t=q_i) P(o_{t+2},\cdots,o_T|i_{t+1}=q_j) b_j(o_{t+1}) \\
&= \sum_{i=1}^N \sum_{j=1}^N P(i_{t+1}=q_j|i_t=q_i) P(o_1,\cdots,o_t,i_t=q_i) P(o_{t+2},\cdots,o_T|i_{t+1}=q_j) b_j(o_{t+1}) & \text{homogeneous Markov assumption} \\
&= \sum_{i=1}^N \sum_{j=1}^N a_{ij} \alpha_t(i) \beta_{t+1}(j) b_j(o_{t+1}) \\
&= \sum_{i=1}^N \sum_{j=1}^N \alpha_t(i) a_{ij} b_j(o_{t+1}) \beta_{t+1}(j), \quad t=1,2,\cdots,T-1
\end{aligned}
$$

This uses d-separation, introduced earlier:

$$
P(o_{t+2},\cdots,o_T|o_1,\cdots,o_t,i_t=q_i,i_{t+1}=q_j) = P(o_{t+2},\cdots,o_T|i_{t+1}=q_j)
$$

The graphical model makes this intuitive:

Every path from any of the nodes $o_{t+2},\cdots,o_T$ to any of the nodes $o_1,\cdots,o_t$ or to $i_t$ must pass through $i_{t+1}$. The node $i_{t+1}$ is observed (it appears in the condition), and every such path is head-to-tail at $i_{t+1}$, so all of them are blocked and the conditional independence holds.
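A quick numerical check of formula (10.22) is to evaluate its right-hand side at every admissible $t$ and confirm that the value does not depend on $t$. The sketch below does this on a toy model; the numbers and the helper names `forward`, `backward` and `unified_prob` are assumptions made for the example, and indices are 0-based.

```python
# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]
pi = [0.2, 0.4, 0.4]
O = [0, 1, 0]
N, T = len(pi), len(O)


def forward(O, A, B, pi):
    """alpha[t][i] via formulas (10.15) and (10.16)."""
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, T):
        alpha.append([
            sum(alpha[t - 1][j] * A[j][i] for j in range(N)) * B[i][O[t]]
            for i in range(N)
        ])
    return alpha


def backward(O, A, B):
    """beta[t][i] via formulas (10.19) and (10.20)."""
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][O[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    return beta


def unified_prob(t, alpha, beta):
    """Right-hand side of (10.22) at 0-based time index t."""
    return sum(
        alpha[t][i] * A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
        for i in range(N) for j in range(N)
    )


alpha, beta = forward(O, A, B, pi), backward(O, A, B)
values = [unified_prob(t, alpha, beta) for t in range(T - 1)]
print(values)  # one value per t; they should all agree
```

Each entry of `values` is the same probability $P(O|\lambda)$ computed by cutting the sequence at a different position, so any disagreement beyond rounding error would point to a bug in one of the two recursions.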

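Computing Some Probabilities and Expectations

Using the forward and backward probabilities, we can obtain formulas for probabilities that involve a single state and a pair of states.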

1. Given the model $\lambda$ and the observation $O$, the probability of being in state $q_i$ at time $t$. Write

$$
\gamma_t(i) = P(i_t=q_i|O,\lambda) \tag{10.23}
$$

It can be computed from the forward and backward probabilities:

$$
\gamma_t(i) = P(i_t=q_i|O,\lambda) = \frac{P(i_t=q_i,O|\lambda)}{P(O|\lambda)}
$$

Again, for brevity, the known parameter $\lambda$ is omitted.

$$
\begin{aligned}
\gamma_t(i) &= P(i_t=q_i|O) \\
&= \frac{P(i_t=q_i,O)}{P(O)} \\
&= \frac{1}{P(O)} P(i_t=q_i) P(O|i_t=q_i) \\
&= \frac{P(i_t=q_i)}{P(O)} P(o_1,\cdots,o_t|i_t=q_i) P(o_{t+1},\cdots,o_T|i_t=q_i) & \text{d-separation} \\
&= \frac{1}{P(O)} P(o_1,\cdots,o_t,i_t=q_i) P(o_{t+1},\cdots,o_T|i_t=q_i) \\
&= \frac{\alpha_t(i)\beta_t(i)}{P(O)} \\
&= \frac{\alpha_t(i)\beta_t(i)}{\sum_{j=1}^N P(O,i_t=q_j)} \\
&= \frac{\alpha_t(i)\beta_t(i)}{\sum_{j=1}^N \alpha_t(j)\beta_t(j)}
\end{aligned} \tag{10.24}
$$

The key step here is $P(O|i_t=q_i) = P(o_1,\cdots,o_t|i_t=q_i) P(o_{t+1},\cdots,o_T|i_t=q_i)$.

How is it obtained? Once more by the d-separation rule. If that is still not obvious, expanding one more step makes it clear:

$$
\begin{aligned}
P(O|i_t=q_i) &= P(o_1,\cdots,o_T|i_t=q_i) \\
&= P(o_1,\cdots,o_t|i_t=q_i) P(o_{t+1},\cdots,o_T|i_t=q_i,o_1,\cdots,o_t) \\
&= P(o_1,\cdots,o_t|i_t=q_i) P(o_{t+1},\cdots,o_T|i_t=q_i) & \text{d-separation}
\end{aligned}
$$

Here $P(o_{t+1},\cdots,o_T|i_t=q_i,o_1,\cdots,o_t) = P(o_{t+1},\cdots,o_T|i_t=q_i)$ follows from d-separation.

Every path from any of the nodes $o_{t+1},\cdots,o_T$ to any of the nodes $o_1,\cdots,o_t$ must pass through $i_t$. The node $i_t$ is observed, and each such path is either head-to-tail at $i_t$ or, for the paths that end at $o_t$, tail-to-tail at $i_t$. Both kinds of path are blocked by an observed node, so the conditional independence holds.
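Formula (10.24) says that $\gamma_t(i)$ is just the product $\alpha_t(i)\beta_t(i)$ normalized over the states. The sketch below computes it on a toy model; the numbers and the names `forward`, `backward` and `state_posteriors` are assumptions made for the example, with 0-based indices.

```python
# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]
pi = [0.2, 0.4, 0.4]
O = [0, 1, 0]
N, T = len(pi), len(O)


def forward(O, A, B, pi):
    """alpha[t][i] via formulas (10.15) and (10.16)."""
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, T):
        alpha.append([
            sum(alpha[t - 1][j] * A[j][i] for j in range(N)) * B[i][O[t]]
            for i in range(N)
        ])
    return alpha


def backward(O, A, B):
    """beta[t][i] via formulas (10.19) and (10.20)."""
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][O[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    return beta


def state_posteriors(alpha, beta):
    """gamma[t][i] = P(i_t = q_i | O, lambda), formula (10.24)."""
    gamma = []
    for t in range(T):
        joint = [alpha[t][i] * beta[t][i] for i in range(N)]  # P(O, i_t = q_i)
        total = sum(joint)                                    # equals P(O)
        gamma.append([x / total for x in joint])
    return gamma


gamma = state_posteriors(forward(O, A, B, pi), backward(O, A, B))
for t, row in enumerate(gamma):
    print(t, row)  # each row is a distribution over the N states
```

Normalizing by the sum over $j$ rather than by a separately computed $P(O|\lambda)$ keeps each row summing to one even when rounding errors creep into the two recursions.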

2. Given the model $\lambda$ and the observation $O$, the probability of being in state $q_i$ at time $t$ and in state $q_j$ at time $t+1$. Write

$$
\xi_t(i,j) = P(i_t=q_i,i_{t+1}=q_j|O,\lambda) \tag{10.25}
$$

It can be computed from the forward and backward probabilities:

$$
\begin{aligned}
\xi_t(i,j) &= \frac{P(i_t=q_i,i_{t+1}=q_j,O)}{P(O)} \\
&= \frac{P(i_t=q_i,i_{t+1}=q_j,O)}{\sum_{i=1}^N \sum_{j=1}^N P(i_t=q_i,i_{t+1}=q_j,O)}
\end{aligned}
$$

The identity $P(i_t=q_i,i_{t+1}=q_j,O) = \alpha_t(i)a_{ij}b_j(o_{t+1})\beta_{t+1}(j)$ was already established term by term in the derivation of formula (10.22), so we use it here directly.

Therefore

$$
\xi_t(i,j) = \frac{\alpha_t(i)a_{ij}b_j(o_{t+1})\beta_{t+1}(j)}{\sum_{i=1}^N \sum_{j=1}^N \alpha_t(i)a_{ij}b_j(o_{t+1})\beta_{t+1}(j)} \tag{10.26}
$$
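Formula (10.26) can be sketched in the same style as the earlier quantities. The toy model and the names `forward`, `backward` and `pair_posteriors` are assumptions made for the example; indices are 0-based, so `xi[t][i][j]` corresponds to $\xi_{t+1}(i,j)$ in the notation of the text.

```python
# Toy model; the numbers are illustrative assumptions.
A = [[0.5, 0.2, 0.3],
     [0.3, 0.5, 0.2],
     [0.2, 0.3, 0.5]]
B = [[0.5, 0.5],
     [0.4, 0.6],
     [0.7, 0.3]]
pi = [0.2, 0.4, 0.4]
O = [0, 1, 0]
N, T = len(pi), len(O)


def forward(O, A, B, pi):
    """alpha[t][i] via formulas (10.15) and (10.16)."""
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, T):
        alpha.append([
            sum(alpha[t - 1][j] * A[j][i] for j in range(N)) * B[i][O[t]]
            for i in range(N)
        ])
    return alpha


def backward(O, A, B):
    """beta[t][i] via formulas (10.19) and (10.20)."""
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(
                A[i][j] * B[j][O[t + 1]] * beta[t + 1][j] for j in range(N)
            )
    return beta


def pair_posteriors(alpha, beta):
    """xi[t][i][j] = P(i_t = q_i, i_{t+1} = q_j | O, lambda), formula (10.26)."""
    xi = []
    for t in range(T - 1):
        joint = [[alpha[t][i] * A[i][j] * B[j][O[t + 1]] * beta[t + 1][j]
                  for j in range(N)] for i in range(N)]
        total = sum(sum(row) for row in joint)   # equals P(O) by (10.22)
        xi.append([[x / total for x in row] for row in joint])
    return xi


alpha, beta = forward(O, A, B, pi), backward(O, A, B)
xi = pair_posteriors(alpha, beta)
print(xi[0])  # N x N table of transition posteriors at the first step
```

Summing `xi[t][i][j]` over `j` recovers the single-state posterior $\gamma_t(i)$ of formula (10.24), which is a convenient consistency check between the two quantities.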