This post only records my own results; it does not include complete deployment code.

Contents

Pre

1. OpenCV DNN C++ Deployment

2. ONNX Runtime C++ Deployment

Pre

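The key thing to know is that the YOLOv8 instance segmentation output differs from the YOLOv5 7.0 one:

output0: float32[1,116,8400]. The 116 channels are 4 box coordinates + 80 class scores + 32 mask coefficients.

output1: float32[1,32,160,160]. Each image gets one set of mask prototypes, and the prototypes cover the whole image.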
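
The shapes above can be reproduced with a little arithmetic. The following is only a sketch, assuming the 80-class COCO model and a 640x640 input (neither is stated explicitly in this post, but both are consistent with 116, 8400 and 160); the names `countCandidates` and `protoSize` are made up for illustration.

```cpp
#include <cassert>

// Channel layout of one YOLOv8-seg prediction (assumes the 80-class COCO model).
constexpr int kBoxDims    = 4;   // cx, cy, w, h
constexpr int kNumClasses = 80;  // per-class scores, no separate objectness score
constexpr int kMaskCoeffs = 32;  // one coefficient per mask prototype
constexpr int kChannels   = kBoxDims + kNumClasses + kMaskCoeffs;  // 116

// Candidate count: one prediction per grid cell at strides 8, 16 and 32.
int countCandidates(int inputSize) {
    assert(inputSize > 0 && inputSize % 32 == 0);
    const int strides[] = {8, 16, 32};
    int total = 0;
    for (int s : strides) {
        const int cells = inputSize / s;  // grid side length at this stride
        total += cells * cells;
    }
    return total;
}

// Side length of the prototype masks: the input downsampled by 4.
int protoSize(int inputSize) { return inputSize / 4; }
```

For a 640x640 input, `countCandidates(640)` gives 80*80 + 40*40 + 20*20 = 8400 and `protoSize(640)` gives 160, matching output0 and output1.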

1. OpenCV DNN C++ Deployment

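So output0 has to be transposed first, and each prediction now has 116 values instead of 117 (YOLOv8 drops the objectness score that YOLOv5 had). Everything else is essentially the same as YOLOv5 instance segmentation: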

    net.setInput(blob);
    std::vector<cv::Mat> net_output_img;
    vector<string> output_layer_names{ "output0","output1" };
    net.forward(net_output_img, output_layer_names); //get outputs

    std::vector<int> class_ids;// res-class_id
    std::vector<float> confidences;// res-conf
    std::vector<cv::Rect> boxes;// res-box
    std::vector<vector<float>> picked_proposals; //output0[:,:, 4 + _className.size():net_width]===> for mask
    int net_width = _className.size() + 4 + _segChannels;

    // transpose
    Mat output0=Mat( Size(net_output_img[0].size[2], net_output_img[0].size[1]), CV_32F, (float*)net_output_img[0].data).t(); //[bs,116,8400]=>[bs,8400,116]
    int rows = output0.rows;
    float* pdata = (float*)output0.data;
    for (int r = 0; r < rows; ++r) {
        // class scores start right after the 4 box values (no objectness score)
        cv::Mat scores(1, _className.size(), CV_32FC1, pdata + 4);
        Point classIdPoint;
        double max_class_socre;
        minMaxLoc(scores, 0, &max_class_socre, 0, &classIdPoint);
        max_class_socre = (float)max_class_socre;
        if (max_class_socre >= _classThreshold) {
            // keep the 32 mask coefficients of this candidate
            vector<float> temp_proto(pdata + 4 + _className.size(), pdata + net_width);
            picked_proposals.push_back(temp_proto);
            //rect [x,y,w,h]
            float x = (pdata[0] - params[2]) / params[0];
            float y = (pdata[1] - params[3]) / params[1];
            float w = pdata[2] / params[0];
            float h = pdata[3] / params[1];
            int left = MAX(int(x - 0.5 * w + 0.5), 0);
            int top = MAX(int(y - 0.5 * h + 0.5), 0);
            class_ids.push_back(classIdPoint.x);
            confidences.push_back(max_class_socre);
            boxes.push_back(Rect(left, top, int(w + 0.5), int(h + 0.5)));
        }
        pdata += net_width;//next line
    }

    //NMS
    vector<int> nms_result;
    NMSBoxes(boxes, confidences, _classThreshold, _nmsThreshold, nms_result);
    std::vector<vector<float>> temp_mask_proposals;
    Rect holeImgRect(0, 0, srcImg.cols, srcImg.rows);
    for (int i = 0; i < nms_result.size(); ++i) {
        int idx = nms_result[i];
        OutputSeg result;
        result.id = class_ids[idx];
        result.confidence = confidences[idx];
        result.box = boxes[idx] & holeImgRect; // clip the box to the image
        temp_mask_proposals.push_back(picked_proposals[idx]);
        output.push_back(result);
    }

    MaskParams mask_params;
    mask_params.params = params;
    mask_params.srcImgShape = srcImg.size();
    for (int i = 0; i < temp_mask_proposals.size(); ++i) {
        GetMask2(Mat(temp_mask_proposals[i]).t(), net_output_img[1], output[i], mask_params);
    }
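
`GetMask2` is not shown in this post. As a rough idea of what such a step typically does, here is a minimal sketch of the usual YOLO-style mask assembly: multiply the 32 coefficients with the 32 prototypes, apply a sigmoid, and threshold. The function name `assembleMask`, the 0.5 threshold and the flat channel-major prototype layout are assumptions for illustration; upscaling to the source image and cropping to the box are left out.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Combines mask coefficients with prototype masks into a binary mask at
// prototype resolution. `protos` is channel-major: [numProtos, protoArea],
// where protoArea = protoH * protoW (160 * 160 for output1 above).
std::vector<std::uint8_t> assembleMask(const std::vector<float>& coeffs,
                                       const std::vector<float>& protos,
                                       int protoArea,
                                       float threshold = 0.5f) {
    assert(protoArea > 0);
    assert(protos.size() == coeffs.size() * static_cast<std::size_t>(protoArea));
    std::vector<std::uint8_t> mask(protoArea, 0);
    for (int p = 0; p < protoArea; ++p) {
        // Linear combination of all prototypes at pixel p.
        float logit = 0.f;
        for (std::size_t k = 0; k < coeffs.size(); ++k)
            logit += coeffs[k] * protos[k * protoArea + p];
        // Sigmoid turns the logit into a probability; then binarize.
        const float prob = 1.f / (1.f + std::exp(-logit));
        mask[p] = prob > threshold ? 1 : 0;
    }
    return mask;
}
```

With real data, `coeffs` would be one entry of `temp_mask_proposals` and `protos` the 32x160x160 floats of output1, so `protoArea` would be 25600.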

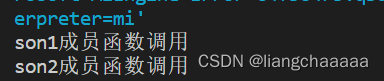
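
The box decoding in the loop above maps a center-format box from network-input pixels back to the source image. The sketch below isolates that step. It assumes `params` holds {scale x, scale y, pad x, pad y} from a letterbox-style preprocessing that this post does not show; `Box` and `toSourceBox` are hypothetical names.

```cpp
#include <algorithm>
#include <array>
#include <cassert>

struct Box { int left, top, width, height; };

// Maps a center-format box (cx, cy, w, h) in network-input pixels back to
// source-image pixels. `params` is assumed to be {scaleX, scaleY, padX, padY}.
Box toSourceBox(const float* pred, const std::array<float, 4>& params) {
    assert(pred != nullptr);
    assert(params[0] > 0.f && params[1] > 0.f);
    // Undo the padding first, then the scaling.
    const float x = (pred[0] - params[2]) / params[0];
    const float y = (pred[1] - params[3]) / params[1];
    const float w = pred[2] / params[0];
    const float h = pred[3] / params[1];
    // Convert the center to the top-left corner, round, and clamp at 0.
    const int left = std::max(static_cast<int>(x - 0.5f * w + 0.5f), 0);
    const int top  = std::max(static_cast<int>(y - 0.5f * h + 0.5f), 0);
    return {left, top, static_cast<int>(w + 0.5f), static_cast<int>(h + 0.5f)};
}
```

For example, a prediction (320, 320, 100, 50) with a scale of 0.5 and 80 pixels of vertical padding maps to a 200x100 box whose top-left corner is at (540, 430).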

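However, loading the model failed with this error: ERROR during processing node with 2 inputs and 1 outputs: [Reshape]:(/model.22/dfl/Reshape_output_0) from domain='ai.onnx'

OpenCV most likely needs to be upgraded to 4.7. I hit the same error earlier when deploying the detection model; see the CSDN post "yolov8 OpenCV DNN 部署 推理报错" by 爱钓鱼的歪猴.

I did not want to upgrade OpenCV, so I deployed with ONNX Runtime instead.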

2. ONNX Runtime C++ Deployment

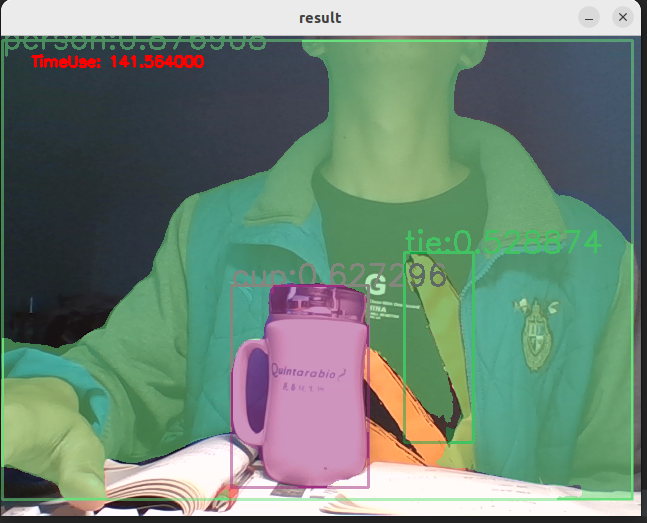
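
This post does not include the ONNX Runtime code. One point that carries over from the output layout described earlier: if output0 is read as a raw float buffer (for instance via `Ort::Value::GetTensorMutableData<float>()`), it is still channel-major, [1,116,8400]. Instead of transposing, one option is to index it with a stride. The helper below is only a sketch of that idea with a hypothetical name, not the code used for the result above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Copies candidate `i` out of a channel-major [channels, numCandidates] buffer
// into a contiguous row of `channels` floats (box, class scores, mask coeffs).
std::vector<float> gatherCandidate(const float* output, int channels,
                                   int numCandidates, int i) {
    assert(output != nullptr);
    assert(channels > 0);
    assert(i >= 0 && i < numCandidates);
    std::vector<float> row(channels);
    for (int c = 0; c < channels; ++c)
        row[c] = output[static_cast<std::size_t>(c) * numCandidates + i];
    return row;
}
```

For YOLOv8-seg, `channels` would be 116 and `numCandidates` 8400; the returned row has the same layout as one row of the transposed `output0` in the OpenCV version.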

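yolov8n-seg takes about 150 ms per inference on CPU, a bit slower than yolov5n-seg (100 ms), but the detection quality is somewhat better. Concretely, with me wearing the same jacket, yolov8n-seg reports a higher confidence!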