PyTorch: Easily Controlling Multiple CPUs/GPUs/TPUs for Accelerated Computing with Accelerate

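Contents:

- Preface
- Official example
- Controlling multiple CPUs/GPUs/TPUs
- A quick overview
- Device environment
- Imports
- Loading the data: FashionMNIST
- Creating a simple CNN model
- Training function (training only)
- Training function (training and validation)
- Training
- References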

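Preface

CPU? GPU? TPU?

Too many compute devices, and it is getting confusing? Switching environments means changing code all over the place? Not sure how to use multiple CPUs/GPUs/TPUs, or just want an easy way to do it? OK! OK! OK!

HuggingFace's Accelerate library solves these problems: with only a few lines of code changed, your training code adapts automatically to the available compute devices. Related links: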

- Official documentation: https://huggingface.co/docs/accelerate/index
- GitHub: https://github.com/huggingface/accelerate

Installation (version 0.14 or later is recommended):

    $ pip install accelerate

Now let's see how to use it.
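Since version 0.14 or later is recommended, you may want to check which version is installed. This is a minimal sketch using only the standard library; the helper names `major_minor` and `accelerate_is_recent_enough` are hypothetical, and the comparison assumes a plain `X.Y...` version string and looks only at the major and minor numbers.

```python
from importlib.metadata import PackageNotFoundError, version


def major_minor(version_string):
    """Turn a version string such as '0.18.0' into a (major, minor) tuple of ints."""
    major, minor = version_string.split(".")[:2]
    return int(major), int(minor)


def accelerate_is_recent_enough(minimum=(0, 14)):
    """Return True if the installed accelerate package is at least `minimum`.

    Assumes a plain 'X.Y...' version string; only major/minor are compared.
    """
    try:
        installed = version("accelerate")
    except PackageNotFoundError:
        # accelerate is not installed at all
        return False
    return major_minor(installed) >= minimum


print(accelerate_is_recent_enough())
```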

Official example

You can also go straight to the complete Notebook example I prepared on Kaggle. First, a rough look at what changes: remove the old `.to(device)` code, introduce `Accelerator`, wrap `model`, `optimizer` and the data with it, and replace `loss.backward()`:

    import torch
    import torch.nn.functional as F
    from datasets import load_dataset
    from accelerate import Accelerator

    accelerator = Accelerator()

    # Schematic: 'my_dataset' is a placeholder dataset name
    model = torch.nn.Transformer()
    optimizer = torch.optim.Adam(model.parameters())

    dataset = load_dataset('my_dataset')
    data = torch.utils.data.DataLoader(dataset, shuffle=True)

    # prepare() moves everything to the right device(s), so no .to(device) calls are needed
    model, optimizer, data = accelerator.prepare(model, optimizer, data)

    model.train()
    for epoch in range(10):
        for source, targets in data:
            optimizer.zero_grad()
            output = model(source)
            loss = F.cross_entropy(output, targets)
            accelerator.backward(loss)  # replaces loss.backward()
            optimizer.step()

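Controlling multiple CPUs/GPUs/TPUs

A quick overview

For a single device, changing the code as in the simple example above is all it takes. With multiple devices, a few extra steps are needed, so below is a complete PyTorch training example.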

You can compare it with the example I published earlier, "CNN image classification - FashionMNIST", or go straight to the complete Notebook example I prepared on Kaggle.

Device environment

Check the current GPUs (two Tesla T4s) with:

    $ nvidia-smi
    Thu Apr 27 10:53:26 2023
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 470.161.03   Driver Version: 470.161.03   CUDA Version: 11.4     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  Tesla T4            Off  | 00000000:00:04.0 Off |                    0 |
    | N/A   43C    P8     9W /  70W |      0MiB / 15109MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+
    |   1  Tesla T4            Off  | 00000000:00:05.0 Off |                    0 |
    | N/A   41C    P8     9W /  70W |      0MiB / 15109MiB |      0%      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+
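The same information can be checked from Python. The sketch below uses only standard `torch.cuda` calls (the helper name `list_cuda_devices` is hypothetical). On the machine above it should list two Tesla T4 devices; on a CPU-only machine it returns an empty list.

```python
import torch


def list_cuda_devices():
    """Return (index, name) pairs for every CUDA device PyTorch can see."""
    if not torch.cuda.is_available():
        return []  # CPU-only machine, or no usable CUDA driver
    return [(i, torch.cuda.get_device_name(i)) for i in range(torch.cuda.device_count())]


for index, name in list_cuda_devices():
    print(f"cuda:{index} -> {name}")
```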

Imports

Install or update Accelerate (in a notebook cell, the leading `!` runs the line as a shell command):

    !pip install --upgrade accelerate

Then import the packages:

    import torch
    from torch import nn
    from torch.utils.data import DataLoader
    from torchvision.transforms import ToTensor, Compose
    import torchvision.datasets as datasets
    from accelerate import Accelerator
    from accelerate import notebook_launcher

Loading the data: FashionMNIST

    # Training split (60,000 images)
    train_data = datasets.FashionMNIST(
        root="./data",
        train=True,
        download=True,
        transform=Compose([ToTensor()])
    )

    # Test split (10,000 images), used for validation below
    test_data = datasets.FashionMNIST(
        root="./data",
        train=False,
        download=True,
        transform=Compose([ToTensor()])
    )

    print(train_data.data.shape)  # torch.Size([60000, 28, 28])
    print(test_data.data.shape)   # torch.Size([10000, 28, 28])
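Note that `.data` holds the raw images as a uint8 tensor, which is what the two prints show; the samples the DataLoader yields have gone through `ToTensor()` first, which adds a channel dimension and scales pixel values to [0, 1]. The sketch below imitates that conversion for one image in plain PyTorch so you can see the shape the CNN actually receives. `to_tensor_like` is a hypothetical helper, not torchvision's implementation, and the random image is a stand-in for a real FashionMNIST sample.

```python
import torch


def to_tensor_like(image_uint8):
    """Imitate ToTensor() on one grayscale image stored as a (H, W) uint8 tensor:
    add a leading channel dimension and rescale [0, 255] to float32 [0.0, 1.0]."""
    return image_uint8.unsqueeze(0).to(torch.float32) / 255.0


# Random stand-in for one FashionMNIST image (real ones are 28x28 uint8)
fake_image = torch.randint(0, 256, (28, 28), dtype=torch.uint8)
x = to_tensor_like(fake_image)
print(x.shape, x.dtype)  # torch.Size([1, 28, 28]) torch.float32

# A DataLoader with batch_size=64 stacks such samples into (64, 1, 28, 28)
batch = torch.stack([x] * 64)
print(batch.shape)  # torch.Size([64, 1, 28, 28])
```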

Creating a simple CNN model

    class CNNModel(nn.Module):
        def __init__(self):
            super(CNNModel, self).__init__()
            # (1, 28, 28) -> (32, 14, 14)
            self.module1 = nn.Sequential(
                nn.Conv2d(1, 32, kernel_size=5, stride=1, padding=2),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            # (32, 14, 14) -> (64, 7, 7)
            self.module2 = nn.Sequential(
                nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2)
            )
            self.flatten = nn.Flatten()
            self.linear1 = nn.Linear(7 * 7 * 64, 64)
            self.linear2 = nn.Linear(64, 10)  # 10 FashionMNIST classes
            self.relu = nn.ReLU()

        def forward(self, x):
            out = self.module1(x)
            out = self.module2(out)
            out = self.flatten(out)
            out = self.linear1(out)
            out = self.relu(out)
            out = self.linear2(out)
            return out
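The `7 * 7 * 64` input size of `linear1` follows from the layer settings: each 5x5 convolution with `padding=2` and `stride=1` keeps the spatial size, and each 2x2 max-pool with stride 2 halves it, so 28 → 14 → 7, with 64 channels after `module2`. A small sketch using the standard output-size formula for `Conv2d`/`MaxPool2d` (dilation 1, default `ceil_mode=False`) confirms this; the helper name `out_size` is hypothetical.

```python
def out_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of Conv2d / MaxPool2d with dilation 1:
    floor((size + 2 * padding - kernel_size) / stride) + 1."""
    return (size + 2 * padding - kernel_size) // stride + 1


size = 28                                                  # FashionMNIST images are 28x28
size = out_size(size, kernel_size=5, stride=1, padding=2)  # module1 conv -> 28
size = out_size(size, kernel_size=2, stride=2)             # module1 pool -> 14
size = out_size(size, kernel_size=5, stride=1, padding=2)  # module2 conv -> 14
size = out_size(size, kernel_size=2, stride=2)             # module2 pool -> 7

flat_features = size * size * 64  # 64 channels after module2
print(flat_features)  # 3136, i.e. 7 * 7 * 64
```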

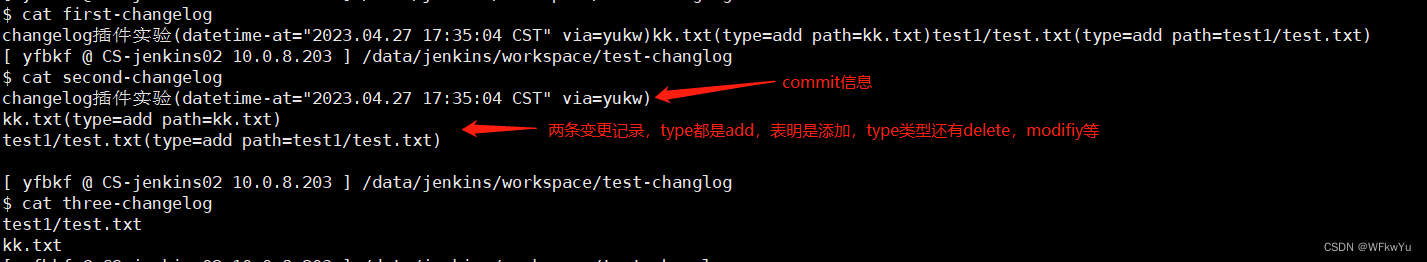

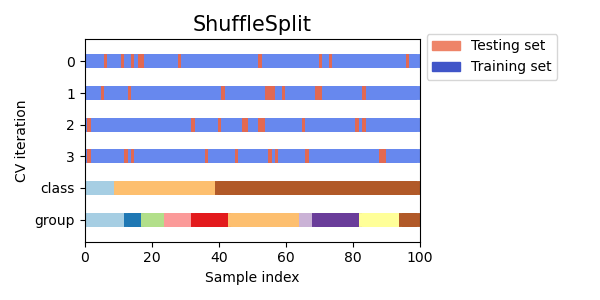

Training function (training only)

Pay attention to the accelerator-related code. For multi-device training, the code at the end of the function, after the `for epoch in range(epoch_num):` loop, is essential:

    def training_function():
        epoch_num = 4
        batch_size = 64
        learning_rate = 0.005

        train_loader = DataLoader(dataset=train_data, batch_size=batch_size, shuffle=True)
        val_loader = DataLoader(test_data, batch_size=batch_size, shuffle=True)

        model = CNNModel()
        criterion = nn.CrossEntropyLoss()
        optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

        accelerator = Accelerator()
        # Places everything on this process's device and shards the data loaders
        model, optimizer, train_loader, val_loader = accelerator.prepare(model, optimizer, train_loader, val_loader)

        for epoch in range(epoch_num):
            model.train()
            for i, (X_train, y_train) in enumerate(train_loader):
                out = model(X_train)
                loss = criterion(out, y_train)
                optimizer.zero_grad()
                accelerator.backward(loss)  # replaces loss.backward()
                optimizer.step()
                if (i + 1) % 100 == 0:
                    print(f"{accelerator.device} Train... [epoch {epoch + 1}/{epoch_num}, step {i + 1}/{len(train_loader)}]\t[loss {loss.item()}]")

        # Wait until every process has finished training
        accelerator.wait_for_everyone()
        # Remove the wrapper added by prepare(); done after the loop so training keeps the wrapped model
        model = accelerator.unwrap_model(model)
        # Use in place of torch.save: writes the file once per machine
        accelerator.save(model, "model.pth")

Training function (training and validation)

Compared with the previous code, this version adds validation. Validation needs special handling when training on multiple devices: each device evaluates only its share of the validation set, so the results from all devices have to be merged.

    def training_function():
        epoch_num = 4
        batch_size = 64
        learning_rate = 0.005

        train_loader = DataLoader(dataset=train_data, batch_size=batch_size, shuffle=True)
        val_loader = DataLoader(test_data, batch_size=batch_size, shuffle=True)

        model = CNNModel()
        criterion = nn.CrossEntropyLoss()
        optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

        accelerator = Accelerator()
        model, optimizer, train_loader, val_loader = accelerator.prepare(model, optimizer, train_loader, val_loader)

        for epoch in range(epoch_num):
            model.train()
            for i, (X_train, y_train) in enumerate(train_loader):
                out = model(X_train)
                loss = criterion(out, y_train)
                optimizer.zero_grad()
                accelerator.backward(loss)
                optimizer.step()
                if (i + 1) % 100 == 0:
                    print(f"{accelerator.device} Train... [epoch {epoch + 1}/{epoch_num}, step {i + 1}/{len(train_loader)}]\t[loss {loss.item()}]")

            model.eval()
            correct, total = 0, 0
            for X_val, y_val in val_loader:
                with torch.no_grad():
                    output = model(X_val)
                _, pred = torch.max(output, 1)
                # Collect predictions and labels from all processes;
                # duplicate samples added to even out the last batch are dropped
                pred, y_val = accelerator.gather_for_metrics((pred, y_val))
                total += y_val.size(0)
                correct += (pred == y_val).sum()
            # accelerator.print prints only on the main process, so this appears once per epoch
            accelerator.print(f'epoch {epoch + 1}/{epoch_num}, accuracy = {100 * (correct.item() / total):.2f}')

        accelerator.wait_for_everyone()
        model = accelerator.unwrap_model(model)
        accelerator.save(model, "model.pth")
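Why the gather step matters can be seen with a small pure-Python simulation. It is a simplified model, not Accelerate's code: no shuffling, every process gets the same number of full batches (padding by reusing samples from the start), and batch k goes to process k % num_processes. Under these assumptions, the 10,000 test images with `batch_size=64` on 2 processes are padded to 10,112 samples, each process sees only about half the data, and naively concatenating everything would count 112 samples twice. The function names are hypothetical; `gather_for_metrics_like` mimics the "gather, then drop the duplicates" behavior.

```python
import math


def shard_batches(num_samples, batch_size, num_processes):
    """Simplified model of splitting a non-shuffled dataset across processes.

    The index list is padded (cycling from the start) to a multiple of
    batch_size * num_processes so every process gets the same number of full
    batches; batch k then goes to process k % num_processes.
    """
    round_size = batch_size * num_processes
    padded_len = math.ceil(num_samples / round_size) * round_size
    padded = [i % num_samples for i in range(padded_len)]
    batches = [padded[i:i + batch_size] for i in range(0, padded_len, batch_size)]
    return [batches[p::num_processes] for p in range(num_processes)]


def gather_all(shards):
    """Concatenate, step by step, the batches every process handled."""
    gathered = []
    for step_batches in zip(*shards):
        for batch in step_batches:
            gathered.extend(batch)
    return gathered


def gather_for_metrics_like(shards, num_samples):
    """Like gather_all, but drop the padding duplicates at the end."""
    return gather_all(shards)[:num_samples]


shards = shard_batches(num_samples=10000, batch_size=64, num_processes=2)
print([len(s) for s in shards])                     # [79, 79] batches per process
print(sum(len(b) for b in shards[0]))               # 5056 samples seen by process 0
print(len(gather_all(shards)))                      # 10112: 112 samples counted twice
print(len(gather_for_metrics_like(shards, 10000)))  # 10000
```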

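Training

If you are training locally, simply call the training_function defined above:

    training_function()

Called this way, the function runs as a single process. To use several devices from a local script, the usual approach is to run `accelerate config` once and then start the script with `accelerate launch`.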

If you are on Kaggle/Colab, use notebook_launcher to run the training instead:

    # One process per GPU (two Tesla T4s here)
    notebook_launcher(training_function, num_processes=2)

Output on the two Tesla T4 GPUs:

    Launching training on 2 GPUs.
    cuda:0 Train... [epoch 1/4, step 100/469] [loss 0.43843933939933777]
    cuda:1 Train... [epoch 1/4, step 100/469] [loss 0.5267877578735352]
    cuda:0 Train... [epoch 1/4, step 200/469] [loss 0.39918822050094604]cuda:1 Train... [epoch 1/4, step 200/469] [loss 0.2748252749443054]
    cuda:1 Train... [epoch 1/4, step 300/469] [loss 0.54105544090271]cuda:0 Train... [epoch 1/4, step 300/469] [loss 0.34716445207595825]
    cuda:1 Train... [epoch 1/4, step 400/469] [loss 0.2694844901561737]
    cuda:0 Train... [epoch 1/4, step 400/469] [loss 0.4343942701816559]
    epoch 1/4, accuracy = 88.49
    cuda:0 Train... [epoch 2/4, step 100/469] [loss 0.19695354998111725]
    cuda:1 Train... [epoch 2/4, step 100/469] [loss 0.2911057770252228]
    cuda:0 Train... [epoch 2/4, step 200/469] [loss 0.2948791980743408]
    cuda:1 Train... [epoch 2/4, step 200/469] [loss 0.292676717042923]
    cuda:0 Train... [epoch 2/4, step 300/469] [loss 0.222089946269989]
    cuda:1 Train... [epoch 2/4, step 300/469] [loss 0.28814008831977844]
    cuda:0 Train... [epoch 2/4, step 400/469] [loss 0.3431250751018524]
    cuda:1 Train... [epoch 2/4, step 400/469] [loss 0.2546379864215851]
    epoch 2/4, accuracy = 87.31
    cuda:1 Train... [epoch 3/4, step 100/469] [loss 0.24118559062480927]cuda:0 Train... [epoch 3/4, step 100/469] [loss 0.363821804523468]
    cuda:0 Train... [epoch 3/4, step 200/469] [loss 0.36783623695373535]
    cuda:1 Train... [epoch 3/4, step 200/469] [loss 0.18346744775772095]
    cuda:0 Train... [epoch 3/4, step 300/469] [loss 0.23459288477897644]
    cuda:1 Train... [epoch 3/4, step 300/469] [loss 0.2887689769268036]
    cuda:0 Train... [epoch 3/4, step 400/469] [loss 0.3079166114330292]
    cuda:1 Train... [epoch 3/4, step 400/469] [loss 0.18255220353603363]
    epoch 3/4, accuracy = 88.46
    cuda:1 Train... [epoch 4/4, step 100/469] [loss 0.27428603172302246]
    cuda:0 Train... [epoch 4/4, step 100/469] [loss 0.17705145478248596]
    cuda:1 Train... [epoch 4/4, step 200/469] [loss 0.2811894416809082]
    cuda:0 Train... [epoch 4/4, step 200/469] [loss 0.22682836651802063]
    cuda:0 Train... [epoch 4/4, step 300/469] [loss 0.2291710525751114]
    cuda:1 Train... [epoch 4/4, step 300/469] [loss 0.32024848461151123]
    cuda:0 Train... [epoch 4/4, step 400/469] [loss 0.24648766219615936]
    cuda:1 Train... [epoch 4/4, step 400/469] [loss 0.0805584192276001]
    epoch 4/4, accuracy = 89.38
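The step counts in these logs follow from the dataset size. FashionMNIST has 60,000 training images; with `batch_size=64`, a single process runs ceil(60000 / 64) = 938 batches per epoch, while with 2 processes each one handles only its share, ceil(60000 / (64 * 2)) = 469, which is why each GPU reports `step x/469`. A quick check, assuming the last incomplete batch is kept and batches are shared evenly (the helper name `steps_per_epoch` is hypothetical):

```python
import math


def steps_per_epoch(num_samples, batch_size, num_processes=1):
    """Batches each process runs per epoch when the last partial batch is kept
    and batches are shared evenly across processes."""
    return math.ceil(num_samples / (batch_size * num_processes))


print(steps_per_epoch(60000, 64))                   # 938 on one process
print(steps_per_epoch(60000, 64, num_processes=2))  # 469 on each of two GPUs
```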

Output of another run, in which the device is reported as xla:0:

    Launching training on CPU.
    xla:0 Train... [epoch 1/4, step 100/938] [loss 0.6051161289215088]
    xla:0 Train... [epoch 1/4, step 200/938] [loss 0.27442359924316406]
    xla:0 Train... [epoch 1/4, step 300/938] [loss 0.557417631149292]
    xla:0 Train... [epoch 1/4, step 400/938] [loss 0.1840067058801651]
    xla:0 Train... [epoch 1/4, step 500/938] [loss 0.5252436399459839]
    xla:0 Train... [epoch 1/4, step 600/938] [loss 0.2718536853790283]
    xla:0 Train... [epoch 1/4, step 700/938] [loss 0.2763175368309021]
    xla:0 Train... [epoch 1/4, step 800/938] [loss 0.39897507429122925]
    xla:0 Train... [epoch 1/4, step 900/938] [loss 0.28720396757125854]
    epoch = 0, accuracy = 86.36
    xla:0 Train... [epoch 2/4, step 100/938] [loss 0.24496735632419586]
    xla:0 Train... [epoch 2/4, step 200/938] [loss 0.37713131308555603]
    xla:0 Train... [epoch 2/4, step 300/938] [loss 0.3106330633163452]
    xla:0 Train... [epoch 2/4, step 400/938] [loss 0.40438592433929443]
    xla:0 Train... [epoch 2/4, step 500/938] [loss 0.38303741812705994]
    xla:0 Train... [epoch 2/4, step 600/938] [loss 0.39199298620224]
    xla:0 Train... [epoch 2/4, step 700/938] [loss 0.38932573795318604]
    xla:0 Train... [epoch 2/4, step 800/938] [loss 0.26298171281814575]
    xla:0 Train... [epoch 2/4, step 900/938] [loss 0.21517205238342285]
    epoch = 1, accuracy = 90.07
    xla:0 Train... [epoch 3/4, step 100/938] [loss 0.366019606590271]
    xla:0 Train... [epoch 3/4, step 200/938] [loss 0.27360212802886963]
    xla:0 Train... [epoch 3/4, step 300/938] [loss 0.2014923095703125]
    xla:0 Train... [epoch 3/4, step 400/938] [loss 0.21998485922813416]
    xla:0 Train... [epoch 3/4, step 500/938] [loss 0.28129786252975464]
    xla:0 Train... [epoch 3/4, step 600/938] [loss 0.42534705996513367]
    xla:0 Train... [epoch 3/4, step 700/938] [loss 0.22158119082450867]
    xla:0 Train... [epoch 3/4, step 800/938] [loss 0.359947144985199]
    xla:0 Train... [epoch 3/4, step 900/938] [loss 0.3221997022628784]
    epoch = 2, accuracy = 90.36
    xla:0 Train... [epoch 4/4, step 100/938] [loss 0.2814193069934845]
    xla:0 Train... [epoch 4/4, step 200/938] [loss 0.16465164721012115]
    xla:0 Train... [epoch 4/4, step 300/938] [loss 0.2897304892539978]
    xla:0 Train... [epoch 4/4, step 400/938] [loss 0.13403896987438202]
    xla:0 Train... [epoch 4/4, step 500/938] [loss 0.1135573536157608]
    xla:0 Train... [epoch 4/4, step 600/938] [loss 0.14964193105697632]
    xla:0 Train... [epoch 4/4, step 700/938] [loss 0.20239461958408356]
    xla:0 Train... [epoch 4/4, step 800/938] [loss 0.23625142872333527]
    xla:0 Train... [epoch 4/4, step 900/938] [loss 0.3418393135070801]
    epoch = 3, accuracy = 90.11

References

- https://github.com/huggingface/accelerate
- https://www.kaggle.com/code/muellerzr/multi-gpu-and-accelerate
- https://github.com/huggingface/notebooks/blob/main/examples/accelerate_examples/simple_nlp_example.ipynb