I. Attention Mechanism

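Attention models have been used widely across deep learning in recent years. They show up in all kinds of tasks, from image processing and speech recognition to natural language processing. As the name suggests, attention models borrow from the way human attention works. Consider the following image.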

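The figure visualizes how people efficiently allocate their limited attention when looking at an image. Red regions mark the targets the visual system focuses on. In the scene of Figure 1, people clearly devote more attention to the person's face, the headline, and the first sentence of the article.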

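Visual attention is a brain signal-processing mechanism specific to human vision. The visual system quickly scans the whole image to find the regions that deserve focus, commonly called the focus of attention. It then devotes more attention to those regions to extract finer detail about the targets, while suppressing irrelevant information. Attention in deep learning follows the same core idea: let the network concentrate on the parts of the input that matter most. Attention is therefore one way to make a network adapt to its input.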

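In essence, attention locates the information of interest and suppresses useless information. Its output usually takes the form of a probability map or a probability feature vector. By principle, attention models fall into three main categories: spatial attention, channel attention, and mixed spatial-channel attention. This article focuses on channel attention.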

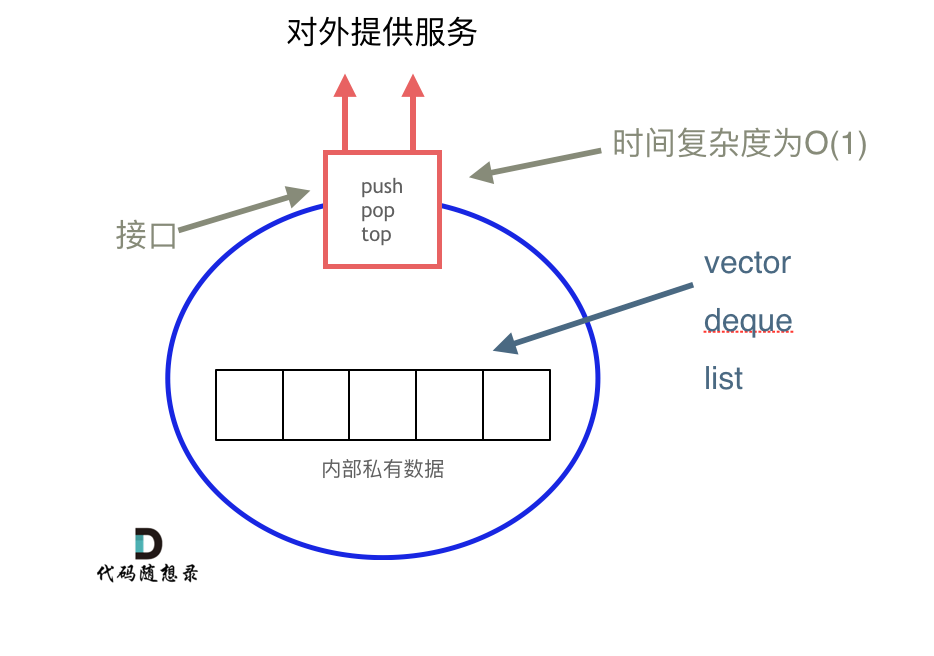
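To make the difference between the categories concrete, here is a small sketch in Python (standard library only) that applies channel attention and spatial attention to a toy C×H×W feature map. The raw scores are made-up values that a real network would learn, sigmoid is assumed as the squashing function, and the "mixed" variant simply applies both one after the other, which is only one possible way to combine them.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(fmap, channel_scores):
    """Channel attention: one weight per channel (a length-C vector),
    shared by every spatial position of that channel."""
    weights = [sigmoid(s) for s in channel_scores]
    return [[[w * v for v in row] for row in ch] for ch, w in zip(fmap, weights)]


def spatial_attention(fmap, spatial_scores):
    """Spatial attention: one weight per (h, w) position (an H x W map),
    shared by every channel."""
    weights = [[sigmoid(s) for s in row] for row in spatial_scores]
    return [[[weights[h][w] * v for w, v in enumerate(row)]
             for h, row in enumerate(ch)] for ch in fmap]


# Toy feature map with C = 2 channels, H = 2, W = 3, all values 1.0.
fmap = [[[1.0] * 3 for _ in range(2)] for _ in range(2)]

# Hypothetical raw scores; a real network would produce them with learned layers.
channel_scores = [0.0, 100.0]
spatial_scores = [[0.0, 100.0, -100.0], [0.0, 0.0, 0.0]]

ch_out = channel_attention(fmap, channel_scores)
sp_out = spatial_attention(fmap, spatial_scores)
# One simple way to mix both: apply them sequentially.
mixed = spatial_attention(channel_attention(fmap, channel_scores), spatial_scores)
```

Channel attention rescales whole channels (every value in channel 0 becomes 0.5), whereas spatial attention rescales positions identically in every channel.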

1. Channel Attention Mechanism

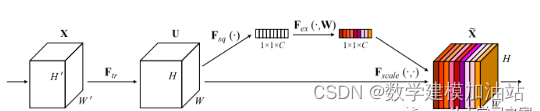

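The classic application of channel attention is SENet (Squeeze-and-Excitation Network). It models how important each feature channel is, and then enhances or suppresses individual channels depending on the task. Its structure is shown in the schematic.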

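After a regular convolution, a side branch is split off. It first applies the Squeeze operation (Fsq(·) in the figure), which compresses the spatial dimensions: each two-dimensional feature map is reduced to a single real number. In SENet this is global average pooling, i.e. a pooling operation with a global receptive field, and the number of channels stays unchanged. Next comes the Excitation operation (Fex(·) in the figure), which uses parameters W to generate a weight for each feature channel; W is learned so that it explicitly models the dependencies between channels. The paper implements this with a two-layer bottleneck of fully connected layers (first reducing the dimension, then restoring it, with a ReLU in between) followed by a sigmoid, so every weight lies between 0 and 1. Each channel weight is then multiplied onto the corresponding original feature channel, which lets the network learn how important each channel is for the task at hand. Because it is a general design idea, the block can be inserted into essentially any existing network, which makes it very practical.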
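The Squeeze, Excitation and scaling steps described above can be traced in a minimal pure-Python sketch of one SE block's forward pass. Assumptions: the feature map is a nested list of shape C×H×W, the reduction ratio is r = 2, biases are omitted, and the weight matrices `W1`/`W2` are hand-picked hypothetical values rather than learned ones.

```python
import math


def relu(v):
    return max(0.0, v)


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(mat, vec):
    """Plain matrix-vector product (a fully connected layer without bias)."""
    return [sum(m * x for m, x in zip(row, vec)) for row in mat]


def se_block(u, w1, w2):
    """u: C x H x W feature map; w1: (C/r) x C reduce weights; w2: C x (C/r) expand weights."""
    # Squeeze: global average pooling turns each H x W map into one number
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]
    # Excitation: FC (reduce) -> ReLU -> FC (expand) -> sigmoid
    hidden = [relu(v) for v in matvec(w1, z)]
    s = [sigmoid(v) for v in matvec(w2, hidden)]
    # Scale: multiply every element of channel c by its weight s[c]
    out = [[[s_c * v for v in row] for row in ch] for ch, s_c in zip(u, s)]
    return z, s, out


# C = 4 channels of size 2 x 2; channel c is filled with the value c + 1.
u = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
# Hypothetical weights (C/r = 2 hidden units); a trained SE block learns these.
W1 = [[1.0, 0.0, 0.0, 0.0],
      [0.0, 0.0, 0.0, -1.0]]
W2 = [[0.0, 0.0],
      [1.0, 0.0],
      [-1.0, 0.0],
      [2.0, 0.0]]

z, s, out = se_block(u, W1, W2)
```

Here `z` is `[1.0, 2.0, 3.0, 4.0]` (one average per channel), `s` holds one weight in (0, 1) per channel, and `out` has the same shape as `u` with each channel rescaled by its own weight.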

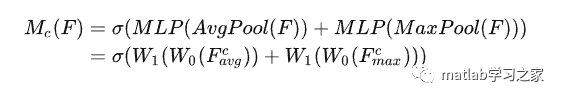

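In summary, using the notation of the SENet paper, for an input feature map $U = [u_1, u_2, \dots, u_C]$ with $u_c \in \mathbb{R}^{H \times W}$, channel attention is computed as:

$$z_c = F_{sq}(u_c) = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} u_c(i,j)$$

$$s = F_{ex}(z, \mathbf{W}) = \sigma\left(\mathbf{W}_2\,\delta(\mathbf{W}_1 z)\right)$$

$$\tilde{x}_c = F_{scale}(u_c, s_c) = s_c \cdot u_c$$

where $\delta$ is the ReLU function, $\sigma$ is the sigmoid function, $\mathbf{W}_1 \in \mathbb{R}^{\frac{C}{r} \times C}$ and $\mathbf{W}_2 \in \mathbb{R}^{C \times \frac{C}{r}}$ are the weights of the two fully connected layers, and $r$ is the reduction ratio.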

That concludes the introduction to channel attention. For the full details, see the original paper: Squeeze-and-Excitation Networks.

II. Hands-on Code

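```matlab
clc
clear
close all

load Train.mat
% load Test.mat

% One-hot encode the categorical columns and move them after demandLag
Train.weekend = dummyvar(Train.weekend);
Train.month = dummyvar(Train.month);
Train = movevars(Train,{'weekend','month'},'After','demandLag');
Train.ts = [];        % drop the timestamp column
Train(1,:) = [];      % drop the first row

y = Train.demand;     % target
x = Train{:,2:5};     % input features: table variables 2 to 5

% Min-max normalize to [0, 1] using only the first 1000 rows (the training part),
% so that the held-out rows do not influence the scaling.
% mapminmax works row-wise, hence the transposes (features x samples).
nTrainRows = 1000;
[xnorm,xopt] = mapminmax(x(1:nTrainRows,:)',0,1);
[ynorm,yopt] = mapminmax(y(1:nTrainRows)',0,1);

k = 24; % lag length (window size)

% Convert each window into a 2-D "image" of size features x k
nTrain = length(ynorm) - k;
Train_xNorm = cell(1,nTrain);
Train_yNorm = zeros(1,nTrain);
Train_y = zeros(1,nTrain);
for i = 1:nTrain
    Train_xNorm{i} = xnorm(:,i:i+k-1);
    Train_yNorm(i) = ynorm(i+k-1);
    Train_y(i) = y(i+k-1);   % raw (unnormalized) target used for training
end
Train_x = Train_xNorm';

% Rows 1001-1170 are held out as the test set
ytest = Train.demand(1001:1170);
xtest = Train{1001:1170,2:5};
xtestnorm = mapminmax('apply',xtest',xopt);   % reuse the training scaling
ytestnorm = mapminmax('apply',ytest',yopt);
% xtestnorm = [xtestnorm; Train.weekend(1001:1170,:)'; Train.month(1001:1170,:)'];

nTest = length(ytestnorm) - k;
Test_xNorm = cell(1,nTest);
Test_yNorm = zeros(1,nTest);
Test_y = zeros(1,nTest);
for i = 1:nTest
    Test_xNorm{i} = xtestnorm(:,i:i+k-1);
    Test_yNorm(i) = ytestnorm(i+k-1);
    Test_y(i) = ytest(i+k-1);
end
Test_x = Test_xNorm';

% Table input for trainNetwork: first column = images, second column = responses
x_train = table(Train_x,Train_y');
x_test = table(Test_x);

%% Training/validation split (optional, disabled)
% If enabled, run this before building x_train (or rebuild x_train afterwards).
% TrainSampleLength = length(Train_yNorm);
% validatasize = floor(TrainSampleLength * 0.1);
% Validata_xNorm = Train_xNorm(:,end-validatasize+1:end);
% Validata_yNorm = Train_yNorm(:,TrainSampleLength-validatasize+1:end);
% Validata_y = Train_y(TrainSampleLength-validatasize+1:end);
%
% Train_xNorm = Train_xNorm(:,1:end-validatasize);
% Train_yNorm = Train_yNorm(:,1:end-validatasize);
% Train_y = Train_y(1:end-validatasize);
```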
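The preprocessing above boils down to two ideas: per-feature min-max scaling that is fitted on training data and then reused on test data, and cutting the series into features×k windows. The sketch below reproduces both in Python (standard library only) on toy data. The helper names `minmax_fit`, `minmax_apply` and `make_windows` are hypothetical; the scaling follows the formula documented for `mapminmax`, `(ymax - ymin) * (x - xmin) / (xmax - xmin) + ymin`, and the sketch assumes no feature row is constant.

```python
def minmax_fit(rows, lo=0.0, hi=1.0):
    """Record per-row min/max of a features x samples matrix (rows = features),
    in the spirit of MATLAB's mapminmax(x', 0, 1). Assumes no row is constant."""
    return [(min(r), max(r)) for r in rows], lo, hi


def minmax_apply(rows, settings):
    """Rescale each row with stored stats: (hi - lo) * (v - min) / (max - min) + lo."""
    stats, lo, hi = settings
    return [[(hi - lo) * (v - mn) / (mx - mn) + lo for v in r]
            for r, (mn, mx) in zip(rows, stats)]


def make_windows(x_rows, y, k):
    """Slice x_rows (features x time) into features x k windows.
    Mirrors the MATLAB loop `for i = 1:length(y)-k`: window i covers
    time steps i .. i+k-1 and its target is y at the window's last step."""
    windows, targets = [], []
    for i in range(len(y) - k):          # 0-based equivalent of 1:length(y)-k
        windows.append([r[i:i + k] for r in x_rows])
        targets.append(y[i + k - 1])
    return windows, targets


# Toy data: 2 features over 30 time steps; k = 24 as in the script.
x = [[float(t) for t in range(30)], [float(2 * t) for t in range(30)]]
y = [float(t) for t in range(30)]

settings = minmax_fit(x)                 # fit on training data only
xn = minmax_apply(x, settings)
windows, targets = make_windows(xn, y, 24)

# Reusing the training settings on new data (like mapminmax('apply', ...))
# can produce values outside [0, 1]:
unseen = minmax_apply([[58.0], [0.0]], settings)
```

With 30 time steps and k = 24 this yields 6 windows of size 2×24. Because the loop runs to `length(y)-k`, the final time step never serves as a target (in the script, y(1000) of the training part is unused); a loop bound of `length(y)-k+1` would include it.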

```matlab
%% Build the residual neural network
lgraph = layerGraph();

tempLayers = [
    imageInputLayer([4 24 1],"Name","imageinput")   % 4 features x 24 lags x 1 channel
    convolution2dLayer([3 3],32,"Name","conv","Padding","same")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    batchNormalizationLayer("Name","batchnorm")
    reluLayer("Name","relu")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    additionLayer(2,"Name","addition")
    convolution2dLayer([3 3],32,"Name","conv_1","Padding","same")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    batchNormalizationLayer("Name","batchnorm_1")
    reluLayer("Name","relu_1")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    additionLayer(2,"Name","addition_1")
    convolution2dLayer([3 3],32,"Name","conv_2","Padding","same")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    batchNormalizationLayer("Name","batchnorm_2")
    reluLayer("Name","relu_2")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    additionLayer(2,"Name","addition_2")
    convolution2dLayer([3 3],32,"Name","conv_3","Padding","same")];
lgraph = addLayers(lgraph,tempLayers);

tempLayers = [
    batchNormalizationLayer("Name","batchnorm_3")
    reluLayer("Name","relu_3")];
lgraph = addLayers(lgraph,tempLayers);

% Addition followed by a sigmoid, producing values in (0, 1)
tempLayers = [
    additionLayer(2,"Name","addition_4")
    sigmoidLayer("Name","sigmoid")];
lgraph = addLayers(lgraph,tempLayers);

% Element-wise multiplication of two inputs
tempLayers = multiplicationLayer(2,"Name","multiplication");
lgraph = addLayers(lgraph,tempLayers);

% Sum three inputs, then regress a single value
tempLayers = [
    additionLayer(3,"Name","addition_3")
    fullyConnectedLayer(32,"Name","fc1")
    fullyConnectedLayer(16,"Name","fc2")
    fullyConnectedLayer(1,"Name","fc3")
    regressionLayer("Name","regressionoutput")];
lgraph = addLayers(lgraph,tempLayers);

% Clear the helper variable
clear tempLayers;

% addLayers only adds the layers to the graph. Before training, they also
% have to be wired together with connectLayers(lgraph,"source","destination");
% those connectLayers calls are not listed here.

plot(lgraph);
analyzeNetwork(lgraph);
```
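`additionLayer` and `multiplicationLayer` combine their inputs element-wise, so all of their inputs must have the same size. Every convolution above uses a 3×3 kernel with stride 1 and `"Padding","same"`, which keeps each feature map at the 4×24 input size. The quick Python check below uses the standard one-dimensional output-size formula; a padding of 1 on each side is what "same" amounts to for a 3×3 kernel at stride 1. The helper `conv_out_size` is hypothetical.

```python
def conv_out_size(n, kernel, stride=1, pad=0):
    """Output length along one dimension: floor((n + 2*pad - kernel) / stride) + 1."""
    return (n + 2 * pad - kernel) // stride + 1


H, W = 4, 24   # imageInputLayer([4 24 1]): 4 features x 24 lags

# 3x3 kernel, stride 1, padding 1 on each side ("same" for this kernel size)
same = (conv_out_size(H, 3, pad=1), conv_out_size(W, 3, pad=1))
# The same kernel without padding
valid = (conv_out_size(H, 3), conv_out_size(W, 3))
# Two unpadded 3x3 convolutions in a row would leave no rows at all
two_valid_rows = conv_out_size(conv_out_size(H, 3), 3)
```

With padding the map stays 4×24; without it, a single convolution would shrink it to 2×22, and the 4-row input could not even survive two unpadded 3×3 convolutions, let alone the four used here.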

```matlab
%% Training options
maxEpochs = 100;
miniBatchSize = 32;
options = trainingOptions('adam', ...
    'MaxEpochs',maxEpochs, ...
    'MiniBatchSize',miniBatchSize, ...
    'InitialLearnRate',0.005, ...
    'GradientThreshold',1, ...
    'Shuffle','never', ...
    'Plots','training-progress', ...
    'Verbose',0);

net = trainNetwork(x_train,lgraph,options);

% The training targets were not normalized, so predictions are already on the original scale
Predict_yNorm = predict(net,x_test);
Predict_y = double(Predict_yNorm);

figure
plot(Test_y)
hold on
plot(Predict_y)
legend('Actual','Predicted')
```
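A side effect of these options is worth checking. The `trainingOptions` documentation states that when the mini-batch size does not evenly divide the number of training samples, the data that does not fit into the final complete mini-batch of each epoch is discarded. The script builds 1000 - 24 = 976 training windows, and with `'Shuffle','never'` the same trailing windows (the most recent training data) are dropped in every epoch. The sketch below, in Python with a hypothetical helper `epoch_schedule`, just does the arithmetic under that documented behavior.

```python
def epoch_schedule(n_samples, batch_size, max_epochs):
    """Iterations per epoch, samples left out per epoch, and total iterations,
    assuming samples that do not fill a complete final mini-batch are discarded."""
    iters_per_epoch = n_samples // batch_size
    dropped = n_samples - iters_per_epoch * batch_size
    return iters_per_epoch, dropped, iters_per_epoch * max_epochs


n_train = 1000 - 24          # windows built from the first 1000 rows with k = 24
iters, dropped, total = epoch_schedule(n_train, batch_size=32, max_epochs=100)
```

This gives 30 iterations per epoch and 3000 in total, with the last 16 windows never used for training. Setting `'Shuffle'` to `'every-epoch'` avoids discarding the same samples every epoch; alternatively, a mini-batch size that divides the number of samples evenly leaves nothing out.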

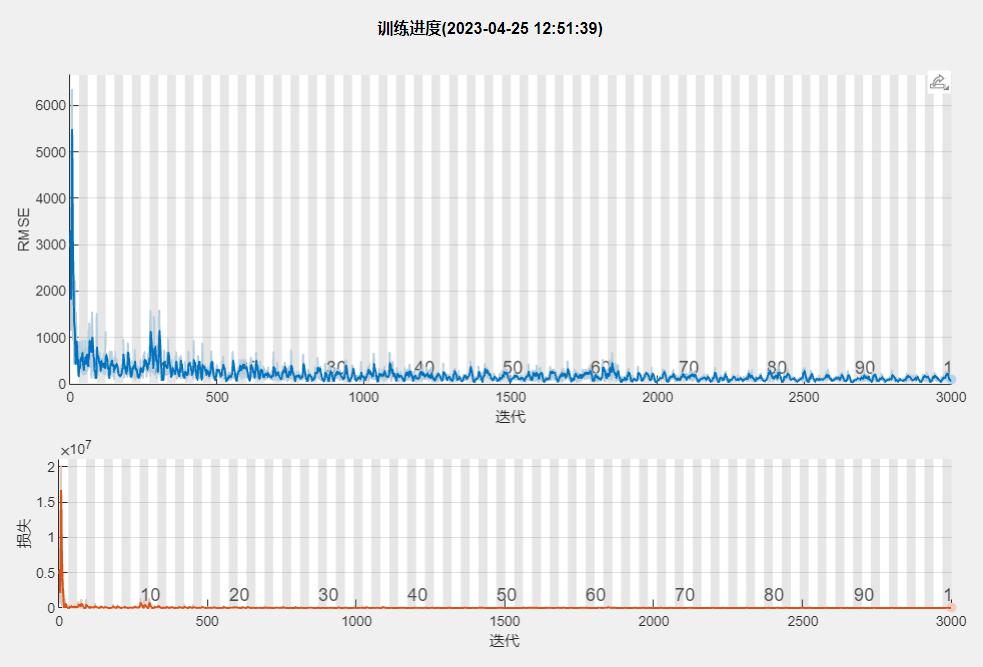

训练迭代图:

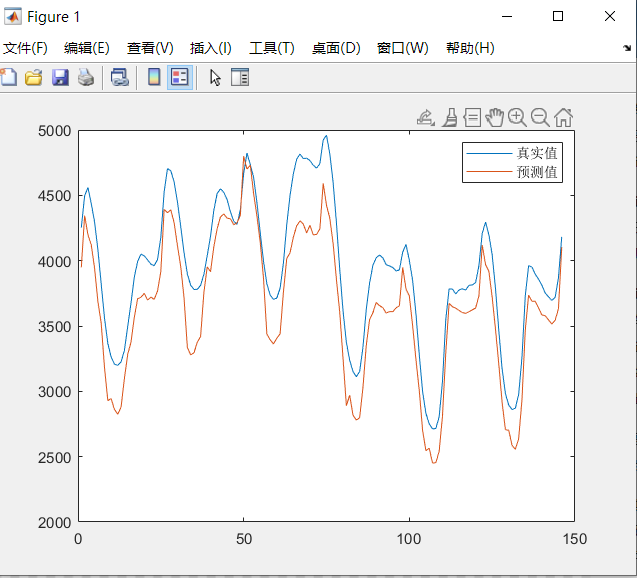

试集预测曲线图
