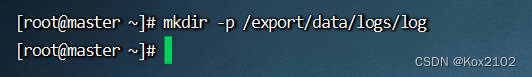

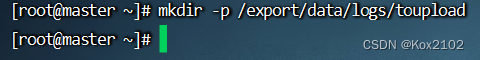

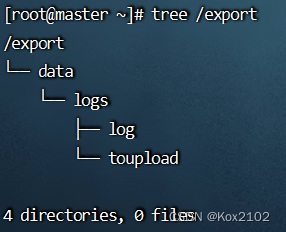

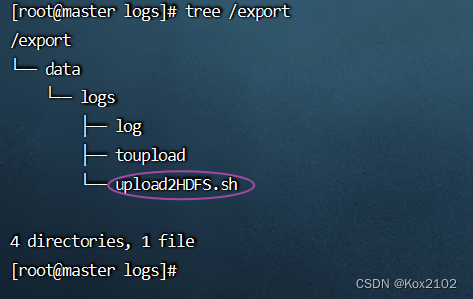

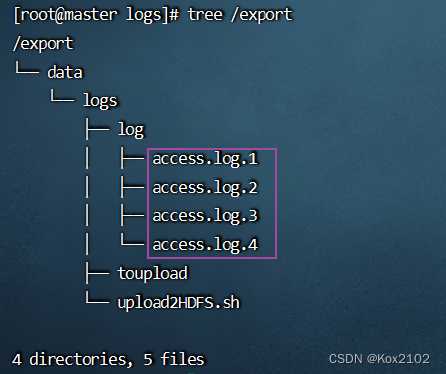

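Create the directory that holds the log files, /export/data/logs/log, by running `mkdir -p /export/data/logs/log`. Create the directory that holds the files waiting to be uploaded, /export/data/logs/toupload, by running `mkdir -p /export/data/logs/toupload`. Then view the resulting directory tree.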

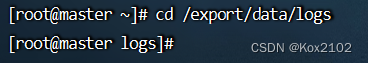
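Both directories can also be created with a single `mkdir -p` call and inspected without extra tools. The following is a minimal sketch, assuming a scratch base directory (the `base` variable is hypothetical and only keeps the example away from the real /export tree; the tutorial itself uses /export/data/logs).

```shell
# Scratch base directory (assumption); the tutorial uses /export/data/logs.
base="$(mktemp -d)/export/data/logs"

# -p creates missing parent directories and succeeds if they already exist.
mkdir -p "$base/log" "$base/toupload"

# List the directories that now exist; find works even when tree is not installed.
find "$base" -type d | sort
```

Because `mkdir -p` is idempotent, the command can be rerun safely if the walkthrough is repeated.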

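First install the tree command with `yum -y install tree`, then view the directory tree with `tree /export`. Change to the /export/data/logs directory with `cd /export/data/logs`, run `vim upload2HDFS.sh`, and enter the following script: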

export JAVA_HOME=/usr/local/jdk1.8.0_231

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib:${JRE_HOME}/lib

export PATH=${JAVA_HOME}/bin:$PATH

export HADOOP_HOME=/usr/local/hadoop-3.3.4/

export PATH=${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:$PATH

log_src_dir=/export/data/logs/log/

log_toupload_dir=/export/data/logs/toupload/

date1=$(date +%Y_%m_%d)

hdfs_root_dir=/data/clickLog/$date1/

echo "envs: hadoop_home: $HADOOP_HOME"

echo "log_src_dir: $log_src_dir"

ls $log_src_dir | while read fileName

do

if [[ "$fileName" == access.log.* ]]; then

date=$(date +%Y_%m_%d_%H_%M_%S)

echo "moving $log_src_dir$fileName to $log_toupload_dir"lzy_click_log_$fileName"$date"

mv $log_src_dir$fileName $log_toupload_dir"lzy_click_log_$fileName"$date

echo $log_toupload_dir"lzy_click_log_$fileName"$date >> $log_toupload_dir"willDoing."$date

fi

done

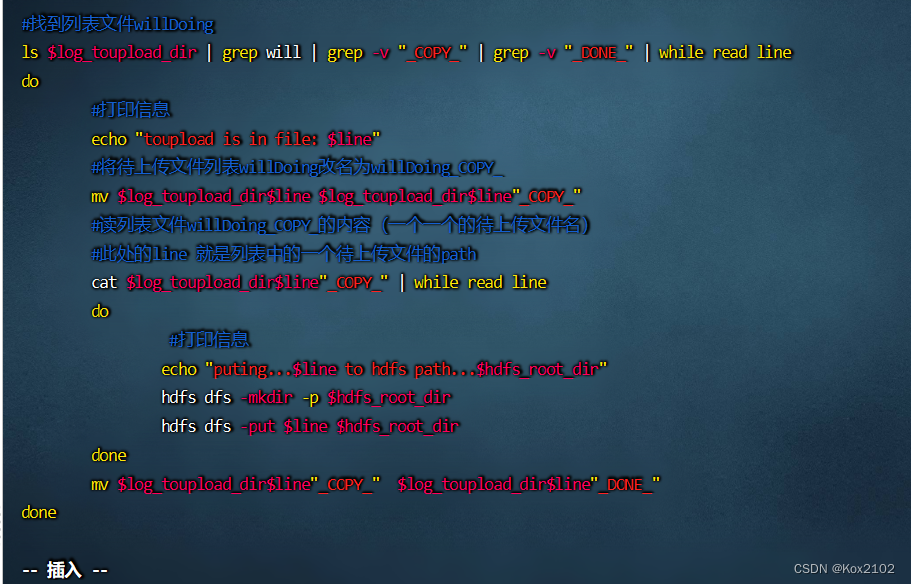

ls $log_toupload_dir | grep will | grep -v "_COPY_" | grep -v "_DONE_" | while read line

do

echo "toupload is in file: $line"

mv $log_toupload_dir$line $log_toupload_dir$line"_COPY_"

cat $log_toupload_dir$line"_COPY_" | while read line

do

echo "putting...$line to hdfs path...$hdfs_root_dir"

hdfs dfs -mkdir -p $hdfs_root_dir

hdfs dfs -put $line $hdfs_root_dir

done

mv $log_toupload_dir$line"_COPY_" $log_toupload_dir$line"_DONE_"

done
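The second loop uses the name of the list file as a state marker: a plain `willDoing.` file is pending, the `_COPY_` suffix marks it as in progress, and the `_DONE_` suffix marks it as finished. The sketch below replays that lifecycle locally so it can be tried without a Hadoop cluster. It assumes a scratch directory, and the `upload` function and `fake_hdfs` directory are hypothetical stand-ins for `hdfs dfs -put` and the HDFS target path.

```shell
# Local sketch of the list-file lifecycle; nothing here talks to HDFS.
work="$(mktemp -d)"            # scratch area (assumption)
toupload="$work/toupload/"
fake_hdfs="$work/hdfs/"        # local stand-in for the HDFS target directory
mkdir -p "$toupload" "$fake_hdfs"

# Hypothetical stand-in for: hdfs dfs -put <file> <dir>
upload() { cp "$1" "$2"; }

# Prepare one renamed log file and a pending list file that points at it.
echo "sample" > "${toupload}lzy_click_log_access.log.1"
echo "${toupload}lzy_click_log_access.log.1" > "${toupload}willDoing.demo"

# Pick up only list files that are neither in progress nor finished.
ls "$toupload" | grep will | grep -v "_COPY_" | grep -v "_DONE_" | while read -r list
do
    # Rename to _COPY_ so the grep -v filter of a later run skips this list.
    mv "$toupload$list" "$toupload${list}_COPY_"
    # Each line of the list file is the full path of one file to upload.
    while read -r path
    do
        upload "$path" "$fake_hdfs"
    done < "$toupload${list}_COPY_"
    # Rename to _DONE_ once every listed file has been handled.
    mv "$toupload${list}_COPY_" "$toupload${list}_DONE_"
done

ls "$toupload" "$fake_hdfs"
```

Using a separate variable name for the inner loop (`path` here) avoids reusing `line` for both the list file and its entries, which makes the renaming step easier to follow.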

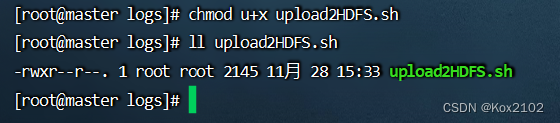

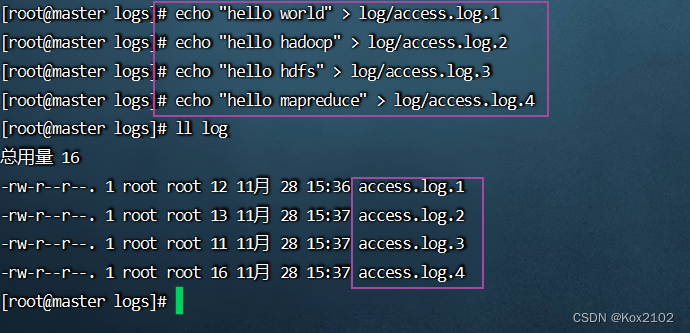

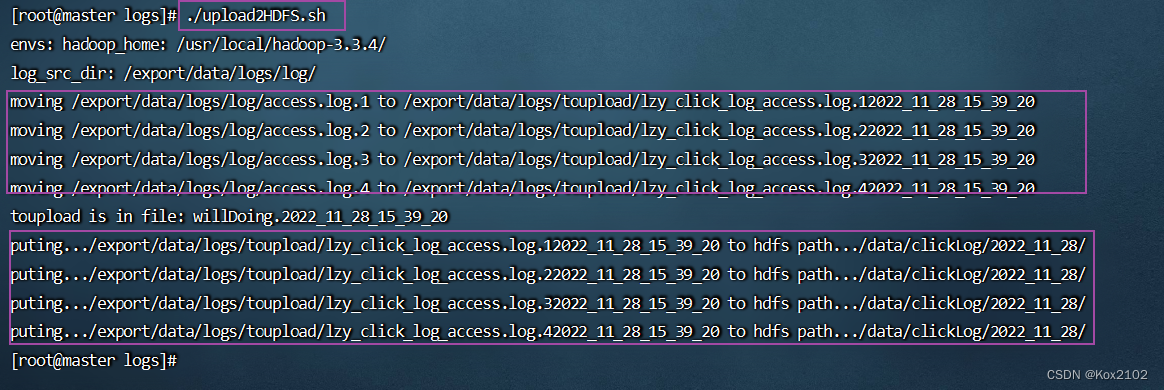

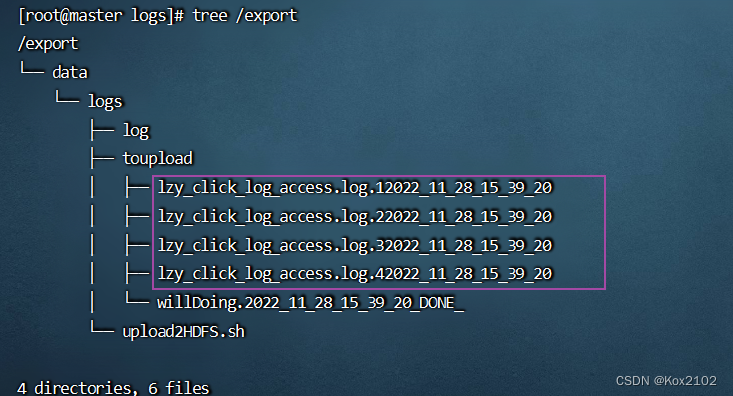

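Make the script executable by running `chmod u+x upload2HDFS.sh`, then view the /export directory tree. Create four log files (their names must begin with access.log.) and view the /export directory tree again. Run the script with `./upload2HDFS.sh` and view the /export directory tree once more. Finally, open the HDFS cluster WebUI to check the uploaded log files.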
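The four test files can be generated in a loop rather than one at a time. The sketch below is an illustration under assumptions: it writes into a scratch directory instead of /export/data/logs/log/, the file contents are placeholders, and it uses a POSIX `case` pattern that accepts the same names as the script's `access.log.*` test to show which files would be picked up.

```shell
# Scratch stand-in for /export/data/logs/log/ (assumption).
log_src_dir="$(mktemp -d)/"

# Create four sample logs whose names start with access.log.
for i in 1 2 3 4
do
    echo "sample click record $i" > "${log_src_dir}access.log.$i"
done

# A file that does not match the pattern, to show that it is left alone.
touch "${log_src_dir}other.log"

matched=0
for path in "$log_src_dir"*
do
    fileName="$(basename "$path")"
    case "$fileName" in
        access.log.*)
            echo "would upload: $fileName"
            matched=$((matched + 1))
            ;;
        *)
            echo "ignored: $fileName"
            ;;
    esac
done
echo "matched: $matched"
```

To follow the tutorial itself, point `log_src_dir` at /export/data/logs/log/ before creating the files and then run `./upload2HDFS.sh`.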