一般讲到GMM就会讲到EM。

我不过多的介绍EM算法。这里只是举一些例子来看看真实的GMM怎么用EM算的。

一、GMM的作用

记住GMM的作用,就是聚类!

二、GMM有hard和soft两种

hard-GMM和soft-GMM是为了对标k-means和soft k-means。在中文互联网上搜索到的GMM,其实基本都是soft-GMM。

所以这里我先介绍一下hard-GMM。

三、hard-GMM

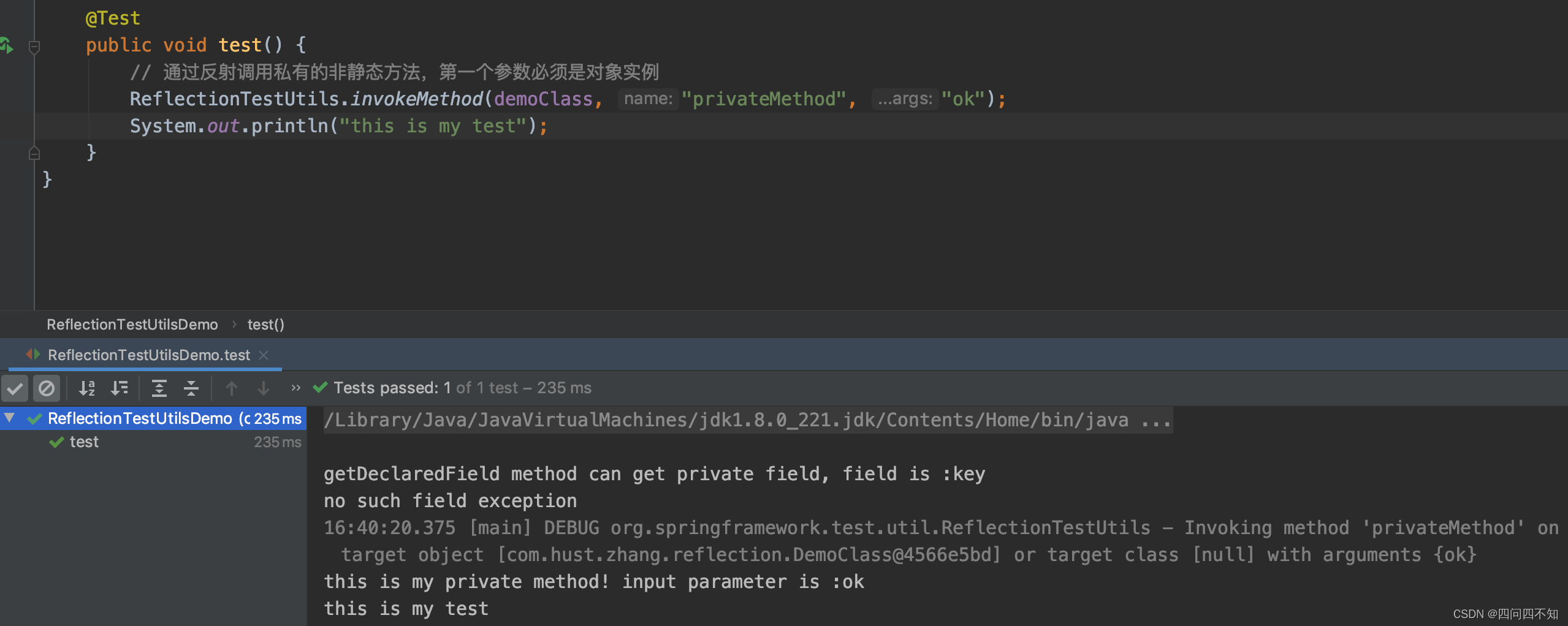

1)已知初始化簇, B 1 = { x 1 , x 2 } , B 2 = { x 3 , x 4 , x 5 , x 6 } B1 = \{x_1, x_2\}, B2 = \{x_3,x_4,x_5,x_6\} B1={x1,x2},B2={x3,x4,x5,x6}.

初始化两个簇权重分别为 w 1 = 2 / 6 = 1 / 3 , w 2 = 4 / 6 = 2 / 3 w_1 = 2/6 = 1/3, w_2 = 4/6 = 2/3 w1=2/6=1/3,w2=4/6=2/3.

由此可知,两个簇的高斯分布参数为:

μ

1

=

(

0

+

0.5

)

/

2

=

0.25

\mu_1 = (0+0.5)/2 = 0.25

μ1=(0+0.5)/2=0.25

μ

2

=

(

1

+

2

+

3

+

4

)

/

4

=

2.5

\mu_2 = (1+2+3+4)/4 = 2.5

μ2=(1+2+3+4)/4=2.5.

σ

1

2

=

0.2

5

2

\sigma_1 ^ 2 = 0.25^2

σ12=0.252,

σ

2

2

=

1.25

\sigma_2^2 = 1.25

σ22=1.25

概率密度

P

^

(

x

)

\hat{P}(x)

P^(x)

=

w

1

N

(

x

;

μ

1

,

σ

1

2

)

+

w

2

N

(

x

;

μ

2

,

σ

2

2

)

= w_1N(x;\mu_1, \sigma_1^2)+w_2N(x;\mu_2, \sigma_2^2)

=w1N(x;μ1,σ12)+w2N(x;μ2,σ22)

=

1

/

3

N

(

x

;

0.25

,

0.2

5

2

)

+

2

/

3

N

(

x

;

2.5

,

1.25

)

= 1/3N(x;0.25, 0.25^2)+2/3N(x;2.5, 1.25)

=1/3N(x;0.25,0.252)+2/3N(x;2.5,1.25)

查表知:

| P ^ ( x 1 ) = 0.342 \hat{P}(x_1) = 0.342 P^(x1)=0.342 | P ^ ( x 2 ) = 0.371 \hat{P}(x_2) = 0.371 P^(x2)=0.371 |

|---|---|

| P ^ ( x 3 ) = 0.103 \hat{P}(x3) = 0.103 P^(x3)=0.103 | P ^ ( x 4 ) = 0.215 \hat{P}(x4) = 0.215 P^(x4)=0.215 |

| P ^ ( x 5 ) = 0.215 \hat{P}(x5) = 0.215 P^(x5)=0.215 | P ^ ( x 6 ) = 0.097 \hat{P}(x6) = 0.097 P^(x6)=0.097 |

E i n = − ∑ n = 1 6 l o g P ^ ( x n ) = 9.748 E_{in} = -\sum\limits_{n=1}^{6}log\hat{P}(x_n) = 9.748 Ein=−n=1∑6logP^(xn)=9.748

2)使用EM算法迭代直至收敛,需要写出每一步的聚类结果和模型参数

E-step:

计算归属度

λ

i

j

\lambda_{ij}

λij,表示数据点

i

i

i对簇

j

j

j的归属度。

λ

i

j

=

w

j

N

(

x

i

;

μ

j

,

σ

j

2

)

\lambda_{ij} = w_jN(x_i; \mu_j, \sigma_j^2)

λij=wjN(xi;μj,σj2)

(1)对数据点

x

1

x_1

x1来说,对簇1簇2的归属度分别为:

λ

11

\lambda_{11}

λ11

=

w

1

N

(

x

1

;

μ

1

,

σ

1

2

)

= w_1N(x_1; \mu_1, \sigma_1^2)

=w1N(x1;μ1,σ12)

=

1

/

3

N

(

0

;

0.25

,

0.2

5

2

)

= 1/3N(0;0.25, 0.25^2)

=1/3N(0;0.25,0.252)

=

0.323

= 0.323

=0.323

λ

12

\lambda_{12}

λ12

=

w

2

N

(

x

1

;

μ

2

,

σ

2

2

)

= w_2N(x_1; \mu_2, \sigma_2^2)

=w2N(x1;μ2,σ22)

=

2

/

3

N

(

0

;

2.5

,

1.25

)

= 2/3N(0;2.5, 1.25)

=2/3N(0;2.5,1.25)

=

0.019

= 0.019

=0.019

λ

11

>

λ

12

\lambda_{11} > \lambda_{12}

λ11>λ12

说明该步下,数据点1归属到簇1

(2)对数据点

x

3

x_3

x3来说,对簇1簇2的归属度分别为:

λ

31

\lambda_{31}

λ31

=

w

1

N

(

x

3

;

μ

1

,

σ

1

2

)

= w_1N(x_3; \mu_1, \sigma_1^2)

=w1N(x3;μ1,σ12)

=

1

/

3

N

(

1

;

0.25

,

0.2

5

2

)

= 1/3N(1;0.25, 0.25^2)

=1/3N(1;0.25,0.252)

=

4

/

(

3

2

π

)

∗

e

−

4.5

)

= 4/(3\sqrt{2\pi})*e^{-4.5)}

=4/(32π)∗e−4.5)

=

0.006

=0.006

=0.006

λ

32

\lambda_{32}

λ32

=

w

2

N

(

x

3

;

μ

2

,

σ

2

2

)

= w_2N(x_3; \mu_2, \sigma_2^2)

=w2N(x3;μ2,σ22)

=

2

/

3

N

(

1

;

2.5

,

1.25

)

= 2/3N(1;2.5, 1.25)

=2/3N(1;2.5,1.25)

=

(

2

/

1.25

)

/

(

3

2

π

)

∗

e

−

0.9

= (2/\sqrt{1.25})/(3\sqrt{2\pi})*e^{-0.9}

=(2/1.25)/(32π)∗e−0.9

=

0.097

=0.097

=0.097

λ

31

<

λ

32

\lambda_{31} < \lambda_{32}

λ31<λ32

说明该步下,数据点3归属到簇2

(3)同理

x

2

x_2

x2归属到簇1,

x

4

,

x

5

,

x

6

x_4,x_5,x_6

x4,x5,x6归属到簇2

所以最终我们还是得到

B

1

=

{

x

1

,

x

2

}

,

B

2

=

{

x

3

,

x

4

,

x

5

,

x

6

}

B1 = \{x1, x2\}, B2 = \{x3,x4,x5,x6\}

B1={x1,x2},B2={x3,x4,x5,x6}. 也就是说已经收敛,解答完毕。

但如果还没有收敛怎么办?

假设得到了

B

1

=

{

x

1

,

x

2

,

x

3

}

,

B

2

=

{

x

4

,

x

5

,

x

6

}

B1 = \{x_1, x_2, x_3\}, B2 = \{x_4,x_5,x_6\}

B1={x1,x2,x3},B2={x4,x5,x6}

那就再通过M步更新高斯模型参数

M-step

两个簇权重分别为

w

1

=

2

/

6

=

1

/

3

,

w

2

=

4

/

6

=

2

/

3

w_1 = 2/6 = 1/3, w_2 = 4/6 = 2/3

w1=2/6=1/3,w2=4/6=2/3

两个簇的高斯分布为

μ

1

=

(

0

+

0.5

+

1

)

/

3

=

0.5

\mu_1 = (0+0.5+1)/3 = 0.5

μ1=(0+0.5+1)/3=0.5

μ

2

=

(

2

+

3

+

4

)

/

3

=

3

\mu_2 = (2+3+4)/3 = 3

μ2=(2+3+4)/3=3

σ

1

2

=

1

/

6

\sigma_1^2 = 1/6

σ12=1/6

σ

2

2

=

2

/

3

\sigma_2^2 =2/3

σ22=2/3

概率密度

P

^

(

x

)

\hat{P}(x)

P^(x)

=

w

1

N

(

x

;

μ

1

,

σ

1

2

)

+

w

2

N

(

x

;

μ

2

,

σ

2

2

)

= w_1N(x;\mu_1, \sigma_1^2)+w_2N(x;\mu_2, \sigma_2^2)

=w1N(x;μ1,σ12)+w2N(x;μ2,σ22)

=

1

/

3

N

(

x

;

0.5

,

1

/

6

)

+

2

/

3

N

(

x

;

3

,

2

/

3

)

= 1/3N(x;0.5, 1/6)+2/3N(x;3, 2/3)

=1/3N(x;0.5,1/6)+2/3N(x;3,2/3)

这样就可以计算新一轮的E-step,如此反复,直至数据点的归属簇不再变化

类比一下:hard-GMM中,E-step就像是k-means中的将各数据点归属到各个簇,M-step就像是k-means计算新的簇中心

四、soft-GMM

第1)问没有区别,第2)问开始重新写soft-GMM版本的

2)使用EM算法迭代直至收敛,需要写出每一步的聚类结果和模型参数

E-step:

计算归属度

λ

i

j

\lambda_{ij}

λij,表示数据点

i

i

i对簇

j

j

j的归属度。

λ

i

j

=

w

j

N

(

x

i

;

μ

j

,

σ

j

2

)

\lambda_{ij} = w_jN(x_i; \mu_j, \sigma_j^2)

λij=wjN(xi;μj,σj2)

(1)对数据点

x

1

x_1

x1来说,对簇1簇2的归属度分别为:

λ

11

\lambda_{11}

λ11

=

w

1

N

(

x

1

;

μ

1

,

σ

1

2

)

= w_1N(x_1; \mu_1, \sigma_1^2)

=w1N(x1;μ1,σ12)

=

1

/

3

N

(

0

;

0.25

,

0.2

5

2

)

= 1/3N(0;0.25, 0.25^2)

=1/3N(0;0.25,0.252)

=

0.323

= 0.323

=0.323

λ

12

\lambda_{12}

λ12

=

w

2

N

(

x

1

;

μ

2

,

σ

2

2

)

= w_2N(x_1; \mu_2, \sigma_2^2)

=w2N(x1;μ2,σ22)

=

2

/

3

N

(

0

;

2.5

,

1.25

)

= 2/3N(0;2.5, 1.25)

=2/3N(0;2.5,1.25)

=

0.019

= 0.019

=0.019

与hard-GMM不同的时,我们需要计算归一化后的归属度:

λ

11

′

=

λ

11

λ

11

+

λ

12

=

0.943

\lambda_{11}' = \frac{\lambda_{11}}{\lambda_{11}+\lambda_{12}} = 0.943

λ11′=λ11+λ12λ11=0.943

λ

12

′

=

λ

12

λ

11

+

λ

12

=

0.057

\lambda_{12}' = \frac{\lambda_{12}}{\lambda_{11}+\lambda_{12}} = 0.057

λ12′=λ11+λ12λ12=0.057

λ

11

=

λ

11

′

\lambda_{11} = \lambda_{11}'

λ11=λ11′

λ

12

=

λ

12

′

\lambda_{12} = \lambda_{12}'

λ12=λ12′

(2)对数据点

x

3

x_3

x3来说,对簇1簇2的归属度分别为:

λ

31

\lambda_{31}

λ31

=

w

1

N

(

x

3

;

μ

1

,

σ

1

2

)

= w_1N(x_3; \mu_1, \sigma_1^2)

=w1N(x3;μ1,σ12)

=

1

/

3

N

(

1

;

0.25

,

0.2

5

2

)

= 1/3N(1;0.25, 0.25^2)

=1/3N(1;0.25,0.252)

=

4

/

(

3

2

π

)

∗

e

−

4.5

)

= 4/(3\sqrt{2\pi})*e^{-4.5)}

=4/(32π)∗e−4.5)

=

0.006

=0.006

=0.006

λ

32

\lambda_{32}

λ32

=

w

2

N

(

x

3

;

μ

2

,

σ

2

2

)

= w_2N(x_3; \mu_2, \sigma_2^2)

=w2N(x3;μ2,σ22)

=

2

/

3

N

(

1

;

2.5

,

1.25

)

= 2/3N(1;2.5, 1.25)

=2/3N(1;2.5,1.25)

=

(

2

/

1.25

)

/

(

3

2

π

)

∗

e

−

0.9

= (2/\sqrt{1.25})/(3\sqrt{2\pi})*e^{-0.9}

=(2/1.25)/(32π)∗e−0.9

=

0.097

=0.097

=0.097

计算归一化后的归属度:

λ

31

′

=

λ

31

λ

31

+

λ

32

=

0.058

\lambda_{31}' = \frac{\lambda_{31}}{\lambda_{31}+\lambda_{32}} = 0.058

λ31′=λ31+λ32λ31=0.058

λ

32

′

=

λ

32

λ

31

+

λ

32

=

0.942

\lambda_{32}' = \frac{\lambda_{32}}{\lambda_{31}+\lambda_{32}} = 0.942

λ32′=λ31+λ32λ32=0.942

λ

31

=

λ

31

′

\lambda_{31} = \lambda_{31}'

λ31=λ31′

λ

32

=

λ

32

′

\lambda_{32} = \lambda_{32}'

λ32=λ32′

(3)同理计算剩下的数据点的归属度

soft-GMM如何认为算法已经收敛了呢?

当两次归属度的值变化量 < tolerance即可,比如我们这里设定 tolerance=0.02

我们这里再通过M步更新高斯模型参数

M-step

两个簇权重分别为

w

1

=

∑

i

=

1

6

λ

i

1

=

0.312

,

w

2

=

∑

i

=

1

6

λ

i

2

=

0.688

w_1 = \sum\limits_{i=1}^{6}\lambda_{i1} = 0.312, w_2 = \sum\limits_{i=1}^{6}\lambda_{i2} = 0.688

w1=i=1∑6λi1=0.312,w2=i=1∑6λi2=0.688

两个簇的高斯分布为

μ

1

=

∑

i

=

1

6

λ

i

1

x

i

∑

i

=

1

6

λ

i

1

=

(

λ

11

x

1

+

λ

21

x

2

+

.

.

.

+

λ

61

x

6

)

λ

11

+

λ

21

+

.

.

.

+

λ

61

=

0.263

\mu_1 = \frac{\sum\limits_{i=1}^{6}\lambda_{i1}x_i}{\sum\limits_{i=1}^{6}\lambda_{i1}} = \frac{(\lambda_{11}x_1 + \lambda_{21}x_2 + ... + \lambda_{61}x_6) }{\lambda_{11}+\lambda_{21}+...+\lambda_{61}}= 0.263

μ1=i=1∑6λi1i=1∑6λi1xi=λ11+λ21+...+λ61(λ11x1+λ21x2+...+λ61x6)=0.263

μ 2 = ∑ i = 1 6 λ i 2 x i ∑ i = 1 6 λ i 2 = 2.424 \mu_2 = \frac{\sum\limits_{i=1}^{6}\lambda_{i2}x_i}{\sum\limits_{i=1}^{6}\lambda_{i2}} =2.424 μ2=i=1∑6λi2i=1∑6λi2xi=2.424

σ 1 2 = ∑ i = 1 6 λ i 1 ( x i − μ 1 ) 2 ∑ i = 1 6 λ i 1 = 0.279 \sigma_1^2 = \frac{\sum\limits_{i=1}^{6}\lambda_{i1}(x_i-\mu_1)^2}{\sum\limits_{i=1}^{6}\lambda_{i1}} = 0.279 σ12=i=1∑6λi1i=1∑6λi1(xi−μ1)2=0.279

σ 2 2 = ∑ i = 1 6 λ i 2 ( x i − μ 2 ) 2 ∑ i = 1 6 λ i 2 = 1.180 \sigma_2^2 = \frac{\sum\limits_{i=1}^{6}\lambda_{i2}(x_i-\mu_2)^2}{\sum\limits_{i=1}^{6}\lambda_{i2}} = 1.180 σ22=i=1∑6λi2i=1∑6λi2(xi−μ2)2=1.180

概率密度

P

^

(

x

)

\hat{P}(x)

P^(x)

=

w

1

N

(

x

;

μ

1

,

σ

1

2

)

+

w

2

N

(

x

;

μ

2

,

σ

2

2

)

= w_1N(x;\mu_1, \sigma_1^2)+w_2N(x;\mu_2, \sigma_2^2)

=w1N(x;μ1,σ12)+w2N(x;μ2,σ22)

=

0.312

N

(

x

;

0.263

,

0.279

)

+

0.688

N

(

x

;

2.424

,

1.180

)

= 0.312N(x;0.263, 0.279) + 0.688N(x;2.424, 1.180)

=0.312N(x;0.263,0.279)+0.688N(x;2.424,1.180)

这样就可以计算新一轮的E-step,如此反复,直至收敛(参数是否收敛或对数似然函数收敛)

参数收敛比方说第19轮和第20轮迭代时:

| iter | μ 1 \mu_1 μ1 | μ 2 \mu_2 μ2 | σ 1 2 \sigma_1^2 σ12 | σ 1 2 \sigma_1^2 σ12 |

|---|---|---|---|---|

| 19 | 0.485 | 2.923 | 0.408 | 0.896 |

| 20 | 0.485 | 2.923 | 0.408 | 0.895 |

此时指定的参数已经的变化量已经 < tolerance = 0.02,可以认为已经收敛。

类比一下:soft-GMM中,E-step就像是k-means中的将各数据点对各个簇的归属度,M-step就像是k-means计算新的簇中心

五、python复现代码

"""

import numpy as np

import time

def CreateData(mu1, sigma1, alpha1, mu2, sigma2, alpha2, length):

"""

生成数据集

输入两个高斯分布的真实参数

:param mu1:

:param sigma1:

:param alpha1:

:param mu2:

:param sigma2:

:param alpha2:

:param length:

:return: 通过两个高斯分布生成的数据集

"""

# 生成第一个分模型的数据

First = np.random.normal(loc=mu1, scale=sigma1, size=int(length * alpha1))

# 生成第二个分模型的数据

Second = np.random.normal(loc=mu2, scale=sigma2, size=int(length * alpha2))

# 混合两个数据

ObservedData = np.concatenate((First, Second), axis=0) # 行拼接,生成的还是一列

# 打乱顺序(打不打乱其实对算法没有影响)

np.random.shuffle(ObservedData)

return ObservedData

def Cal_Gaussian(Data, mu, sigma):

"""

根据高斯密度函数计算

:param Data:数据集

:param mu: 当前的高斯分布的参数

:param sigma:

:return: GaussianP:概率

"""

GaussianP = 1 / (sigma * np.sqrt(2 * np.pi)) \

* np.exp(-1 * np.square(Data - mu) / (2 * np.square(sigma)))

return GaussianP

def E_step(Data, mu1, sigma1, alpha1, mu2, sigma2, alpha2):

"""

E步

:param Data: 使用的是ndarray格式

:param mu1:

:param sigma1:

:param alpha1:

:param mu2:

:param sigma2:

:param alpha2:

:return: Gamma1; Gamma2:响应度

"""

# 计算样本对每个高斯混合模型的响应度(归属度,gamma)

# 因此得到的数列结果中,包含该分模型对每个样本的计算响应度公式的分子项

print(f" Cal_Gaussian(Data, mu1, sigma1) = { Cal_Gaussian(Data, mu1, sigma1)}")

Gamma1 = alpha1 * Cal_Gaussian(Data, mu1, sigma1) # 是一个列表,保存了每个样本对高斯模型1的响应度

print(f" Cal_Gaussian(Data, mu2, sigma2) = { Cal_Gaussian(Data, mu2, sigma2)}")

Gamma2 = alpha2 * Cal_Gaussian(Data, mu2, sigma2)

# 响应度归一化

Gamma1 = Gamma1 / (Gamma1 + Gamma2)

# Gamma2 = Gamma2 / (Gamma1 + Gamma2) # 注意原来是这样写的,但是Gamma1已经在上一行变化了,不能再用来归一化

Gamma2 = 1 - Gamma1

return Gamma1, Gamma2

def M_step(Data, mu1_old, mu2_old, Gamma1, Gamma2):

"""

M步

:param Data:

:param mu1_old: 当前高斯分布的参数

:param mu2_old:

:param Gamma1: 对高斯分布1的响应度

:param Gamma2: 对高斯分布2的响应度

:return: 新的高斯分布的参数

"""

# 计算新的参数mu

mu1_new = np.dot(Gamma1, Data) / np.sum(Gamma1)

mu2_new = np.dot(Gamma2, Data) / np.sum(Gamma2)

# 计算新的参数sigma

# sigma1_new = np.sqrt(np.dot(Gamma1, np.square(Data - mu1_old)) / np.sum(Gamma1))

sigma1_new = np.sqrt(np.dot(Gamma1, np.square(Data - mu1_new)) / np.sum(Gamma1))

# sigma2_new = np.sqrt(np.dot(Gamma2, np.square(Data - mu2_old)) / np.sum(Gamma2))

sigma2_new = np.sqrt(np.dot(Gamma2, np.square(Data - mu2_new)) / np.sum(Gamma2))

# 计算新的参数alpha

m = len(Data)

alpha1_new = np.sum(Gamma1) / m

alpha2_new = np.sum(Gamma2) / m

return mu1_new, sigma1_new, alpha1_new, mu2_new, sigma2_new, alpha2_new

def EM(Data, mu1, sigma1, alpha1, mu2, sigma2, alpha2, itertime):

"""

EM算法

:param Data:

:param mu1:

:param sigma1:

:param alpha1:

:param mu2:

:param sigma2:

:param alpha2:

:param itertime: 迭代次数

:return: 模型中分模型的参数

"""

# 迭代

for i in range(itertime):

print("************************************************")

print(f"\n\n第{i}次迭代")

# 计算响应度,E步

Gamma1, Gamma2 = E_step(Data, mu1, sigma1, alpha1, mu2, sigma2, alpha2)

print(f"对1类的隶属度 = {Gamma1}, \n对2类的隶属度 = {Gamma2}")

# 更新参数,M步

mu1, sigma1, alpha1, mu2, sigma2, alpha2 \

= M_step(Data, mu1, mu2, Gamma1, Gamma2)

print(f"mu1={mu1}, sigma1={sigma1}, alpha1={alpha1},\n"

f"mu2={mu2}, sigma2={sigma2}, alpha2={alpha2}")

return mu1, sigma1, alpha1, mu2, sigma2, alpha2

if __name__ == '__main__':

# 生成数据

Data = np.array([0,

0.5,

1,

2,

3,

4])

# 初始化数据

init_mu1 = 0.25

init_sigma1 = 0.25

init_theta1, init_alpha1 = [init_mu1, init_sigma1], 1/3

init_mu2 = 2.5

init_sigma2 = np.sqrt(1.25)

init_theta2, init_alpha2 = [init_mu2, init_sigma2], 2/3

itertime = 20

tol = 2e-3

# 训练模型

start = time.time()

print("start training")

model_mu1, model_sigma1, model_alpha1, model_mu2, model_sigma2, model_alpha2 = \

EM(Data, init_mu1, init_sigma1, init_alpha1, init_mu2, init_sigma2, init_alpha2, itertime)

print('end training')

end = time.time()

print('training time: ', end - start)

# print('真实参数:mu1 ', mu1, 'sigma1 ', sigma1, 'alpha1 ', alpha1, 'mu2 ', mu2, 'sigma2 ', sigma2, 'alpha2 ', alpha2)

print('模型参数:mu1 ', model_mu1, 'sigma1 ', model_sigma1, 'alpha1 ', model_alpha1, 'mu2 ', model_mu2, 'sigma2 ',

model_sigma2, 'alpha2 ', model_alpha2)

参考:

[1]《Dempster A P . Maximum likelihood from incomplete data via the EM algorithm[J]. Journal of the Royal Statistical Society, 1977, 39.》

[2]《高斯混合模型(GMM)》

[3]《EM算法python复现 - 高斯混合模型》

![[LeetCode周赛复盘] 第 92 场双周赛20221015](https://img-blog.csdnimg.cn/fe90b8cf343c48c7a75815560d3be358.png)