基于Docker和YARN的大数据环境部署实践

目的

本操作手册旨在指导用户通过Docker容器技术,快速搭建一个完整的大数据环境。该环境包含以下核心组件:

- Hadoop HDFS/YARN(分布式存储与资源调度)

- Spark on YARN(分布式计算)

- Kafka(消息队列)

- Hive(数据仓库)

- JupyterLab(交互式开发环境)

通过清晰的步骤说明和验证方法,读者将掌握:

- 容器网络的搭建(Weave)

- Docker Compose编排文件编写技巧

- 多组件协同工作的配置要点

- 集群扩展与验证方法

整体架构

组件功能表

| 组件名称 | 功能描述 | 依赖服务 | 端口配置 | 数据存储 |

|---|---|---|---|---|

| Hadoop NameNode | HDFS元数据管理 | 无 | 9870 (Web UI), 8020 | Docker卷: hadoop_namenode |

| Hadoop DataNode | HDFS数据存储节点 | NameNode | 9864 (数据传输) | 本地卷或Docker卷 |

| YARN ResourceManager | 资源调度与管理 | NameNode | 8088 (Web UI), 8032 | 无 |

| YARN NodeManager | 单个节点资源管理 | ResourceManager | 8042 (Web UI) | 无 |

| Spark (YARN模式) | 分布式计算框架 | YARN ResourceManager | 无 | 集成在YARN中 |

| JupyterLab | 交互式开发环境 | Spark, YARN | 8888 (Web UI) | 本地目录挂载 |

| Kafka | 分布式消息队列 | ZooKeeper | 9092 (Broker) | Docker卷:kafka_data、kafka_logs |

| Hive | 数据仓库服务 | HDFS, MySQL | 10000 (HiveServer2) | MySQL存储元数据 |

| MySQL | 存储Hive元数据 | 无 | 3306 | Docker卷: mysql_data |

| ZooKeeper | 分布式协调服务(Kafka依赖) | 无 | 2181 | Docker卷:zookeeper_data |

关键交互流程

-

数据存储:

- HDFS通过NameNode管理元数据,DataNode存储实际数据。

- JupyterLab通过挂载本地目录访问数据,同时可读写HDFS。

-

资源调度:

- Spark作业通过YARN ResourceManager申请资源,由NodeManager执行任务。

-

数据处理:

- Kafka接收实时数据流,Spark消费后进行实时计算。

- Hive通过HDFS存储表数据,元数据存储在MySQL。

环境搭建步骤

1. 容器网络准备(Weave)

# 安装Weave网络插件

sudo curl -L git.io/weave -o /usr/local/bin/weave

sudo chmod +x /usr/local/bin/weave

# 启动Weave网络

weave launch

# 验证网络状态

weave status

#在其他节点上运行

weave launch 主节点IP

2. Docker Compose编排文件

创建 docker-compose.yml,核心配置如下:

version: "3.8"

services:

# ZooKeeper

zookeeper-1:

image: bitnami/zookeeper:3.8.0

privileged: true #使用二进制文件安装的docker需要开启特权模式,每个容器都需要开启该模式

container_name: zookeeper-1

hostname: zookeeper-1

ports:

- "2181:2181"

environment:

- ALLOW_ANONYMOUS_LOGIN=yes

- TZ=Asia/Shanghai

volumes:

- zookeeper_data:/bitnami/zookeeper

networks:

- bigdata-net

dns:

- 172.17.0.1

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# Kafka

kafka-1:

image: bitnami/kafka:3.3.1

container_name: kafka-1

hostname: kafka-1

environment:

- KAFKA_BROKER_ID=1

- KAFKA_CFG_ZOOKEEPER_CONNECT=zookeeper-1:2181

- ALLOW_PLAINTEXT_LISTENER=yes

- TZ=Asia/Shanghai

ports:

- "9092:9092"

volumes:

- kafka_data:/bitnami/kafka # Kafka数据持久化

- kafka_logs:/kafka-logs # 独立日志目录

depends_on:

- zookeeper-1

networks:

- bigdata-net

dns:

- 172.17.0.1

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# Hadoop HDFS

hadoop-namenode:

image: bde2020/hadoop-namenode:2.0.0-hadoop3.2.1-java8

container_name: hadoop-namenode

hostname: hadoop-namenode

environment:

- CLUSTER_NAME=bigdata

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020

- HDFS_CONF_dfs_replication=2

- TZ=Asia/Shanghai

ports:

- "9870:9870"

- "8020:8020"

networks:

- bigdata-net

dns:

- 172.17.0.1

volumes:

- hadoop_namenode:/hadoop/dfs/name

restart: always

hadoop-datanode:

image: bde2020/hadoop-datanode:2.0.0-hadoop3.2.1-java8

container_name: hadoop-datanode

hostname: hadoop-datanode

environment:

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020

- HDFS_CONF_dfs_replication=2

- TZ=Asia/Shanghai

depends_on:

- hadoop-namenode

networks:

- bigdata-net

dns:

- 172.17.0.1

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# YARN

hadoop-resourcemanager:

image: bde2020/hadoop-resourcemanager:2.0.0-hadoop3.2.1-java8

container_name: hadoop-resourcemanager

hostname: hadoop-resourcemanager

ports:

- "8088:8088" # YARN Web UI

environment:

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020

- YARN_CONF_yarn_resourcemanager_hostname=hadoop-resourcemanager

- TZ=Asia/Shanghai

depends_on:

- hadoop-namenode

networks:

- bigdata-net

dns:

- 172.17.0.1

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

hadoop-nodemanager:

image: bde2020/hadoop-nodemanager:2.0.0-hadoop3.2.1-java8

container_name: hadoop-nodemanager

hostname: hadoop-nodemanager

environment:

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020

- YARN_CONF_yarn_resourcemanager_hostname=hadoop-resourcemanager

- TZ=Asia/Shanghai

depends_on:

- hadoop-resourcemanager

networks:

- bigdata-net

dns:

- 172.17.0.1

volumes:

- ./hadoop-conf/yarn-site.xml:/etc/hadoop/yarn-site.xml # 挂载主节点的Hadoop配置文件,用于上报内存与cpu核心数

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# Hive

hive:

image: bde2020/hive:2.3.2

container_name: hive

hostname: hive

environment:

- HIVE_METASTORE_URI=thrift://hive:9083

- SERVICE_PRECONDITION=hadoop-namenode:8020,mysql:3306

- TZ=Asia/Shanghai

ports:

- "10000:10000"

- "9083:9083"

depends_on:

- hadoop-namenode

- mysql

networks:

- bigdata-net

dns:

- 172.17.0.1

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# MySQL

mysql:

image: mysql:8.0

container_name: mysql

environment:

- MYSQL_ROOT_PASSWORD=root

- MYSQL_DATABASE=metastore

- TZ=Asia/Shanghai

ports:

- "3306:3306"

networks:

- bigdata-net

dns:

- 172.17.0.1

volumes:

- mysql_data:/var/lib/mysql

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

# JupyterLab(集成Spark on YARN)

jupyter:

image: jupyter/all-spark-notebook:latest

container_name: jupyter-lab

environment:

- JUPYTER_ENABLE_LAB=yes

- TZ=Asia/Shanghai

- SPARK_OPTS="--master yarn --deploy-mode client" # 默认使用YARN模式

- HADOOP_CONF_DIR=/etc/hadoop/conf # 必须定义

- YARN_CONF_DIR=/etc/hadoop/conf # 必须定义

ports:

- "8888:8888"

volumes:

- ./notebooks:/home/jovyan/work

- /path/to/local/data:/data

- ./hadoop-conf:/etc/hadoop/conf # 挂载Hadoop配置文件,./hadoop-conf代表在docker-compose.yml同目录下的hadoop-conf

networks:

- bigdata-net

dns:

- 172.17.0.1

depends_on:

- hadoop-resourcemanager

- hadoop-namenode

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "7"

volumes:

hadoop_namenode:

mysql_data:

zookeeper_data:

kafka_data:

kafka_logs:

hadoop-nodemanager:

networks:

bigdata-net:

external: true

name: weave

Hadoop配置文件

yarn-site.xml:

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop-resourcemanager</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop-resourcemanager:8032</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

core-site.xml:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-namenode:8020</value>

</property>

</configuration>

将这两个文件放置到hadoop-conf目录下。

3. 启动服务

# 启动容器

docker-compose up -d

# 查看容器状态

docker-compose ps

4. 验证服务是否可用

验证HDFS

(1) 访问HDFS Web UI

-

操作:浏览器打开

http://localhost:9870。 -

预期结果:

Overview 页面显示HDFS总容量。

Datanodes 显示至少1个活跃节点(对应

hadoop-datanode容器)。

(2) 命令行操作HDFS

docker exec -it hadoop-namenode bash

# 创建测试目录

hdfs dfs -mkdir /test

# 上传本地文件

echo "hello hdfs" > test.txt

hdfs dfs -put test.txt /test/

# 查看文件

hdfs dfs -ls /test

#解除安全模式

hdfs dfsadmin -safemode leave

- 预期结果:成功创建目录、上传文件并列出文件。

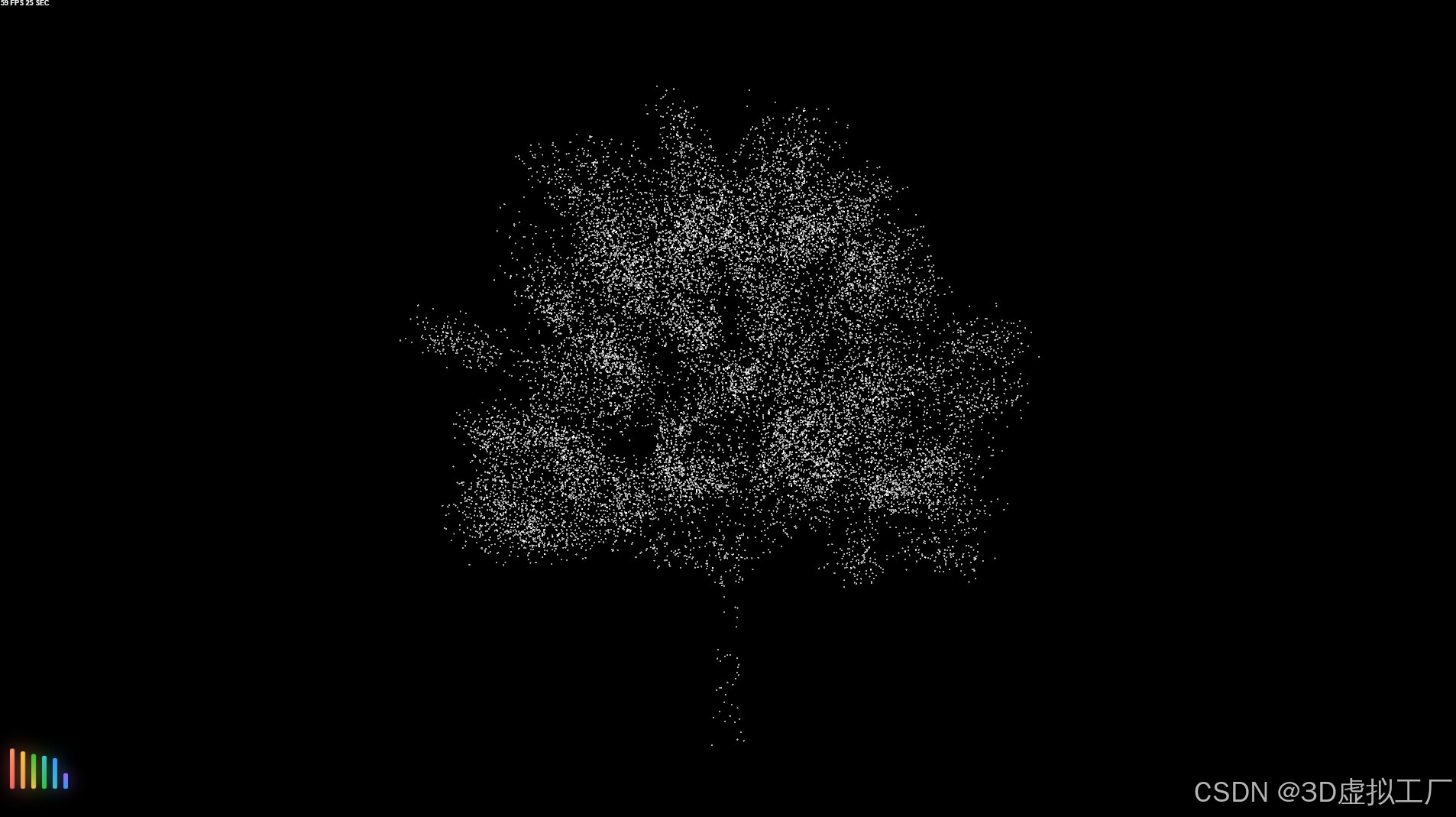

如图所示:

** 验证YARN**

(1) 访问YARN ResourceManager Web UI

- 操作:浏览器打开

http://localhost:8088。 - 预期结果:

- Cluster Metrics 显示总资源(如内存、CPU)。

- Nodes 显示至少1个NodeManager(对应

hadoop-nodemanager容器)。

(2) 提交测试作业到YARN

# 进入Jupyter容器提交Spark作业

docker exec -it jupyter-lab bash

# 提交Spark Pi示例作业

spark-submit --master yarn --deploy-mode client --class org.apache.spark.examples.SparkPi /usr/local/spark/examples/jars/spark-examples_*.jar 10

- 预期结果:

- 作业输出中包含

Pi is roughly 3.14。 - 在YARN Web UI (

http://localhost:8088) 中看到作业状态为 SUCCEEDED。

- 作业输出中包含

如图所示:

若是出现报错:Permission denied: user=jovyan, access=WRITE, inode=“/user”:root:supergroup:drwxr-xr-x

报错原因:

当前运行 Spark 的用户是:jovyan(Jupyter 默认用户);

Spark 提交任务后,会自动尝试在 HDFS 上创建目录 /user/jovyan;

但是:这个目录不存在,或者 /user 目录不允许 jovyan 写入;

所以 HDFS 拒绝创建临时目录,导致整个作业提交失败;

解决方法

创建目录并赋权

进入NameNode 容器:

docker exec -it hadoop-namenode bash

然后执行 HDFS 命令:

hdfs dfs -mkdir -p /user/jovyan

hdfs dfs -chown jovyan:supergroup /user/jovyan

这一步允许 jovyan 用户有权写入自己的临时目录。

提示:可以先执行

hdfs dfs -ls /user看是否有jovyan子目录。

最后:再次执行 spark-submit 后,可以看到

-

控制台打印:

Submitting application application_xxx to ResourceManager -

YARN 8088 页面:

- 出现作业记录;

- 状态为

RUNNING或FINISHED

验证Spark on YARN(通过JupyterLab)

(1) 访问JupyterLab

- 操作:浏览器打开

http://localhost:8888,使用Token登录(通过docker logs jupyter-lab获取Token)。 - 预期结果:成功进入JupyterLab界面。

(2) 运行PySpark代码

在Jupyter中新建Notebook,执行以下代码:

from pyspark.sql import SparkSession

spark = SparkSession.builder \

.appName("jupyter-yarn-test") \

.getOrCreate()

# 测试Spark Context

print("Spark Version:", spark.version)

print("YARN Cluster Mode:", spark.sparkContext.master)

# 读取HDFS文件

df = spark.read.text("hdfs://hadoop-namenode:8020/test/test.txt")

df.show()

# 读取数据

local_df = spark.read.csv("/data/example.csv", header=True) # 替换为实际文件路径

local_df.show()

- 预期结果:

- 输出Spark版本和YARN模式(如

yarn)。 - 成功读取HDFS文件并显示内容

hello hdfs。 - 成功读取CSV文件(需提前放置测试文件)。

- 输出Spark版本和YARN模式(如

如图所示:

验证Hive

(1) 创建Hive表并查询

#使用docker cp命令将jdbc驱动放入容器内部,示例:

docker cp mysql-connector-java-8.0.12.jar 容器ID或容器名称:/opt/hive/lib

docker exec -it hive bash

#重新初始化 Hive Metastore

schematool -dbType mysql -initSchema --verbose

#查询MetaStore运行状态

ps -ef | grep MetaStore

# 启动Hive Beeline客户端

beeline -u jdbc:hive2://localhost:10000 -n root

//驱动下载链接:https://downloads.mysql.com/archives/c-j/

若是执行上述命令报错,可以按照以下步骤来进行更改

1、配置hive-site.xml文件:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

--><configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://192.168.0.78:3306/metastore_db?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<!-- Metastor-->rash

<property>

<name>hive.metastore.uris</name>

<value>thrift://localhost:9083</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>0.0.0.0</value>

</property>

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

</configuration>

//将此配置使用docker cp命令拷贝至hive容器内的/opt/hive/conf目录下。

2、配置core-site.xml文件:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property><name>fs.defaultFS</name><value>hdfs://hadoop-namenode:8020</value></property>

</configuration>

//hadoop-namenode容器IP可以在宿主机执行weave ps 命令获取,配置文件修改完毕后通过docker cp命令将文件拷贝至hive容器内的/opt/hadoop-2.7.4/etc/hadoop目录与/opt/hive/conf目录。

启动metastore

#在hive容器内部执行

hive --service metastore &

启动hiveserver2

#在hive容器内部执行(执行此命令需要先关闭hadoo-namenode的安全模式)

hive --service hiveserver2 --hiveconf hive.root.logger=DEBUG,console

执行HQL:

CREATE TABLE test_hive (id INT, name STRING);

INSERT INTO test_hive VALUES (1, 'hive-test');

SELECT * FROM test_hive;

- 预期结果:输出

1, hive-test。

(2) 验证MySQL元数据

docker exec -it mysql mysql -uroot -proot

use metastore_db;

SELECT TBL_NAME FROM TBLS;

- 预期结果:显示

test_hive表名。

如图所示:

验证Kafka

(1) 生产与消费消息

docker exec -it kafka-1 bash

# 创建主题

kafka-topics.sh --create --topic test-topic --bootstrap-server localhost:9092

# 生产消息

echo "hello kafka" | kafka-console-producer.sh --topic test-topic --bootstrap-server localhost:9092

# 消费消息(需另开终端)

kafka-console-consumer.sh --topic test-topic --from-beginning --bootstrap-server localhost:9092

- 预期结果:消费者终端输出

hello kafka。

如图所示:

** 验证本地数据挂载**

在JupyterLab中:

- 左侧文件浏览器中检查

/home/jovyan/work(对应本地./notebooks目录)。 - 检查

/data目录是否包含本地挂载的文件(例如/path/to/local/data中的内容)。

子节点设置

version: "3.8"

services:

# HDFS DataNode 服务

hadoop-datanode:

image: bde2020/hadoop-datanode:2.0.0-hadoop3.2.1-java8

privileged: true #使用二进制文件安装的docker需要开启特权模式,每个容器都需要开启该模式

container_name: hadoop-datanode-2 # 子节点容器名称唯一(例如按编号命名)

hostname: hadoop-datanode-2

environment:

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020 # 指向主节点NameNode

- HDFS_CONF_dfs_replication=2

- TZ=Asia/Shanghai

networks:

- bigdata-net

dns:

- 172.17.0.1

volumes:

- ./hadoop-conf:/etc/hadoop/conf # 挂载主节点的Hadoop配置文件

restart: always

# extra_hosts:

# - "hadoop-namenode:10.32.0.32"

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "5"

# YARN NodeManager 服务

hadoop-nodemanager:

image: bde2020/hadoop-nodemanager:2.0.0-hadoop3.2.1-java8

privileged: true #使用二进制文件安装的docker需要开启特权模式,每个容器都需要开启该模式

container_name: hadoop-nodemanager-2 # 子节点容器名称唯一

hostname: hadoop-nodemanager-2

environment:

- YARN_CONF_yarn_resourcemanager_hostname=hadoop-resourcemanager # 指向主节点ResourceManager

- CORE_CONF_fs_defaultFS=hdfs://hadoop-namenode:8020

- TZ=Asia/Shanghai

networks:

- bigdata-net

dns:

- 172.17.0.1

volumes:

- ./hadoop-conf/yarn-site.xml:/etc/hadoop/yarn-site.xml # 挂载主节点的Hadoop配置文件,用于上报内存与cpu核心数

depends_on:

- hadoop-datanode # 确保DataNode先启动(可选)

restart: always

logging:

driver: "json-file"

options:

max-size: "100m"

max-file: "5"

# 共享网络配置(必须与主节点一致)

networks:

bigdata-net:

external: true

name: weave # 使用主节点创建的Weave网络

yarn配置文件

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop-resourcemanager</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop-resourcemanager:8032</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>8</value>

</property>

</configuration>

Ps:内存大小和cpu核心数需要按照实际情况填写。

#######################################################################################

今日推荐

小说:《异种的营养是牛肉的六倍?》

简介:【异种天灾】+【美食】+【日常】 变异生物的蛋白质是牛肉的几倍? 刚刚来到这个世界,刘笔就被自己的想法震惊到了。 在被孢子污染后的土地上觉醒了神厨系统,是不是搞错了什么?各种变异生物都能做成美食吗? 那就快端上来罢! 安全区边缘的特色美食饭店,有点非常规的温馨美食日常。