Learning Roadmap:

Section 1: Intro to Parallel Programming & MUSA

- Deep Learning Ecosystem(摩尔线程 国产显卡 MUSA 并行编程 学习笔记-2024/11/30-CSDN博客)

- Ubuntu+Driver+Toolkit+conda+pytorch+torch_musa环境安装(2024/11/24-Ubuntu Windows双系统安装 | 2024/11/30-GPU驱动&MUSA Toolkit安装)

- C/C++ Review(摩尔线程国产显卡 MUSA 并行编程学习笔记-2024/11/22-CSDN博客)

- GPU intros(摩尔线程国产显卡 MUSA 并行编程学习笔记-2024/11/25-CSDN博客)

- GPU硬件架构 (摩尔线程国产显卡 MUSA 并行编程学习笔记-2024/11/26-CSDN博客)

- Write First Kernels (Here) (2024/11/27-线程层级 | 2024/11/28-First MUSA Kernel to Count Thread | 2024/12/02-向量相加)

- MUSA API

- Faster Matrix Multiplication

- Triton

- Pytorch Extensions(摩尔线程国产显卡 MUSA 并行编程学习笔记-2024/11/21-CSDN博客)

- MNIST Multilayer Perceptron

Section 2: Parallel Programming & MUSA in Depth

- Analyzing Parallel Program Performance on a Quad-Core CPU

- Scheduling Task Graphs on a Multi-Core CPU

- A Simple Renderer in MUSA

- Optimizing DNN Performance on DNN Accelerator Hardware

- llm.c

Ref:摩尔学院 | High-Performance Computing with GPUs | Stanford CS149 - Video | Stanford CS149 - Syllabus

Kernel to Add Vector (3D)

Ref: High-Performance Computing with GPUs Chapter 5

下面的代码将用CPU与GPU分别对两个长度为1000万的向量进行相加,并计算对应的平均耗时,其中GPU相加分别采用了两种Kernel,其中一个Kernel定义了三维的Block和Grid,另一个Kernel则使用了一维的Block和Grid

代码地址

MUSA PLAY GROUND - Github

代码

#include <stdio.h>

#include <stdlib.h>

#include <time.h>

#include <musa_runtime.h>

#include <math.h>

#include <iostream>

#define N 10000000 // Vector size = 10 million

#define BLOCK_SIZE_1D 1024

#define BLOCK_SIZE_3D_X 16

#define BLOCK_SIZE_3D_Y 8

#define BLOCK_SIZE_3D_Z 8

// 16 * 16 * 8 = 2048

// CPU vector addition

void vector_add_cpu(float *a, float *b, float *c, int n) {

for (int i = 0; i < n; i++) {

c[i] = a[i] + b[i];

}

}

// MUSA kernel for 1D vector addition

__global__ void vector_add_gpu_1d(float *a, float *b, float *c, int n) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

// one add, one multiply, one store

if (i < n) {

c[i] = a[i] + b[i];

// one add, one store

}

}

// MUSA kernel for 3D vector addition

__global__ void vector_add_gpu_3d(float *a, float *b, float *c, int nx, int ny, int nz) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

int j = blockIdx.y * blockDim.y + threadIdx.y;

int k = blockIdx.z * blockDim.z + threadIdx.z;

// 3 adds, 3 multiplies, 3 stores

if (i < nx && j < ny && k < nz) {

int idx = i + j * nx + k * nx * ny;

if (idx < nx * ny * nz) {

c[idx] = a[idx] + b[idx];

}

}

// you get the point...

}

// Initialize vector with random values

void init_vector(float *vec, int n) {

for (int i = 0; i < n; i++) {

vec[i] = (float)rand() / RAND_MAX;

}

}

// Function to measure execution time

double get_time() {

struct timespec ts;

clock_gettime(CLOCK_MONOTONIC, &ts);

return ts.tv_sec + ts.tv_nsec * 1e-9;

}

int main() {

float *h_a, *h_b, *h_c_cpu, *h_c_gpu_1d, *h_c_gpu_3d;

float *d_a, *d_b, *d_c_1d, *d_c_3d;

size_t size = N * sizeof(float);

// Allocate host memory

h_a = (float*)malloc(size);

h_b = (float*)malloc(size);

h_c_cpu = (float*)malloc(size);

h_c_gpu_1d = (float*)malloc(size);

h_c_gpu_3d = (float*)malloc(size);

// Initialize vectors

srand(time(NULL));

init_vector(h_a, N);

init_vector(h_b, N);

// Allocate device memory

musaMalloc(&d_a, size);

musaMalloc(&d_b, size);

musaMalloc(&d_c_1d, size);

musaMalloc(&d_c_3d, size);

// Copy data to device

musaMemcpy(d_a, h_a, size, musaMemcpyHostToDevice);

musaMemcpy(d_b, h_b, size, musaMemcpyHostToDevice);

// Define grid and block dimensions for 1D

int num_blocks_1d = (N + BLOCK_SIZE_1D - 1) / BLOCK_SIZE_1D;

// Define grid and block dimensions for 3D

int nx = 100, ny = 100, nz = 1000; // N = 10000000 = 100 * 100 * 1000

dim3 block_size_3d(BLOCK_SIZE_3D_X, BLOCK_SIZE_3D_Y, BLOCK_SIZE_3D_Z);

dim3 num_blocks_3d(

(nx + block_size_3d.x - 1) / block_size_3d.x,

(ny + block_size_3d.y - 1) / block_size_3d.y,

(nz + block_size_3d.z - 1) / block_size_3d.z

);

// Warm-up runs

printf("Performing warm-up runs...\n");

for (int i = 0; i < 3; i++) {

vector_add_cpu(h_a, h_b, h_c_cpu, N);

vector_add_gpu_1d<<<num_blocks_1d, BLOCK_SIZE_1D>>>(d_a, d_b, d_c_1d, N);

vector_add_gpu_3d<<<num_blocks_3d, block_size_3d>>>(d_a, d_b, d_c_3d, nx, ny, nz);

musaDeviceSynchronize();

}

// Benchmark CPU implementation

printf("Benchmarking CPU implementation...\n");

double cpu_total_time = 0.0;

for (int i = 0; i < 5; i++) {

double start_time = get_time();

vector_add_cpu(h_a, h_b, h_c_cpu, N);

double end_time = get_time();

cpu_total_time += end_time - start_time;

}

double cpu_avg_time = cpu_total_time / 5.0;

// Benchmark GPU 1D implementation

printf("Benchmarking GPU 1D implementation...\n");

double gpu_1d_total_time = 0.0;

for (int i = 0; i < 100; i++) {

musaMemset(d_c_1d, 0, size); // Clear previous results

double start_time = get_time();

vector_add_gpu_1d<<<num_blocks_1d, BLOCK_SIZE_1D>>>(d_a, d_b, d_c_1d, N);

musaDeviceSynchronize();

double end_time = get_time();

gpu_1d_total_time += end_time - start_time;

}

double gpu_1d_avg_time = gpu_1d_total_time / 100.0;

// Verify 1D results immediately

musaMemcpy(h_c_gpu_1d, d_c_1d, size, musaMemcpyDeviceToHost);

bool correct_1d = true;

for (int i = 0; i < N; i++) {

if (fabs(h_c_cpu[i] - h_c_gpu_1d[i]) > 1e-4) {

correct_1d = false;

std::cout << i << " cpu: " << h_c_cpu[i] << " != " << h_c_gpu_1d[i] << std::endl;

break;

}

}

printf("1D Results are %s\n", correct_1d ? "correct" : "incorrect");

// Benchmark GPU 3D implementation

printf("Benchmarking GPU 3D implementation...\n");

double gpu_3d_total_time = 0.0;

for (int i = 0; i < 100; i++) {

musaMemset(d_c_3d, 0, size); // Clear previous results

double start_time = get_time();

vector_add_gpu_3d<<<num_blocks_3d, block_size_3d>>>(d_a, d_b, d_c_3d, nx, ny, nz);

musaDeviceSynchronize();

double end_time = get_time();

gpu_3d_total_time += end_time - start_time;

}

double gpu_3d_avg_time = gpu_3d_total_time / 100.0;

// Verify 3D results immediately

musaMemcpy(h_c_gpu_3d, d_c_3d, size, musaMemcpyDeviceToHost);

bool correct_3d = true;

for (int i = 0; i < N; i++) {

if (fabs(h_c_cpu[i] - h_c_gpu_3d[i]) > 1e-4) {

correct_3d = false;

std::cout << i << " cpu: " << h_c_cpu[i] << " != " << h_c_gpu_3d[i] << std::endl;

break;

}

}

printf("3D Results are %s\n", correct_3d ? "correct" : "incorrect");

// Print results

printf("CPU average time: %f milliseconds\n", cpu_avg_time * 1000);

printf("GPU 1D average time: %f milliseconds\n", gpu_1d_avg_time * 1000);

printf("GPU 3D average time: %f milliseconds\n", gpu_3d_avg_time * 1000);

printf("Speedup (CPU vs GPU 1D): %fx\n", cpu_avg_time / gpu_1d_avg_time);

printf("Speedup (CPU vs GPU 3D): %fx\n", cpu_avg_time / gpu_3d_avg_time);

printf("Speedup (GPU 1D vs GPU 3D): %fx\n", gpu_1d_avg_time / gpu_3d_avg_time);

// Free memory

free(h_a);

free(h_b);

free(h_c_cpu);

free(h_c_gpu_1d);

free(h_c_gpu_3d);

musaFree(d_a);

musaFree(d_b);

musaFree(d_c_1d);

musaFree(d_c_3d);

return 0;

}编译

mcc 01_vector_add_v2.mu -o vector_add_v2 -mtgpu -O2 -lmusart

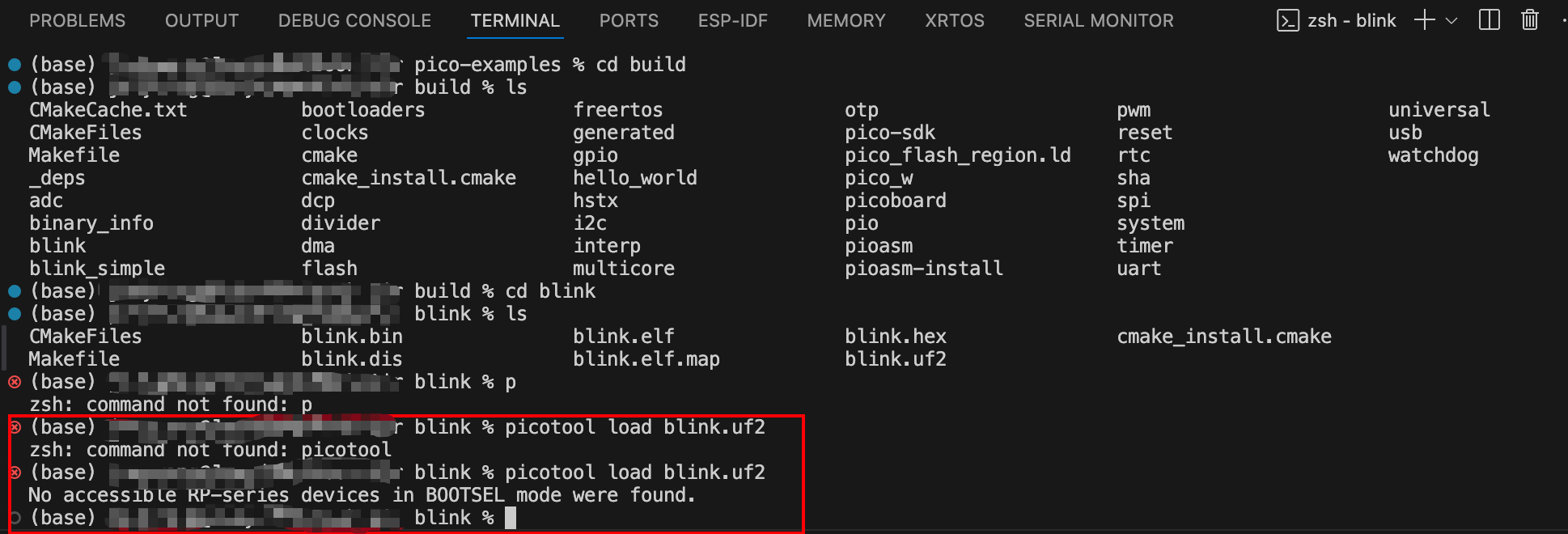

./vector_add_v2输出结果

如图所示,结果输出了CPU与GPU 对于长度为1000万的两个向量的相加,20次的平均速度,并验证了结果的准确性,可以看到通过定义3D block & grid的GPU Kernel不如定义了1D block & grid的 GPU Kernel的速度

Notes

如无必要,定义1D Block就可以

- 这里可以看到相比定义三维Grid & Block Kernel所需要的3次add, 3次multiplies,3次stores,通过1D Gird & Block 的Kernel只需要1次Add, mutiply 和Store,并且整个代码逻辑上要清晰很多,如果Kernel不是一定要计算三维强相关的任务时,定义1D block & grid在计算与简洁性上均有优势

// MUSA kernel for 1D vector addition

__global__ void vector_add_gpu_1d(float *a, float *b, float *c, int n) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

// one add, one multiply, one store

if (i < n) {

c[i] = a[i] + b[i];

// one add, one store

}

}

// MUSA kernel for 3D vector addition

__global__ void vector_add_gpu_3d(float *a, float *b, float *c, int nx, int ny, int nz) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

int j = blockIdx.y * blockDim.y + threadIdx.y;

int k = blockIdx.z * blockDim.z + threadIdx.z;

// 3 adds, 3 multiplies, 3 stores

if (i < nx && j < ny && k < nz) {

int idx = i + j * nx + k * nx * ny;

if (idx < nx * ny * nz) {

c[idx] = a[idx] + b[idx];

}

}

// you get the point...

}

![第二部分:基础知识 6.函数 --[JavaScript 新手村:开启编程之旅的第一步]](https://i-blog.csdnimg.cn/direct/471ee50bacee49c4a7c64573fa3c7975.png#pic_center)