
Generative AI Course, Lecture 3: Can't Train the AI? You Can Train Yourself (CSDN Blog)

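We want you to design a chatbot service with which you can play a role-playing game using a language model (LM).

Have a multi-turn conversation with the LM:

Prompt: tell the chatbot to play a character of your choice.

Follow-up inputs: interact with the chatbot.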
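The chat API does not remember earlier turns, so a multi-turn conversation works by re-sending the whole history on every call. The sketch below flattens the history of (user, bot) pairs into a `messages` list, with the role-play prompt as the first user turn, as the assignment code does with `first_dialogue`. `build_messages` and `fake_model` are hypothetical names, and `fake_model` stands in for a real API call so the example runs offline.

```python
from typing import Dict, List, Tuple

def build_messages(history: List[Tuple[str, str]], user_input: str) -> List[Dict[str, str]]:
    """Flatten (user, bot) pairs into alternating user/assistant messages,
    then append the new user input as the last message."""
    messages: List[Dict[str, str]] = []
    for user_text, bot_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": bot_text})
    messages.append({"role": "user", "content": user_input})
    return messages

def fake_model(messages: List[Dict[str, str]]) -> str:
    """Hypothetical stand-in for the LM: reports how many messages it received."""
    return f"(saw {len(messages)} message(s))"

# Turn 0 is the role-play prompt; the later turns are ordinary follow-up inputs.
history: List[Tuple[str, str]] = []
for turn in ["You are a pirate captain. Stay in character.", "Where are we sailing?", "Any treasure?"]:
    messages = build_messages(history, turn)
    history.append((turn, fake_model(messages)))

for user_text, bot_text in history:
    print(f"User: {user_text}\nBot: {bot_text}")
```

In round n (counting the prompt as round 0) the model receives 2n + 1 messages, so the role-play prompt keeps steering the character on every call but also costs tokens every time.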

Part 2: Role-Play

In this task, you are asked to prompt your chatbot into playing a role-playing game. Assign it a character, then prompt it to play that character.

You need to:

- Come up with a character you want the chatbot to play and a prompt that turns the chatbot into that character. Fill the character in character_for_chatbot, and fill the prompt in prompt_for_roleplay.

- Hit the run button. (The run button changes state once the cell has executed successfully.) A chat interface will pop up. (It may look a little different if you use dark mode.)

- Interact with the chatbot for 2 rounds. Type what you want to say in the "Input" box, then hit the "Send" button. (You can use the "Temperature" slider to control the creativity of the output.)

- If you want to change your prompt or the character, hit the run button again to stop the cell. Then go back to step 1.

- After you get the desired result, hit the "Export" button to save it. A file named part2.json will appear in the file list. Remember to download it to your own computer before it disappears.
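The exported part2.json stores the conversation under "chatbot" and the character under "description", as written by `export_roleplay`. A small round-trip sketch with made-up sample dialogue shows that JSON turns each (user, bot) tuple into a two-element list; the loading code still works because it unpacks each pair. The round count assumes the opening prompt turn does not count as one of the 2 rounds.

```python
import json
import os
import tempfile

# Made-up sample log: the first pair is the role-play prompt and the bot's opening reply,
# followed by two rounds of interaction.
chatbot = [
    ("You are a pirate captain. Stay in character.", "Arr, welcome aboard!"),
    ("Where are we sailing?", "To the Isle of Fog, matey."),
    ("Any treasure?", "Only if ye can read the map."),
]
target = {"chatbot": chatbot, "description": "Pirate captain"}

# Write to a temporary directory so a real part2.json is never overwritten
path = os.path.join(tempfile.mkdtemp(), "part2.json")
with open(path, "w", encoding="utf-8") as f:
    json.dump(target, f, ensure_ascii=False)

with open(path, "r", encoding="utf-8") as f:
    loaded = json.load(f)

# Tuples come back as lists; unpacking (user, bot) still works.
print(type(loaded["chatbot"][0]).__name__)
rounds = len(loaded["chatbot"]) - 1  # assumption: exclude the opening prompt turn
print(f"{loaded['description']}: {rounds} rounds")
```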

Note:

- If you hit the "Export" button again, the previous result will be overwritten, so make sure to download it first.

- Keep in mind that even with the exact same prompt, the output may differ from run to run.
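The Temperature slider and the note above are related. Conceptually, the model samples each token from a probability distribution, and temperature divides the logits before the softmax: low temperature sharpens the distribution, high temperature flattens it, which is why higher temperatures give more varied replies. Because the reply is sampled, the same prompt can also produce different outputs. The logits below are made up for illustration and do not come from any real model.

```python
import math
import random
from typing import List

def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn logits into probabilities, dividing by temperature first.
    temperature must be > 0; smaller values sharpen, larger values flatten."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

# Sampling: even with fixed probabilities, repeated draws can pick different tokens.
rng = random.Random(0)
probs = softmax_with_temperature(logits, 1.0)
draws = [rng.choices(range(len(logits)), weights=probs)[0] for _ in range(10)]
print(draws)
```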

The code below needs a VPN ("magic") to reach the OpenAI API, and it requires an OpenAI account.

```python
# Requires the openai and gradio packages and the OPENAI_API_KEY environment variable
import json
from typing import List, Tuple

import gradio as gr
from openai import OpenAI

client = OpenAI()  # reads the API key from OPENAI_API_KEY

# TODO: Fill in the two lines below: character_for_chatbot and prompt_for_roleplay
# The first one is the character you want your chatbot to play
# The second one is the prompt that makes the chatbot play that character
character_for_chatbot = "FILL IN YOUR CHARACTER"
prompt_for_roleplay = "FILL IN YOUR PROMPT"

# function to clear the conversation
def reset() -> List:
    return []

# function to call the model to generate a reply
def interact_roleplay(chatbot: List[Tuple[str, str]], user_input: str, temp=1.0) -> List[Tuple[str, str]]:
    '''
    * Arguments
      - chatbot: the conversation so far, a list of (user message, bot reply) tuples
      - user_input: the user input of each round of conversation
      - temp: the temperature parameter of this model. Temperature is used to control the output of the chatbot.
              The higher the temperature is, the more creative response you will get.
    '''
    try:
        # Rebuild the full history, since the API does not remember earlier turns
        messages = []
        for input_text, response_text in chatbot:
            messages.append({'role': 'user', 'content': input_text})
            messages.append({'role': 'assistant', 'content': response_text})
        messages.append({'role': 'user', 'content': user_input})

        response = client.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=messages,
            temperature=temp,
            max_tokens=200,
        )
        chatbot.append((user_input, response.choices[0].message.content))
    except Exception as e:
        print(f"Error occurred: {e}")
        chatbot.append((user_input, f"Sorry, an error occurred: {e}"))
    return chatbot

# function to export the whole conversation log
def export_roleplay(chatbot: List[Tuple[str, str]], description: str) -> None:
    '''
    * Arguments
      - chatbot: the conversation log, stored as a list of (user, bot) tuples
      - description: the character the bot is playing
    '''
    target = {"chatbot": chatbot, "description": description}
    with open("part2.json", "w") as file:
        json.dump(target, file)

# Send the role-play prompt as the first turn so the bot starts in character
first_dialogue = interact_roleplay([], prompt_for_roleplay)

# this part constructs the Gradio UI interface
with gr.Blocks() as demo:
    gr.Markdown("# Part2: Role Play\nThe chatbot wants to play a role game with you, try interacting with it!!")
    chatbot = gr.Chatbot(value=first_dialogue)
    description_textbox = gr.Textbox(label="The character the bot is playing", interactive=False, value=character_for_chatbot)
    input_textbox = gr.Textbox(label="Input", value="")
    with gr.Column():
        gr.Markdown("# Temperature\n Temperature is used to control the output of the chatbot. The higher the temperature is, the more creative response you will get.")
        temperature_slider = gr.Slider(0.0, 2.0, 1.0, step=0.1, label="Temperature")
    with gr.Row():
        sent_button = gr.Button(value="Send")
        reset_button = gr.Button(value="Reset")
    with gr.Column():
        gr.Markdown("# Save your Result.\n Once you are satisfied with the result, click the Export button to record it.")
        export_button = gr.Button(value="Export")

    sent_button.click(interact_roleplay, inputs=[chatbot, input_textbox, temperature_slider], outputs=[chatbot])
    reset_button.click(reset, outputs=[chatbot])
    export_button.click(export_roleplay, inputs=[chatbot, description_textbox])

demo.launch(debug=True)
```
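The code above sends `prompt_for_roleplay` as an ordinary first user message. A common alternative with the OpenAI Chat Completions API is to put the character instructions in a `system` message that is prepended to every request without appearing as a chat turn. The sketch below only builds the `messages` list and makes no API call; `build_roleplay_messages` is a hypothetical helper, not part of the assignment code, and whether it role-plays better than the original approach is something to test rather than assume.

```python
from typing import Dict, List, Tuple

def build_roleplay_messages(
    character_prompt: str,
    history: List[Tuple[str, str]],
    user_input: str,
) -> List[Dict[str, str]]:
    """Hypothetical helper: character instructions go in a system message,
    followed by the alternating chat history and the new user input."""
    messages = [{"role": "system", "content": character_prompt}]
    for user_text, bot_text in history:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": bot_text})
    messages.append({"role": "user", "content": user_input})
    return messages

msgs = build_roleplay_messages(
    "You are a grumpy medieval innkeeper. Never break character.",
    [("Hello!", "What do ye want? I'm busy.")],
    "Do you have a room for the night?",
)
for m in msgs:
    print(f"{m['role']:>9}: {m['content']}")
```

The resulting list could be passed as `messages=` to `client.chat.completions.create`; with this layout the first visible chat turn no longer has to be the prompt itself.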

```python
import json

import gradio as gr

# load the exported conversation log
with open("part2.json", "r") as f:
    context = json.load(f)

# traverse the conversation log and format it for grading
chatbot = context['chatbot']
role = context['description']
dialogue = ""
for i, (user, bot) in enumerate(chatbot):
    if i != 0:  # skip the first user message, which is the role-play prompt
        dialogue += f"User: {user}\n"
    dialogue += f"Bot: {bot}\n"

# this part constructs the Gradio UI interface
with gr.Blocks() as demo:
    gr.Markdown("# Part2: Role Play\nThe chatbot wants to play a role game with you, try interacting with it!!")
    chatbot = gr.Chatbot(value=context['chatbot'])
    description_textbox = gr.Textbox(label="The character the bot is playing", interactive=False, value=context['description'])
    with gr.Column():
        gr.Markdown("# Copy this part to the grading system.")
        gr.Textbox(label="role", value=role, show_copy_button=True)
        gr.Textbox(label="dialogue", value=dialogue, show_copy_button=True)

demo.launch(debug=True)
```

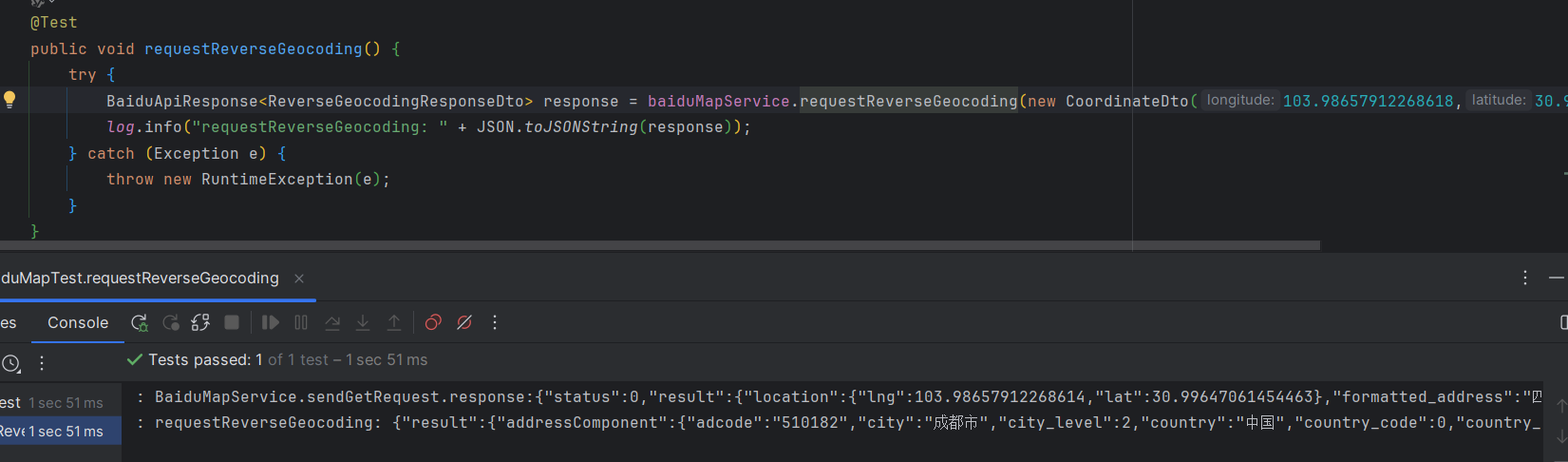

Below is a version that calls OpenAI's 'gpt-4o-mini' model through API2D, using the endpoint url = "https://openai.api2d.net/v1/chat/completions".

```python
import json

import gradio as gr
import requests

API_URL = "https://openai.api2d.net/v1/chat/completions"
API_KEY = "fk0000"  # <-- replace fk0000 with your own Forward Key


def get_response(input_text, chat_history):
    """
    Get the language model's reply given the user input and the conversation history.

    :param input_text: the text the user just entered.
    :param chat_history: the previous conversation, a list of (user message, model reply) tuples.
    :return: the updated history (once for the Chatbot, once for the State) and a formatted
             string with the model name, the reply, and the token counts; if the request
             or JSON parsing fails, the unchanged history and an error message.
    """
    headers = {
        'Content-Type': 'application/json',
        # Keep the "Bearer " prefix, with exactly one space before the key
        'Authorization': f'Bearer {API_KEY}',
    }

    # Add the earlier turns in the format the API expects: user message, then model reply
    messages = []
    for user_msg, bot_msg in chat_history:
        messages.append({'role': 'user', 'content': user_msg})
        messages.append({'role': 'assistant', 'content': bot_msg})
    # Then add the current user input
    messages.append({'role': 'user', 'content': input_text})

    data = {
        'model': 'gpt-4o-mini',  # or 'gpt-3.5-turbo'
        'messages': messages,
    }

    try:
        response = requests.post(API_URL, headers=headers, json=data, timeout=60)
        json_data = response.json()
    except (requests.RequestException, json.JSONDecodeError) as e:
        # Leave the history unchanged so the Chatbot never receives malformed data
        return chat_history, chat_history, f"Request failed: {e}"

    choices = json_data.get('choices') or []
    if not choices:
        # Error responses carry no choices; show the status code and body instead
        return chat_history, chat_history, f"Status code {response.status_code}: {json_data}"

    model_name = json_data.get('model', 'unknown model')
    assistant_content = choices[0].get('message', {}).get('content', 'no reply content')
    usage = json_data.get('usage', {})
    prompt_tokens = usage.get('prompt_tokens', 0)
    completion_tokens = usage.get('completion_tokens', 0)
    total_tokens = usage.get('total_tokens', 0)

    # Add this turn's user input and model reply to the history
    chat_history = chat_history + [(input_text, assistant_content)]
    summary = (
        f"Model: {model_name}\nReply: {assistant_content}\n"
        f"Prompt tokens: {prompt_tokens}\nCompletion tokens: {completion_tokens}\n"
        f"Total tokens: {total_tokens}"
    )
    return chat_history, chat_history, summary


# Build the Gradio interface
with gr.Blocks() as demo:
    chatbot = gr.Chatbot()
    user_input = gr.Textbox(lines=2, placeholder="Type the message you want to send")
    state = gr.State([])  # stores the conversation history, initialized as an empty list
    send_button = gr.Button("Send")
    response_box = gr.Textbox(label="Parsed response")

    # Clicking the button fetches a reply and updates both the Chatbot and the stored history
    send_button.click(
        fn=get_response,
        inputs=[user_input, state],
        outputs=[chatbot, state, response_box],
    )

demo.launch(debug=True)
```
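An error reply from an OpenAI-style endpoint (for example, one caused by a wrong Forward Key) typically carries an `error` object instead of `choices`. A minimal sketch that keeps response parsing separate from the HTTP call, so it can be checked against hand-written sample dictionaries; `parse_chat_completion` is a hypothetical helper, and the sample payloads are illustrative, following the Chat Completions response shape (`model`, `choices[0].message.content`, `usage`).

```python
from typing import Any, Dict, Tuple

def parse_chat_completion(json_data: Dict[str, Any]) -> Tuple[str, str]:
    """Return (reply_text, summary). Falls back to an error message when the
    response has no choices, e.g. an OpenAI-style {"error": {...}} body."""
    choices = json_data.get("choices") or []
    if not choices:
        error = json_data.get("error")
        if isinstance(error, dict):
            message = error.get("message", "unknown error")
        elif error:
            message = str(error)
        else:
            message = "no choices in response"
        return "", f"Error: {message}"
    reply = choices[0].get("message", {}).get("content") or ""
    usage = json_data.get("usage", {})
    summary = (
        f"Model: {json_data.get('model', 'unknown')}\n"
        f"Prompt tokens: {usage.get('prompt_tokens', 0)}\n"
        f"Completion tokens: {usage.get('completion_tokens', 0)}\n"
        f"Total tokens: {usage.get('total_tokens', 0)}"
    )
    return reply, summary

ok = {
    "model": "gpt-4o-mini",
    "choices": [{"message": {"role": "assistant", "content": "Hello!"}}],
    "usage": {"prompt_tokens": 9, "completion_tokens": 2, "total_tokens": 11},
}
bad = {"error": {"message": "Invalid API key"}}
print(parse_chat_completion(ok))
print(parse_chat_completion(bad))
```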
