Question: How do I register a custom environment in OpenAI Gym?

Background:

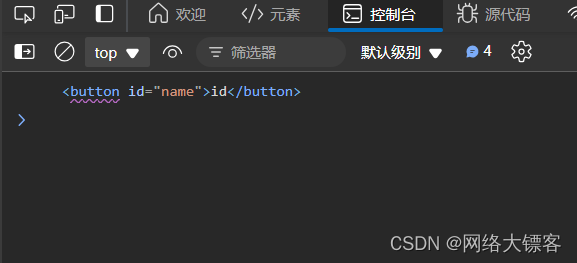

I have created a custom environment following the OpenAI Gym framework, with `step`, `reset`, action, and reward functions. I aim to run OpenAI baselines on this custom environment, but first the environment has to be registered with OpenAI Gym. How can a custom environment be registered with OpenAI Gym? Also, should I modify the OpenAI baselines code to incorporate it?


Solution:

You do not need to modify the `baselines` repo.


Here is a minimal example. Say you have `myenv.py`, with all the needed functions (`step`, `reset`, ...). The name of the environment class is `MyEnv`, and you want to add it to the `classic_control` folder. You have to:


- Place the `myenv.py` file in `gym/gym/envs/classic_control`.

- Add to `__init__.py` (located in the same folder):

  ```python
  from gym.envs.classic_control.myenv import MyEnv
  ```

-

Register the environment in

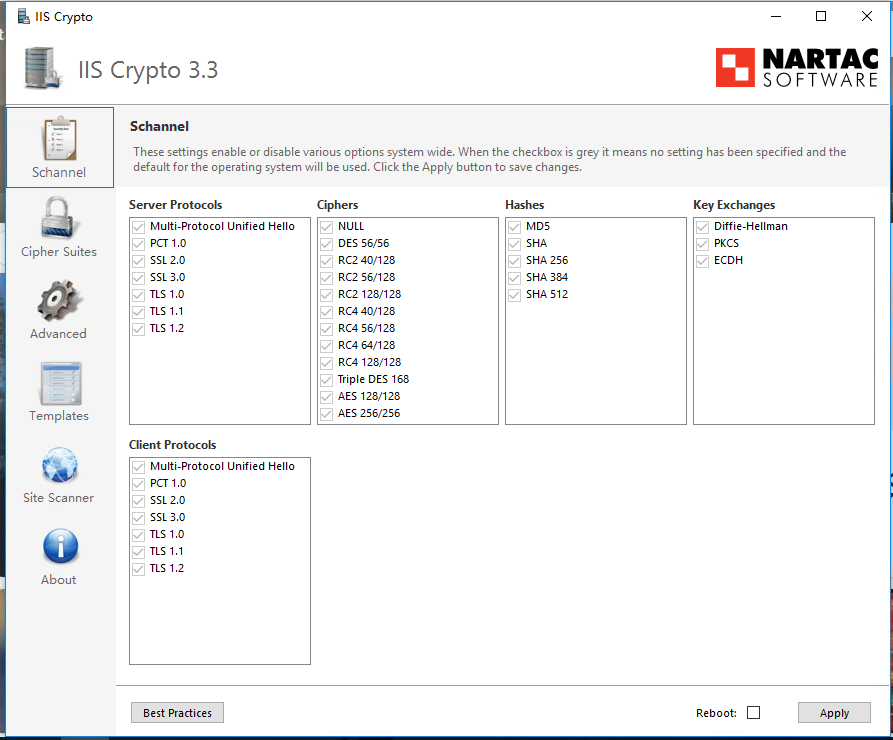

gym/gym/envs/__init__.pyby adding

在`gym/gym/envs/__init__.py`中注册环境,通过添加以下内容:

gym.envs.register(

id='MyEnv-v0',

entry_point='gym.envs.classic_control:MyEnv',

max_episode_steps=1000,

)

At registration, you can also pass `reward_threshold` and `kwargs` (the latter if your class's constructor takes arguments).
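As a rough illustration of what these two options carry, here is a minimal sketch in which `kwargs` are stored with the registration and forwarded to the constructor, while `reward_threshold` is only stored metadata (the score at which the task is considered solved). `ToySpec` and this `MyEnv` (with `size`/`goal` arguments) are hypothetical names for illustration, not Gym classes.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional


# Hypothetical, simplified registration spec (not Gym's EnvSpec).
@dataclass
class ToySpec:
    id: str
    entry_point: Callable[..., Any]
    reward_threshold: Optional[float] = None  # metadata only in this sketch
    kwargs: Dict[str, Any] = field(default_factory=dict)

    def make(self, **overrides):
        # Registered kwargs first; call-time overrides win on conflicts.
        merged = {**self.kwargs, **overrides}
        return self.entry_point(**merged)


class MyEnv:
    """Hypothetical environment whose constructor takes arguments."""

    def __init__(self, size, goal):
        self.size = size
        self.goal = goal


spec = ToySpec(id="MyEnv-v0", entry_point=MyEnv, reward_threshold=195.0,
               kwargs={"size": 3, "goal": 1.0})
env = spec.make()
print(env.size, env.goal)  # 3 1.0
env2 = spec.make(goal=2.0)
print(env2.goal)           # 2.0
```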


You can also register the environment directly in the script you will run (TRPO, PPO, or whatever) instead of in `gym/gym/envs/__init__.py`.


EDIT

Here is a minimal example that creates an LQR (linear-quadratic regulator) environment.

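For reference, the dynamics and reward implemented by `LqrEnv` can be written as follows, with $n$ = `size`, $s_0$ = `init_state`, $b$ = `state_bound`, and the clip applied element-wise. Note that the cost uses the state *before* the update:

$$
x_0 \sim \mathcal{U}\big([-s_0, s_0]^n\big), \qquad
x_{t+1} = \operatorname{clip}(x_t + u_t,\, -b,\, b), \qquad
r_t = -\left(\lVert u_t \rVert_2^2 + \lVert x_t \rVert_2^2\right)
$$

The environment never signals termination on its own (`done` is always `False`).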

Save the code below as `lqr_env.py` and place it in Gym's `classic_control` folder.


```python
import gym
from gym import spaces
from gym.utils import seeding
import numpy as np


class LqrEnv(gym.Env):
    # Written for the classic Gym API (gym <= 0.21): step() returns
    # (obs, reward, done, info) and reset() returns only the observation.
    # Public method names are used; the old _step/_reset/_seed hooks are
    # ignored by newer Gym releases.

    def __init__(self, size, init_state, state_bound):
        self.init_state = init_state
        self.size = size
        self.action_space = spaces.Box(low=-state_bound, high=state_bound, shape=(size,))
        self.observation_space = spaces.Box(low=-state_bound, high=state_bound, shape=(size,))
        self.seed()

    def seed(self, seed=None):
        self.np_random, seed = seeding.np_random(seed)
        return [seed]

    def step(self, u):
        # Quadratic cost on the action and on the current (pre-update) state.
        costs = np.sum(u**2) + np.sum(self.state**2)
        # Integrate the action and keep the state inside the observation bounds.
        self.state = np.clip(self.state + u, self.observation_space.low, self.observation_space.high)
        return self._get_obs(), -costs, False, {}

    def reset(self):
        # Sample the initial state uniformly from [-init_state, init_state]^size.
        high = self.init_state * np.ones((self.size,))
        self.state = self.np_random.uniform(low=-high, high=high)
        self.last_u = None
        return self._get_obs()

    def _get_obs(self):
        return self.state
```

Add `from gym.envs.classic_control.lqr_env import LqrEnv` to `__init__.py` (also in `classic_control`).
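To check the step logic by hand without Gym or NumPy, here is a pure-Python mirror of what `LqrEnv.step` computes for one transition. `lqr_step` is a hypothetical helper written only for illustration; it treats vectors as lists and applies the same cost and element-wise clip.

```python
import math


def lqr_step(state, u, bound):
    """Pure-Python mirror of LqrEnv.step (illustrative only).

    The cost uses the state *before* the update; the next state is
    state + u clipped element-wise to [-bound, bound].
    """
    cost = sum(ui * ui for ui in u) + sum(si * si for si in state)
    next_state = [min(max(si + ui, -bound), bound) for si, ui in zip(state, u)]
    return next_state, -cost


# One step from x = [3.0] with u = [-1.0] and an infinite bound
# (matching state_bound=np.inf in the registration below).
next_state, reward = lqr_step([3.0], [-1.0], math.inf)
print(next_state, reward)  # [2.0] -10.0
```

With a finite bound the clip becomes visible: from `[4.0]` with action `[3.0]` and bound `5.0`, the next state is `[5.0]` and the reward is `-25.0`.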


In your script, when you create the environment, do:


```python
import gym
import numpy as np

gym.envs.register(
    id='Lqr-v0',
    entry_point='gym.envs.classic_control:LqrEnv',
    max_episode_steps=150,
    kwargs={'size': 1, 'init_state': 10., 'state_bound': np.inf},
)

env = gym.make('Lqr-v0')
```
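Because `LqrEnv.step` always returns `done=False`, it is `max_episode_steps=150` that actually ends each episode: Gym wraps the environment in a time-limit wrapper when this option is set. The sketch below is a simplified, hypothetical stand-in for that wrapper (`ToyTimeLimit` and `NeverDoneEnv` are invented names, not Gym's classes), using the same classic 4-tuple `step` API as `LqrEnv`.

```python
class NeverDoneEnv:
    """Stand-in env with the classic 4-tuple step API that never terminates."""

    def reset(self):
        return 0.0

    def step(self, action):
        return 0.0, -1.0, False, {}


class ToyTimeLimit:
    """Simplified time limit: force done=True after max_episode_steps steps."""

    def __init__(self, env, max_episode_steps):
        self.env = env
        self.max_episode_steps = max_episode_steps
        self._elapsed = 0

    def reset(self):
        self._elapsed = 0
        return self.env.reset()

    def step(self, action):
        obs, reward, done, info = self.env.step(action)
        self._elapsed += 1
        if self._elapsed >= self.max_episode_steps:
            done = True
        return obs, reward, done, info


env = ToyTimeLimit(NeverDoneEnv(), max_episode_steps=150)
env.reset()
steps, done = 0, False
while not done:
    _, _, done, _ = env.step(action=None)
    steps += 1
print(steps)  # 150
```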