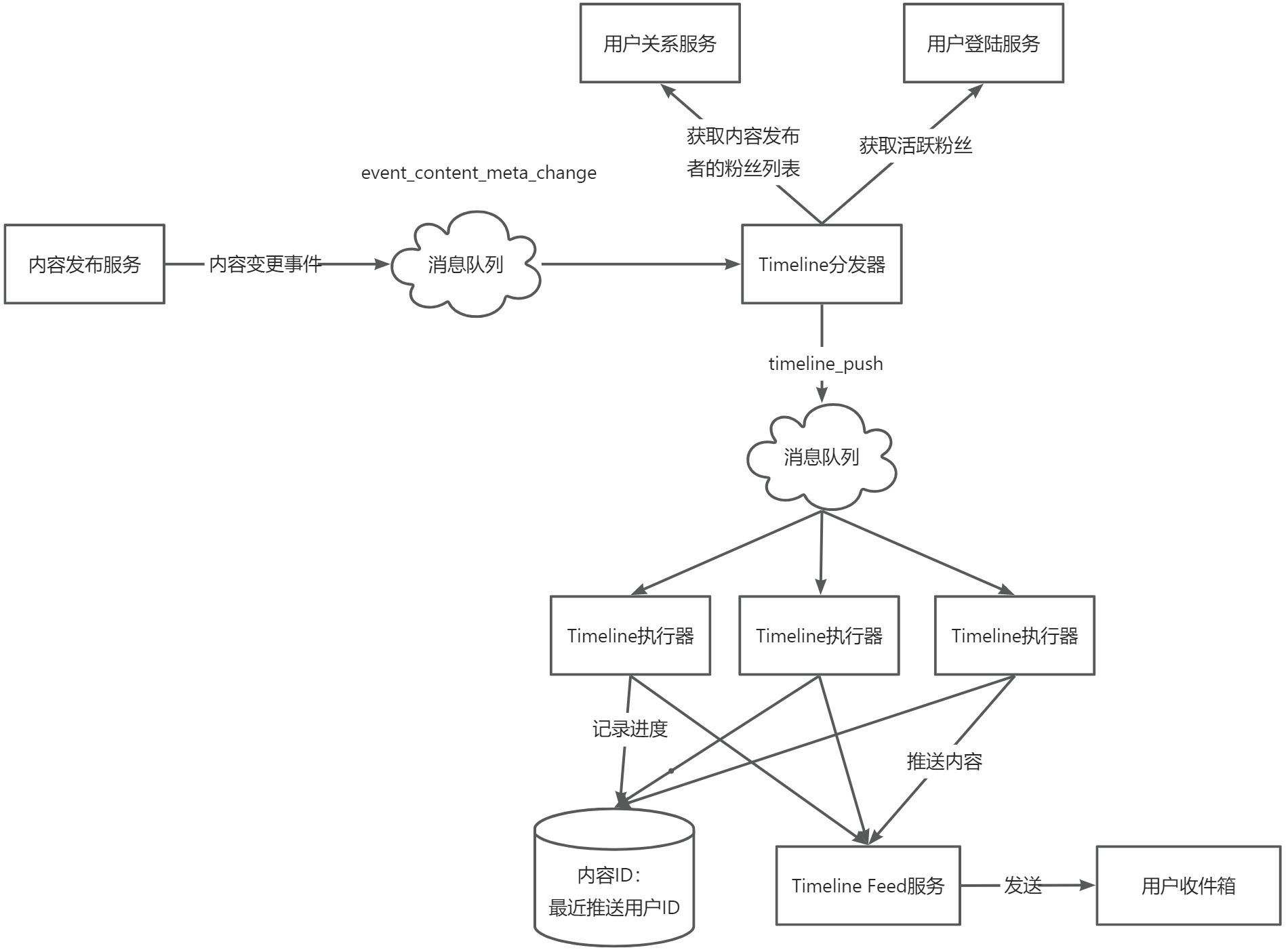

Contents

1. Dataset format

2. Training code

3. Network definition

4. Training results

A quick note to myself so I can find this again later.

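1. Dataset format

Each line of a label .txt file describes one object: the class index, the box center x and y, the box width and height, then for every keypoint its x, its y, and its visibility flag.

<class-index> <x> <y> <width> <height> <px1> <py1> <p1-visibility> <px2> <py2> <p2-visibility> ... <pxn> <pyn> <pn-visibility>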

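To make the field layout concrete, here is a minimal sketch of a parser for one label line. The helper name `parse_pose_label` and the `PoseLabel` type are hypothetical (they are not part of Ultralytics), and the sample line uses only two keypoints to stay short. It assumes whitespace-separated fields and checks that the field count equals 5 + number of keypoints × dims, which for `kpt_shape: [17, 3]` is 56.

```python
from typing import List, NamedTuple, Tuple


class PoseLabel(NamedTuple):
    """One object from a pose label file (hypothetical container)."""
    cls: int
    box: Tuple[float, ...]              # x_center, y_center, width, height
    keypoints: List[Tuple[float, ...]]  # one (x, y, visibility) tuple per keypoint


def parse_pose_label(line: str, num_kpts: int = 17, ndim: int = 3) -> PoseLabel:
    """Split one label line into class index, box, and keypoints."""
    fields = line.split()
    expected = 5 + num_kpts * ndim  # class + 4 box values + keypoint values
    if len(fields) != expected:
        raise ValueError(f"expected {expected} fields, got {len(fields)}")
    cls = int(fields[0])
    box = tuple(float(v) for v in fields[1:5])
    values = [float(v) for v in fields[5:]]
    # Group the flat keypoint values into chunks of `ndim`.
    keypoints = [tuple(values[i:i + ndim]) for i in range(0, len(values), ndim)]
    return PoseLabel(cls, box, keypoints)


# Made-up sample line with two keypoints instead of 17.
label = parse_pose_label("0 0.5 0.5 0.2 0.4 0.45 0.3 2 0.55 0.3 1", num_kpts=2)
print(label.cls, label.box, label.keypoints)
```

Running a check like this over every label file before training is a cheap way to catch lines whose length does not match `kpt_shape`.
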
The dataset YAML file used for training:

coco8-pose.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license

# COCO8-pose dataset (first 8 images from COCO train2017) by Ultralytics

# Documentation: https://docs.ultralytics.com/datasets/pose/coco8-pose/

# Example usage: yolo train data=coco8-pose.yaml

# parent

# ├── ultralytics

# └── datasets

#     └── coco8-pose ← downloads here (1 MB)

# Train/val/test sets as 1) dir: path/to/imgs, 2) file: path/to/imgs.txt, or 3) list: [path/to/imgs1, path/to/imgs2, ..]

path: ../datasets/coco8-pose # dataset root dir

train: images/train # train images (relative to 'path') 4 images

val: images/val # val images (relative to 'path') 4 images

test: # test images (optional)

# Keypoints

kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

flip_idx: [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]

# Classes

names:

  0: person

# Download script/URL (optional)

download: https://github.com/ultralytics/assets/releases/download/v0.0.0/coco8-pose.zip
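
The `flip_idx` entry in the YAML above is easy to get wrong for custom keypoint sets, so here is a small sketch of what it is for. When an image is mirrored left to right, each keypoint's x coordinate is mirrored, and the left/right slots must also be swapped so that "left eye" still means the person's left eye. The function name `flip_keypoints_lr` is hypothetical, coordinates are assumed to be normalized to [0, 1], and the name list is the standard COCO 17-keypoint order.

```python
from typing import List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, visibility)

# Standard COCO keypoint order, matching kpt_shape: [17, 3].
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]
FLIP_IDX = [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]


def flip_keypoints_lr(kpts: List[Keypoint], flip_idx: List[int]) -> List[Keypoint]:
    """Mirror normalized keypoints horizontally, then swap left/right slots."""
    mirrored = [(1.0 - x, y, v) for x, y, v in kpts]
    # Slot j of the output takes the mirrored keypoint from slot flip_idx[j].
    return [mirrored[i] for i in flip_idx]


# Made-up pose: only the two eyes carry meaningful values.
kpts: List[Keypoint] = [(0.5, 0.5, 0.0)] * 17
kpts[1] = (0.75, 0.2, 2.0)  # left_eye
kpts[2] = (0.5, 0.2, 2.0)   # right_eye
flipped = flip_keypoints_lr(kpts, FLIP_IDX)
print(COCO_KEYPOINTS[1], flipped[1])
print(COCO_KEYPOINTS[2], flipped[2])
```

A valid `flip_idx` maps every index back to itself when applied twice, which is a quick sanity check for a hand-written list.
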

2. Training code

from ultralytics import YOLO

# # Load a model

# model = YOLO("yolov8n-pose.yaml") # build a new model from YAML

# model = YOLO("yolov8n-pose.pt") # load a pretrained model (recommended for training)

model = YOLO("yolov8-pose.yaml").load("yolov8n-pose.pt") # build from YAML and transfer weights

# Train the model

results = model.train(data="coco8-pose.yaml", epochs=100, imgsz=640)

3. Network definition

yolov8-pose.yaml

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8-pose keypoints/pose estimation model. For Usage examples see https://docs.ultralytics.com/tasks/pose

# Parameters

nc: 1 # number of classes

kpt_shape: [17, 3] # number of keypoints, number of dims (2 for x,y or 3 for x,y,visible)

scales: # model compound scaling constants, i.e. 'model=yolov8n-pose.yaml' will call yolov8-pose.yaml with scale 'n'

  # [depth, width, max_channels]

  n: [0.33, 0.25, 1024]

  s: [0.33, 0.50, 1024]

  m: [0.67, 0.75, 768]

  l: [1.00, 1.00, 512]

  x: [1.00, 1.25, 512]

# YOLOv8.0n backbone

backbone:

# [from, repeats, module, args]

- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2

- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4

- [-1, 3, C2f, [128, True]]

- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8

- [-1, 6, C2f, [256, True]]

- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16

- [-1, 6, C2f, [512, True]]

- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32

- [-1, 3, C2f, [1024, True]]

- [-1, 1, SPPF, [1024, 5]] # 9

# YOLOv8.0n head

head:

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 6], 1, Concat, [1]] # cat backbone P4

- [-1, 3, C2f, [512]] # 12

- [-1, 1, nn.Upsample, [None, 2, "nearest"]]

- [[-1, 4], 1, Concat, [1]] # cat backbone P3

- [-1, 3, C2f, [256]] # 15 (P3/8-small)

- [-1, 1, Conv, [256, 3, 2]]

- [[-1, 12], 1, Concat, [1]] # cat head P4

- [-1, 3, C2f, [512]] # 18 (P4/16-medium)

- [-1, 1, Conv, [512, 3, 2]]

- [[-1, 9], 1, Concat, [1]] # cat head P5

- [-1, 3, C2f, [1024]] # 21 (P5/32-large)

- [[15, 18, 21], 1, Pose, [nc, kpt_shape]] # Pose(P3, P4, P5)
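
The `scales` table in this file turns the nominal channel counts and repeat counts in the layer lists into the real ones for each model size. The sketch below reproduces that arithmetic as I understand it from the Ultralytics model parser: channels are capped at `max_channels`, multiplied by `width`, and rounded up to a multiple of 8, and repeats greater than 1 are multiplied by `depth` and rounded. The function names are hypothetical and the exact rule may differ between library versions, so treat the numbers as an illustration.

```python
import math
from typing import Dict, Tuple

# [depth, width, max_channels], copied from the scales table in yolov8-pose.yaml.
SCALES: Dict[str, Tuple[float, float, int]] = {
    "n": (0.33, 0.25, 1024),
    "s": (0.33, 0.50, 1024),
    "m": (0.67, 0.75, 768),
    "l": (1.00, 1.00, 512),
    "x": (1.00, 1.25, 512),
}


def make_divisible(x: float, divisor: int = 8) -> int:
    """Round x up to the nearest multiple of divisor."""
    return math.ceil(x / divisor) * divisor


def scaled_channels(channels: int, scale: str) -> int:
    """Output channels of a layer after width scaling (assumed rule)."""
    _, width, max_channels = SCALES[scale]
    return make_divisible(min(channels, max_channels) * width)


def scaled_repeats(repeats: int, scale: str) -> int:
    """Number of block repeats after depth scaling (assumed rule)."""
    depth = SCALES[scale][0]
    return max(round(repeats * depth), 1) if repeats > 1 else repeats


for scale in SCALES:
    print(scale, scaled_channels(1024, scale), scaled_repeats(6, scale))
```

Under these assumptions the last backbone stage of the `n` model has 256 channels, while the `l` model is capped at 512 by `max_channels`.
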

4. Training results

Also attached for reference:

Prediction code

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-pose.pt") # load an official model

# model = YOLO("path/to/best.pt") # load a custom model

# Predict with the model

results = model("https://ultralytics.com/images/bus.jpg") # predict on an image

Validation code

from ultralytics import YOLO

# Load a model

model = YOLO("yolov8n-pose.pt") # load an official model

model = YOLO("path/to/best.pt") # load a custom model (replaces the official model loaded above; point this at your own weights)

# Validate the model

metrics = model.val() # no arguments needed, dataset and settings remembered

metrics.box.map # map50-95

metrics.box.map50 # map50

metrics.box.map75 # map75

metrics.box.maps # a list contains map50-95 of each category
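
Going back to the prediction code earlier in this section: the raw output is a list of 17 keypoints per person, which is hard to read without names. Below is a minimal sketch that attaches the COCO keypoint names and drops low-confidence points. The helper `visible_keypoints` and the 0.5 threshold are my own choices for illustration, and the sample values are made up. It works on plain Python lists of (x, y, confidence) rows.

```python
from typing import Dict, List, Tuple

# Standard COCO keypoint order, matching kpt_shape: [17, 3].
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


def visible_keypoints(
    person: List[Tuple[float, float, float]], conf_thres: float = 0.5
) -> Dict[str, Tuple[float, float]]:
    """Map keypoint names to (x, y) for points at or above the threshold."""
    if len(person) != len(COCO_KEYPOINTS):
        raise ValueError(f"expected {len(COCO_KEYPOINTS)} keypoints, got {len(person)}")
    return {
        name: (x, y)
        for name, (x, y, conf) in zip(COCO_KEYPOINTS, person)
        if conf >= conf_thres
    }


# Made-up values for one detected person, in pixels.
person = [(0.0, 0.0, 0.0)] * 17
person[0] = (410.0, 120.0, 0.98)  # nose
person[5] = (380.0, 200.0, 0.91)  # left_shoulder
person[9] = (350.0, 310.0, 0.20)  # left_wrist, below the threshold
named = visible_keypoints(person)
print(named)
```

With Ultralytics, the rows for person `i` can probably be obtained with something like `results[0].keypoints.data[i].tolist()`; I have not pinned this to a specific library version, so check the attribute names against the version you have installed.
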
