目标

在本实验中,你将:

- 用正则化项扩展前面的线性和逻辑代价函数。

- 重新运行前面添加正则化项的过拟合示例。

import numpy as np

%matplotlib widget

import matplotlib.pyplot as plt

from plt_overfit import overfit_example, output

from lab_utils_common import sigmoid

np.set_printoptions(precision=8)

添加正则化

上面的幻灯片显示了线性回归和逻辑回归的成本和梯度函数。注意:

上面的幻灯片显示了线性回归和逻辑回归的成本和梯度函数。注意:

- 开销

- 线性回归和逻辑回归的成本函数有很大不同,但对方程进行正则化是相同的。

- 梯度

- 线性回归和逻辑回归的梯度函数非常相似。它们只是在执行 f w b f_{wb} fwb方面有所不同

正则化代价函数

正则化线性回归的代价函数

代价函数正则化线性回归方程为:

J

(

w

,

b

)

=

1

2

m

∑

i

=

0

m

−

1

(

f

w

,

b

(

x

(

i

)

)

−

y

(

i

)

)

2

+

λ

2

m

∑

j

=

0

n

−

1

w

j

2

(1)

J(\mathbf{w},b) = \frac{1}{2m} \sum\limits_{i = 0}^{m-1} (f_{\mathbf{w},b}(\mathbf{x}^{(i)}) - y^{(i)})^2 + \frac{\lambda}{2m} \sum_{j=0}^{n-1} w_j^2 \tag{1}

J(w,b)=2m1i=0∑m−1(fw,b(x(i))−y(i))2+2mλj=0∑n−1wj2(1)

where:

f

w

,

b

(

x

(

i

)

)

=

w

⋅

x

(

i

)

+

b

(2)

f_{\mathbf{w},b}(\mathbf{x}^{(i)}) = \mathbf{w} \cdot \mathbf{x}^{(i)} + b \tag{2}

fw,b(x(i))=w⋅x(i)+b(2)

将此与没有正则化的成本函数(您在之前的实验室中实现)进行比较,其形式为:

J

(

w

,

b

)

=

1

2

m

∑

i

=

0

m

−

1

(

f

w

,

b

(

x

(

i

)

)

−

y

(

i

)

)

2

J(\mathbf{w},b) = \frac{1}{2m} \sum\limits_{i = 0}^{m-1} (f_{\mathbf{w},b}(\mathbf{x}^{(i)}) - y^{(i)})^2

J(w,b)=2m1i=0∑m−1(fw,b(x(i))−y(i))2

区别在于正则化项

λ

2

m

∑

j

=

0

n

−

1

w

j

2

\frac{\lambda}{2m} \sum_{j=0}^{n-1} w_j^2

2mλ∑j=0n−1wj2

包括这一项激励梯度下降以最小化参数的大小。注意,在这个例子中,参数

b

b

b没有被正则化。这是标准做法。

下面是等式(1)和(2)的实现。请注意,这使用了本课程的标准模式,在所有’ m ‘示例中使用’ for循环’。

def compute_cost_linear_reg(X, y, w, b, lambda_ = 1):

"""

Computes the cost over all examples

Args:

X (ndarray (m,n): Data, m examples with n features

y (ndarray (m,)): target values

w (ndarray (n,)): model parameters

b (scalar) : model parameter

lambda_ (scalar): Controls amount of regularization

Returns:

total_cost (scalar): cost

"""

m = X.shape[0]

n = len(w)

cost = 0.

for i in range(m):

f_wb_i = np.dot(X[i], w) + b #(n,)(n,)=scalar, see np.dot

cost = cost + (f_wb_i - y[i])**2 #scalar

cost = cost / (2 * m) #scalar

reg_cost = 0

for j in range(n):

reg_cost += (w[j]**2) #scalar

reg_cost = (lambda_/(2*m)) * reg_cost #scalar

total_cost = cost + reg_cost #scalar

return total_cost #scalar

运行下面的单元格,看看它是如何工作的。

np.random.seed(1)

X_tmp = np.random.rand(5,6)

y_tmp = np.array([0,1,0,1,0])

w_tmp = np.random.rand(X_tmp.shape[1]).reshape(-1,)-0.5

b_tmp = 0.5

lambda_tmp = 0.7

cost_tmp = compute_cost_linear_reg(X_tmp, y_tmp, w_tmp, b_tmp, lambda_tmp)

print("Regularized cost:", cost_tmp)

预想输出

Regularized cost: 0.07917239320214275

正则化逻辑回归的代价函数

对于正则化逻辑回归,成本函数为

J

(

w

,

b

)

=

1

m

∑

i

=

0

m

−

1

[

−

y

(

i

)

log

(

f

w

,

b

(

x

(

i

)

)

)

−

(

1

−

y

(

i

)

)

log

(

1

−

f

w

,

b

(

x

(

i

)

)

)

]

+

λ

2

m

∑

j

=

0

n

−

1

w

j

2

(3)

J(\mathbf{w},b) = \frac{1}{m} \sum_{i=0}^{m-1} \left[ -y^{(i)} \log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) - \left( 1 - y^{(i)}\right) \log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) \right] + \frac{\lambda}{2m} \sum_{j=0}^{n-1} w_j^2 \tag{3}

J(w,b)=m1i=0∑m−1[−y(i)log(fw,b(x(i)))−(1−y(i))log(1−fw,b(x(i)))]+2mλj=0∑n−1wj2(3)

where:

f

w

,

b

(

x

(

i

)

)

=

s

i

g

m

o

i

d

(

w

⋅

x

(

i

)

+

b

)

(4)

f_{\mathbf{w},b}(\mathbf{x}^{(i)}) = sigmoid(\mathbf{w} \cdot \mathbf{x}^{(i)} + b) \tag{4}

fw,b(x(i))=sigmoid(w⋅x(i)+b)(4)

将此与没有正则化的成本函数(在之前的实验室中实现)进行比较:

J

(

w

,

b

)

=

1

m

∑

i

=

0

m

−

1

[

(

−

y

(

i

)

log

(

f

w

,

b

(

x

(

i

)

)

)

−

(

1

−

y

(

i

)

)

log

(

1

−

f

w

,

b

(

x

(

i

)

)

)

]

J(\mathbf{w},b) = \frac{1}{m}\sum_{i=0}^{m-1} \left[ (-y^{(i)} \log\left(f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right) - \left( 1 - y^{(i)}\right) \log \left( 1 - f_{\mathbf{w},b}\left( \mathbf{x}^{(i)} \right) \right)\right]

J(w,b)=m1i=0∑m−1[(−y(i)log(fw,b(x(i)))−(1−y(i))log(1−fw,b(x(i)))]

和上面的线性回归一样,区别在于正则化项,

λ

2

m

∑

j

=

0

n

−

1

w

j

2

\frac{\lambda}{2m} \sum_{j=0}^{n-1} w_j^2

2mλ∑j=0n−1wj2

包括这一项激励梯度下降以最小化参数的大小。注意,在这个例子中,参数

b

b

b没有被正则化。这是标准做法。

def compute_cost_logistic_reg(X, y, w, b, lambda_ = 1):

"""

Computes the cost over all examples

Args:

Args:

X (ndarray (m,n): Data, m examples with n features

y (ndarray (m,)): target values

w (ndarray (n,)): model parameters

b (scalar) : model parameter

lambda_ (scalar): Controls amount of regularization

Returns:

total_cost (scalar): cost

"""

m,n = X.shape

cost = 0.

for i in range(m):

z_i = np.dot(X[i], w) + b #(n,)(n,)=scalar, see np.dot

f_wb_i = sigmoid(z_i) #scalar

cost += -y[i]*np.log(f_wb_i) - (1-y[i])*np.log(1-f_wb_i) #scalar

cost = cost/m #scalar

reg_cost = 0

for j in range(n):

reg_cost += (w[j]**2) #scalar

reg_cost = (lambda_/(2*m)) * reg_cost #scalar

total_cost = cost + reg_cost #scalar

return total_cost #scalar

运行下面的单元格,看看它是如何工作的。

np.random.seed(1)

X_tmp = np.random.rand(5,6)

y_tmp = np.array([0,1,0,1,0])

w_tmp = np.random.rand(X_tmp.shape[1]).reshape(-1,)-0.5

b_tmp = 0.5

lambda_tmp = 0.7

cost_tmp = compute_cost_logistic_reg(X_tmp, y_tmp, w_tmp, b_tmp, lambda_tmp)

print("Regularized cost:", cost_tmp)

期待输出

Regularized cost: 0.6850849138741673

正则化梯度下降

运行梯度下降的基本算法不随正则化而改变,为:

repeat until convergence:

{

w

j

=

w

j

−

α

∂

J

(

w

,

b

)

∂

w

j

for j := 0..n-1

b

=

b

−

α

∂

J

(

w

,

b

)

∂

b

}

\begin{align*} &\text{repeat until convergence:} \; \lbrace \\ & \; \; \;w_j = w_j - \alpha \frac{\partial J(\mathbf{w},b)}{\partial w_j} \tag{1} \; & \text{for j := 0..n-1} \\ & \; \; \; \; \;b = b - \alpha \frac{\partial J(\mathbf{w},b)}{\partial b} \\ &\rbrace \end{align*}

repeat until convergence:{wj=wj−α∂wj∂J(w,b)b=b−α∂b∂J(w,b)}for j := 0..n-1(1)

每次迭代对所有

j

j

j同时执行

w

j

w_j

wj的更新

正则化改变的是计算梯度。

用正则化计算梯度(线性/逻辑)

线性回归和逻辑回归的梯度计算几乎相同,不同之处在于

f

w

b

f_{\mathbf{w}b}

fwb的计算。

∂

J

(

w

,

b

)

∂

w

j

=

1

m

∑

i

=

0

m

−

1

(

f

w

,

b

(

x

(

i

)

)

−

y

(

i

)

)

x

j

(

i

)

+

λ

m

w

j

∂

J

(

w

,

b

)

∂

b

=

1

m

∑

i

=

0

m

−

1

(

f

w

,

b

(

x

(

i

)

)

−

y

(

i

)

)

\begin{align*} \frac{\partial J(\mathbf{w},b)}{\partial w_j} &= \frac{1}{m} \sum\limits_{i = 0}^{m-1} (f_{\mathbf{w},b}(\mathbf{x}^{(i)}) - y^{(i)})x_{j}^{(i)} + \frac{\lambda}{m} w_j \tag{2} \\ \frac{\partial J(\mathbf{w},b)}{\partial b} &= \frac{1}{m} \sum\limits_{i = 0}^{m-1} (f_{\mathbf{w},b}(\mathbf{x}^{(i)}) - y^{(i)}) \tag{3} \end{align*}

∂wj∂J(w,b)∂b∂J(w,b)=m1i=0∑m−1(fw,b(x(i))−y(i))xj(i)+mλwj=m1i=0∑m−1(fw,b(x(i))−y(i))(2)(3)

- M是数据集中训练样例的个数

- f w , b ( x ( i ) ) f_{\mathbf{w},b}(x^{(i)}) fw,b(x(i)) is the model’s prediction, while y ( i ) y^{(i)} y(i)

- For a linear regression model

f w , b ( x ) = w ⋅ x + b f_{\mathbf{w},b}(x) = \mathbf{w} \cdot \mathbf{x} + b fw,b(x)=w⋅x+b - For a logistic regression model

z = w ⋅ x + b z = \mathbf{w} \cdot \mathbf{x} + b z=w⋅x+b

f w , b ( x ) = g ( z ) f_{\mathbf{w},b}(x) = g(z) fw,b(x)=g(z)

where g ( z ) g(z) g(z) is the sigmoid function:

g ( z ) = 1 1 + e − z g(z) = \frac{1}{1+e^{-z}} g(z)=1+e−z1

加上正则化的项是$\frac{\lambda}{m} w_j $

正则化线性回归的梯度函数

def compute_gradient_linear_reg(X, y, w, b, lambda_):

"""

Computes the gradient for linear regression

Args:

X (ndarray (m,n): Data, m examples with n features

y (ndarray (m,)): target values

w (ndarray (n,)): model parameters

b (scalar) : model parameter

lambda_ (scalar): Controls amount of regularization

Returns:

dj_dw (ndarray (n,)): The gradient of the cost w.r.t. the parameters w.

dj_db (scalar): The gradient of the cost w.r.t. the parameter b.

"""

m,n = X.shape #(number of examples, number of features)

dj_dw = np.zeros((n,))

dj_db = 0.

for i in range(m):

err = (np.dot(X[i], w) + b) - y[i]

for j in range(n):

dj_dw[j] = dj_dw[j] + err * X[i, j]

dj_db = dj_db + err

dj_dw = dj_dw / m

dj_db = dj_db / m

for j in range(n):

dj_dw[j] = dj_dw[j] + (lambda_/m) * w[j]

return dj_db, dj_dw

运行下面的单元格,看看它是如何工作的。

np.random.seed(1)

X_tmp = np.random.rand(5,3)

y_tmp = np.array([0,1,0,1,0])

w_tmp = np.random.rand(X_tmp.shape[1])

b_tmp = 0.5

lambda_tmp = 0.7

dj_db_tmp, dj_dw_tmp = compute_gradient_linear_reg(X_tmp, y_tmp, w_tmp, b_tmp, lambda_tmp)

print(f"dj_db: {dj_db_tmp}", )

print(f"Regularized dj_dw:\n {dj_dw_tmp.tolist()}", )

期望输出

dj_db: 0.6648774569425726

Regularized dj_dw:

[0.29653214748822276, 0.4911679625918033, 0.21645877535865857]

正则化逻辑回归的梯度函数

def compute_gradient_logistic_reg(X, y, w, b, lambda_):

"""

Computes the gradient for linear regression

Args:

X (ndarray (m,n): Data, m examples with n features

y (ndarray (m,)): target values

w (ndarray (n,)): model parameters

b (scalar) : model parameter

lambda_ (scalar): Controls amount of regularization

Returns

dj_dw (ndarray Shape (n,)): The gradient of the cost w.r.t. the parameters w.

dj_db (scalar) : The gradient of the cost w.r.t. the parameter b.

"""

m,n = X.shape

dj_dw = np.zeros((n,)) #(n,)

dj_db = 0.0 #scalar

for i in range(m):

f_wb_i = sigmoid(np.dot(X[i],w) + b) #(n,)(n,)=scalar

err_i = f_wb_i - y[i] #scalar

for j in range(n):

dj_dw[j] = dj_dw[j] + err_i * X[i,j] #scalar

dj_db = dj_db + err_i

dj_dw = dj_dw/m #(n,)

dj_db = dj_db/m #scalar

for j in range(n):

dj_dw[j] = dj_dw[j] + (lambda_/m) * w[j]

return dj_db, dj_dw

运行下面的单元格,看看它是如何工作的。

np.random.seed(1)

X_tmp = np.random.rand(5,3)

y_tmp = np.array([0,1,0,1,0])

w_tmp = np.random.rand(X_tmp.shape[1])

b_tmp = 0.5

lambda_tmp = 0.7

dj_db_tmp, dj_dw_tmp = compute_gradient_logistic_reg(X_tmp, y_tmp, w_tmp, b_tmp, lambda_tmp)

print(f"dj_db: {dj_db_tmp}", )

print(f"Regularized dj_dw:\n {dj_dw_tmp.tolist()}", )

期待输出

dj_db: 0.341798994972791

Regularized dj_dw:

[0.17380012933994293, 0.32007507881566943, 0.10776313396851499]

重新运行过拟合示例

plt.close("all")

display(output)

ofit = overfit_example(True)

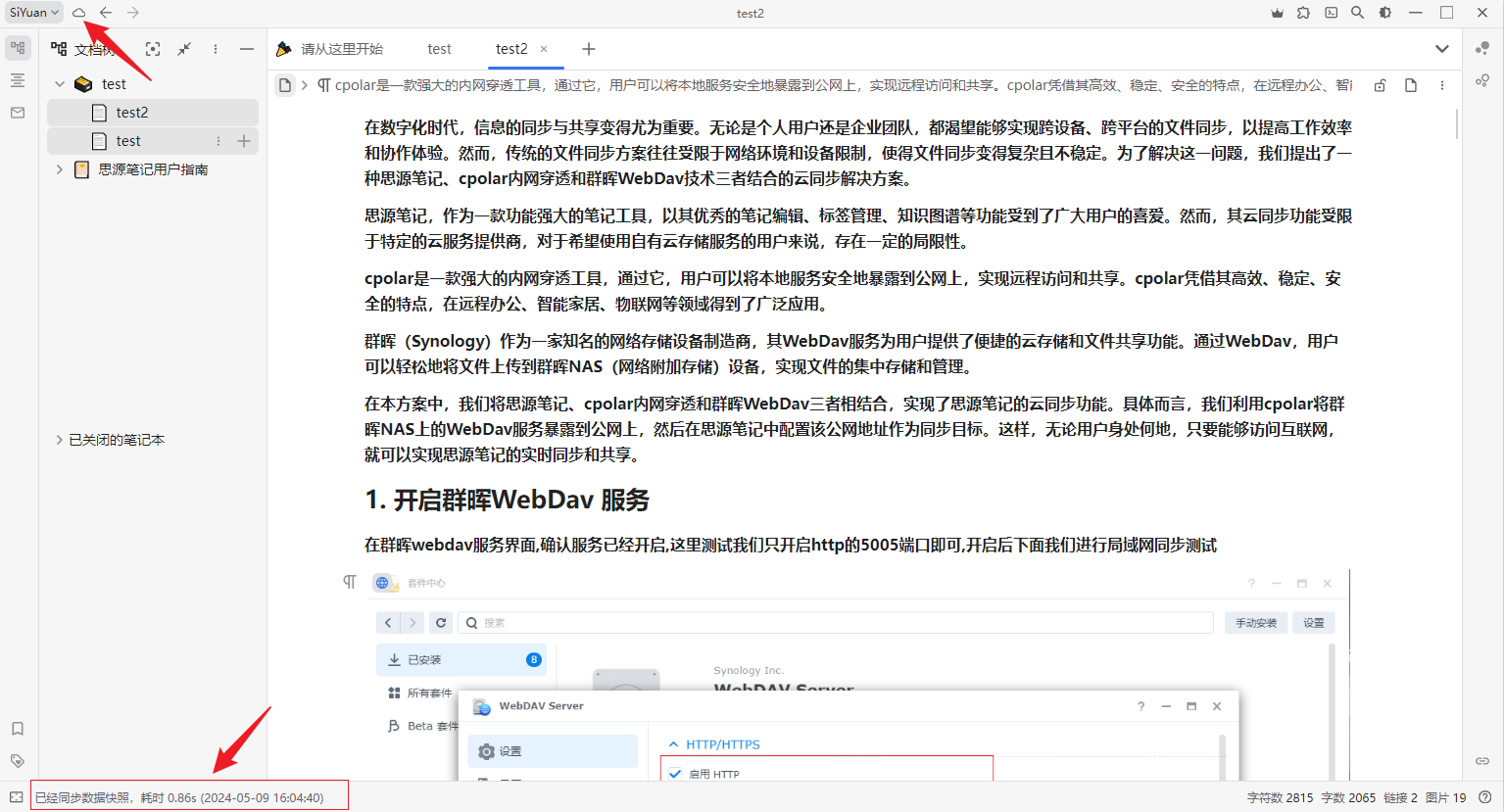

在上面的图表中,在前面的例子中尝试正则化。特别是:

- 分类(逻辑回归)

- 设置度为6,lambda为0(不正则化),拟合数据

- 现在将lambda设置为1(增加正则化),拟合数据,注意差异。

- 回归(线性回归)

- 尝试同样的步骤。

祝贺

你有:

- 成本和梯度例程的例子与回归添加了线性和逻辑回归

- 对正则化如何减少过度拟合产生了一些直觉