OpenCV: DNN and CUDA Modules, Contents

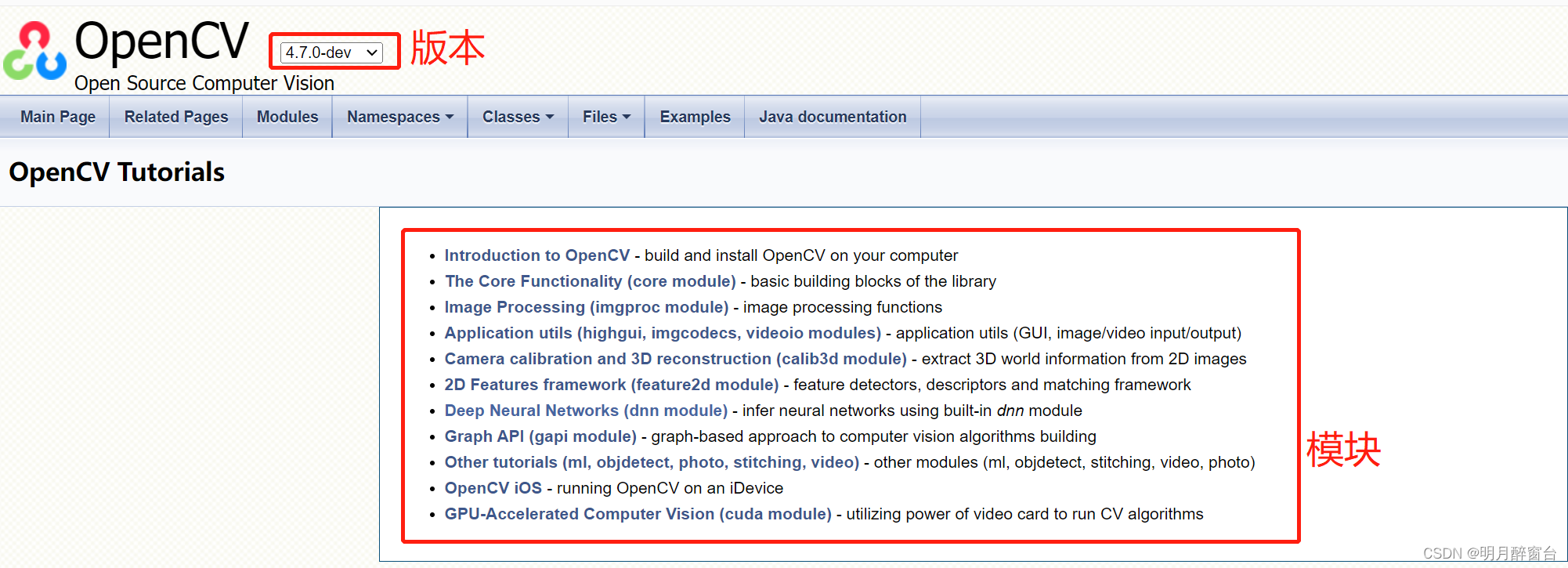

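- Official OpenCV documentation (API reference and usage examples for each module)

- OpenCV repositories on GitHub: https://github.com/opencv

- Additional OpenCV test data (download): https://github.com/opencv/opencv_extra

The test data can be pulled with git: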

git clone https://github.com/opencv/opencv_extra.git

- Open-source OpenCV + contrib (455): https://gitcode.net/openmodel/opencv

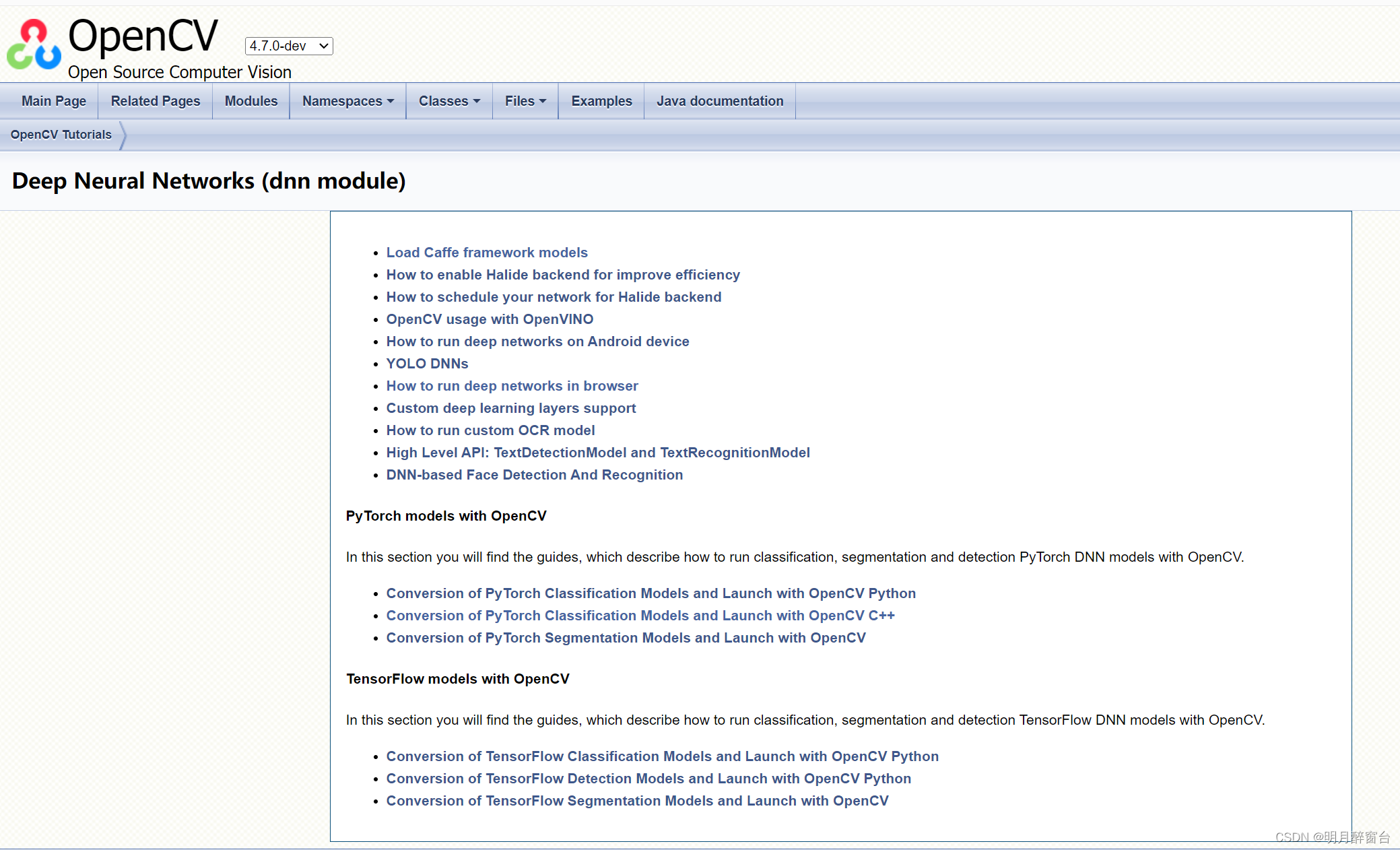

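1. DNN module

- The DNN module in OpenCV provides APIs for interfacing with deep learning frameworks, along with C++ reimplementations of deep learning models (object detection, segmentation).

- Official introduction: https://docs.opencv.org/4.x/d2/d58/tutorial_table_of_content_dnn.html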

#ifndef OPENCV_DNN_HPP

#define OPENCV_DNN_HPP

// This is an umbrella header to include into you project.

// We are free to change headers layout in dnn subfolder, so please include

// this header for future compatibility

/** @defgroup dnn Deep Neural Network module

@{

This module contains:

- API for new layers creation, layers are building bricks of neural networks;

- set of built-in most-useful Layers;

- API to construct and modify comprehensive neural networks from layers;

- functionality for loading serialized networks models from different frameworks.

Functionality of this module is designed only for forward pass computations (i.e. network testing).

A network training is in principle not supported.

@}

*/

/** @example samples/dnn/classification.cpp

Check @ref tutorial_dnn_googlenet "the corresponding tutorial" for more details

*/

/** @example samples/dnn/colorization.cpp

*/

/** @example samples/dnn/object_detection.cpp

Check @ref tutorial_dnn_yolo "the corresponding tutorial" for more details

*/

/** @example samples/dnn/openpose.cpp

*/

/** @example samples/dnn/segmentation.cpp

*/

/** @example samples/dnn/text_detection.cpp

*/

#include <opencv2/dnn/dnn.hpp>

#endif /* OPENCV_DNN_HPP */

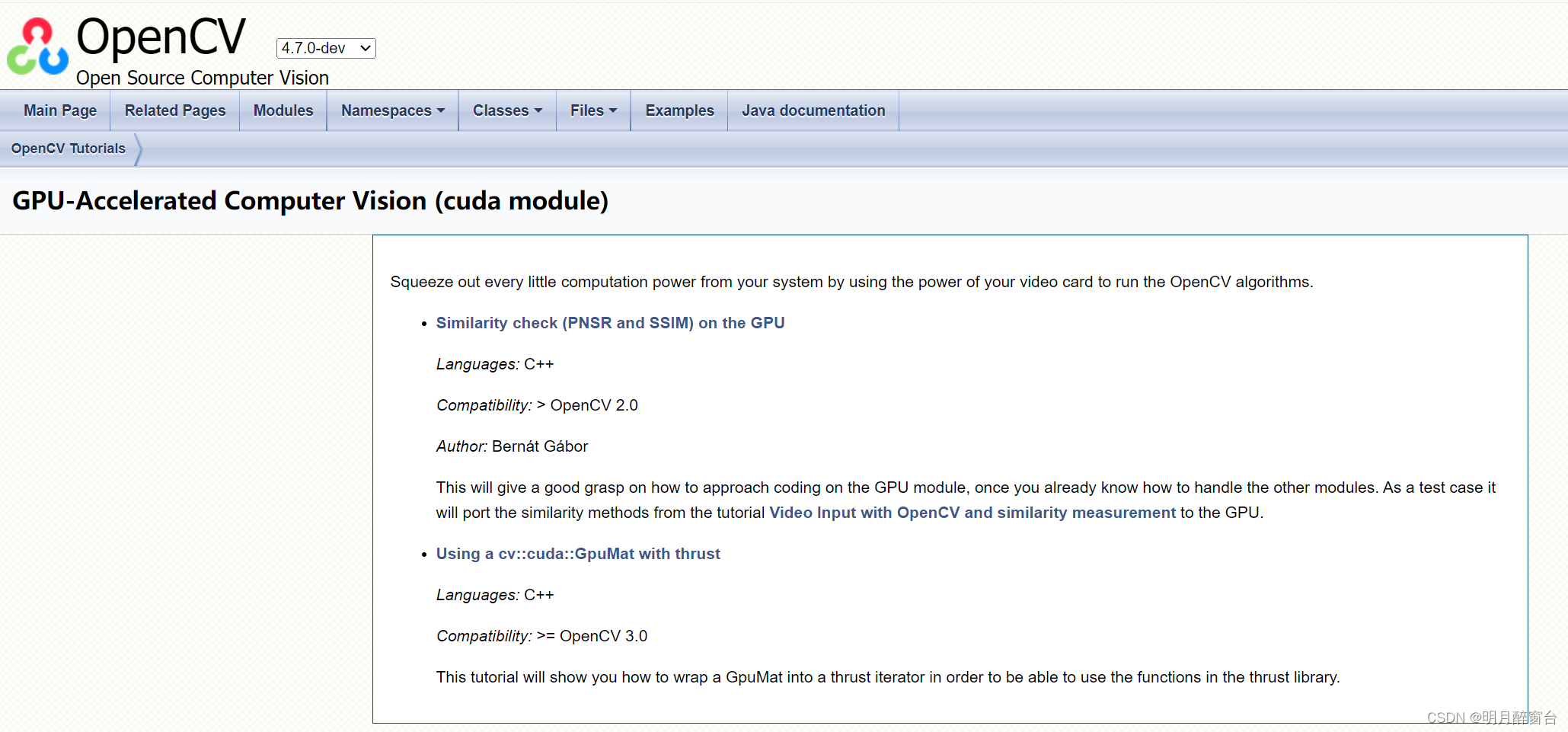

2. CUDA module

- The CUDA module is OpenCV's GPU module, used to accelerate vision algorithms.

- Official introduction: https://docs.opencv.org/4.x/da/d2c/tutorial_table_of_content_gpu.html

The CUDA-related options for DNN are declared in .\opencv-contrib\include\opencv2\dnn\dnn.hpp, which includes:

/**

* @brief Enum of computation backends supported by layers.

* @see Net::setPreferableBackend

*/

enum Backend

{

//! DNN_BACKEND_DEFAULT equals to DNN_BACKEND_INFERENCE_ENGINE if

//! OpenCV is built with Intel's Inference Engine library or

//! DNN_BACKEND_OPENCV otherwise.

DNN_BACKEND_DEFAULT = 0,

DNN_BACKEND_HALIDE,

DNN_BACKEND_INFERENCE_ENGINE, //!< Intel's Inference Engine computational backend

//!< @sa setInferenceEngineBackendType

DNN_BACKEND_OPENCV,

DNN_BACKEND_VKCOM,

DNN_BACKEND_CUDA,

DNN_BACKEND_WEBNN,

#ifdef __OPENCV_BUILD

DNN_BACKEND_INFERENCE_ENGINE_NGRAPH = 1000000, // internal - use DNN_BACKEND_INFERENCE_ENGINE + setInferenceEngineBackendType()

DNN_BACKEND_INFERENCE_ENGINE_NN_BUILDER_2019, // internal - use DNN_BACKEND_INFERENCE_ENGINE + setInferenceEngineBackendType()

#endif

};

/**

* @brief Enum of target devices for computations.

* @see Net::setPreferableTarget

*/

enum Target

{

DNN_TARGET_CPU = 0,

DNN_TARGET_OPENCL,

DNN_TARGET_OPENCL_FP16,

DNN_TARGET_MYRIAD,

DNN_TARGET_VULKAN,

DNN_TARGET_FPGA, //!< FPGA device with CPU fallbacks using Inference Engine's Heterogeneous plugin.

DNN_TARGET_CUDA,

DNN_TARGET_CUDA_FP16,

DNN_TARGET_HDDL

};