目录

0、创建hive数据

1、pom.xml

2、flink代码

3、sink

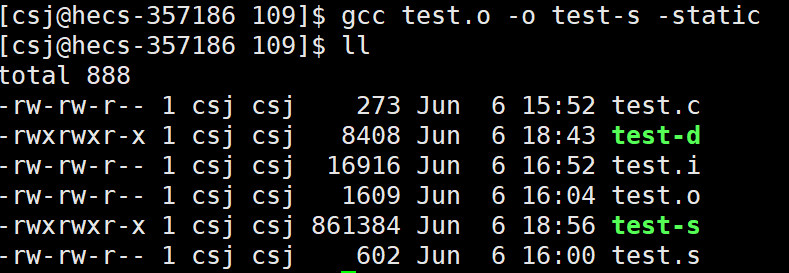

4、提交任务jar

5、flink-conf.yaml

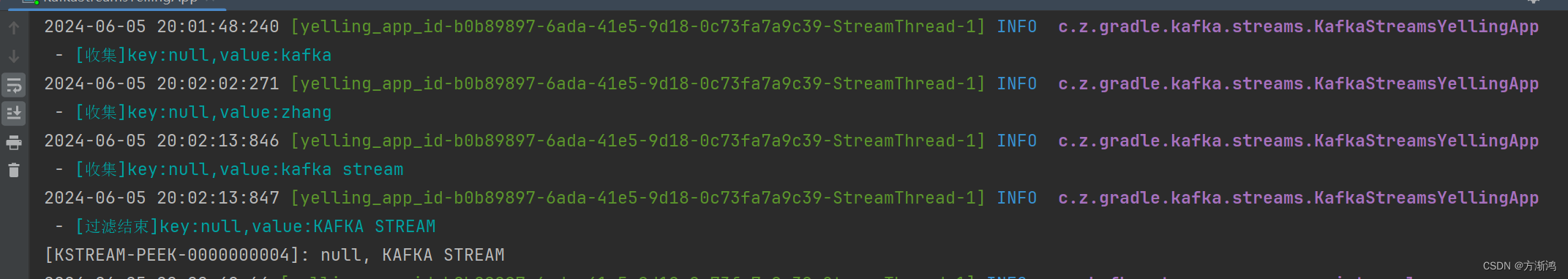

6、数据接收

| flink-1.17.2 | jdk1.8 |
| --- | --- |
| hive-3.1.3 | hadoop3.3.6 |
| password | http |

0. Create Hive data

/cluster/hive/bin/beeline

!connect jdbc:hive2://ip:10000

CREATE DATABASE IF NOT EXISTS demo;

DROP TABLE IF EXISTS demo.example;

CREATE TABLE demo.example(
  `id` STRING COMMENT 'primary key',
  `name` STRING COMMENT 'name',
  `ip` STRING COMMENT 'IP address',
  `time` STRING COMMENT 'creation time',
  `info` STRING COMMENT 'info',
  `msg` STRING COMMENT 'message',
  `oper` STRING COMMENT 'operation',
  `count` DECIMAL(16,2) COMMENT 'count',
  `status` DECIMAL(16,2) COMMENT 'status'
) COMMENT 'example'
ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

Insert data with INSERT INTO.
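The insert step is left as a placeholder here. As a minimal sketch, assuming made-up sample values, the Java helper below renders a multi-row INSERT INTO ... VALUES statement in the column order of demo.example. The class name ExampleInsertBuilder and its methods are hypothetical and not part of the original project; the backslash escaping in quote is an assumption about how HiveQL string literals are written.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not from the original project): builds an INSERT
// statement for the demo.example table created above.
class ExampleInsertBuilder {

    // Wrap a value in single quotes, escaping backslashes and single quotes
    // (assumed HiveQL string-literal escaping).
    static String quote(String value) {
        return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'";
    }

    // Render one row in table column order:
    // id, name, ip, time, info, msg, oper (STRING), then count, status (DECIMAL(16,2)).
    static String row(String[] strings, BigDecimal count, BigDecimal status) {
        if (strings.length != 7) {
            throw new IllegalArgumentException("expected 7 STRING columns, got " + strings.length);
        }
        List<String> cells = new ArrayList<>();
        for (String s : strings) {
            cells.add(quote(s));
        }
        // Two decimal places to match DECIMAL(16,2).
        cells.add(count.setScale(2, RoundingMode.HALF_UP).toPlainString());
        cells.add(status.setScale(2, RoundingMode.HALF_UP).toPlainString());
        return "(" + String.join(", ", cells) + ")";
    }

    // Join already-rendered rows into a single multi-row INSERT statement.
    static String insert(List<String> rows) {
        return "INSERT INTO demo.example VALUES\n  " + String.join(",\n  ", rows) + ";";
    }

    public static void main(String[] args) {
        // Sample values are invented purely for illustration.
        List<String> rows = Arrays.asList(
            row(new String[] {"1", "alice", "192.168.0.1", "2024-01-01 00:00:00",
                              "info-1", "msg-1", "insert"},
                new BigDecimal("1"), new BigDecimal("0")),
            row(new String[] {"2", "bob", "192.168.0.2", "2024-01-02 00:00:00",
                              "info-2", "msg-2", "update"},
                new BigDecimal("3"), new BigDecimal("1")));
        System.out.println(insert(rows));
    }
}
```

Running main prints a statement that can be pasted into the beeline session opened earlier. No column list is given, so the values must stay in the same order as the CREATE TABLE definition.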

1. pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.example.cloud</groupId>
    <artifactId>Hive3Flink2HttpStream</artifactId>
    <version>1.0.0</version>
    <name>Hive3Flink2HttpStream</name>
    <properties>
        <java.version>1.8</java.version>
        <flink.version>1.17.2</flink.version>
        <hive.version>3.1.2</hive.version>
        <scala.binary.version>2.12</scala.binary.version>
        <hadoop.version>3.3.6</hadoop.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-statebackend-rocksdb</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>${hadoop.version}</version>
            <exclusions>
                <exclusion>
                    <artifactId>jaxb-api</artifactId>
                    <groupId>javax.xml.bind</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>reload4j</artifactId>
                    <groupId>ch.qos.reload4j</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>slf4j-api</artifactId>
                    <groupId>org.slf4j</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>nimbus-jose-jwt</artifactId>
                    <groupId>com.nimbusds</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-transport-native-unix-common</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>snappy-java</artifactId>
                    <groupId>org.xerial.snappy</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>stax2-api</artifactId>
                    <groupId>org.codehaus.woodstox</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-compress</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-io</artifactId>
                    <groupId>commons-io</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-logging</artifactId>
                    <groupId>commons-logging</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-text</artifactId>
                    <groupId>org.apache.commons</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-annotations</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-core</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>curator-framework</artifactId>
                    <groupId>org.apache.curator</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-buffer</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-common</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-codec</artifactId>
                    <groupId>commons-codec</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-resolver</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-transport</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-codec</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <exclusions>
                <exclusion>
                    <artifactId>jackson-annotations</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-core</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>jackson-databind</artifactId>
                    <groupId>com.fasterxml.jackson.core</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>${hadoop.version}</version>
            <exclusions>
                <exclusion>
                    <artifactId>netty-handler</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>netty-transport-native-epoll</artifactId>
                    <groupId>io.netty</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>commons-cli</artifactId>
                    <groupId>commons-cli</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>com.squareup.okhttp3</groupId>
            <artifactId>okhttp</artifactId>
            <version>4.10.0</version>
            <exclusions>
                <exclusion>
                    <artifactId>kotlin-stdlib-common</artifactId>
                    <groupId>org.jetbrains.kotlin</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>kotlin-stdlib</artifactId>
                    <groupId>org.jetbrains.kotlin</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-hive_2.12</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-exec</artifactId>
            <version>${hive.version}</version>
            <exclusions>
                <exclusion>
                    <artifactId>hadoop-annotations</artifactId>
                    <groupId>org.apache.hadoop</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>hadoop-auth</artifactId>
                    <groupId>org.apache.hadoop</groupId>
                </exclusion>
                <exclusion>
                    <artifactId>hadoop-common</artifactId>
                    <groupId>org.apache.hadoop</groupId>

</e