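Contents

Step 1: Prepare three servers

Step 2: Download KubeKey

Step 3: Create the cluster

1. Create a sample configuration file

2. Edit the configuration file

3. Create the cluster from the configuration file

4. Verify the installation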

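Step 1: Prepare three servers

- 1 × 4 CPU cores / 8 GB RAM (master)

- 2 × 8 CPU cores / 16 GB RAM (workers)

- CentOS 7.9

- All nodes can reach each other over the internal network

- Each machine has its own unique hostname

- The firewall allows ports 30000-32767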
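On CentOS 7.9 the port requirement above can be met with firewalld. A minimal sketch, assuming firewalld is the active firewall; the helper `open_nodeport_range` and the `DRY_RUN` switch are hypothetical, added so the commands are printed for review before you run them as root:

```shell
# Hypothetical helper: open a TCP port range (default 30000-32767) in firewalld.
# Assumes firewalld is running. DRY_RUN=1 (the default) only prints the commands.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

open_nodeport_range() {
  local range="${1:-30000-32767}"
  # Add the rule to the permanent configuration...
  run firewall-cmd --permanent --add-port="${range}/tcp"
  # ...and reload so the permanent configuration takes effect.
  run firewall-cmd --reload
}

open_nodeport_range
```

Set `DRY_RUN=0` to actually apply the rules on each node.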

| Host IP | Hostname | Role |
|---|---|---|
| 192.168.0.2 | master | control plane, etcd |
| 192.168.0.3 | node1 | worker |
| 192.168.0.4 | node2 | worker |
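If there is no internal DNS, one way (an assumption, not part of the original setup) to let every node resolve the others by name is a static `/etc/hosts` entry per node. A minimal sketch; the helper `hosts_entries` is hypothetical and the pairs follow the table above:

```shell
# Hypothetical helper: turn "IP=hostname" pairs into /etc/hosts lines.
hosts_entries() {
  local pair ip name
  for pair in "$@"; do
    ip="${pair%%=*}"    # text before the first "="
    name="${pair#*=}"   # text after the first "="
    printf '%s %s\n' "$ip" "$name"
  done
}

# Print the entries for review; to apply them, run as root on every node:
#   hosts_entries 192.168.0.2=master 192.168.0.3=node1 192.168.0.4=node2 >> /etc/hosts
hosts_entries 192.168.0.2=master 192.168.0.3=node1 192.168.0.4=node2
```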

```shell
# Set the hostname on each node: master, node1 or node2
hostnamectl set-hostname master
```

Step 2: Download KubeKey (master node)

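First run the following command so that KubeKey is downloaded from the correct zone (the China mirror):

```shell
export KKZONE=cn
```

Then run the following command to download KubeKey:

```shell
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.2 sh -
```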
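In `curl ... | sh -` the exit status is that of `sh`, so a failed download can go unnoticed. A minimal sketch that skips the download if `./kk` already exists and checks for the file afterwards; `ensure_kk`, `KK_VERSION` and `DRY_RUN` are hypothetical names, and it assumes the install script leaves `kk` in the current directory:

```shell
# Hypothetical wrapper around the download command above.
# DRY_RUN=1 (the default) prints the download command instead of running it.
DRY_RUN="${DRY_RUN:-1}"
KK_VERSION="${KK_VERSION:-v3.0.2}"

ensure_kk() {
  if [ -f ./kk ]; then
    echo "kk already downloaded"
    return 0
  fi
  if [ "$DRY_RUN" = "1" ]; then
    echo "curl -sfL https://get-kk.kubesphere.io | VERSION=${KK_VERSION} sh -"
    return 0
  fi
  export KKZONE=cn
  curl -sfL https://get-kk.kubesphere.io | VERSION="${KK_VERSION}" sh -
  # The pipeline reports the status of "sh", so check for the file explicitly.
  if [ ! -f ./kk ]; then
    echo "kk was not downloaded; check network access and KKZONE" >&2
    return 1
  fi
}

ensure_kk
```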

Make kk executable:

```shell
chmod +x kk
```

Step 3: Create the cluster (master node)

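1. Create a sample configuration file

The command syntax is:

```shell
./kk create config [--with-kubernetes version] [--with-kubesphere version] [(-f | --file) path]
```

For example:

```shell
./kk create config --with-kubernetes v1.20.4 --with-kubesphere v3.1.1
```

2. Edit the configuration file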

This creates a default file named config-sample.yaml. Edit it; the following is an example configuration for a multi-node cluster with one master node:

```yaml
spec:
  hosts:
  - {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, user: ubuntu, password: Testing123}
  - {name: node1, address: 192.168.0.3, internalAddress: 192.168.0.3, user: ubuntu, password: Testing123}
  - {name: node2, address: 192.168.0.4, internalAddress: 192.168.0.4, user: ubuntu, password: Testing123}
  roleGroups:
    etcd:
    - master
    control-plane:
    - master
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: ""
    port: 6443
```
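The `hosts` entries differ only in name and IP, so they can be generated rather than typed. A minimal sketch; `kk_hosts_yaml` is a hypothetical helper, and it assumes every node shares one SSH user and password and uses the same value for `address` and `internalAddress`, as in the sample above (a password containing `"` would need escaping):

```shell
# Hypothetical helper: print spec.hosts entries for config-sample.yaml.
# Usage: kk_hosts_yaml USER PASSWORD name=IP [name=IP ...]
kk_hosts_yaml() {
  local user="$1" password="$2" pair name ip
  shift 2
  for pair in "$@"; do
    name="${pair%%=*}"
    ip="${pair#*=}"
    printf '  - {name: %s, address: %s, internalAddress: %s, user: %s, password: "%s"}\n' \
      "$name" "$ip" "$ip" "$user" "$password"
  done
}

kk_hosts_yaml ubuntu Testing123 master=192.168.0.2 node1=192.168.0.3 node2=192.168.0.4
```

Paste the output under `hosts:` in config-sample.yaml.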

My modified version (note that the master node is also listed under `worker`, so it runs workloads too):

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: master, address: 172.31.0.2, internalAddress: 172.31.0.2, user: root, password: "W1234567@123"}
  - {name: node1, address: 172.31.0.3, internalAddress: 172.31.0.3, user: root, password: "W1234567@123"}
  - {name: node2, address: 172.31.0.4, internalAddress: 172.31.0.4, user: root, password: "W1234567q@123"}
  roleGroups:
    etcd:
    - master
    control-plane:
    - master
    worker:
    - master
    - node1
    - node2
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy

    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.20.4
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    privateRegistry: ""
    namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.1.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  zone: ""
  local_registry: ""
  etcd:
    monitoring: false
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    redis:
      enabled: false
    redisVolumSize: 2Gi
    openldap:
      enabled: false
    openldapVolumeSize: 2Gi
    minioVolumeSize: 20Gi
    monitoring:
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
    es:
      elasticsearchMasterVolumeSize: 4Gi
      elasticsearchDataVolumeSize: 20Gi
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchUrl: ""
      externalElasticsearchPort: ""
  console:
    enableMultiLogin: true
    port: 30880
  alerting:
    enabled: false
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
  devops:
    enabled: false
    jenkinsMemoryLim: 2Gi
    jenkinsMemoryReq: 1500Mi
    jenkinsVolumeSize: 8Gi
    jenkinsJavaOpts_Xms: 512m
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:
    enabled: false
    ruler:
      enabled: true
      replicas: 2
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    prometheusMemoryRequest: 400Mi
    prometheusVolumeSize: 20Gi
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
  kubeedge:
    enabled: false
    cloudCore:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      cloudhubPort: "10000"
      cloudhubQuicPort: "10001"
      cloudhubHttpsPort: "10002"
      cloudstreamPort: "10003"
      tunnelPort: "10004"
      cloudHub:
        advertiseAddress:
          - ""
        nodeLimit: "100"
      service:
        cloudhubNodePort: "30000"
        cloudhubQuicNodePort: "30001"
        cloudhubHttpsNodePort: "30002"
        cloudstreamNodePort: "30003"
        tunnelNodePort: "30004"
    edgeWatcher:
      nodeSelector: {"node-role.kubernetes.io/worker": ""}
      tolerations: []
      edgeWatcherAgent:
        nodeSelector: {"node-role.kubernetes.io/worker": ""}
        tolerations: []
```
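Every name under `roleGroups` has to match a `name` in `spec.hosts`, and a typo is easy to miss in a file this long. A minimal pre-flight sketch; `host_names` and `check_names` are hypothetical helpers that only understand the inline `- {name: ..., ...}` host form used above:

```shell
# Hypothetical helper: print the names declared as "- {name: X, ...}" in FILE.
host_names() {
  sed -n 's/^[[:space:]]*- {name: \([^,]*\),.*/\1/p' "$1"
}

# Hypothetical check: fail if any given name is not declared in FILE's hosts.
check_names() {
  local file="$1" n missing=0
  shift
  for n in "$@"; do
    if ! host_names "$file" | grep -qx "$n"; then
      echo "missing host: $n"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (names taken from roleGroups above):
#   check_names config-sample.yaml master node1 node2 && echo "roleGroups OK"
```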

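3. Create the cluster from the configuration file

```shell
./kk create cluster -f config-sample.yaml
```

At this point KubeKey reported that some required dependencies were missing, as shown below: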

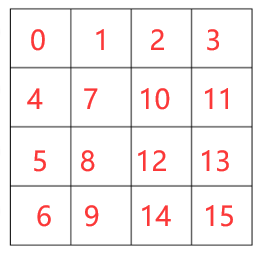

```text
22:33:18 CST [ConfirmModule] Display confirmation form
+--------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| name   | sudo | curl | openssl | ebtables | socat | ipset | ipvsadm | conntrack | chrony | docker | containerd | nfs client | ceph client | glusterfs client | time         |
+--------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
| master | y    | y    | y       |          |       |       |         |           | y      |        |            |            |             |                  | CST 22:33:18 |
| node1  | y    | y    | y       |          |       |       |         |           | y      |        |            |            |             |                  | CST 22:33:18 |
| node2  | y    | y    | y       |          |       |       |         |           | y      |        |            |            |             |                  | CST 22:33:18 |
+--------+------+------+---------+----------+-------+-------+---------+-----------+--------+--------+------------+------------+-------------+------------------+--------------+
22:33:18 CST [ERRO] master: conntrack is required.
22:33:18 CST [ERRO] master: socat is required.
22:33:18 CST [ERRO] node1: conntrack is required.
22:33:18 CST [ERRO] node1: socat is required.
22:33:18 CST [ERRO] node2: conntrack is required.
22:33:18 CST [ERRO] node2: socat is required.
```

Install the missing packages on every node:

```shell
yum install -y conntrack && yum install -y socat
```
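Logging in to each node to install the packages gets tedious as the cluster grows. A minimal sketch that runs the same `yum` command over SSH on every node from the master; it assumes root SSH access (the same root credentials as in config-sample.yaml) and the node IPs of my modified config, and `install_deps`, `NODES` and `DRY_RUN` are hypothetical names. With `DRY_RUN=1` (the default) it only prints the commands:

```shell
# Hypothetical helper: install conntrack and socat on every node over SSH.
DRY_RUN="${DRY_RUN:-1}"
NODES="${NODES:-172.31.0.2 172.31.0.3 172.31.0.4}"

install_deps() {
  local ip cmd="yum install -y conntrack socat"
  for ip in $NODES; do   # word splitting on NODES is intended
    if [ "$DRY_RUN" = "1" ]; then
      echo "ssh root@${ip} '${cmd}'"
    else
      ssh "root@${ip}" "$cmd"
    fi
  done
}

install_deps
```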

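Then run the installation command again:

```shell
./kk create cluster -f config-sample.yaml
```

The whole installation takes roughly 10 to 20 minutes, depending on your machines and network environment.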
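While waiting, you can follow the KubeSphere installer's log. A minimal sketch, assuming `kubectl` is already configured on the master node; the function names are hypothetical, and the pod label selector is the one the KubeSphere documentation uses for the `ks-installer` pod:

```shell
# Hypothetical helper: print the name of the ks-installer pod.
installer_pod() {
  kubectl get pod -n kubesphere-system \
    -l 'app in (ks-install, ks-installer)' \
    -o jsonpath='{.items[0].metadata.name}'
}

# Hypothetical helper: stream the installer log until interrupted (Ctrl+C).
follow_installer_log() {
  kubectl logs -n kubesphere-system "$(installer_pod)" -f
}

# Usage on the master node:
#   follow_installer_log
```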

4. Verify the installation

When the installation completes, you will see output similar to the following:

```text
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################

Console: http://192.168.0.2:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     the "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io             20xx-xx-xx xx:xx:xx
#####################################################
```

You can now open the KubeSphere web console at `<NodeIP>:30880` and log in with the default account and password (admin/P@88w0rd).
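The NOTES above ask you to wait until all components are running. A minimal sketch of two command-line checks, assuming `kubectl` is configured on the master and `curl` is installed; `not_running_pods` and `console_status` are hypothetical helpers:

```shell
# Hypothetical helper: read `kubectl get pods -A --no-headers` output on stdin and
# print every pod whose STATUS (4th column) is neither Running nor Completed.
not_running_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}

# Hypothetical helper: print the HTTP status code the console returns on port 30880.
# curl prints 000 when nothing is listening yet.
console_status() {
  curl -s -o /dev/null -w '%{http_code}' "http://$1:30880"
}

# Usage on the master node:
#   kubectl get pods -A --no-headers | not_running_pods   # empty output: all pods up
#   console_status 192.168.0.2
```

Empty output from the first check means every pod is Running or Completed.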