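Table of contents

- Feature flow tables

- Adding a Pod

- Adding a subnet

- Cross-subnet pod-to-pod access

- Accessing external networks

- DHCP

- Static routes

- Policy routes

- Security groups

- DNAT

- LB

- FullNAT LB

- Service extensions

- Src-ip LB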

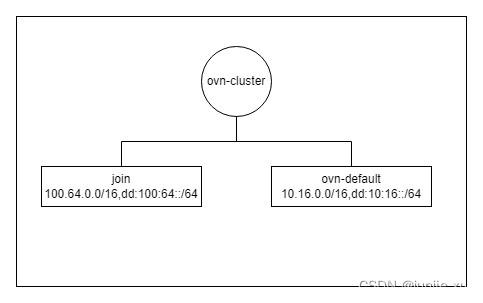

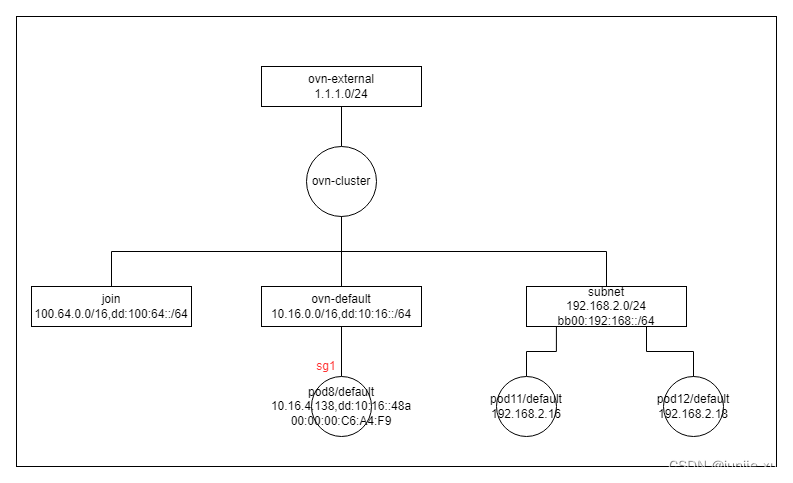

Feature flow tables

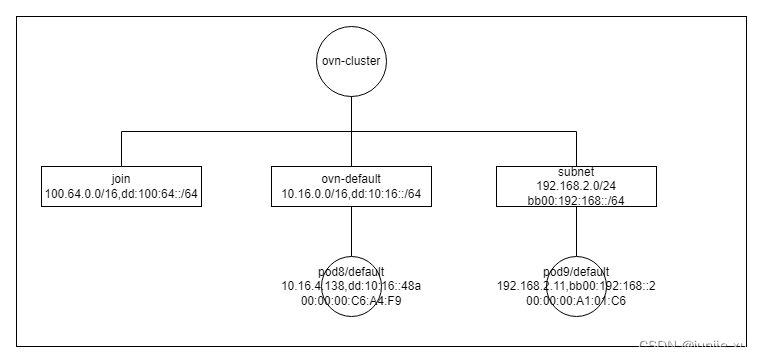

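The features below are configured on top of this model so that we can watch how the flow tables change and discuss how each feature is implemented.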

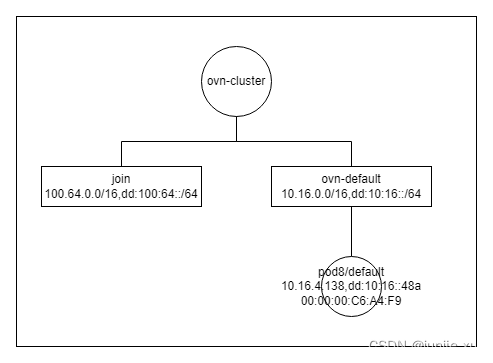

Adding a Pod

Flows added:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

For traffic going from the ovn-cluster logical_router to the ovn-default logical_switch whose destination IPv4/IPv6 address is pod8's, the destination MAC is rewritten to 00:00:00:C6:A4:F9.

table=15(lr_in_arp_resolve ), priority=100 , match=(outport == "ovn-cluster-ovn-default" && reg0 == 10.16.4.138), action=(eth.dst = 00:00:00:c6:a4:f9; next;)

table=15(lr_in_arp_resolve ), priority=100 , match=(outport == "ovn-cluster-ovn-default" && xxreg0 == dd:10:16::48a), action=(eth.dst = 00:00:00:c6:a4:f9; next;)

Datapath: "ovn-default" (b6a669bb-984d-4c04-9622-64dba046cd55) Pipeline: ingress

Traffic entering from pod8 is passed on to the next table.

table=0 (ls_in_port_sec_l2 ), priority=50 , match=(inport == "pod8.default"), action=(next;)

Traffic whose destination IPv4/IPv6 address is pod8's gets its destination MAC set to pod8's MAC.

table=14(ls_in_after_lb ), priority=50 , match=(ip4.dst == 10.16.4.138), action=(eth.dst = 00:00:00:c6:a4:f9; next;)

table=14(ls_in_after_lb ), priority=50 , match=(ip6.dst == dd:10:16::48a), action=(eth.dst = 00:00:00:c6:a4:f9; next;)

An ARP request (or IPv6 neighbor solicitation) sent by pod8 for its own IP, i.e. a GARP, is passed on with next and is not answered.

table=19(ls_in_arp_rsp ), priority=100 , match=(arp.tpa == 10.16.4.138 && arp.op == 1 && inport == "pod8.default"), action=(next;)

table=19(ls_in_arp_rsp ), priority=100 , match=(nd_ns && ip6.dst == {dd:10:16::48a, ff02::1:ff00:48a} && nd.target == dd:10:16::48a && inport == "pod8.default"), action=(next;)

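ARP requests for pod8's IP are answered directly with pod8's MAC address. The action is explained in detail here; later sections only mention it briefly.

// arp.op = 2: ARP reply

// arp.tha = arp.sha; arp.sha = 00:00:00:c6:a4:f9: set the MAC fields of the ARP reply

// arp.tpa = arp.spa; arp.spa = 10.16.4.138: set the IP fields of the ARP reply

// outport = inport; flags.loopback = 1; output;: send the reply back out of the port the request came in on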

table=19(ls_in_arp_rsp ), priority=50 , match=(arp.tpa == 10.16.4.138 && arp.op == 1), action=(eth.dst = eth.src; eth.src = 00:00:00:c6:a4:f9; arp.op = 2; /* ARP reply */ arp.tha = arp.sha; arp.sha = 00:00:00:c6:a4:f9; arp.tpa = arp.spa; arp.spa = 10.16.4.138; outport = inport; flags.loopback = 1; output;)

table=19(ls_in_arp_rsp ), priority=50 , match=(nd_ns && ip6.dst == {dd:10:16::48a, ff02::1:ff00:48a} && nd.target == dd:10:16::48a), action=(nd_na { eth.src = 00:00:00:c6:a4:f9; ip6.src = dd:10:16::48a; nd.target = dd:10:16::48a; nd.tll = 00:00:00:c6:a4:f9; outport = inport; flags.loopback = 1; output; };)
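The ARP responder action above is a sequence of field rewrites applied to the request packet. The following is a minimal Python sketch of that rewrite, assuming the packet is modeled as a plain dict; the `arp_reply` helper, the dict layout, and the requester's port, MAC and IP are all hypothetical and only serve to illustrate the order of the assignments.

```python
def arp_reply(request: dict, owner_mac: str, owner_ip: str) -> dict:
    """Turn an ARP request into a reply, mirroring the ls_in_arp_rsp action."""
    reply = dict(request)
    # eth.dst = eth.src; eth.src = <owner MAC>
    reply["eth.dst"] = request["eth.src"]
    reply["eth.src"] = owner_mac
    # arp.op = 2 (ARP reply)
    reply["arp.op"] = 2
    # arp.tha = arp.sha; arp.sha = <owner MAC>
    reply["arp.tha"] = request["arp.sha"]
    reply["arp.sha"] = owner_mac
    # arp.tpa = arp.spa; arp.spa = <owner IP>
    reply["arp.tpa"] = request["arp.spa"]
    reply["arp.spa"] = owner_ip
    # outport = inport; flags.loopback = 1 allows output on the ingress port
    reply["outport"] = request["inport"]
    reply["flags.loopback"] = 1
    return reply


# Hypothetical requester in the same subnet asking for pod8's address.
request = {
    "inport": "podX.default",
    "eth.src": "00:00:00:aa:bb:cc",
    "eth.dst": "ff:ff:ff:ff:ff:ff",
    "arp.op": 1,
    "arp.sha": "00:00:00:aa:bb:cc",
    "arp.spa": "10.16.4.200",
    "arp.tha": "00:00:00:00:00:00",
    "arp.tpa": "10.16.4.138",
}
reply = arp_reply(request, "00:00:00:c6:a4:f9", "10.16.4.138")
print(reply)
```

Because the reply is built in the logical switch pipeline itself, the request never has to reach pod8.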

Traffic whose destination MAC is pod8's MAC is sent out of the pod8.default interface.

table=25(ls_in_l2_lkup ), priority=50 , match=(eth.dst == 00:00:00:c6:a4:f9), action=(outport = "pod8.default"; output;)

Datapath: "ovn-default" (b6a669bb-984d-4c04-9622-64dba046cd55) Pipeline: egress

table=9 (ls_out_port_sec_l2 ), priority=50 , match=(outport == "pod8.default"), action=(output;)

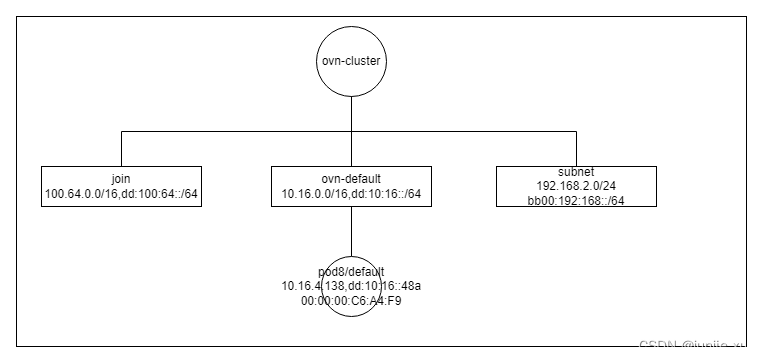

Adding a subnet

Adding a subnet creates the ingress and egress tables of the subnet's logical_switch. Beyond that, look at how the flow tables on ovn-cluster change.

Flows added:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

ARP requests arriving from the subnet are used to look up and learn the mac_binding.

table=1 (lr_in_lookup_neighbor), priority=100 , match=(inport == "ovn-cluster-subnet" && arp.spa == 192.168.2.0/24 && arp.op == 1), action=(reg9[2] = lookup_arp(inport, arp.spa, arp.sha); next;)

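If the LR receives a packet whose TTL is 1 or 0, the packet is considered unable to reach its destination, and an ICMP Time Exceeded message (TTL exceeded in transit) is sent back the way it came.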

table=3 (lr_in_ip_input ), priority=100 , match=(inport == "ovn-cluster-subnet" && ip4 && ip.ttl == {0, 1} && !ip.later_frag), action=(icmp4 {eth.dst <-> eth.src; icmp4.type = 11; /* Time exceeded */ icmp4.code = 0; /* TTL exceeded in transit */ ip4.dst = ip4.src; ip4.src = 192.168.2.1 ; ip.ttl = 254; outport = "ovn-cluster-subnet"; flags.loopback = 1; output; };)

table=3 (lr_in_ip_input ), priority=100 , match=(inport == "ovn-cluster-subnet" && ip6 && ip6.src == bb00:192:168::/64 && ip.ttl == {0, 1} && !ip.later_frag), action=(icmp6 {eth.dst <-> eth.src; ip6.dst = ip6.src; ip6.src = bb00:192:168::1 ; ip.ttl = 254; icmp6.type = 3; /* Time exceeded */ icmp6.code = 0; /* TTL exceeded in transit */ outport = "ovn-cluster-subnet"; flags.loopback = 1; output; };)

Drop packets whose source IP is the gateway or the broadcast address.

table=3 (lr_in_ip_input ), priority=100 , match=(ip4.src == {192.168.2.1, 192.168.2.255} && reg9[0] == 0), action=(drop;)

DHCPv6 packets whose IPv6 destination is the subnet gateway are handled by handle_dhcpv6_reply.

table=3 (lr_in_ip_input ), priority=100 , match=(ip6.dst == bb00:192:168::1 && udp.src == 547 && udp.dst == 546), action=(reg0 = 0; handle_dhcpv6_reply;)

table=3 (lr_in_ip_input ), priority=100 , match=(ip6.dst == fe80::200:ff:fe40:8eb1 && udp.src == 547 && udp.dst == 546), action=(reg0 = 0; handle_dhcpv6_reply;)

Reply to ARP or ND requests for the gateway coming from the subnet.

table=3 (lr_in_ip_input ), priority=90 , match=(inport == "ovn-cluster-subnet" && arp.op == 1 && arp.tpa == 192.168.2.1 && arp.spa == 192.168.2.0/24), action=(eth.dst = eth.src; eth.src = xreg0[0..47]; arp.op = 2; /* ARP reply */ arp.tha = arp.sha; arp.sha = xreg0[0..47]; arp.tpa <-> arp.spa; outport = inport; flags.loopback = 1; output;)

table=3 (lr_in_ip_input ), priority=90 , match=(inport == "ovn-cluster-subnet" && ip6.dst == {bb00:192:168::1, ff02::1:ff00:1} && nd_ns && nd.target == bb00:192:168::1), action=(nd_na_router { eth.src = xreg0[0..47]; ip6.src = nd.target; nd.tll = xreg0[0..47]; outport = inport; flags.loopback = 1; output; };)

table=3 (lr_in_ip_input ), priority=90 , match=(inport == "ovn-cluster-subnet" && ip6.dst == {fe80::200:ff:fe40:8eb1, ff02::1:ff40:8eb1} && nd_ns && nd.target == fe80::200:ff:fe40:8eb1), action=(nd_na_router { eth.src = xreg0[0..47]; ip6.src = nd.target; nd.tll = xreg0[0..47]; outport = inport; flags.loopback = 1; output; };)

Reply to pings of the gateway coming from the subnet.

table=3 (lr_in_ip_input ), priority=90 , match=(ip4.dst == 192.168.2.1 && icmp4.type == 8 && icmp4.code == 0), action=(ip4.dst <-> ip4.src; ip.ttl = 255; icmp4.type = 0; flags.loopback = 1; next; )

table=3 (lr_in_ip_input ), priority=90 , match=(ip6.dst == {bb00:192:168::1, fe80::200:ff:fe40:8eb1} && icmp6.type == 128 && icmp6.code == 0), action=(ip6.dst <-> ip6.src; ip.ttl = 255; icmp6.type = 129; flags.loopback = 1; next; )

Drop all other packets addressed to the gateway.

table=3 (lr_in_ip_input ), priority=60 , match=(ip4.dst == {192.168.2.1}), action=(drop;)

table=3 (lr_in_ip_input ), priority=60 , match=(ip6.dst == {bb00:192:168::1, fe80::200:ff:fe40:8eb1}), action=(drop;)

The next two flows handle IPv6 router solicitations: the first fills in the RA options, the second sends the reply.

table=8 (lr_in_nd_ra_options), priority=50 , match=(inport == "ovn-cluster-subnet" && ip6.dst == ff02::2 && nd_rs), action=(reg0[5] = put_nd_ra_opts(addr_mode = "dhcpv6_stateful", slla = 00:00:00:40:8e:b1, prefix = bb00:192:168::/64); next;)

table=9 (lr_in_nd_ra_response), priority=50 , match=(inport == "ovn-cluster-subnet" && ip6.dst == ff02::2 && nd_ra && reg0[5]), action=(eth.dst = eth.src; eth.src = 00:00:00:40:8e:b1; ip6.dst = ip6.src; ip6.src = fe80::200:ff:fe40:8eb1; outport = inport; flags.loopback = 1; output;)

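Traffic from the subnet to fe80::/64 is sent back out of the subnet port, with the gateway's link-local address and MAC as source.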

table=11(lr_in_ip_routing ), priority=194 , match=(inport == "ovn-cluster-subnet" && ip6.dst == fe80::/64), action=(ip.ttl--; reg8[0..15] = 0; xxreg0 = ip6.dst; xxreg1 = fe80::200:ff:fe40:8eb1; eth.src = 00:00:00:40:8e:b1; outport = "ovn-cluster-subnet"; flags.loopback = 1; next;)

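Traffic from other networks to this subnet: the source MAC is changed to the gateway MAC and the outport is set to the subnet's gateway port. These are the routes to the subnet.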

table=11(lr_in_ip_routing ), priority=194 , match=(ip6.dst == bb00:192:168::/64), action=(ip.ttl--; reg8[0..15] = 0; xxreg0 = ip6.dst; xxreg1 = bb00:192:168::1; eth.src = 00:00:00:40:8e:b1; outport = "ovn-cluster-subnet"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=74 , match=(ip4.dst == 192.168.2.0/24), action=(ip.ttl--; reg8[0..15] = 0; reg0 = ip4.dst; reg1 = 192.168.2.1; eth.src = 00:00:00:40:8e:b1; outport = "ovn-cluster-subnet"; flags.loopback = 1; next;)

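Policy routes: for packets sent from the subnet by a pod on the master node, the master node's ovn0 is the next hop, the source MAC is changed to that of the join network gateway, and the outport is updated. The worker nodes get equivalent entries.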

table=13(lr_in_policy ), priority=31000, match=(ip4.dst == 192.168.2.0/24), action=(reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=31000, match=(ip6.dst == bb00:192:168::/64), action=(reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip4.src == $subnet.master_ip4), action=(reg0 = 100.64.0.2; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip4.src == $subnet.worker2_ip4), action=(reg0 = 100.64.0.4; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip4.src == $subnet.worker_ip4), action=(reg0 = 100.64.0.3; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip6.src == $subnet.master_ip6), action=(xxreg0 = dd:100:64::2; xxreg1 = dd:100:64::1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip6.src == $subnet.worker2_ip6), action=(xxreg0 = dd:100:64::4; xxreg1 = dd:100:64::1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip6.src == $subnet.worker_ip6), action=(xxreg0 = dd:100:64::3; xxreg1 = dd:100:64::1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: egress

table=6 (lr_out_delivery ), priority=100 , match=(outport == "ovn-cluster-subnet"), action=(output;)

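The IPv6 entries in table 3 come in pairs: the first uses the IPv6 gateway configured on the subnet, the second uses the IPv6 address generated from the gateway port's MAC address.

The address is generated as follows. The prefix is fixed to fe80::. In the MAC address, the seventh bit of the first octet is flipped, so the first octet 00 becomes 02 and the MAC becomes 02:00:00:40:8E:B1. Then ff:fe is inserted in the middle, giving 0200:00ff:fe40:8eb1. The resulting IPv6 address is fe80::0200:00ff:fe40:8eb1.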
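The derivation above (flip one bit, insert ff:fe, prepend fe80::) can be written as a short Python sketch. The helper name `mac_to_link_local` is made up for this illustration; only the standard `ipaddress` module is used.

```python
import ipaddress


def mac_to_link_local(mac: str) -> ipaddress.IPv6Address:
    """Derive the fe80:: link-local address from a MAC (modified EUI-64)."""
    octets = [int(part, 16) for part in mac.split(":")]
    if len(octets) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    # Flip the seventh bit of the first octet (00 -> 02).
    octets[0] ^= 0x02
    # Insert ff:fe between the two halves of the MAC.
    interface_id = octets[:3] + [0xFF, 0xFE] + octets[3:]
    # fe80::/64 prefix: fe 80 followed by six zero bytes.
    prefix = bytes([0xFE, 0x80]) + bytes(6)
    return ipaddress.IPv6Address(prefix + bytes(interface_id))


# Gateway port MAC of the subnet and pod8's MAC from the flows above.
print(mac_to_link_local("00:00:00:40:8e:b1"))  # fe80::200:ff:fe40:8eb1
print(mac_to_link_local("00:00:00:c6:a4:f9"))  # fe80::200:ff:fec6:a4f9
```

The printed form drops leading zeros in each group, which is why the flows show fe80::200:ff:fe40:8eb1 rather than fe80::0200:00ff:fe40:8eb1.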

Cross-subnet pod-to-pod access

Next, create pod9 (192.168.2.11) in subnet and trace the access from pod8 to pod9.

$ kubectl ko trace default/pod8 192.168.2.11 icmp

ingress(dp="ovn-default", inport="pod8.default")

------------------------------------------------

Admission: packets coming from pod8 are allowed.

0. ls_in_port_sec_l2 (northd.c:5607): inport == "pod8.default", priority 50, uuid db8107b9

next;

Set the ACL hints. reg0[8]: the packet may hit an allow ACL without needing a conntrack commit; reg0[9]: the packet may hit a drop ACL.

8. ls_in_acl_hint (northd.c:6141): !ct.trk, priority 5, uuid ea0a4f48

reg0[8] = 1;

reg0[9] = 1;

next;

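Check whether the destination is in the same subnet. If it is not, move on to the next table; if it is, update the destination MAC, because the MAC for a destination IP inside the subnet is already known.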

14. ls_in_after_lb (northd.c:7095): ip4.dst != {10.16.0.0/16}, priority 60, uuid 822e2991

next;

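The packet has to leave through the gateway port: the destination MAC is the gateway MAC, so the outport is set to the router port and the output action is executed.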

25. ls_in_l2_lkup (northd.c:8745): eth.dst == 00:00:00:63:b3:f9, priority 50, uuid aaecf203

outport = "ovn-default-ovn-cluster";

output;

egress(dp="ovn-default", inport="pod8.default", outport="ovn-default-ovn-cluster")

----------------------------------------------------------------------------------

0. ls_out_pre_lb (northd.c:5757): ip && outport == "ovn-default-ovn-cluster", priority 110, uuid 35b4eb79

next;

The egress ACL hints are set in the same way.

3. ls_out_acl_hint (northd.c:6141): !ct.trk, priority 5, uuid 80cbad44

reg0[8] = 1;

reg0[9] = 1;

next;

9. ls_out_port_sec_l2 (northd.c:5704): outport == "ovn-default-ovn-cluster", priority 50, uuid 9a68d043

output;

/* output to "ovn-default-ovn-cluster", type "l3gateway" */

ingress(dp="ovn-cluster", inport="ovn-cluster-ovn-default")

-----------------------------------------------------------

Admission check: packets from the subnet whose destination MAC is this gateway's MAC are allowed.

0. lr_in_admission (northd.c:11022): eth.dst == 00:00:00:63:b3:f9 && inport == "ovn-cluster-ovn-default", priority 50, uuid 75733dd4

xreg0[0..47] = 00:00:00:63:b3:f9;

next;

1. lr_in_lookup_neighbor (northd.c:11166): 1, priority 0, uuid 498b58fa

reg9[2] = 1;

next;

2. lr_in_learn_neighbor (northd.c:11175): reg9[2] == 1, priority 100, uuid c709ddc3

next;

10. lr_in_ip_routing_pre (northd.c:11398): 1, priority 0, uuid f62bcaaf

reg7 = 0;

next;

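The destination is in 192.168.2.0/24: decrement the TTL, set reg0 to the destination address, reg1 to the gateway address of the destination subnet, the source MAC to that gateway's MAC, and the outport to the destination subnet's gateway port.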

11. lr_in_ip_routing (northd.c:9909): ip4.dst == 192.168.2.0/24, priority 74, uuid 31ceb650

ip.ttl--;

reg8[0..15] = 0;

reg0 = ip4.dst;

reg1 = 192.168.2.1;

eth.src = 00:00:00:40:8e:b1;

outport = "ovn-cluster-subnet";

flags.loopback = 1;

next;

12. lr_in_ip_routing_ecmp (northd.c:11474): reg8[0..15] == 0, priority 150, uuid d30dd2a0

next;

Policy routing; the outport is left unchanged.

13. lr_in_policy (northd.c:9125): ip4.dst == 192.168.2.0/24, priority 31000, uuid 614460de

reg8[0..15] = 0;

next;

14. lr_in_policy_ecmp (northd.c:11610): reg8[0..15] == 0, priority 150, uuid e0a09b2e

next;

The destination is 192.168.2.11, so the destination MAC is set to 00:00:00:0b:d8:da.

15. lr_in_arp_resolve (northd.c:11811): outport == "ovn-cluster-subnet" && reg0 == 192.168.2.11, priority 100, uuid 508186d4

eth.dst = 00:00:00:0b:d8:da;

next;

The destination MAC is already set, so no ARP request is sent.

19. lr_in_arp_request (northd.c:12308): 1, priority 0, uuid 3c422c04

output;

egress(dp="ovn-cluster", inport="ovn-cluster-ovn-default", outport="ovn-cluster-subnet")

----------------------------------------------------------------------------------------

0. lr_out_chk_dnat_local (northd.c:13530): 1, priority 0, uuid b0688753

reg9[4] = 0;

next;

6. lr_out_delivery (northd.c:12355): outport == "ovn-cluster-subnet", priority 100, uuid 559ce0d5

output;

/* output to "ovn-cluster-subnet", type "l3gateway" */

ingress(dp="subnet", inport="subnet-ovn-cluster")

-------------------------------------------------

0. ls_in_port_sec_l2 (northd.c:5607): inport == "subnet-ovn-cluster", priority 50, uuid d6f2499b

next;

6. ls_in_pre_lb (northd.c:5754): ip && inport == "subnet-ovn-cluster", priority 110, uuid b1914c0f

next;

8. ls_in_acl_hint (northd.c:6141): !ct.trk, priority 5, uuid ea0a4f48

reg0[8] = 1;

reg0[9] = 1;

next;

The destination is 192.168.2.11, so the destination MAC is set to 00:00:00:0b:d8:da.

14. ls_in_after_lb (northd.c:7165): ip4.dst == 192.168.2.11, priority 50, uuid ad5447a8

eth.dst = 00:00:00:0b:d8:da;

next;

The destination MAC is 00:00:00:0b:d8:da, so the outport is pod9.default.

25. ls_in_l2_lkup (northd.c:8674): eth.dst == 00:00:00:0b:d8:da, priority 50, uuid eb131381

outport = "pod9.default";

output;

egress(dp="subnet", inport="subnet-ovn-cluster", outport="pod9.default")

------------------------------------------------------------------------

An LB is configured on the LS, so the packet goes through the LB and conntrack checks.

0. ls_out_pre_lb (northd.c:6012): ip, priority 100, uuid 16fc6eff

reg0[2] = 1;

next;

2. ls_out_pre_stateful (northd.c:6062): reg0[2] == 1, priority 110, uuid 953b71bb

ct_lb_mark;

ct_lb_mark

----------

3. ls_out_acl_hint (northd.c:6119): ct.new && !ct.est, priority 7, uuid 26402d99

reg0[7] = 1;

reg0[9] = 1;

next;

4. ls_out_acl (northd.c:6716): ip && (!ct.est || (ct.est && ct_mark.blocked == 1)), priority 1, uuid fdd00aa8

reg0[1] = 1;

next;

7. ls_out_stateful (northd.c:7231): reg0[1] == 1 && reg0[13] == 0, priority 100, uuid fc7cf441

ct_commit { ct_mark.blocked = 0; };

next;

9. ls_out_port_sec_l2 (northd.c:5704): outport == "pod9.default", priority 50, uuid 5412055e

output;

/* output to "pod9.default", type "" */

Accessing external networks

pod9 accesses 114.114.114.114.

$ kubectl ko trace default/pod9 114.114.114.114 udp 53

ingress(dp="subnet", inport="pod9.default")

-------------------------------------------

egress(dp="subnet", inport="pod9.default", outport="subnet-ovn-cluster")

------------------------------------------------------------------------

ingress(dp="ovn-cluster", inport="ovn-cluster-subnet")

------------------------------------------------------

...

The policy route takes effect: ip4.src == $subnet.worker_ip4 reroutes to 100.64.0.3. The outport is the join network and the next hop is the ovn0 IP of the worker node.

13. lr_in_policy (northd.c:9125): ip4.src == $subnet.worker_ip4, priority 29000, uuid 2e224492

reg0 = 100.64.0.3;

reg1 = 100.64.0.1;

eth.src = 00:00:00:e9:15:ff;

outport = "ovn-cluster-join";

flags.loopback = 1;

reg8[0..15] = 0;

next;

Set the destination MAC to ovn0's MAC.

15. lr_in_arp_resolve (northd.c:11811): outport == "ovn-cluster-join" && reg0 == 100.64.0.3, priority 100, uuid 36bca89c

eth.dst = 00:00:00:32:7e:12;

next;

19. lr_in_arp_request (northd.c:12308): 1, priority 0, uuid 3c422c04

output;

egress(dp="ovn-cluster", inport="ovn-cluster-subnet", outport="ovn-cluster-join")

---------------------------------------------------------------------------------

ingress(dp="join", inport="join-ovn-cluster")

---------------------------------------------

The destination MAC is that of ovn0 on the worker node, so the outport is node-worker.

25. ls_in_l2_lkup (northd.c:8674): eth.dst == 00:00:00:32:7e:12, priority 50, uuid bd93d956

outport = "node-worker";

output;

egress(dp="join", inport="join-ovn-cluster", outport="node-worker")

-------------------------------------------------------------------

9. ls_out_port_sec_l2 (northd.c:5704): outport == "node-worker", priority 50, uuid 16e2ea51

output;

/* output to "node-worker", type "" */

DHCP

Compare the flows of a subnet with and without DHCP configured.

Configured on the logical_router:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

For IPv6 router solicitations coming from this subnet, the prefix options are filled in and the reply is sent with the gateway MAC.

table=8 (lr_in_nd_ra_options), priority=50 , match=(inport == "ovn-cluster-subnet" && ip6.dst == ff02::2 && nd_rs), action=(reg0[5] = put_nd_ra_opts(addr_mode = "dhcpv6_stateful", slla = 00:00:00:40:8e:b1, prefix = bb00:192:168::/64); next;)

table=9 (lr_in_nd_ra_response), priority=50 , match=(inport == "ovn-cluster-subnet" && ip6.dst == ff02::2 && nd_ra && reg0[5]), action=(eth.dst = eth.src; eth.src = 00:00:00:40:8e:b1; ip6.dst = ip6.src; ip6.src = fe80::200:ff:fe40:8eb1; outport = inport; flags.loopback = 1; output;)

What about the actual IP addresses?

Look at how the subnet's flow tables change when a pod is added to it.

Datapath: "subnet" (bc26eedb-61fd-42f7-8841-52442ed3598e) Pipeline: ingress

If traffic arriving on pod9's lsp is a DHCP request, the DHCP options for that port are attached.

table=20(ls_in_dhcp_options ), priority=100 , match=(inport == "pod9.default" && eth.src == 00:00:00:a1:01:c6 && ip4.src == 0.0.0.0 && ip4.dst == 255.255.255.255 && udp.src == 68 && udp.dst == 67), action=(reg0[3] = put_dhcp_opts(offerip = 192.168.2.11, lease_time = 3600, netmask = 255.255.255.0, router = 192.168.2.1, server_id = 169.254.0.254); next;)

table=20(ls_in_dhcp_options ), priority=100 , match=(inport == "pod9.default" && eth.src == 00:00:00:a1:01:c6 && ip4.src == 192.168.2.11 && ip4.dst == {169.254.0.254, 255.255.255.255} && udp.src == 68 && udp.dst == 67), action=(reg0[3] = put_dhcp_opts(offerip = 192.168.2.11, lease_time = 3600, netmask = 255.255.255.0, router = 192.168.2.1, server_id = 169.254.0.254); next;)

ipv6

table=20(ls_in_dhcp_options ), priority=100 , match=(inport == "pod9.default" && eth.src == 00:00:00:a1:01:c6 && ip6.dst == ff02::1:2 && udp.src == 546 && udp.dst == 547), action=(reg0[3] = put_dhcpv6_opts(ia_addr = bb00:192:168::2, server_id = 00:00:00:5E:04:65); next;)

The DHCP reply:

table=21(ls_in_dhcp_response), priority=100 , match=(inport == "pod9.default" && eth.src == 00:00:00:a1:01:c6 && ip4 && udp.src == 68 && udp.dst == 67 && reg0[3]), action=(eth.dst = eth.src; eth.src = 00:00:02:2E:2F:B8; ip4.src = 169.254.0.254; udp.src = 67; udp.dst = 68; outport = inport; flags.loopback = 1; output;)

table=21(ls_in_dhcp_response), priority=100 , match=(inport == "pod9.default" && eth.src == 00:00:00:a1:01:c6 && ip6.dst == ff02::1:2 && udp.src == 546 && udp.dst == 547 && reg0[3]), action=(eth.dst = eth.src; eth.src = 00:00:00:5E:04:65; ip6.dst = ip6.src; ip6.src = fe80::200:ff:fe5e:465; udp.src = 547; udp.dst = 546; outport = inport; flags.loopback = 1; output;)
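The IPv4 values passed to put_dhcp_opts above follow from the subnet's CIDR, its gateway and the pod's IP. The Python sketch below reproduces them under that assumption; the helper `dhcpv4_options` is hypothetical, and lease_time and server_id are simply copied from the listing as defaults.

```python
import ipaddress


def dhcpv4_options(cidr, gateway, pod_ip, lease_time=3600, server_id="169.254.0.254"):
    """Build the option set seen in the ls_in_dhcp_options flows (illustrative only)."""
    network = ipaddress.ip_network(cidr)
    if ipaddress.ip_address(pod_ip) not in network:
        raise ValueError(f"{pod_ip} is not inside {cidr}")
    return {
        "offerip": pod_ip,
        "lease_time": lease_time,
        "netmask": str(network.netmask),  # derived from the prefix length
        "router": gateway,
        "server_id": server_id,
    }


opts = dhcpv4_options("192.168.2.0/24", "192.168.2.1", "192.168.2.11")
print(opts)
```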

Static routes

Show the routing table of ovn-cluster in its default state:

$ kubectl ko nbctl lr-route-list ovn-cluster

IPv4 Routes

Route Table <main>:

0.0.0.0/0 100.64.0.1 dst-ip

IPv6 Routes

Route Table <main>:

::/0 dd:100:64::1 dst-ip

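The flows are as follows. They include the routes for the Logical_Switches attached to the logical router as well as the static routes.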

table=11(lr_in_ip_routing ), priority=194 , match=(ip6.dst == bb00:192:168::/64), action=(ip.ttl--; reg8[0..15] = 0; xxreg0 = ip6.dst; xxreg1 = bb00:192:168::1; eth.src = 00:00:00:c8:1c:8a; outport = "ovn-cluster-subnet"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=74 , match=(ip4.dst == 192.168.2.0/24), action=(ip.ttl--; reg8[0..15] = 0; reg0 = ip4.dst; reg1 = 192.168.2.1; eth.src = 00:00:00:c8:1c:8a; outport = "ovn-cluster-subnet"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=50 , match=(ip4.dst == 10.16.0.0/16), action=(ip.ttl--; reg8[0..15] = 0; reg0 = ip4.dst; reg1 = 10.16.0.1; eth.src = 00:00:00:63:b3:f9; outport = "ovn-cluster-ovn-default"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=50 , match=(ip4.dst == 100.64.0.0/16), action=(ip.ttl--; reg8[0..15] = 0; reg0 = ip4.dst; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=1 , match=(reg7 == 0 && ip4.dst == 0.0.0.0/0), action=(ip.ttl--; reg8[0..15] = 0; reg0 = 100.64.0.1; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; next;)

table=11(lr_in_ip_routing ), priority=1 , match=(reg7 == 0 && ip6.dst == ::/0), action=(ip.ttl--; reg8[0..15] = 0; xxreg0 = dd:100:64::1; xxreg1 = dd:100:64::1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; next;)

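A static route can match on the source IP as well as on the destination IP.

A concrete use of this, in combination with NAT, appears in the DNAT section.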

$ kubectl ko nbctl --policy=src-ip lr-route-add ovn-cluster 192.168.2.11/32 100.64.0.1

In the flow table:

table=11(lr_in_ip_routing ), priority=96 , match=(ip4.src == 192.168.2.11/32), action=(ip.ttl--; reg8[0..15] = 0; reg0 = 100.64.0.1; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; next;)
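The priorities in the lr_in_ip_routing listings are consistent with a simple rule: prefix length times 3, plus an offset of 2 for the routes of directly attached subnets, 1 for dst-ip static routes and 0 for src-ip static routes. This rule is inferred from the values shown in this article, not quoted from the ovn-northd source, and the function below is only a sketch that checks it against those values.

```python
import ipaddress

# Offsets inferred from the listings in this article (an assumption, not an API).
OFFSETS = {"connected": 2, "static-dst": 1, "static-src": 0}


def route_priority(prefix: str, kind: str) -> int:
    """Logical flow priority for a route, as observed in lr_in_ip_routing."""
    prefix_len = ipaddress.ip_network(prefix).prefixlen
    return prefix_len * 3 + OFFSETS[kind]


observed = [
    ("bb00:192:168::/64", "connected", 194),
    ("192.168.2.0/24", "connected", 74),
    ("10.16.0.0/16", "connected", 50),
    ("100.64.0.0/16", "connected", 50),
    ("0.0.0.0/0", "static-dst", 1),
    ("::/0", "static-dst", 1),
    ("192.168.2.11/32", "static-src", 96),
]
for prefix, kind, priority in observed:
    print(prefix, kind, route_priority(prefix, kind), priority)
```

Since a longer prefix always yields a higher priority, the highest-priority matching flow is also the longest-prefix match, and the /32 src-ip route for 192.168.2.11 takes precedence over the default route.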

Policy routes

Show the policy routing table of ovn-cluster in its default state:

30000 ip4.dst == 1.2.3.215 reroute 100.64.0.2

29000 ip4.src == $ovn.default.master_ip4 reroute 100.64.0.2

29000 ip6.src == $subnet.master_ip6 reroute dd:100:64::2

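The flows are as follows. In order, they are: the route to the host's internal_ip, the route for IPv4 ports of the default subnet on the master node, and the route for IPv6 ports of the subnet subnet on the master node. Each of them sets the next hop to ovn0.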

table=13(lr_in_policy ), priority=30000, match=(ip4.dst == 1.2.3.215), action=(reg0 = 100.64.0.2; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip4.src == $ovn.default.master_ip4), action=(reg0 = 100.64.0.2; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

table=13(lr_in_policy ), priority=29000, match=(ip6.src == $subnet.master_ip6), action=(xxreg0 = dd:100:64::2; xxreg1 = dd:100:64::1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)

A policy route to localdns can be added as follows.

$ kubectl ko nbctl lr-policy-add ovn-cluster 29100 "ip4.src == \$ovn.default.master_ip4 && ip4.dst == 169.254.25.10" reroute 100.64.0.2

In the flow table:

table=13(lr_in_policy ), priority=29100, match=(ip4.src == $ovn.default.master_ip4 && ip4.dst == 169.254.25.10), action=(reg0 = 100.64.0.2; reg1 = 100.64.0.1; eth.src = 00:00:00:e9:15:ff; outport = "ovn-cluster-join"; flags.loopback = 1; reg8[0..15] = 0; next;)
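How the lr_in_policy entries interact can be sketched as a priority-ordered lookup: the first (highest-priority) matching policy decides. The Python sketch below uses a simplified policy list based on the flows for the subnet shown earlier. The address-set contents are assumptions: 192.168.2.11 (pod9) is taken to be on the worker node, as the trace suggests, and 192.168.2.20 is a made-up pod on the master node.

```python
import ipaddress

# (priority, field, cidr, action). "allow" keeps the routing-table decision.
POLICIES = [
    (31000, "dst", "192.168.2.0/24", "allow"),
    (29000, "src", "192.168.2.11/32", "reroute 100.64.0.3"),  # $subnet.worker_ip4
    (29000, "src", "192.168.2.20/32", "reroute 100.64.0.2"),  # $subnet.master_ip4 (hypothetical)
]


def lookup_policy(src: str, dst: str):
    """Return the action of the highest-priority matching policy, or None."""
    packet = {"src": ipaddress.ip_address(src), "dst": ipaddress.ip_address(dst)}
    for _priority, field, cidr, action in sorted(POLICIES, key=lambda p: p[0], reverse=True):
        if packet[field] in ipaddress.ip_network(cidr):
            return action
    return None  # no policy matched; the routing-table decision stands


print(lookup_policy("192.168.2.11", "114.114.114.114"))  # pod9 to the outside
print(lookup_policy("10.16.4.138", "192.168.2.11"))      # pod8 to pod9
```

The 31000 entry is what keeps traffic between subnets on the logical router: it matches before any 29000 reroute can send the packet to a node's ovn0.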

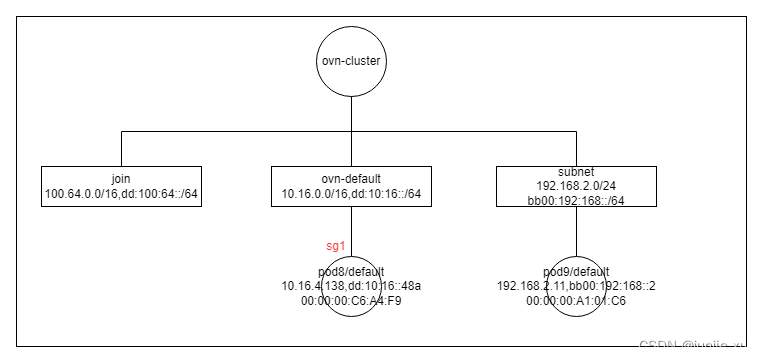

Security groups

sg1 is the name of the security group (a Kube-OVN CRD).

egress: allow all

ingress: allow port 22, drop icmp

apiVersion: kubeovn.io/v1
kind: SecurityGroup
metadata:
  name: sg1
spec:
  egressRules:
    - ipVersion: ipv4
      policy: allow
      priority: 1
      protocol: all
      remoteAddress: 0.0.0.0/0
      remoteType: address
  ingressRules:
    - ipVersion: ipv4
      policy: drop
      priority: 10
      protocol: icmp
      remoteAddress: 0.0.0.0/0
      remoteType: address
    - ipVersion: ipv4
      policy: allow
      priority: 10
      protocol: tcp
      portRangeMin: 22
      portRangeMax: 22
      remoteAddress: 0.0.0.0/0
      remoteType: address

Flow changes:

The ingress pipeline of the logical_switch handles traffic leaving the lsp, i.e. the pod's egress.

Datapath: "ovn-default" (b6a669bb-984d-4c04-9622-64dba046cd55) Pipeline: ingress

Allow IPv4 DHCP.

table=1 (ls_in_port_sec_ip ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip4.src == 0.0.0.0 && ip4.dst == 255.255.255.255 && udp.src == 68 && udp.dst == 67), action=(next;)

Allow traffic from lsp pod8.default with IP 10.16.4.138 and MAC 00:00:00:c6:a4:f9.

table=1 (ls_in_port_sec_ip ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip4.src == {10.16.4.138}), action=(next;)

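Allow ICMPv6 types 131, 135 and 143 (multicast listener reports and neighbor solicitations) sent from the unspecified address :: to ff02::/16, as happens during duplicate address detection.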

table=1 (ls_in_port_sec_ip ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip6.src == :: && ip6.dst == ff02::/16 && icmp6.type == {131, 135, 143}), action=(next;)

Allow traffic with IPv6 source dd:10:16::48a or fe80::200:ff:fec6:a4f9 and MAC 00:00:00:c6:a4:f9.

table=1 (ls_in_port_sec_ip ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip6.src == {fe80::200:ff:fec6:a4f9, dd:10:16::48a}), action=(next;)

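Drop all other IP traffic. The second and fourth flows above have already passed matching packets on with next, so a packet with the correct IP and MAC never reaches this flow.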

table=1 (ls_in_port_sec_ip ), priority=80 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip), action=(drop;)

Port security for ARP and ND, including GARP:

table=2 (ls_in_port_sec_nd ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && arp.sha == 00:00:00:c6:a4:f9 && arp.spa == {10.16.4.138}), action=(next;)

table=2 (ls_in_port_sec_nd ), priority=90 , match=(inport == "pod8.default" && eth.src == 00:00:00:c6:a4:f9 && ip6 && nd && ((nd.sll == 00:00:00:00:00:00 || nd.sll == 00:00:00:c6:a4:f9) || ((nd.tll == 00:00:00:00:00:00 || nd.tll == 00:00:00:c6:a4:f9) && (nd.target == fe80::200:ff:fec6:a4f9 || nd.target == dd:10:16::48a)))), action=(next;)

table=2 (ls_in_port_sec_nd ), priority=80 , match=(inport == "pod8.default" && (arp || nd)), action=(drop;)

The source port belongs to the port_group of security group sg1 (once a pod is bound to an sg, its lsp is added to the port_group).

table=9 (ls_in_acl ), priority=3299 , match=(reg0[7] == 1 && (inport==@ovn.sg.sg1 && ip4 && ip4.dst==0.0.0.0/0)), action=(reg0[1] = 1; next;)

table=9 (ls_in_acl ), priority=3299 , match=(reg0[8] == 1 && (inport==@ovn.sg.sg1 && ip4 && ip4.dst==0.0.0.0/0)), action=(next;)

Datapath: "ovn-default" (b6a669bb-984d-4c04-9622-64dba046cd55) Pipeline: egress

Port 22:

table=4 (ls_out_acl ), priority=3290 , match=(reg0[8] == 1 && (outport==@ovn.sg.sg1 && ip4 && ip4.src==0.0.0.0/0 && 22<=tcp.dst<=22)), action=(next;)

icmp drop

table=4 (ls_out_acl ), priority=3290 , match=(reg0[9] == 1 && (outport==@ovn.sg.sg1 && ip4 && ip4.src==0.0.0.0/0 && icmp4)), action=(/* drop */)

table=4 (ls_out_acl ), priority=3003 , match=(reg0[9] == 1 && (outport==@ovn.sg.kubeovn_deny_all && ip)), action=(/* drop */)

Trace

$ kubectl ko trace default/pod9 10.16.4.138 icmp

......

4. ls_out_acl (northd.c:6472): reg0[9] == 1 && (outport==@ovn.sg.sg1 && ip4 && ip4.src==0.0.0.0/0 && icmp4), priority 3290, uuid 8d3eb6cf

drop;
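The ACL priorities in the listings match 3300 minus the rule priority from the CRD (egress priority 1 gives 3299, ingress priority 10 gives 3290); this relation is read off the values above rather than taken from the Kube-OVN source. A minimal Python sketch of how the ls_out_acl entries decide the fate of traffic towards a port in sg1 follows. It ignores the reg0 hint bits and assumes the port is also a member of kubeovn_deny_all; all names are illustrative.

```python
def acl_priority(rule_priority: int) -> int:
    """ACL priority as observed in the flows (assumed relation: 3300 - rule priority)."""
    return 3300 - rule_priority


# Ingress rules of sg1 as (ACL priority, predicate, verdict).
INGRESS_ACLS = [
    (acl_priority(10), lambda p: p["proto"] == "tcp" and p.get("dport") == 22, "allow"),
    (acl_priority(10), lambda p: p["proto"] == "icmp", "drop"),
    (3003, lambda p: True, "drop"),  # kubeovn_deny_all catches everything else
]


def verdict(packet: dict) -> str:
    """Return the verdict of the highest-priority ACL that matches the packet."""
    for _priority, matches, action in sorted(INGRESS_ACLS, key=lambda a: a[0], reverse=True):
        if matches(packet):
            return action
    return "allow"  # unreachable here because of the catch-all entry


print(verdict({"proto": "icmp"}))              # the ping from pod9 in the trace
print(verdict({"proto": "tcp", "dport": 22}))  # SSH
print(verdict({"proto": "tcp", "dport": 80}))  # anything else
```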

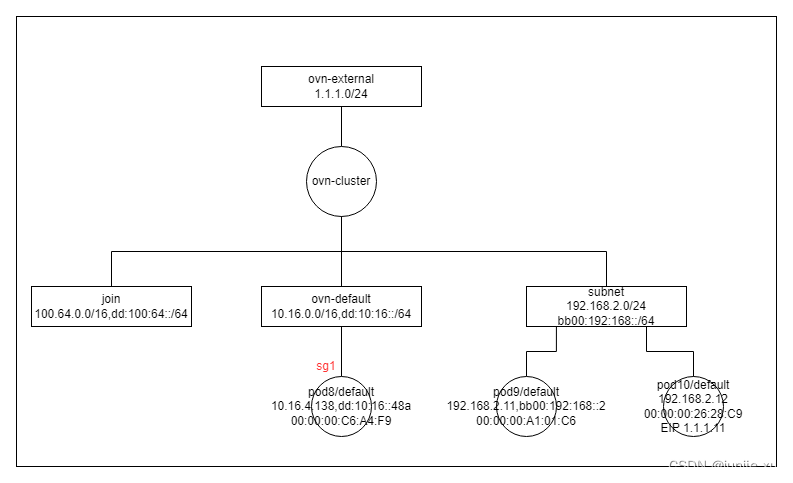

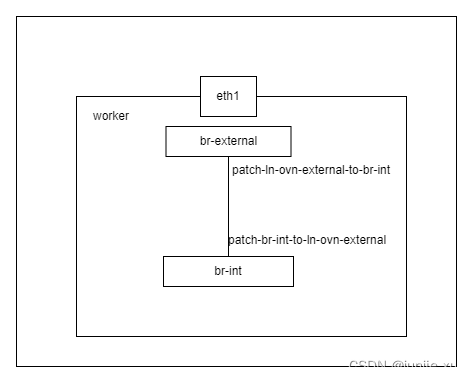

DNAT

Create ovn-external-gw-config.

After the configuration below is applied, pod10 is given the EIP 1.1.1.11/24.

apiVersion: v1
kind: ConfigMap
metadata:
  name: ovn-external-gw-config
  namespace: kube-system
data:
  enable-external-gw: "true"
  external-gw-nodes: "worker"
  external-gw-nic: "eth1"
  nic-ip: "1.1.1.254/24"
  nic-mac: "00:00:3e:e7:00:eb"
  external-gw-addr: "1.1.1.1/24"

$ kubectl ko nbctl show

switch af2cc54a-ff49-4d5a-8037-ea07369de09d (ovn-external)
    port ln-ovn-external
        type: localnet
        addresses: ["unknown"]
    port ovn-external-ovn-cluster
        type: router
        router-port: ovn-cluster-ovn-external
router 5471f042-ce6f-470f-8f0d-55e75972206a (ovn-cluster)
    port ovn-cluster-subnet
        mac: "00:00:00:C8:1C:8A"
        networks: ["192.168.2.1/24", "bb00:192:168::1/64"]
    port ovn-cluster-ovn-default
        mac: "00:00:00:63:B3:F9"
        networks: ["10.16.0.1/16", "dd:10:16::1/64"]
    port ovn-cluster-ovn-external
        mac: "00:00:3e:e7:00:eb"
        networks: ["1.1.1.254/24"]
        gateway chassis: [fcbaedd2-3ab3-41c5-bc75-e04436ed9eac]
    port ovn-cluster-join
        mac: "00:00:00:E9:15:FF"
        networks: ["100.64.0.1/16", "dd:100:64::1/64"]
    nat b07e765d-7568-4389-ba75-3ebd8e8e421d
        external ip: "1.1.1.11"
        logical ip: "192.168.2.12"
        type: "dnat_and_snat"

$ kubectl ko nbctl lr-route-list ovn-cluster

IPv4 Routes

Route Table <main>:

192.168.2.13 1.1.1.1 src-ip

Key flow changes:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

ARP requests for the EIP 1.1.1.11 are answered directly.

table=3 (lr_in_ip_input ), priority=90 , match=(arp.op == 1 && arp.tpa == 1.1.1.11), action=(eth.dst = eth.src; eth.src = xreg0[0..47]; arp.op = 2; /* ARP reply */ arp.tha = arp.sha; arp.sha = xreg0[0..47]; arp.tpa <-> arp.spa; outport = inport; flags.loopback = 1; output;)

table=4 (lr_in_unsnat ), priority=90 , match=(ip && ip4.dst == 1.1.1.11), action=(ct_snat;)

Traffic to 1.1.1.11 is DNATed (ct_dnat) to pod10's IP.

table=6 (lr_in_dnat ), priority=100 , match=(ip && ip4.dst == 1.1.1.11), action=(flags.loopback = 1; ct_dnat(192.168.2.13);)

Route from the pod to the outside: the next hop is 1.1.1.1 and the source MAC is that of 1.1.1.254.

table=11(lr_in_ip_routing ), priority=96 , match=(ip4.src == 192.168.2.13/32), action=(ip.ttl--; reg8[0..15] = 0; reg0 = 1.1.1.1; reg1 = 1.1.1.254; eth.src = 00:00:3e:e7:00:eb; outport = "ovn-cluster-ovn-external"; flags.loopback = 1; next;)

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: egress

Traffic from the pod to the outside is SNATed to 1.1.1.11.

table=3 (lr_out_snat ), priority=33 , match=(ip && ip4.src == 192.168.2.13 && (!ct.trk || !ct.rpl)), action=(ct_snat(1.1.1.11);)
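The two directions of the dnat_and_snat rule can be summarized in a small Python sketch: inbound packets have their destination rewritten from the EIP to the pod IP, and outbound packets have their source rewritten from the pod IP to the EIP. The rule table and helper names are illustrative; the addresses are the ones that appear in the flows above.

```python
# One dnat_and_snat rule, using the addresses from the lr_in_dnat / lr_out_snat flows.
NAT_RULES = [
    {"type": "dnat_and_snat", "external_ip": "1.1.1.11", "logical_ip": "192.168.2.13"},
]


def translate_inbound(dst_ip: str) -> str:
    """lr_in_dnat: a destination equal to the EIP is rewritten to the logical IP."""
    for rule in NAT_RULES:
        if rule["external_ip"] == dst_ip:
            return rule["logical_ip"]
    return dst_ip


def translate_outbound(src_ip: str) -> str:
    """lr_out_snat: a source equal to the logical IP is rewritten to the EIP."""
    for rule in NAT_RULES:
        if rule["logical_ip"] == src_ip:
            return rule["external_ip"]
    return src_ip


print(translate_inbound("1.1.1.11"))       # 192.168.2.13
print(translate_outbound("192.168.2.13"))  # 1.1.1.11
```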

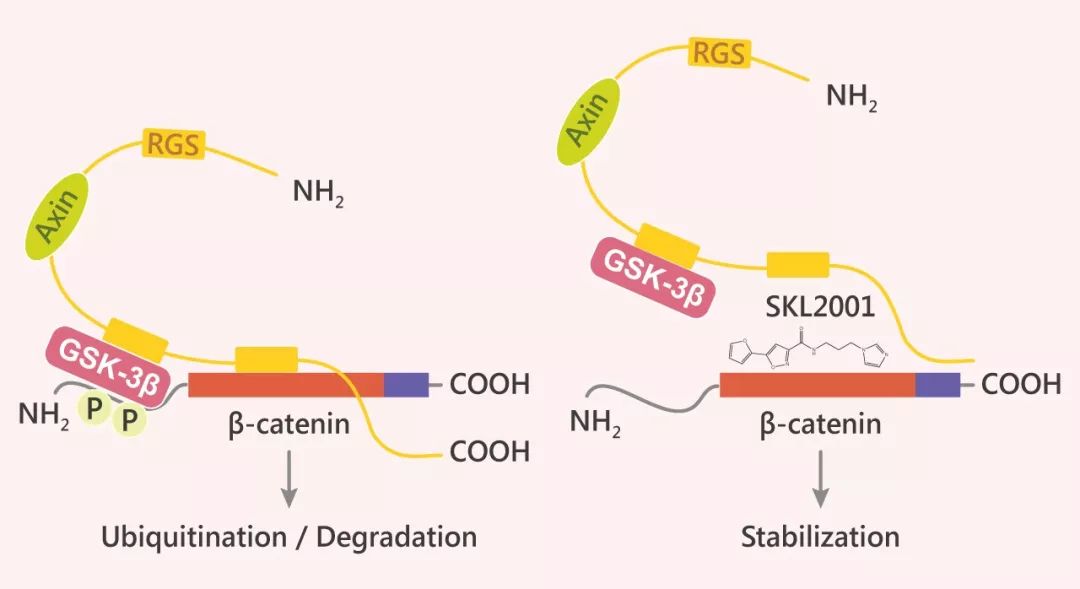

LB

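LBs come in two kinds: LogicalRouter LB and LogicalSwitch LB. In OVN an LB is a resource of its own, which is then associated with an LR or an LS.

Currently, when enable-lb is set, Kube-OVN associates the LB with both the LR and the LS. In the future this will become optionally configurable per subnet and VPC.

Example: VIP 10.96.99.99:8080 with backends 192.168.2.16:80 and 192.168.2.18:80.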

$ kubectl ko nbctl lb-add lb0 10.96.99.99:8080 192.168.2.16:80,192.168.2.18:80 tcp

$ kubectl ko nbctl lr-lb-add ovn-cluster lb0

Flow changes:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

For traffic to the VIP, reg0 stores the VIP, reg9[16..31] stores the destination port, and the packet is handed to ct_dnat.

table=5 (lr_in_defrag ), priority=110 , match=(ip && ip4.dst == 10.96.99.99 && tcp), action=(reg0 = 10.96.99.99; reg9[16..31] = tcp.dst; ct_dnat;)

Established connections are passed on; new connections are load-balanced across the backends.

table=6 (lr_in_dnat ), priority=120 , match=(ct.est && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080 && ct_mark.natted == 1), action=(next;)

table=6 (lr_in_dnat ), priority=120 , match=(ct.new && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080), action=(ct_lb_mark(backends=192.168.2.16:80,192.168.2.18:80);)

FullNAT LB

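FullNAT LB means that both DNAT and SNAT are performed during load balancing.

lb_force_snat_ip=router_ip: use the IP of the corresponding router interface as the SNAT IP. In this model that is the subnet gateway, i.e. 192.168.2.1.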

$ kubectl ko nbctl set logical_router ovn-cluster options:lb_force_snat_ip=router_ip

$ kubectl ko nbctl --wait=hv set logical_router ovn-cluster options:chassis=fcbaedd2-3ab3-41c5-bc75-e04436ed9eac

Flow changes:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

Same as the ordinary LB, except that the action also sets flags.force_snat_for_lb = 1.

table=6 (lr_in_dnat ), priority=120 , match=(ct.est && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080 && ct_mark.natted == 1), action=(flags.force_snat_for_lb = 1; next;)

table=6 (lr_in_dnat ), priority=120 , match=(ct.new && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080), action=(flags.force_snat_for_lb = 1; ct_lb_mark(backends=192.168.2.16:80,192.168.2.18:80);)

On egress, the packet is SNATed to the gateway IP of the subnet it leaves through.

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: egress

table=3 (lr_out_snat ), priority=110 , match=(flags.force_snat_for_lb == 1 && ip4 && outport == "ovn-cluster-join"), action=(ct_snat(100.64.0.1);)

table=3 (lr_out_snat ), priority=110 , match=(flags.force_snat_for_lb == 1 && ip4 && outport == "ovn-cluster-ovn-default"), action=(ct_snat(10.16.0.1);)

table=3 (lr_out_snat ), priority=110 , match=(flags.force_snat_for_lb == 1 && ip4 && outport == "ovn-cluster-ovn-external"), action=(ct_snat(1.1.1.254);)

table=3 (lr_out_snat ), priority=110 , match=(flags.force_snat_for_lb == 1 && ip4 && outport == "ovn-cluster-subnet"), action=(ct_snat(192.168.2.1);)
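With lb_force_snat_ip=router_ip, the SNAT address in each lr_out_snat flow above is the IPv4 address of the router port named in outport. A small Python sketch of that lookup, using the port networks from the nbctl show output in the DNAT section (the helper `force_snat_ip` is hypothetical):

```python
import ipaddress

# Router port networks as listed by "kubectl ko nbctl show".
ROUTER_PORTS = {
    "ovn-cluster-join": ["100.64.0.1/16", "dd:100:64::1/64"],
    "ovn-cluster-ovn-default": ["10.16.0.1/16", "dd:10:16::1/64"],
    "ovn-cluster-ovn-external": ["1.1.1.254/24"],
    "ovn-cluster-subnet": ["192.168.2.1/24", "bb00:192:168::1/64"],
}


def force_snat_ip(outport: str, version: int = 4):
    """Return the router port address used for SNAT, or None if the port has none."""
    for network in ROUTER_PORTS[outport]:
        interface = ipaddress.ip_interface(network)
        if interface.version == version:
            return str(interface.ip)
    return None


for port in ROUTER_PORTS:
    print(port, force_snat_ip(port))
```

A backend in subnet therefore sees load-balanced connections as coming from 192.168.2.1, so its replies return through the router instead of going straight to the client.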

Service extensions

Src-ip LB

Src-ip is a capability that Kubernetes expects of a service: the endpoint is chosen according to the source IP.

$ kubectl ko nbctl set Load_Balancer lb0 selection_fields=ip_src

Flow changes:

Datapath: "ovn-cluster" (5ec1c73b-e8f2-4b11-8779-28e02a091b87) Pipeline: ingress

table=6 (lr_in_dnat ), priority=120 , match=(ct.new && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080), action=(ct_lb_mark(backends=192.168.2.16:80,192.168.2.18:80);)

=>

The way the backend is selected changes:

match=(ct.new && ip4 && reg0 == 10.96.99.99 && tcp && reg9[16..31] == 8080), action=(ct_lb_mark(backends=192.168.2.16:80,192.168.2.18:80; hash_fields="ip_src");)
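The effect of hash_fields="ip_src" can be illustrated with a short Python sketch: when only the source IP goes into the hash, every connection from the same client lands on the same backend, whereas a hash that also covers the source port may spread one client across backends. The SHA-256 hash here is a stand-in chosen for the example and is not the hash the datapath actually uses; `pick_backend` is a hypothetical helper.

```python
import hashlib

BACKENDS = ["192.168.2.16:80", "192.168.2.18:80"]


def pick_backend(src_ip: str, src_port: int, hash_fields=()) -> str:
    """Pick a backend by hashing the selected packet fields (illustrative only)."""
    if tuple(hash_fields) == ("ip_src",):
        key = src_ip                  # only the client address matters
    else:
        key = f"{src_ip}:{src_port}"  # stand-in for a per-connection hash
    digest = hashlib.sha256(key.encode()).digest()
    return BACKENDS[int.from_bytes(digest[:4], "big") % len(BACKENDS)]


# Same client, different source ports: always the same backend with ip_src.
first = pick_backend("10.16.4.138", 40000, hash_fields=("ip_src",))
second = pick_backend("10.16.4.138", 40001, hash_fields=("ip_src",))
print(first, second)
```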
