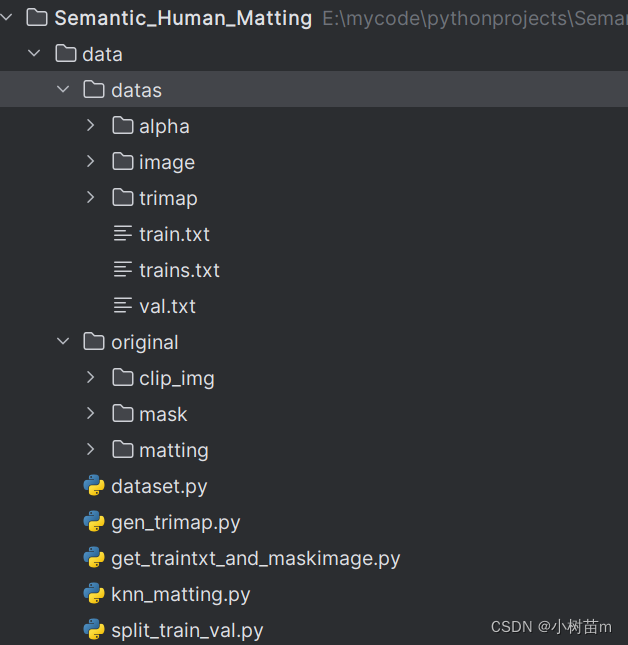

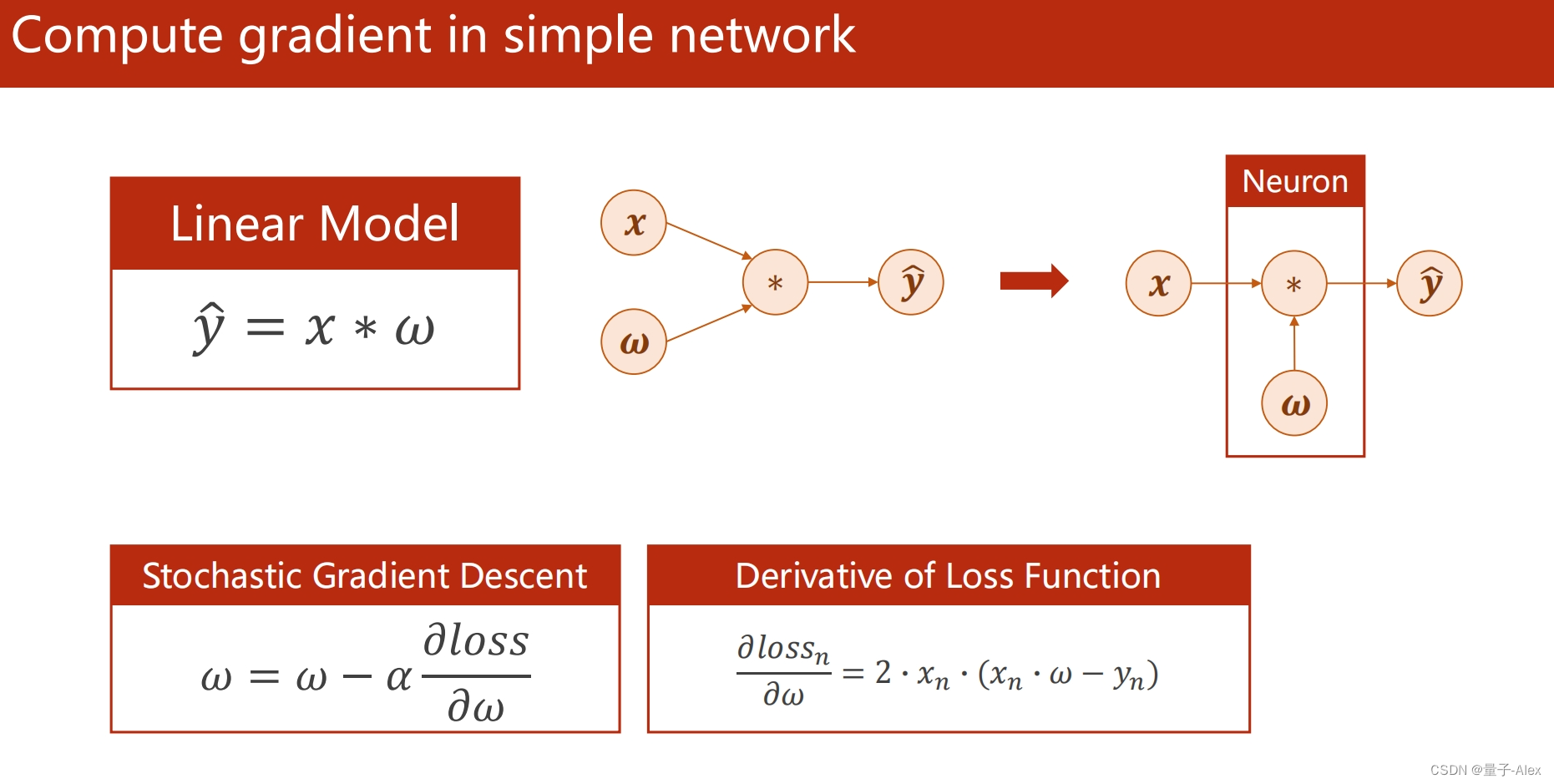

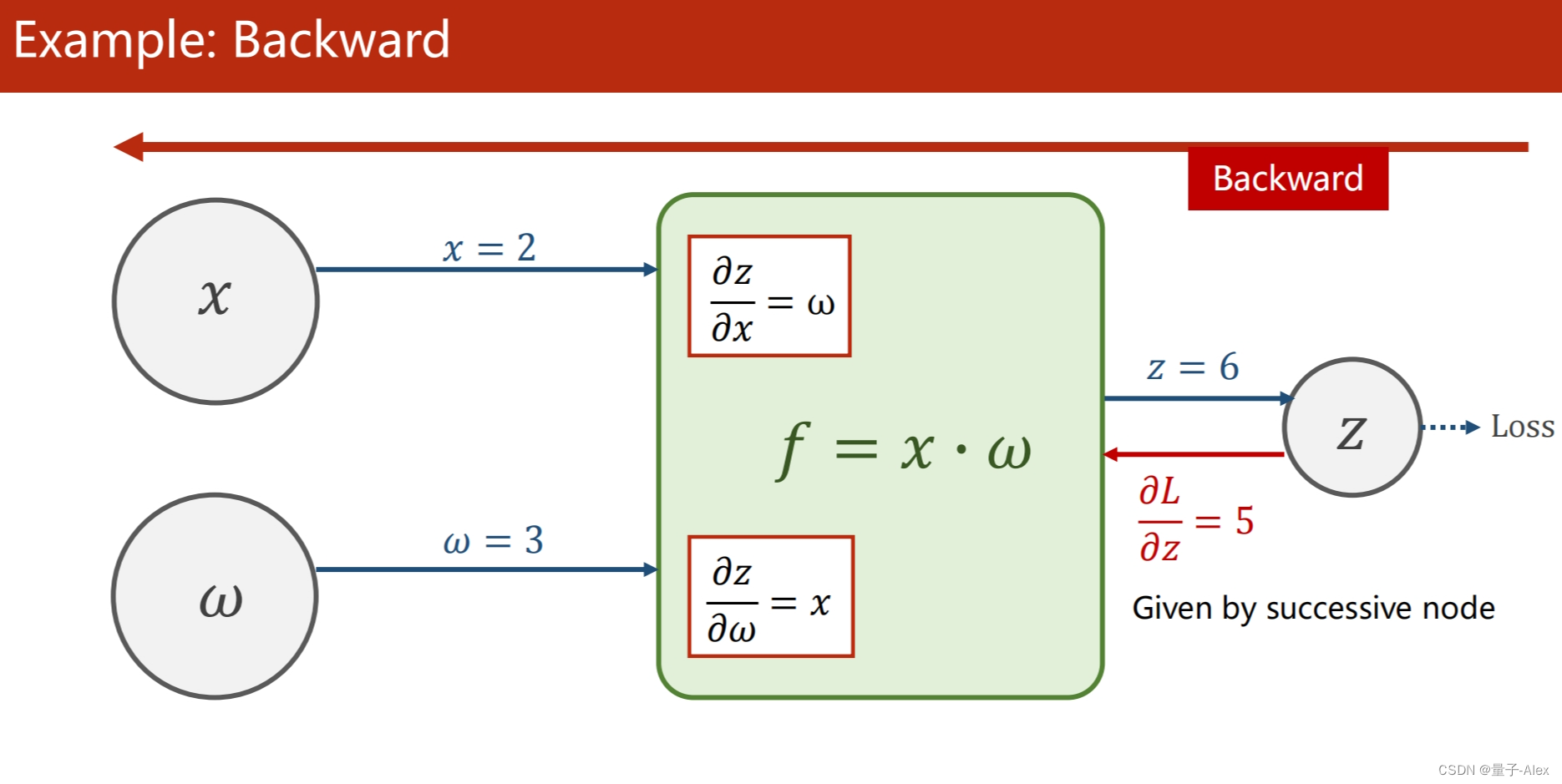

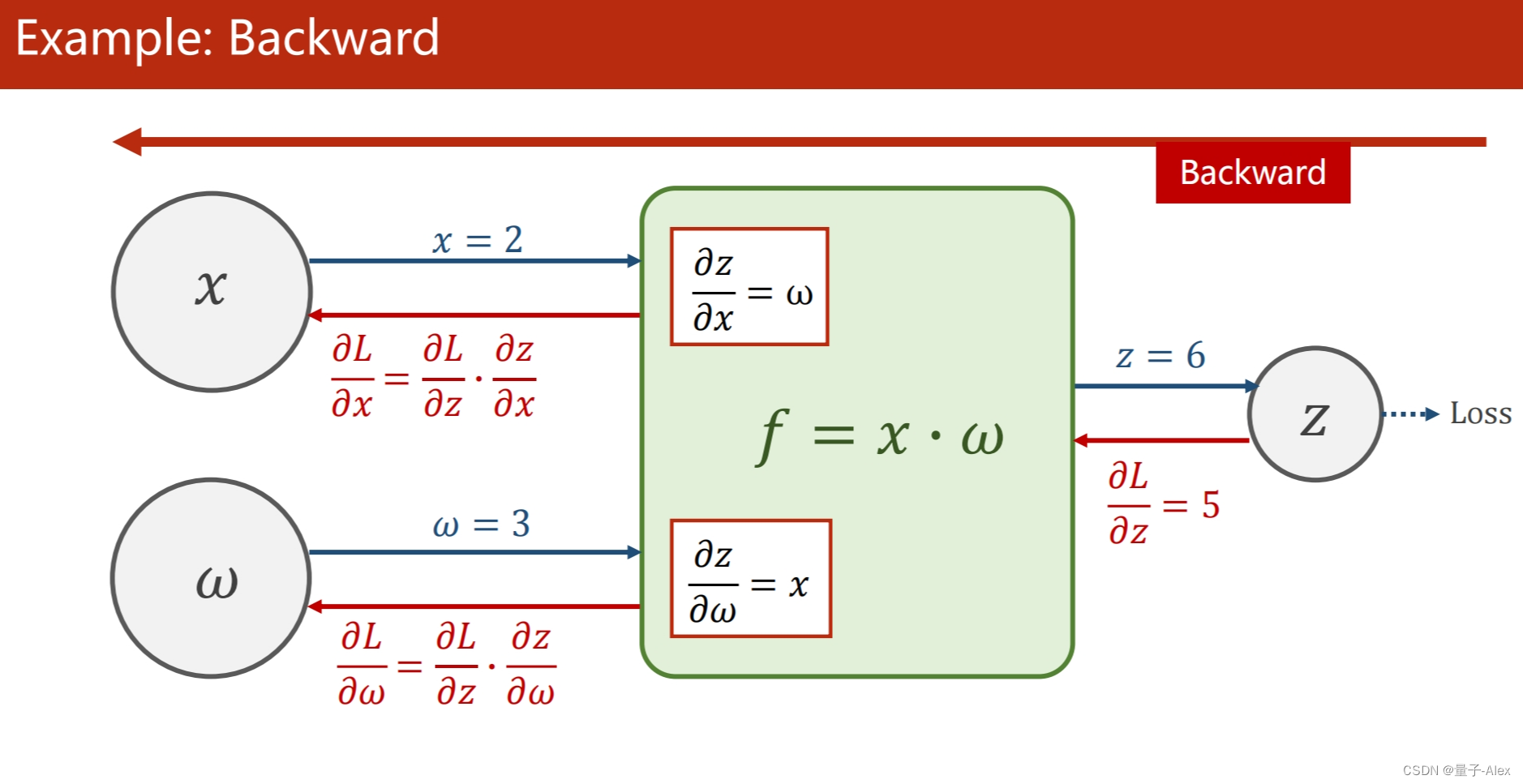

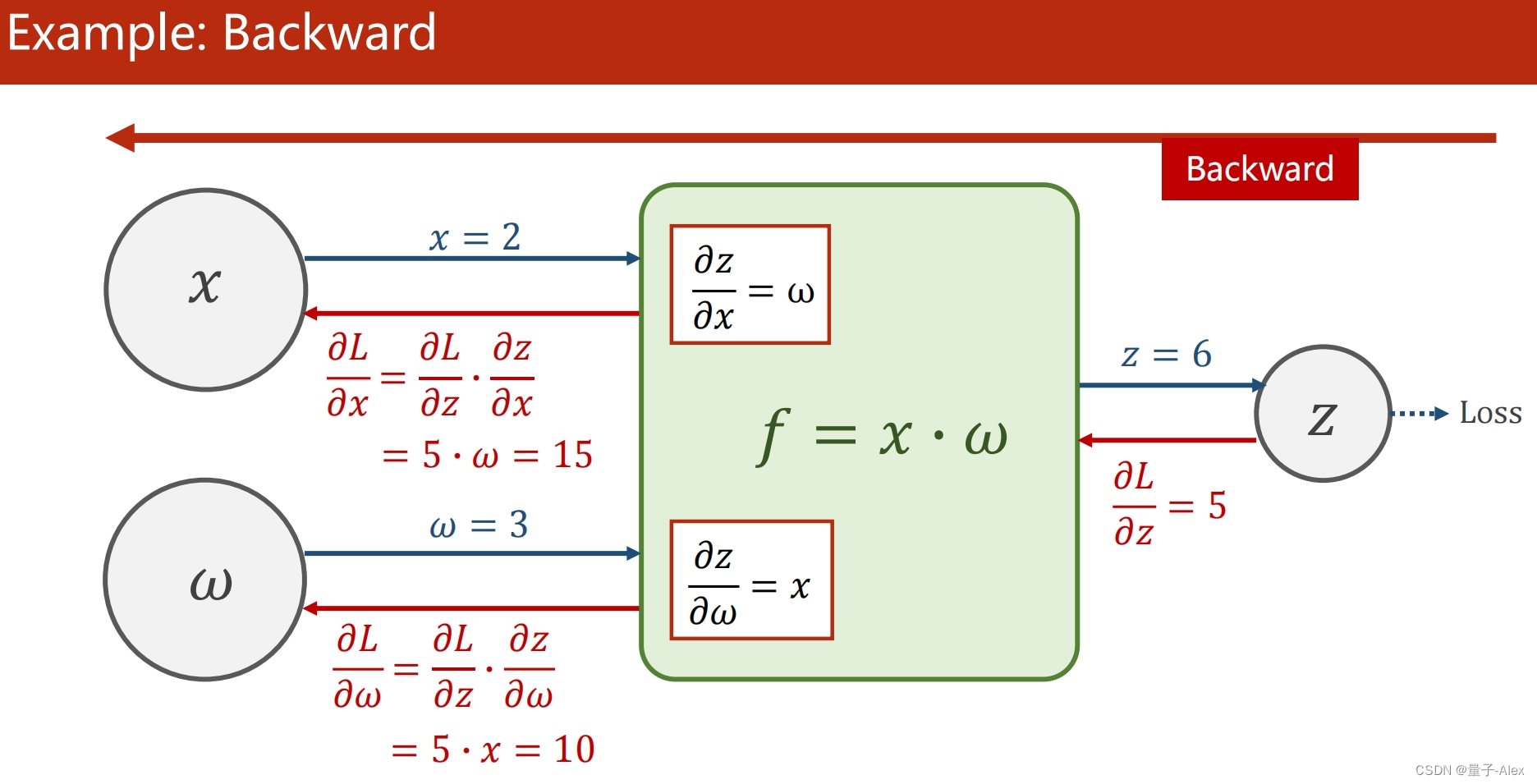

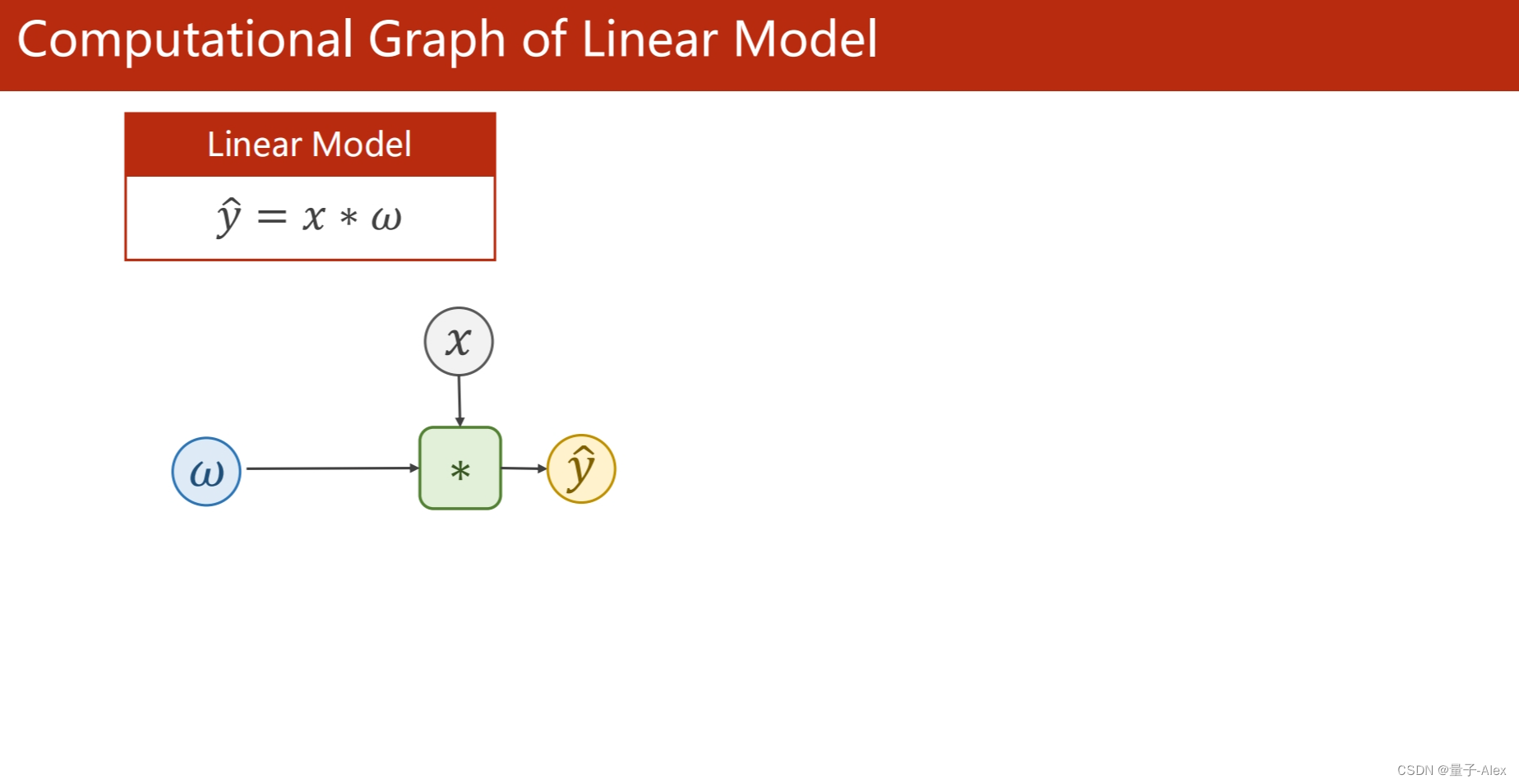

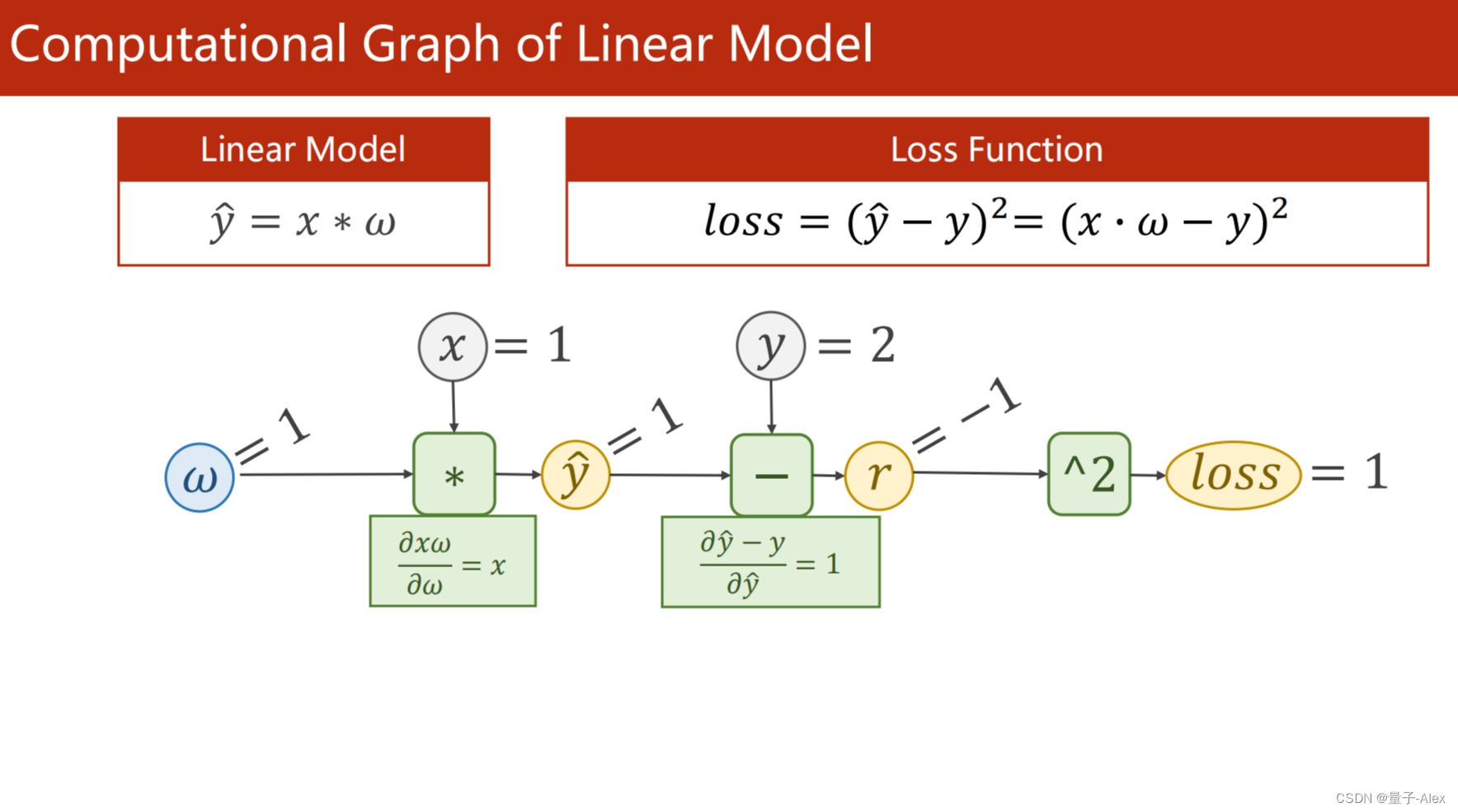

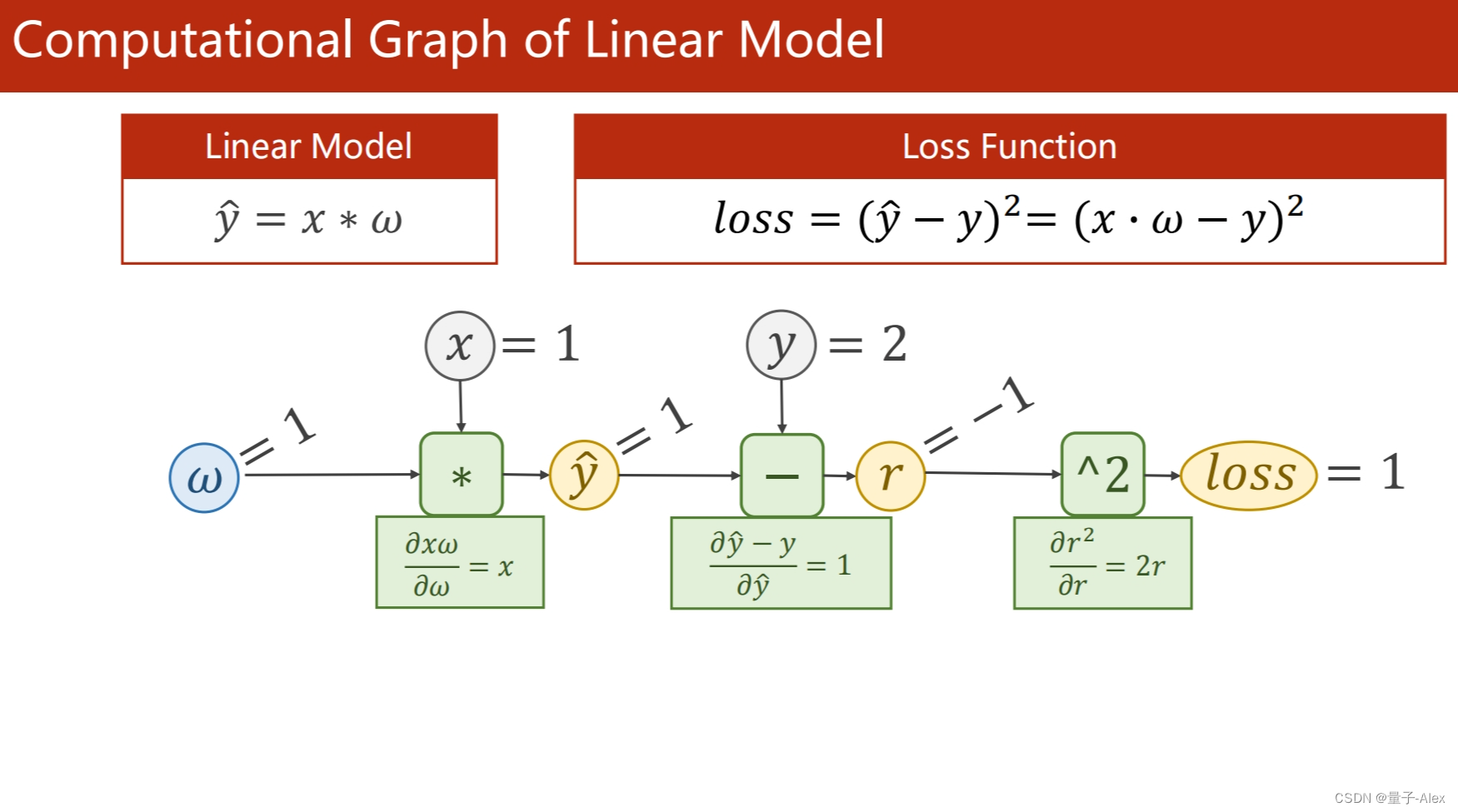

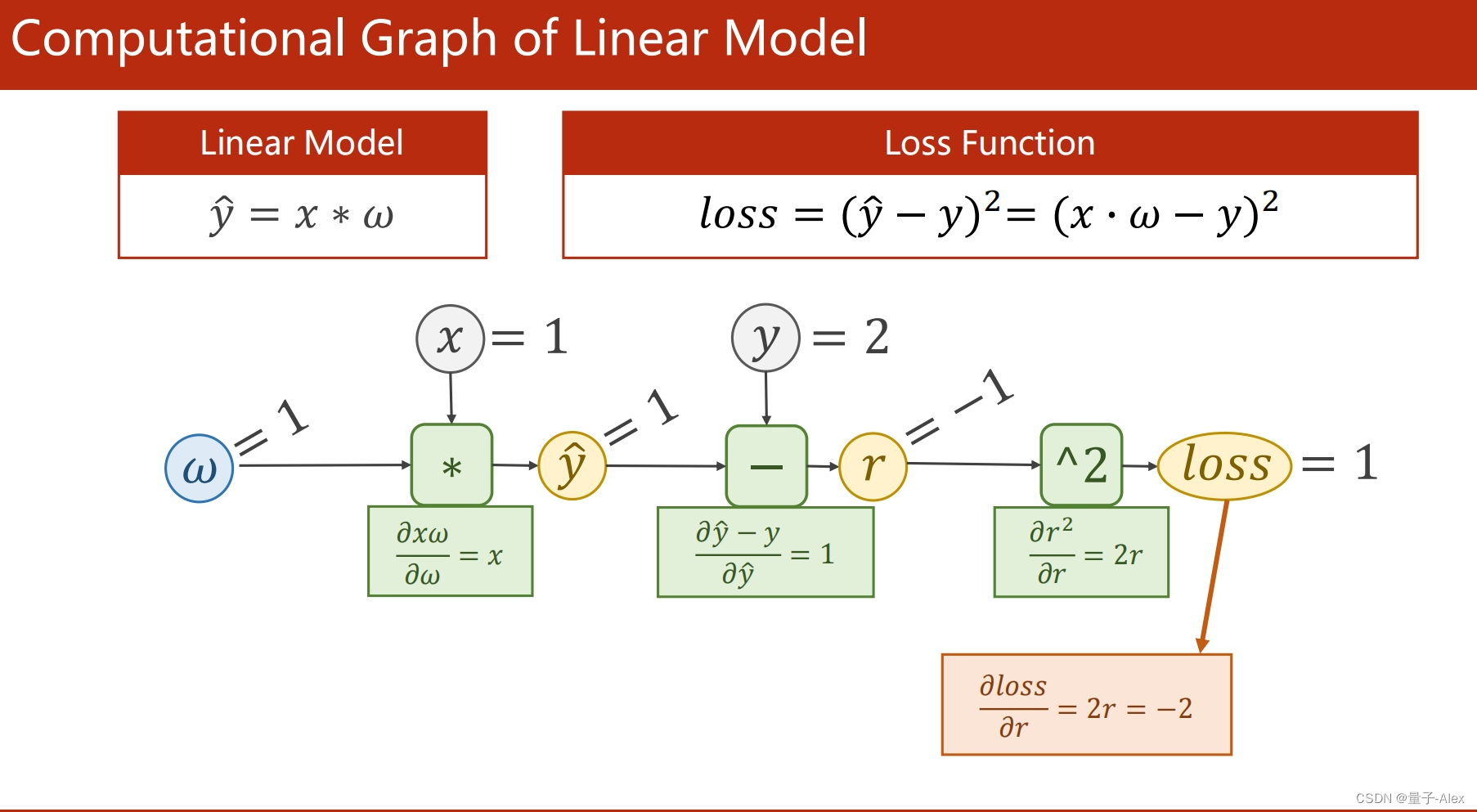

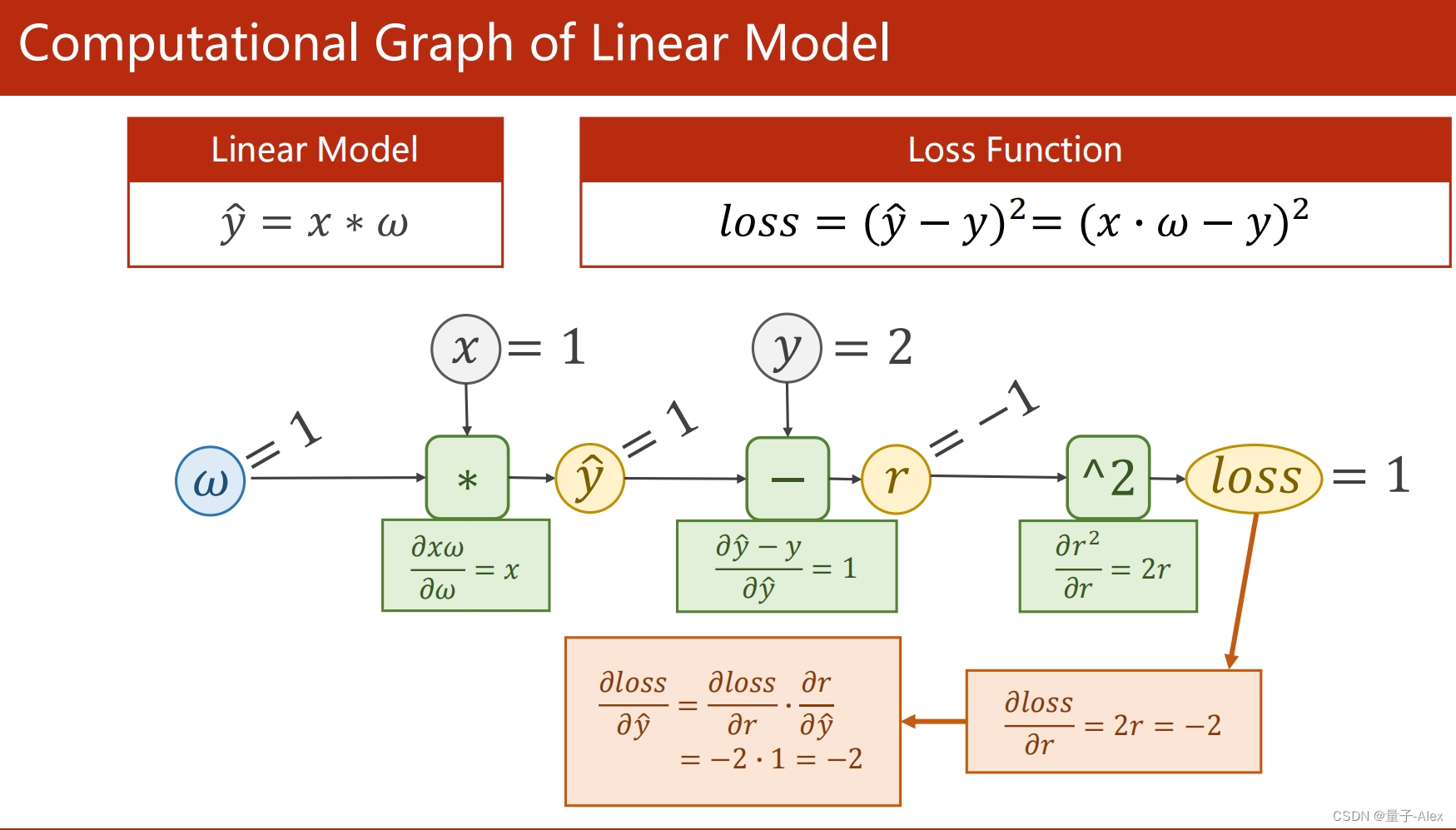

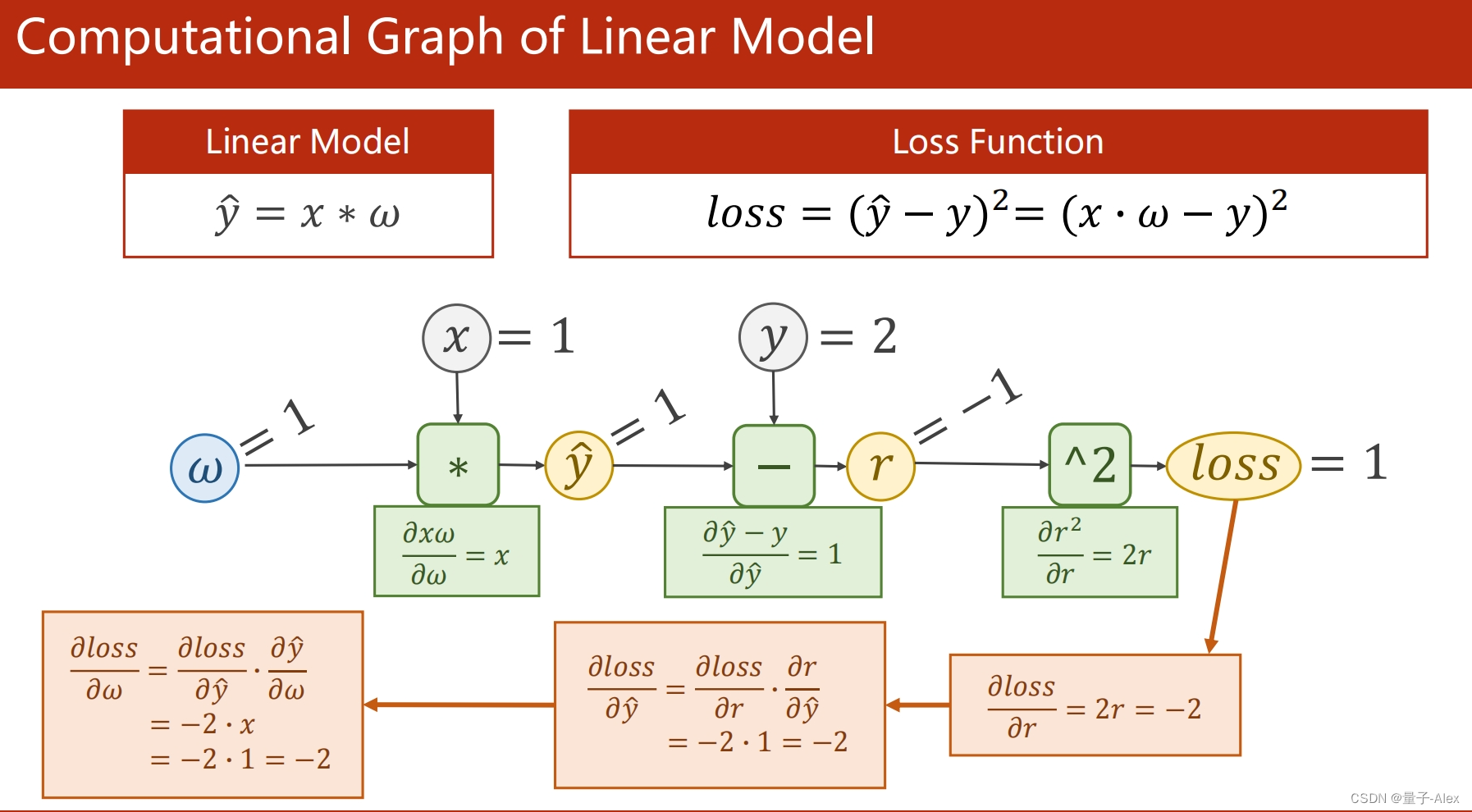

Lecture 04: Backpropagation

Course website: PyTorch Deep Learning Practice (PyTorch深度学习实践)

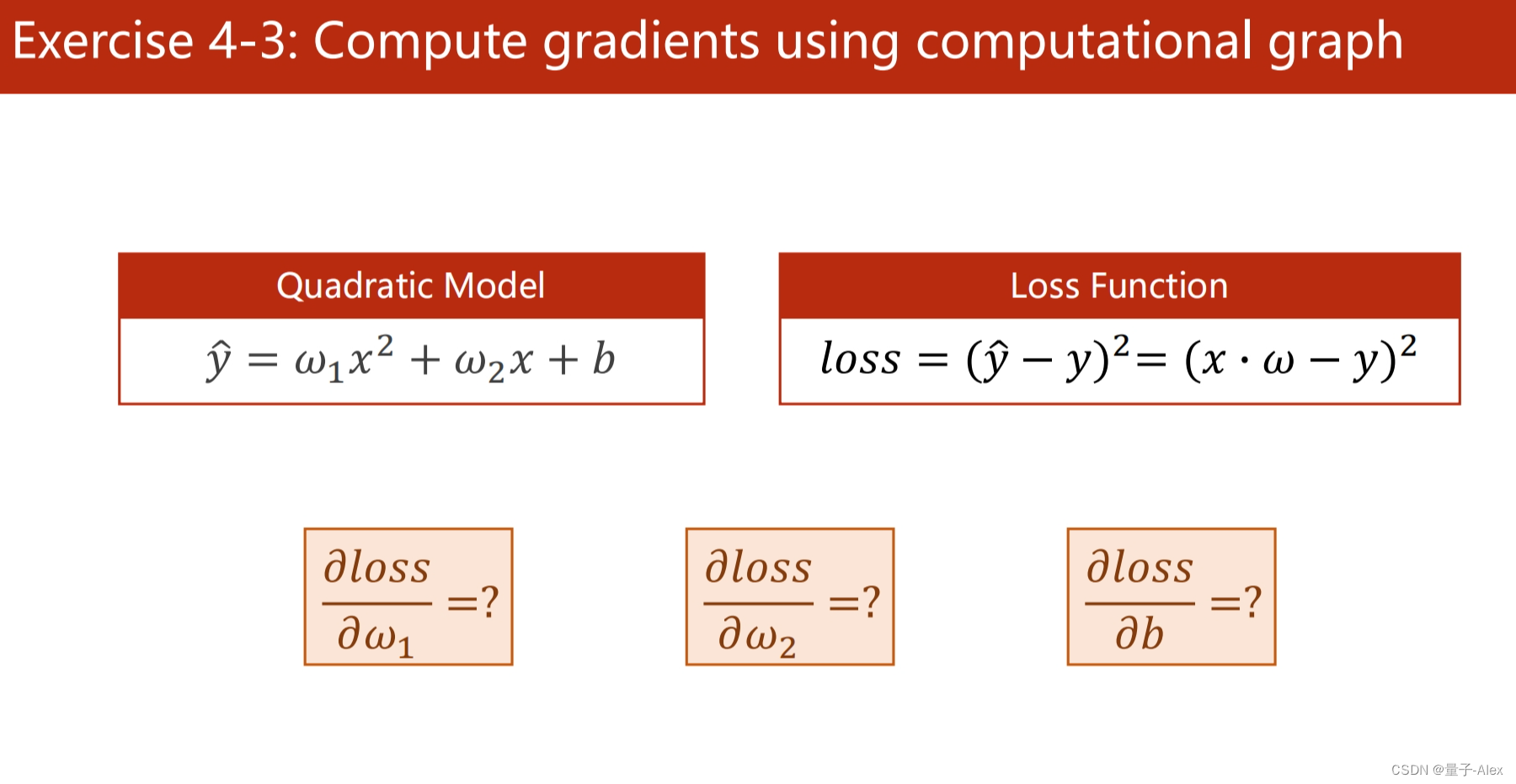

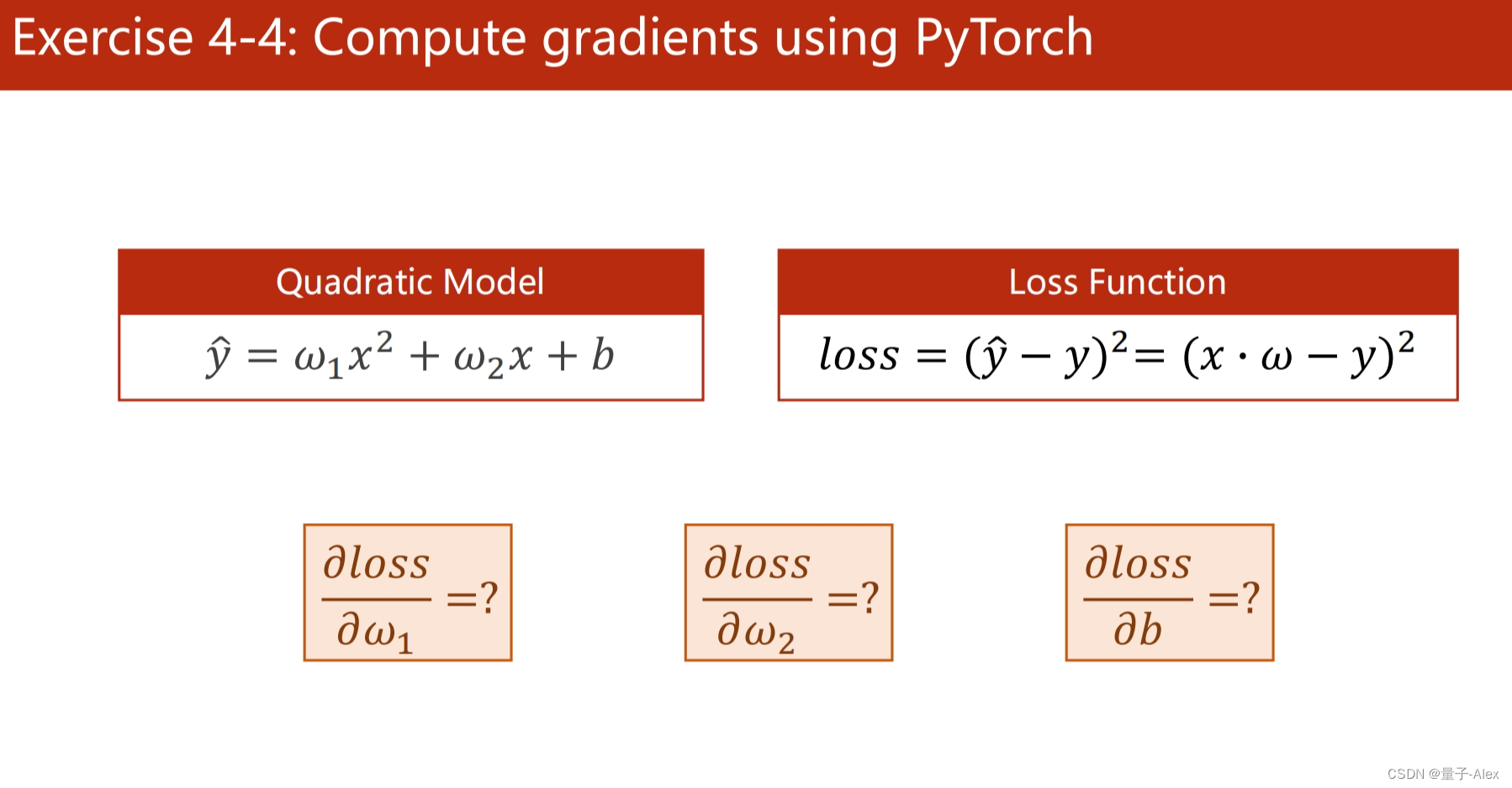

Selected slides from the course:

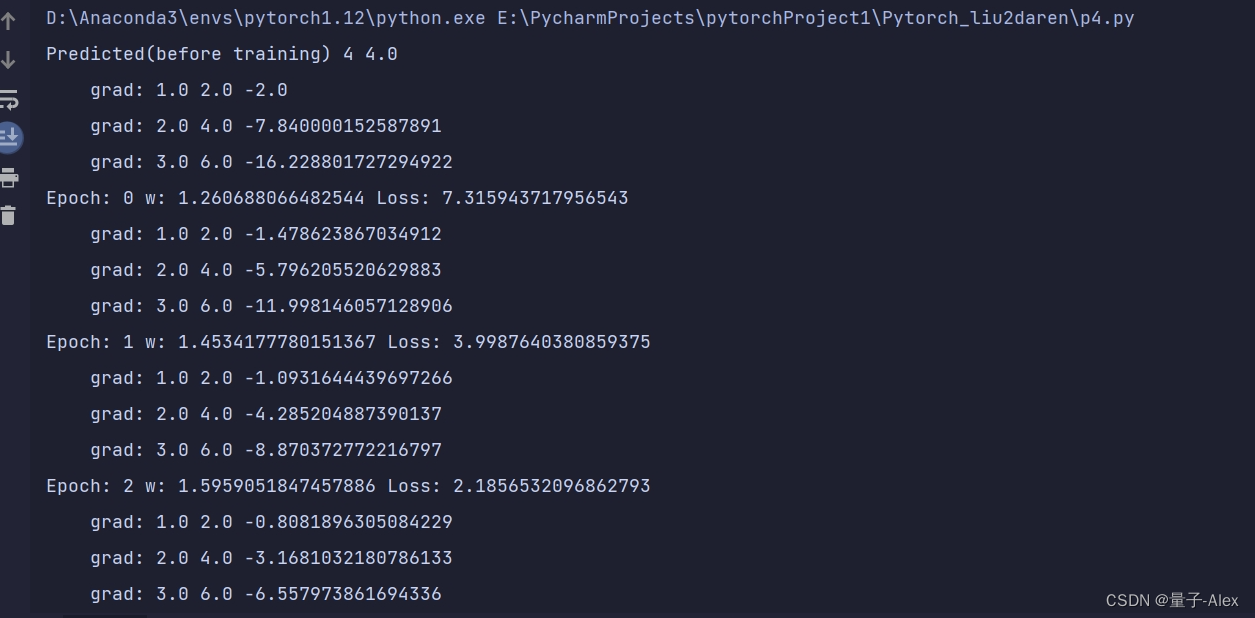

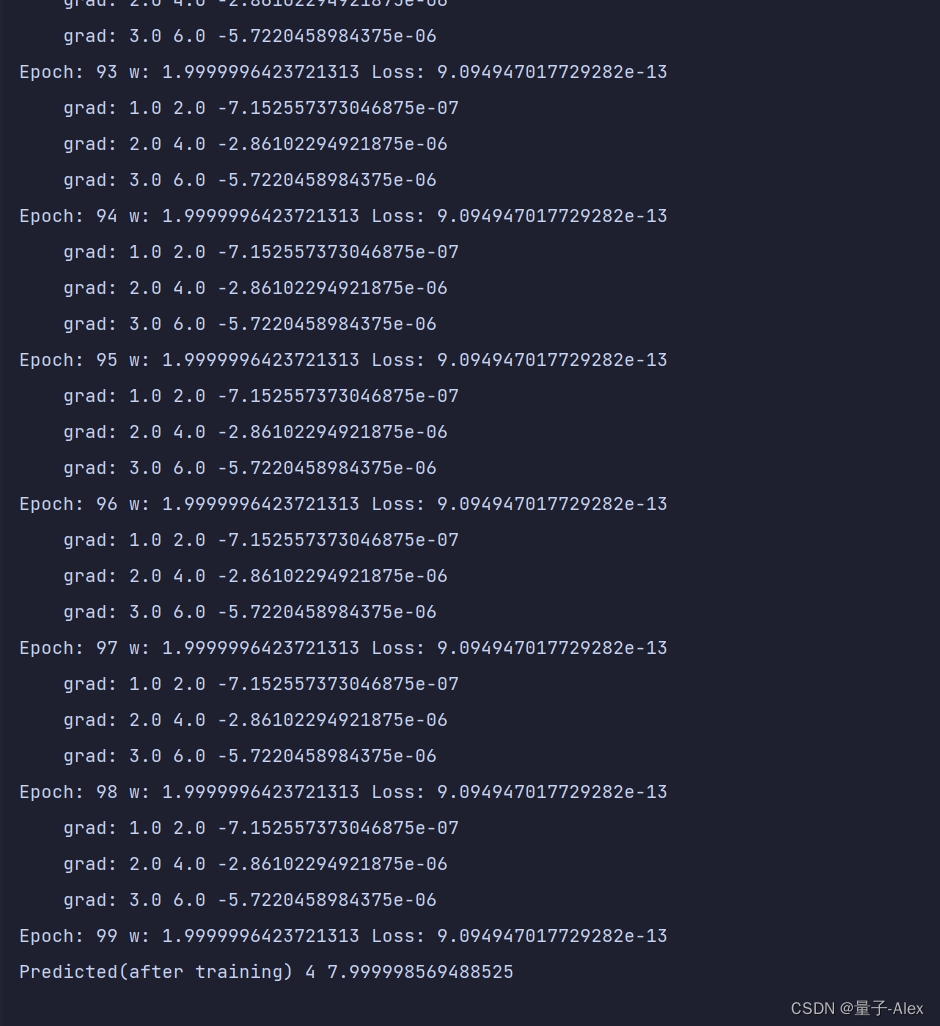

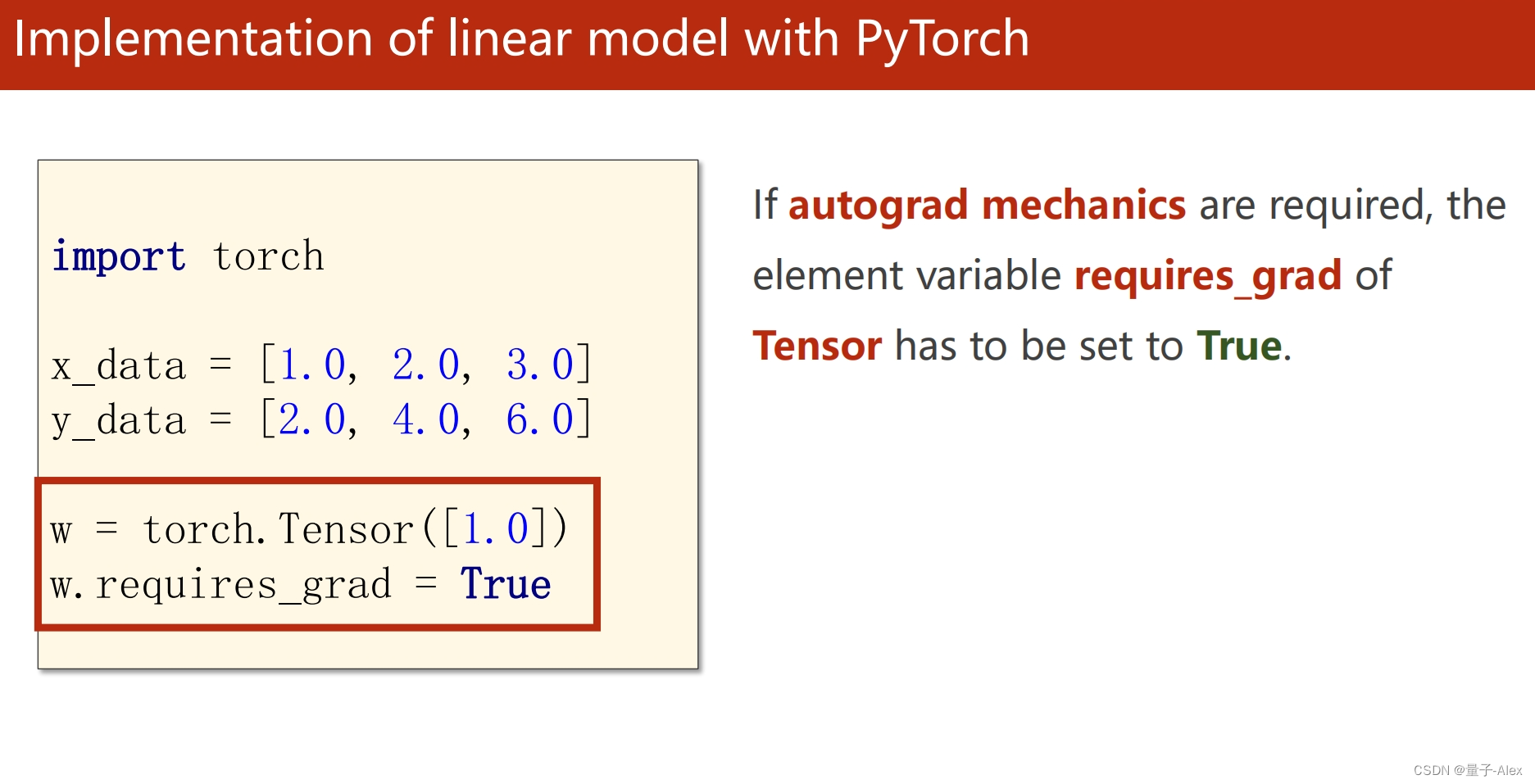

```python
import torch
```

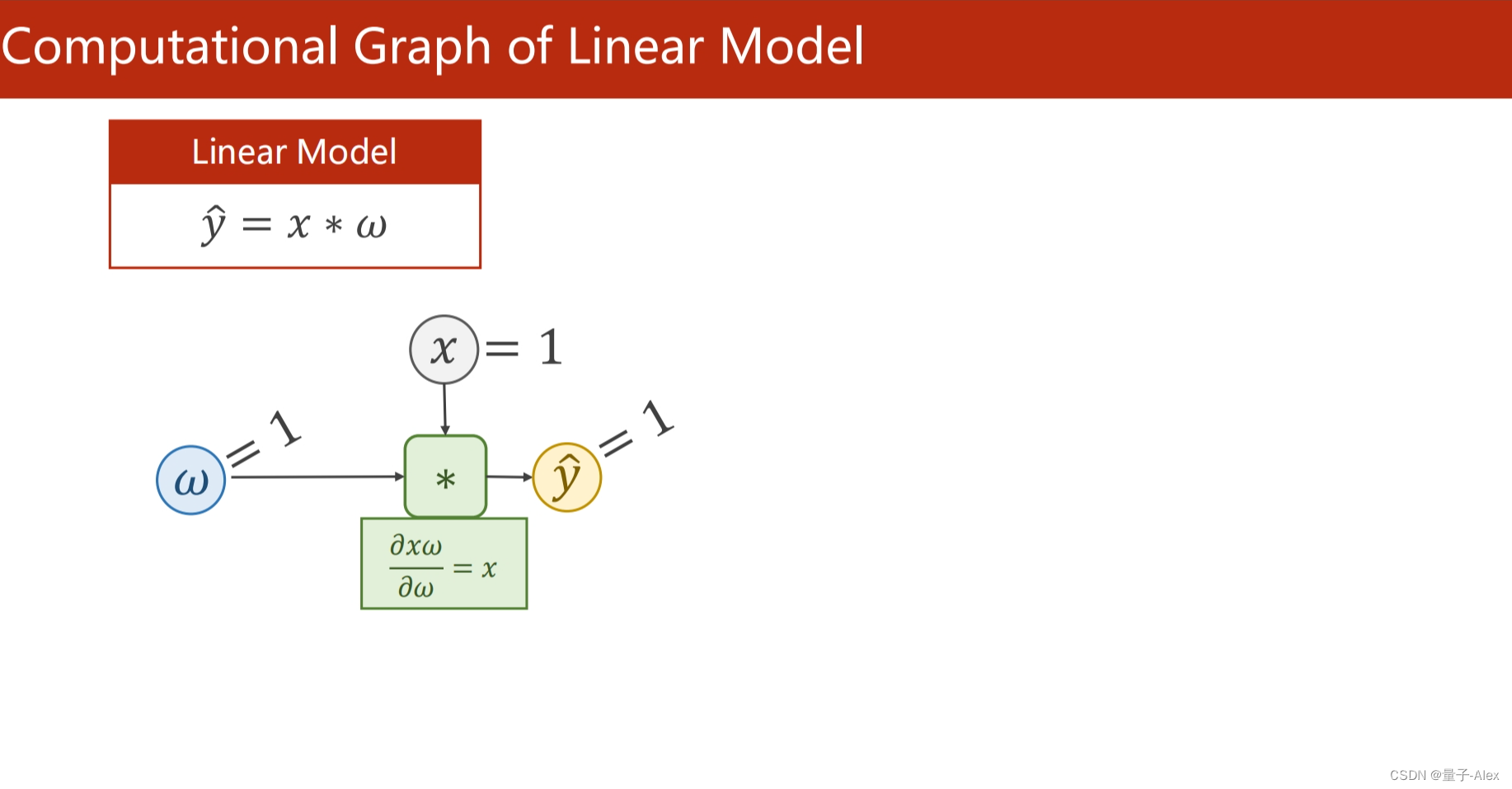

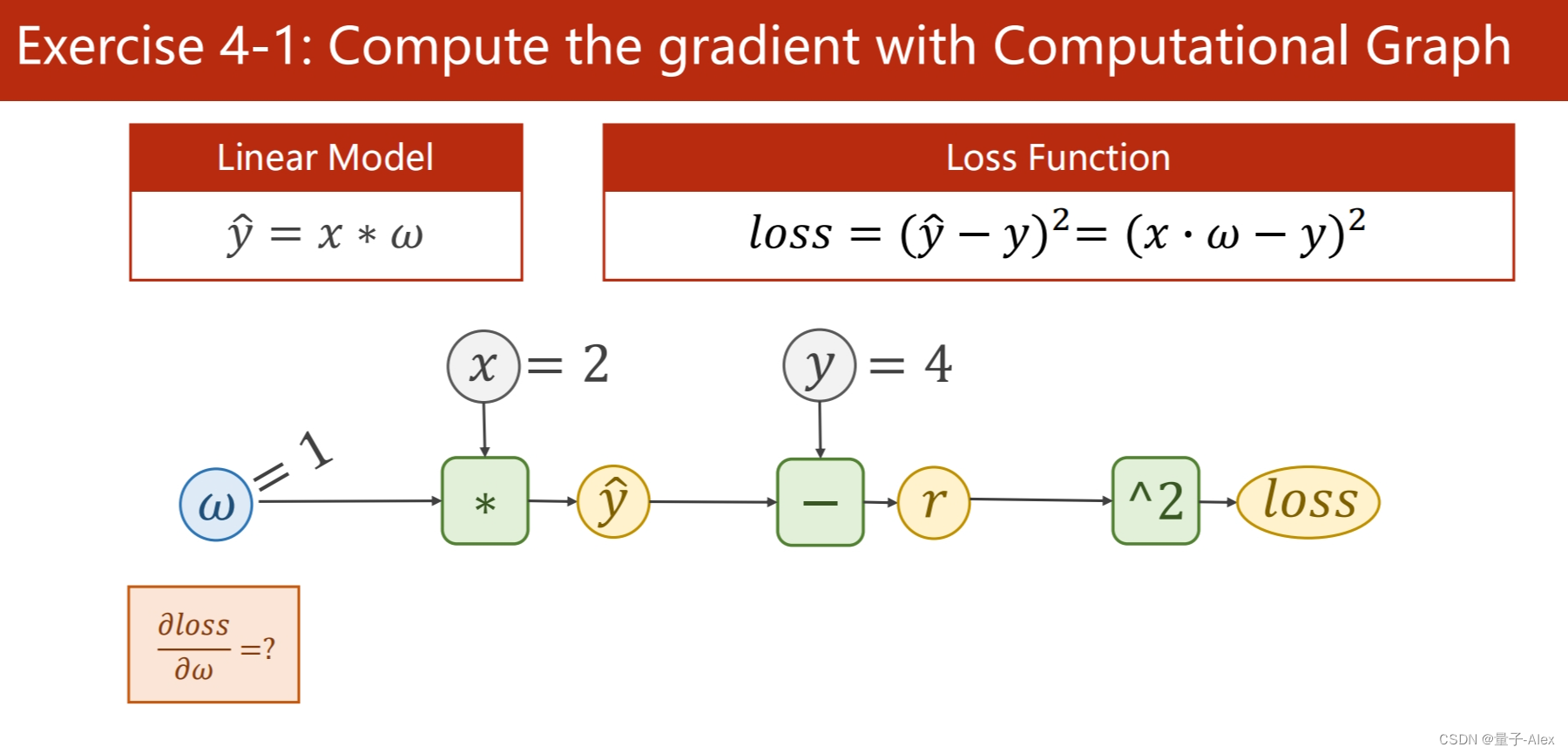

```python
# Training data: y = 2x, so the ideal weight is w = 2
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
```

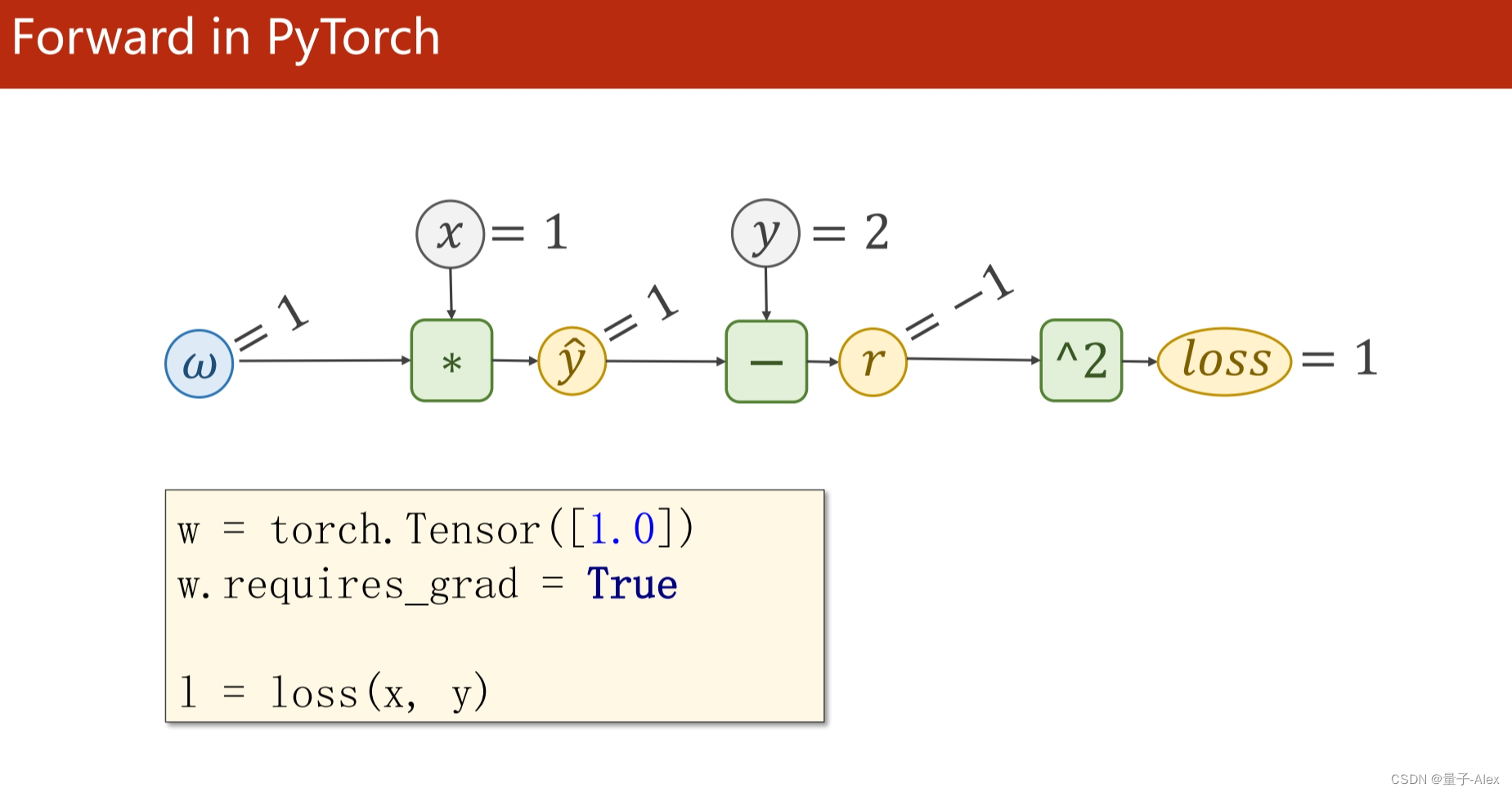

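```python
# Initial guess for the weight; requires_grad=True tells autograd to track operations on w
w = torch.tensor([1.0])
w.requires_grad = True
```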

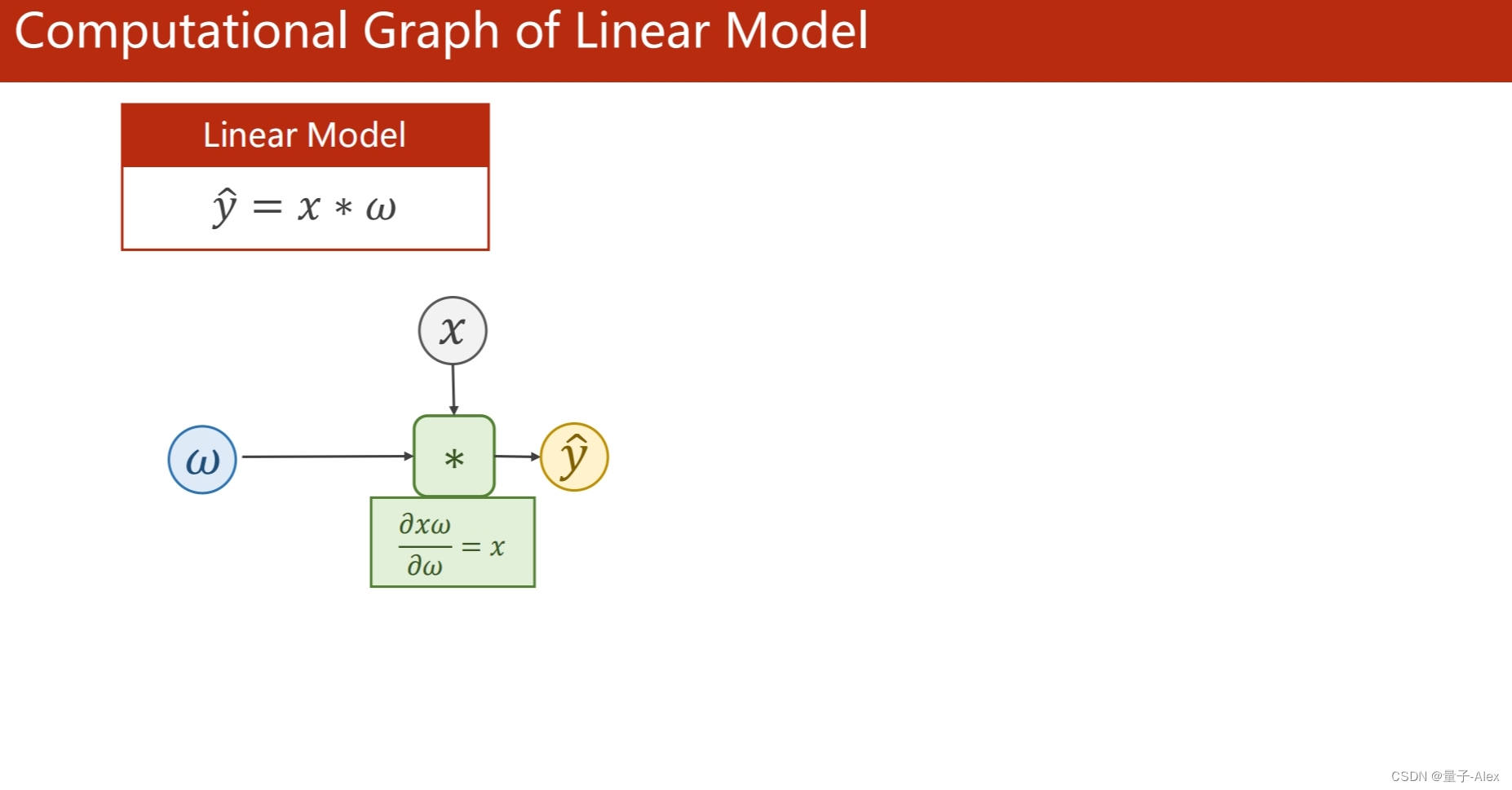

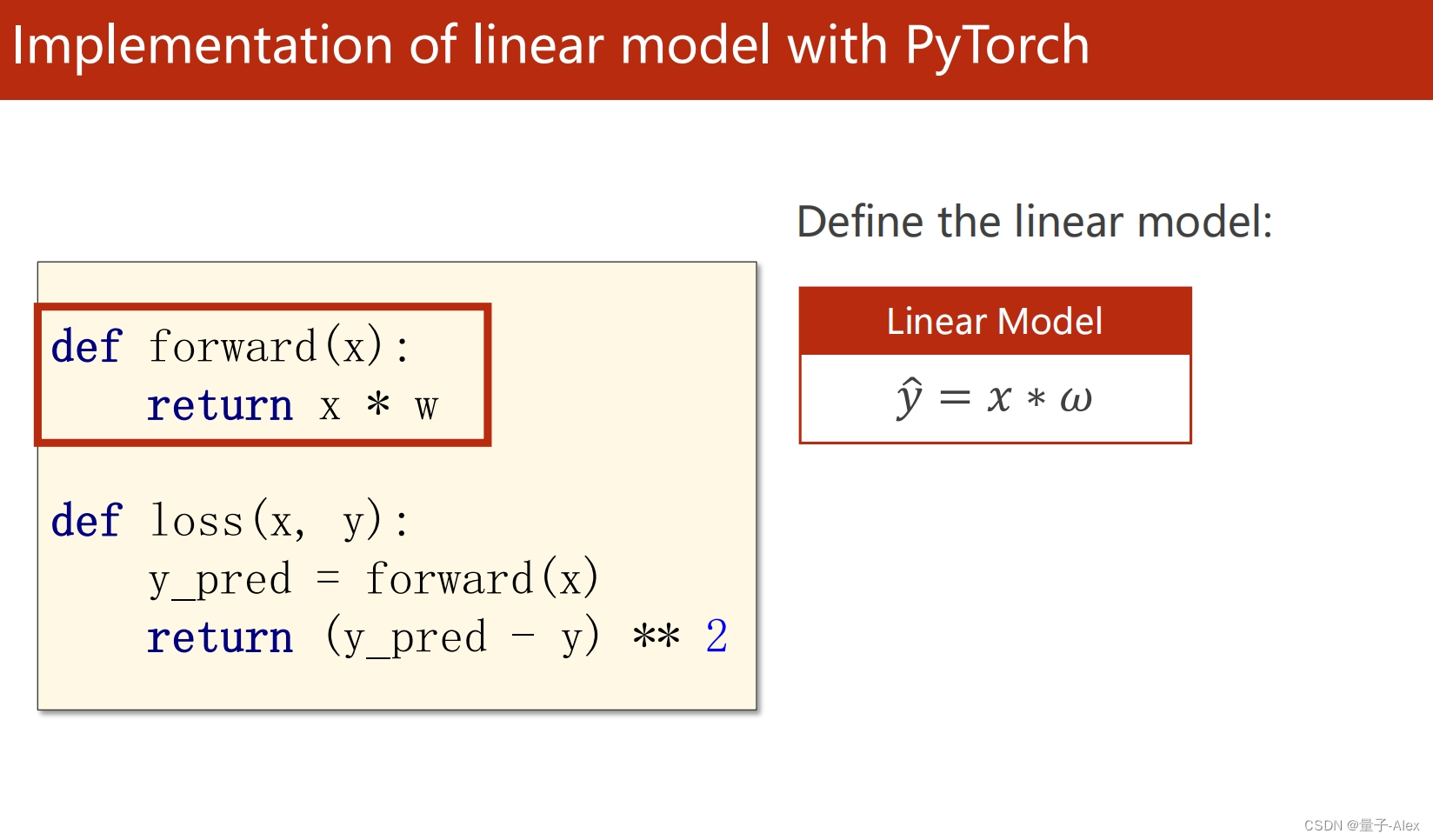

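```python
def forward(x):
    # Linear model without bias: y_hat = x * w (recorded in the graph because w requires grad)
    return x * w
```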

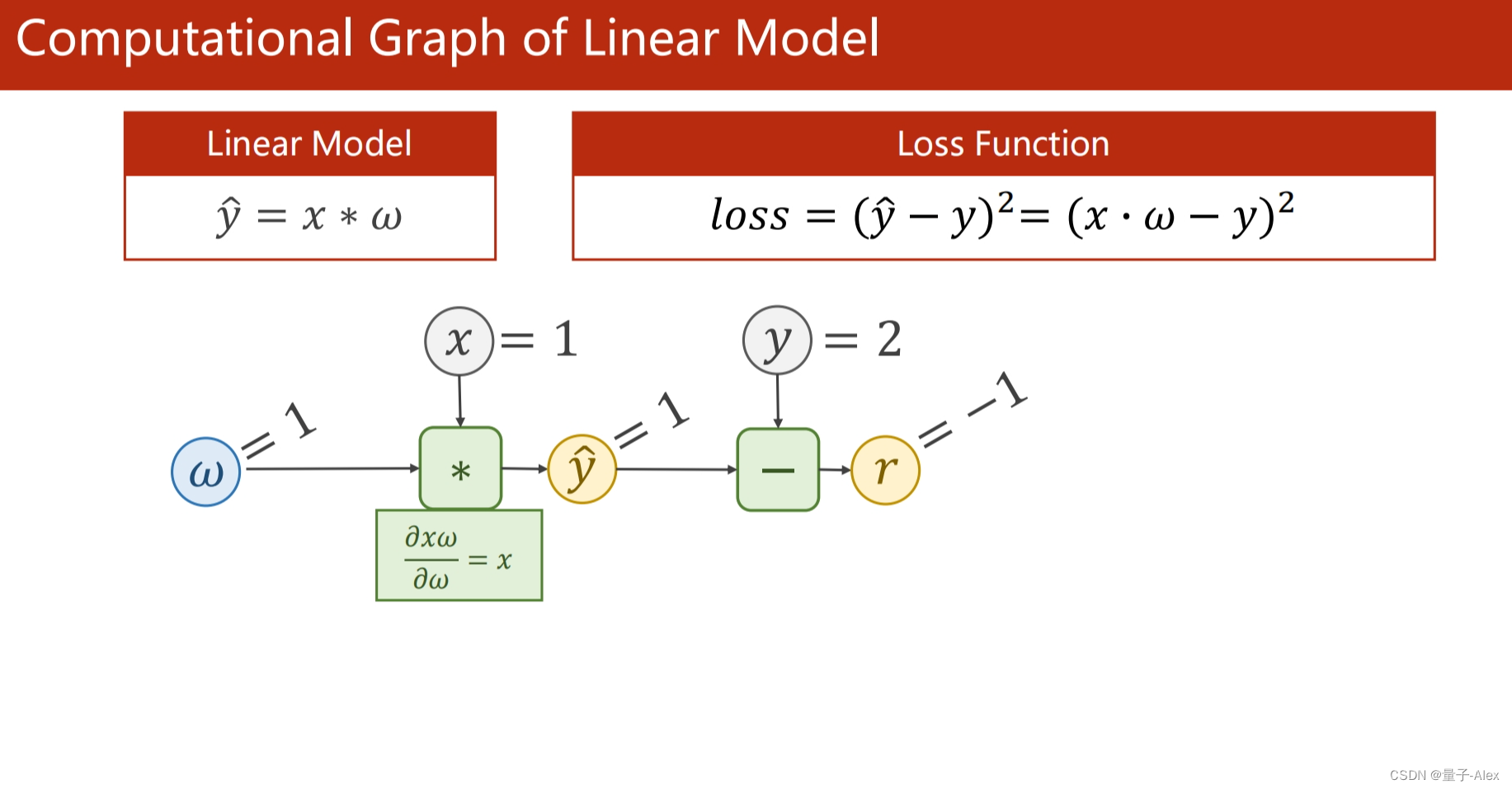

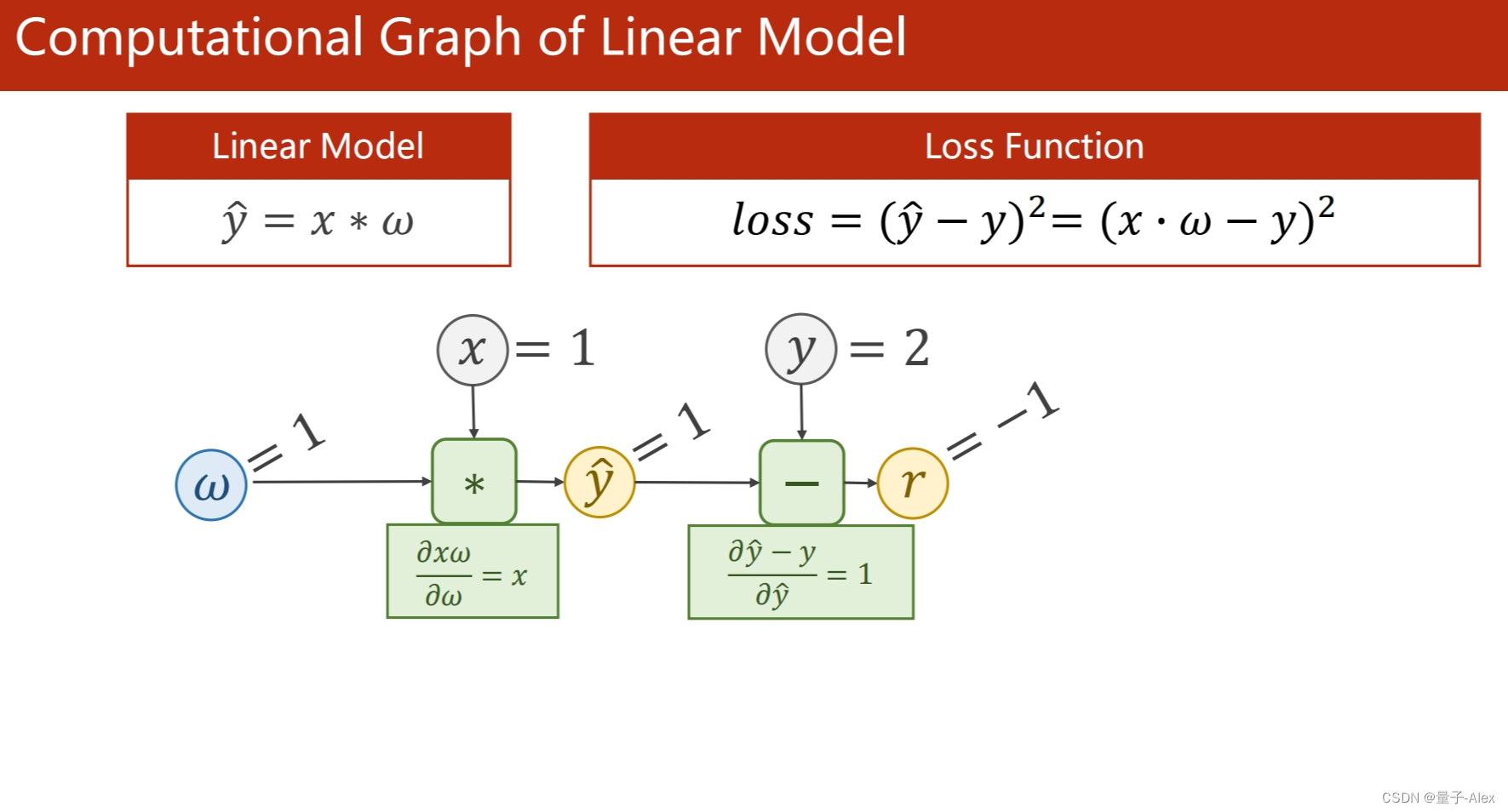

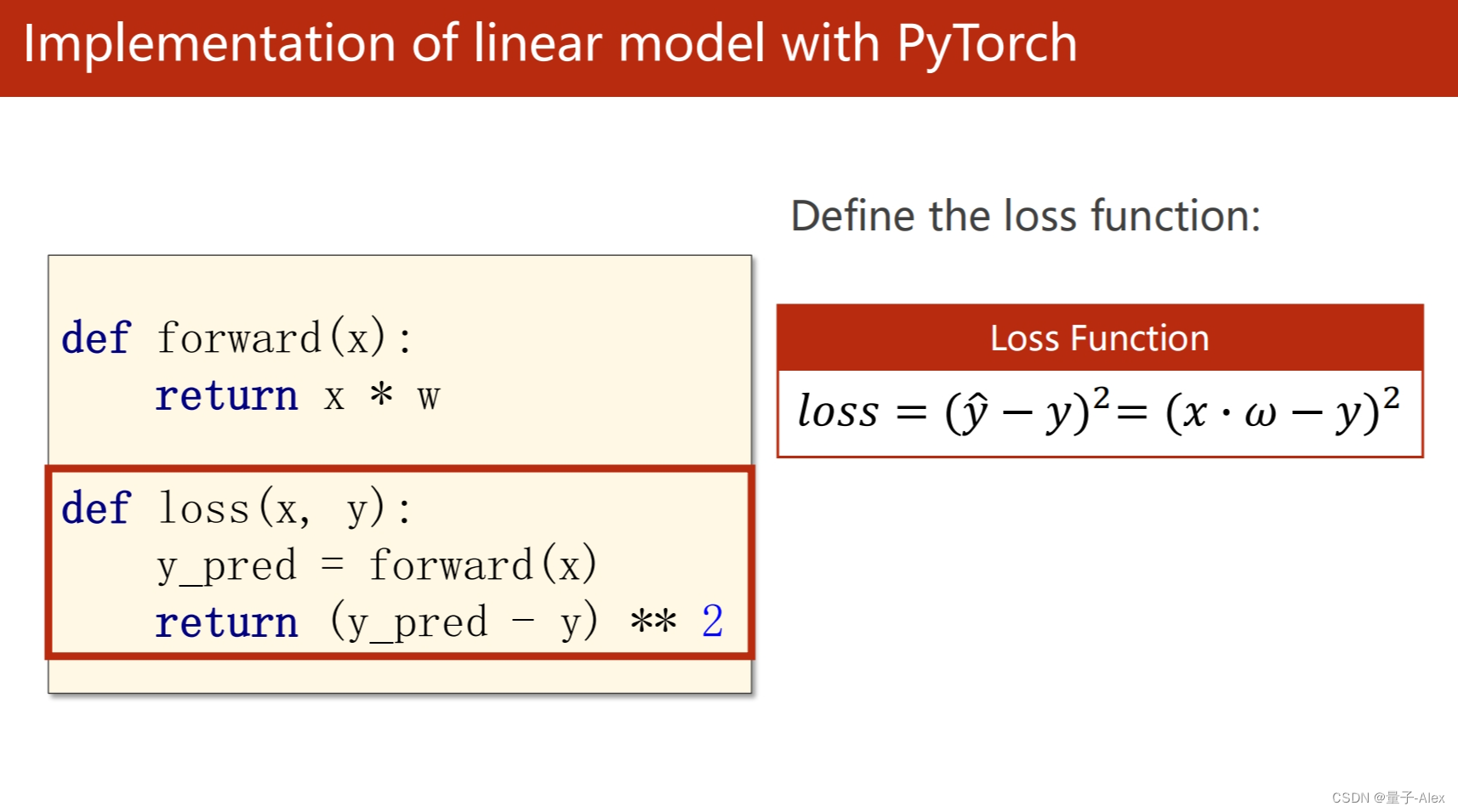

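```python
def loss(x, y):
    # Squared error for a single sample; the result is a tensor with a computational graph attached
    y_pred = forward(x)
    return (y_pred - y) ** 2
```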
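For this model the gradient can also be derived by hand with the chain rule: with y_hat = x * w and l = (y_hat - y) ** 2, dl/dw = 2 * (x * w - y) * x. The sketch below compares autograd against that formula for the first training sample. It is a minimal check under the same single-weight, no-bias model. The helper name `manual_grad` is hypothetical and not part of the course code.

```python
import torch

def manual_grad(x: float, y: float, w: float) -> float:
    # Chain rule: l = (x*w - y)**2  =>  dl/dw = 2 * (x*w - y) * x
    return 2.0 * (x * w - y) * x

w = torch.tensor([1.0], requires_grad=True)
x, y = 1.0, 2.0

l = (x * w - y) ** 2   # same loss as loss(x, y) in the notes
l.backward()           # autograd fills w.grad

auto = w.grad.item()
by_hand = manual_grad(x, y, w.item())
print(auto, by_hand)   # both -2.0 for w = 1, x = 1, y = 2
```

This value matches the first `grad:` line printed by the training loop, since that loop also starts from w = 1 with the sample (1.0, 2.0).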

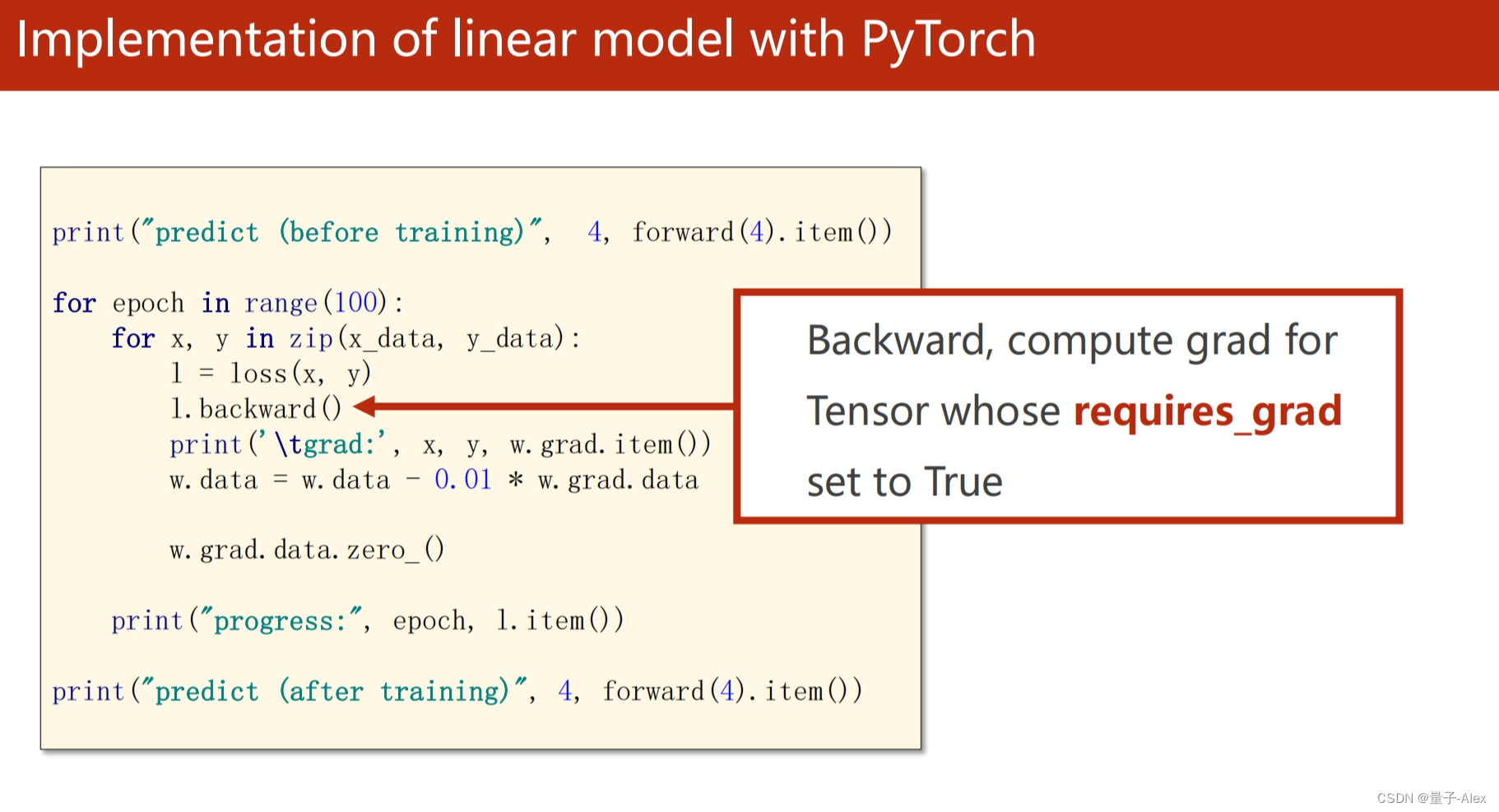

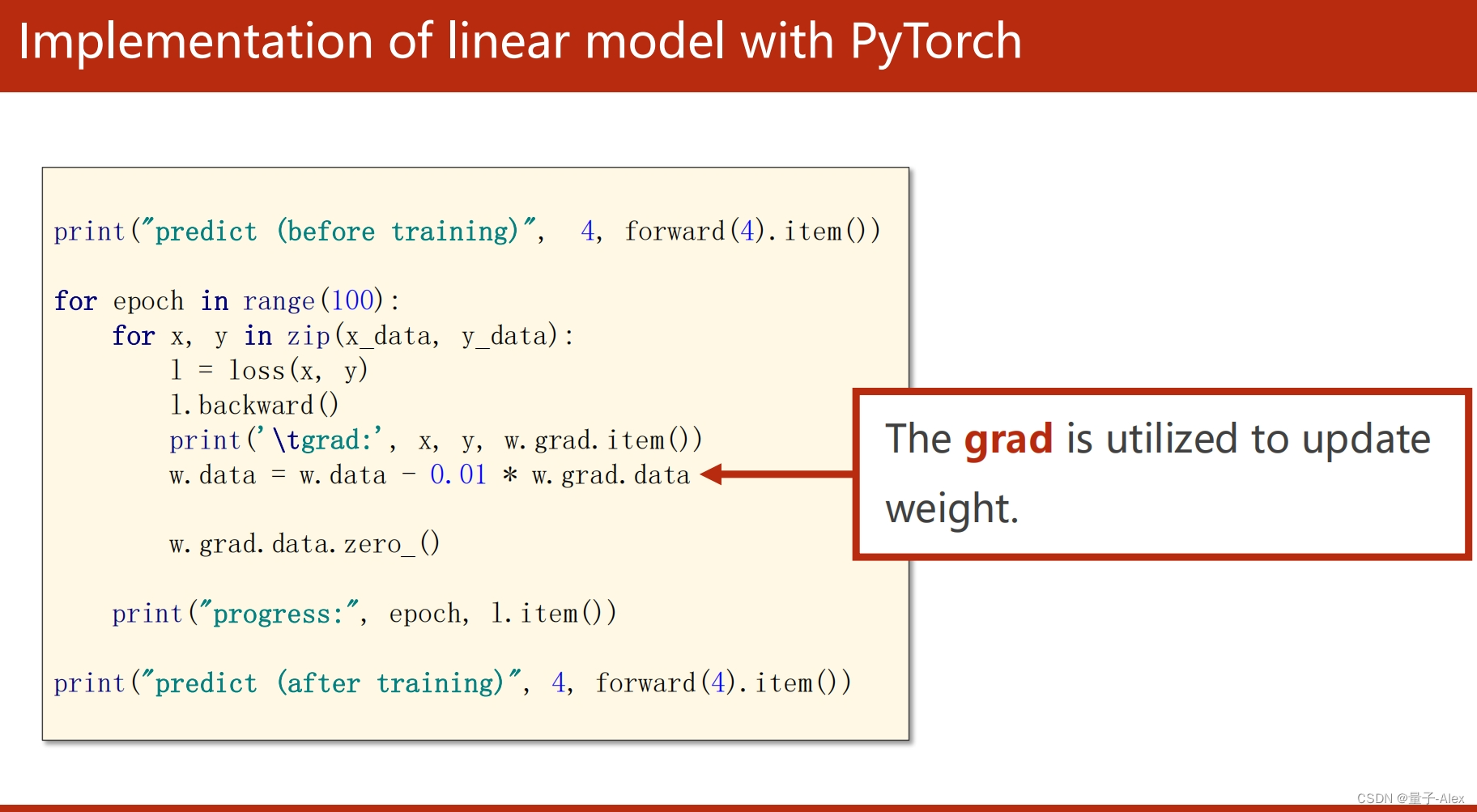

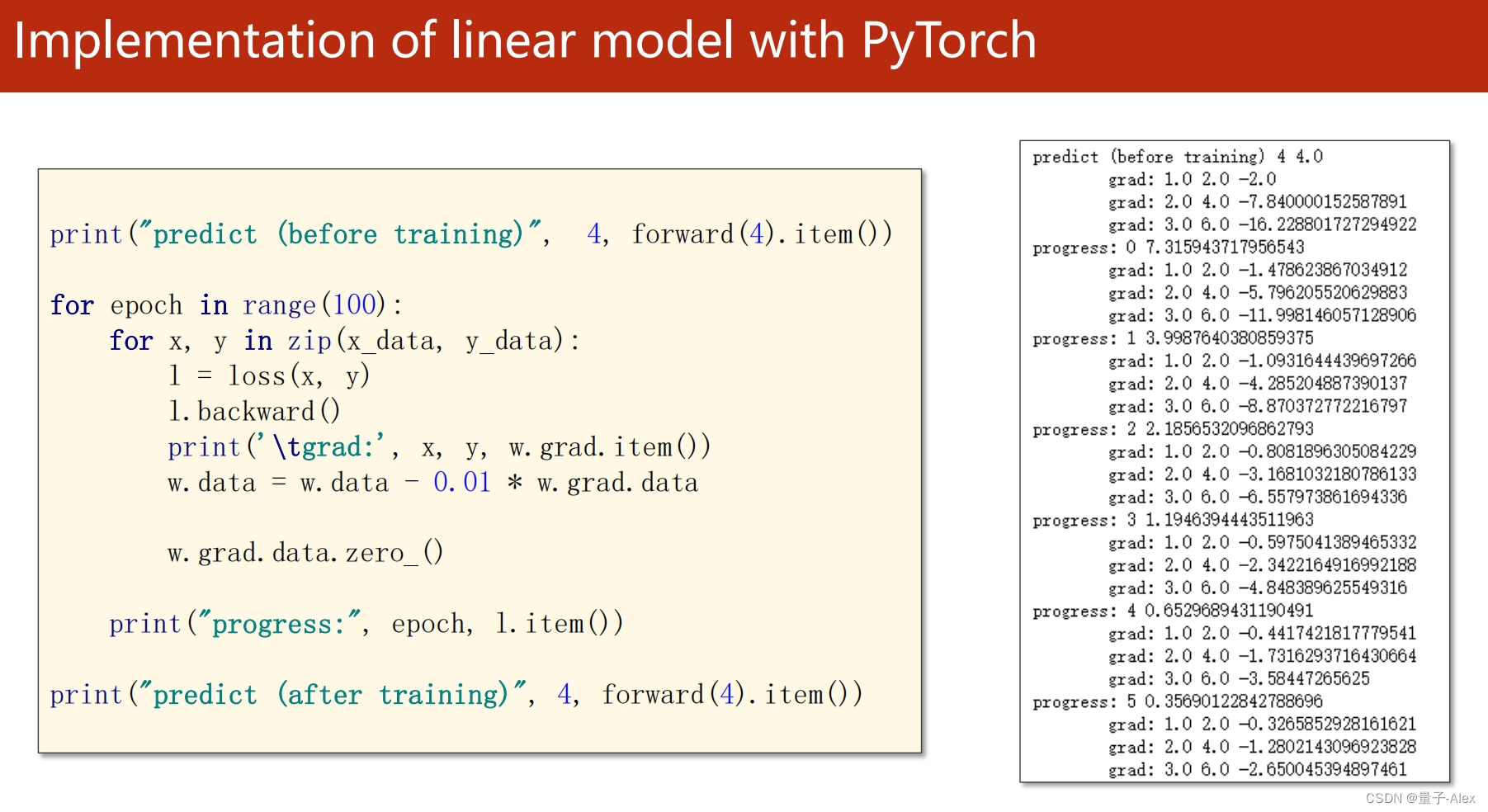

```python
print('Predicted (before training)', 4, forward(4).item())

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        # Forward pass: compute the loss and record the graph
        l = loss(x, y)
```

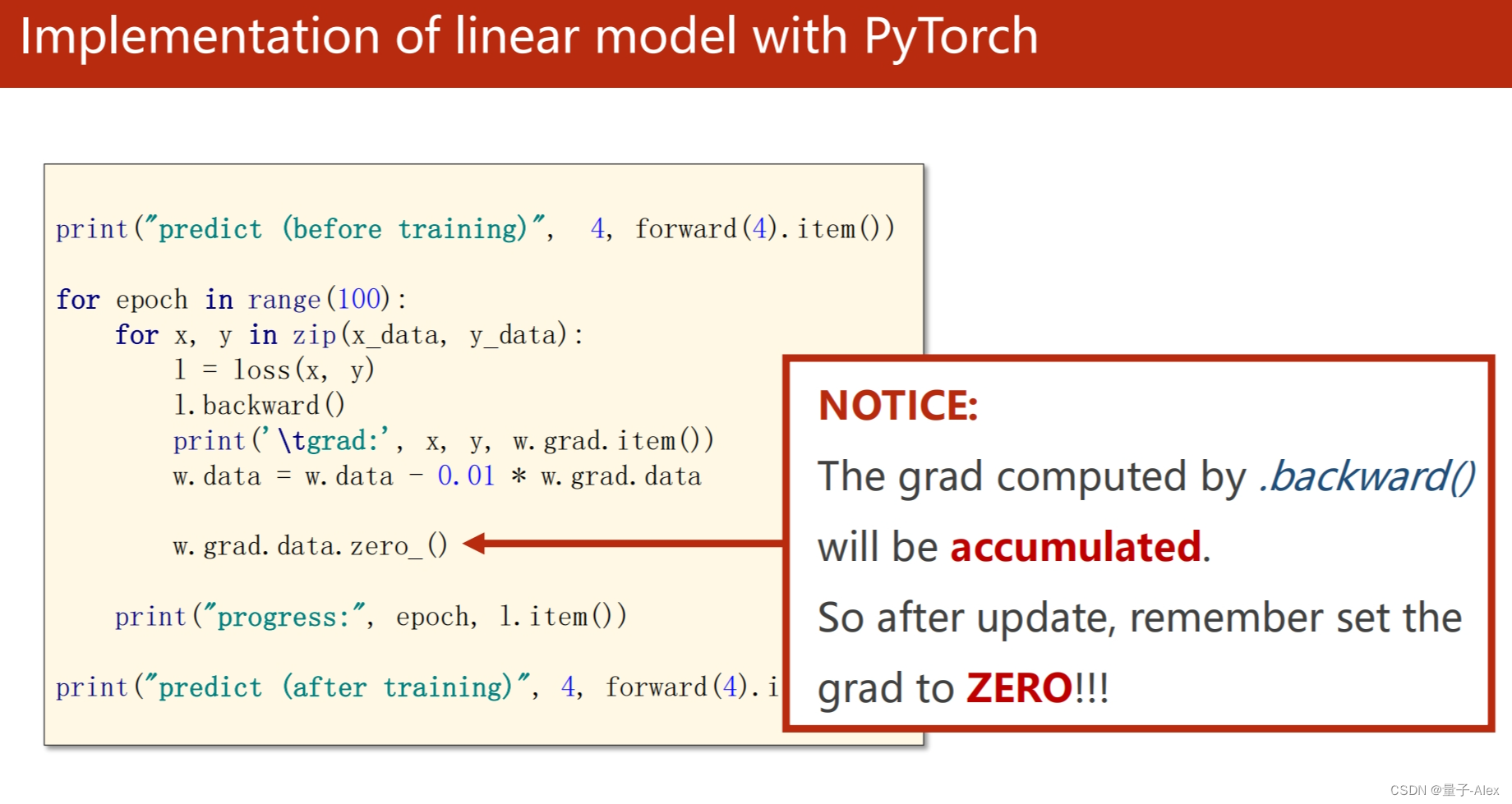

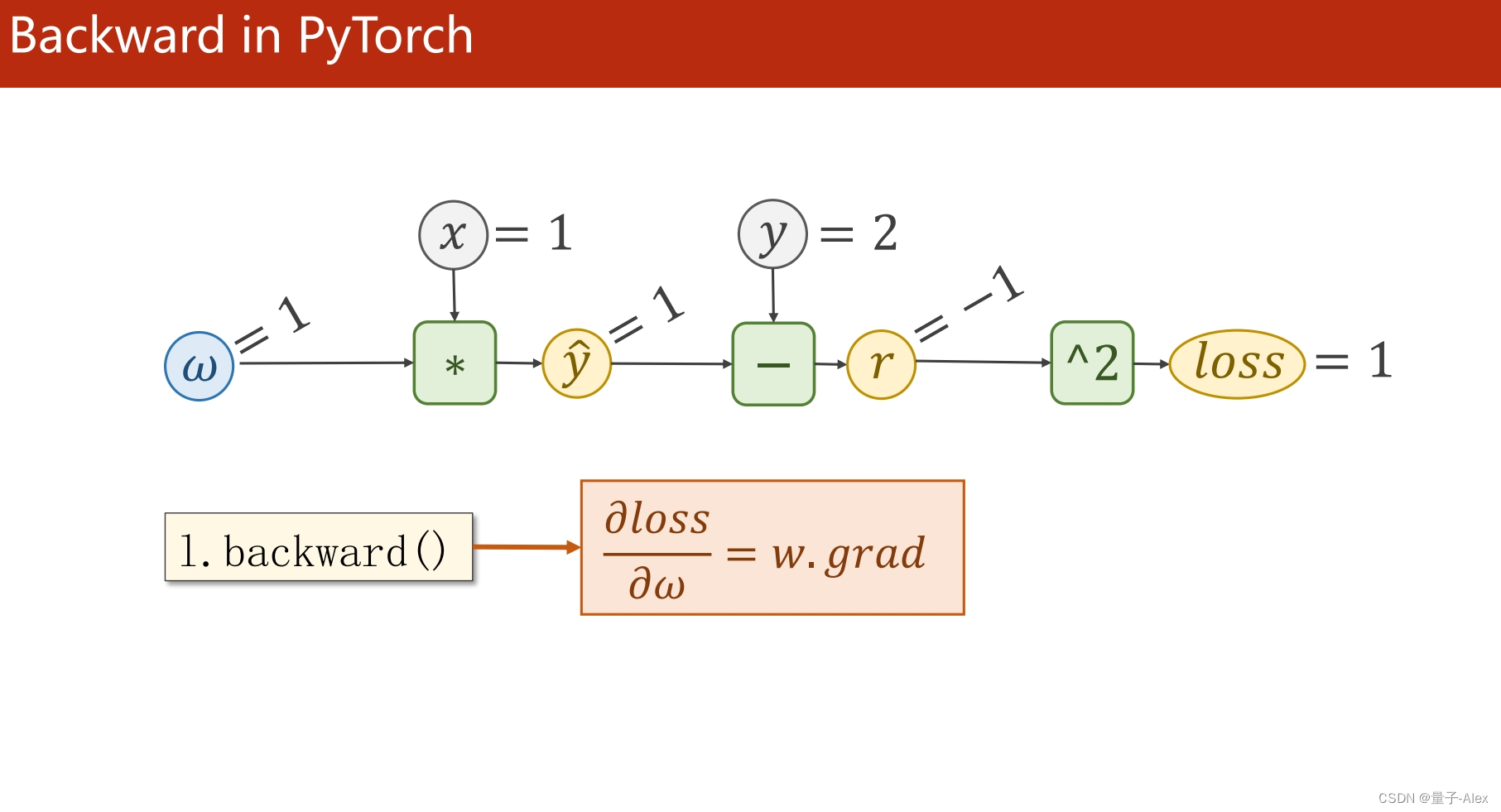

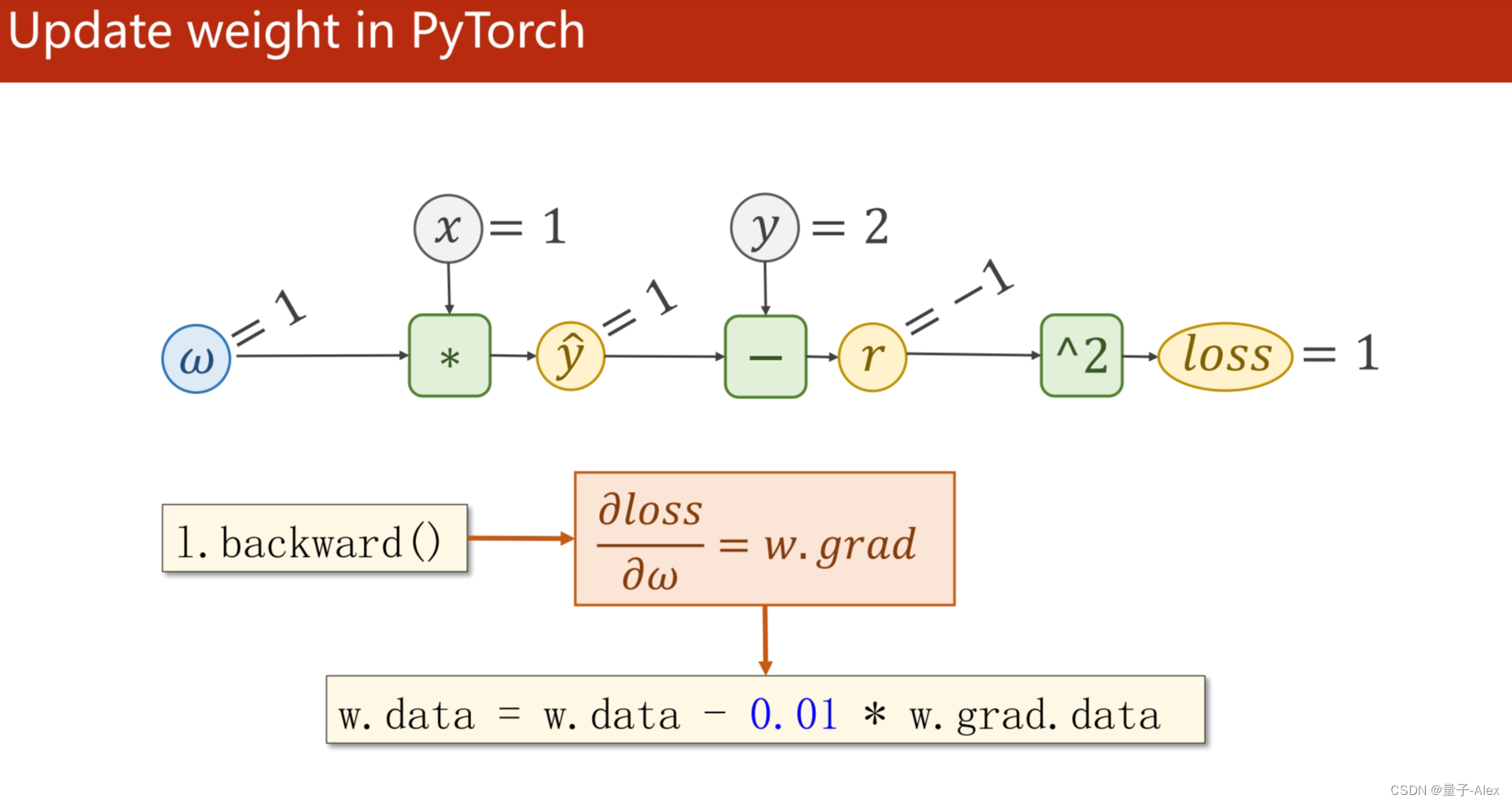

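```python
        # Backward pass: compute dl/dw, store it in w.grad, then free the graph
        l.backward()
        print('\tgrad:', x, y, w.grad.item())
        # Gradient-descent step with learning rate 0.01; updating .data keeps this step out of the graph
        w.data = w.data - 0.01 * w.grad.data
        # Reset the gradient, otherwise the next backward() would add to it
        w.grad.zero_()
    print('Epoch:', epoch, 'w:', w.item(), 'Loss:', l.item())

print('Predicted (after training)', 4, forward(4).item())
```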
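The slides split the training code into pieces, so here is the same program assembled into one runnable script. It keeps the settings from the notes: learning rate 0.01, 100 epochs, and one update per sample. Two things differ. The per-sample `grad:` print is left out to keep the output short, and the final weight is stored in `final_w` (a name added here) so it can be inspected.

```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = torch.tensor([1.0], requires_grad=True)

def forward(x):
    return x * w

def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) ** 2

print('Predicted (before training)', 4, forward(4).item())

for epoch in range(100):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)                          # forward pass records a fresh graph
        l.backward()                            # backward pass fills w.grad and frees the graph
        w.data = w.data - 0.01 * w.grad.data    # SGD step outside the graph
        w.grad.zero_()                          # clear the accumulated gradient
    print('Epoch:', epoch, 'w:', w.item(), 'Loss:', l.item())

print('Predicted (after training)', 4, forward(4).item())
final_w = w.item()
```

Each per-sample update scales the error (w - 2) by (1 - 0.02 * x**2), so w converges to 2 and the prediction for x = 4 approaches 8.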

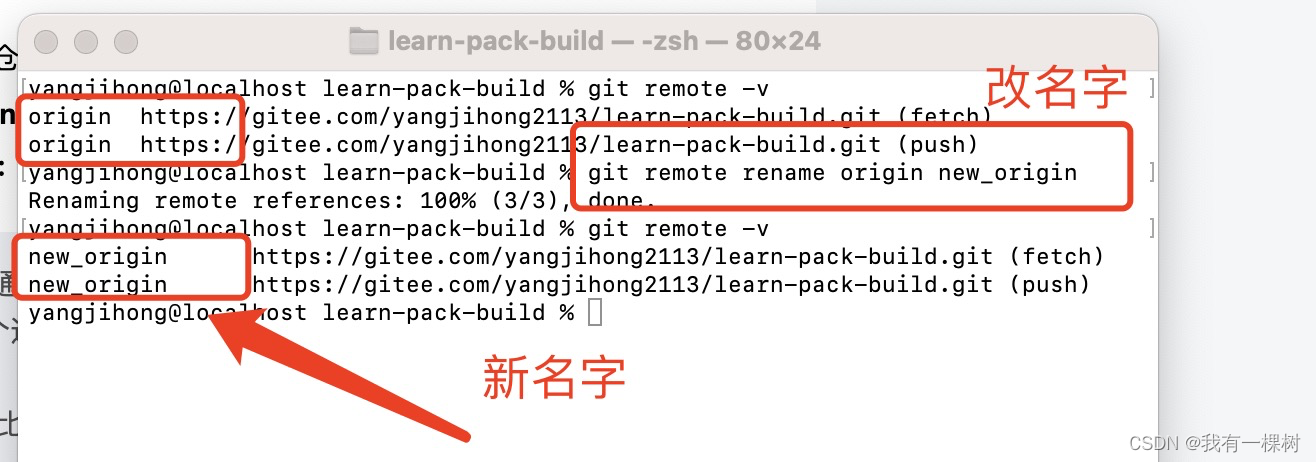

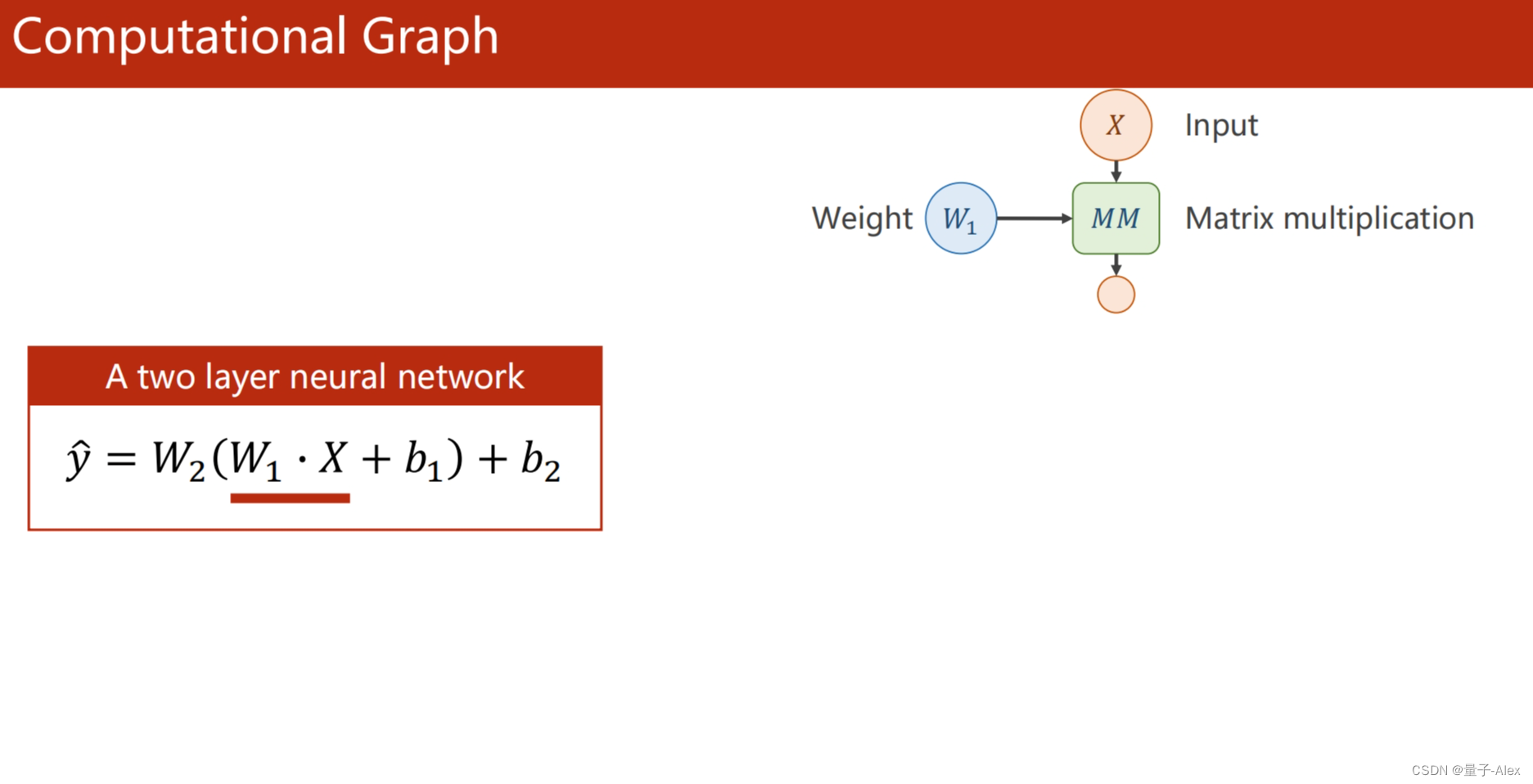

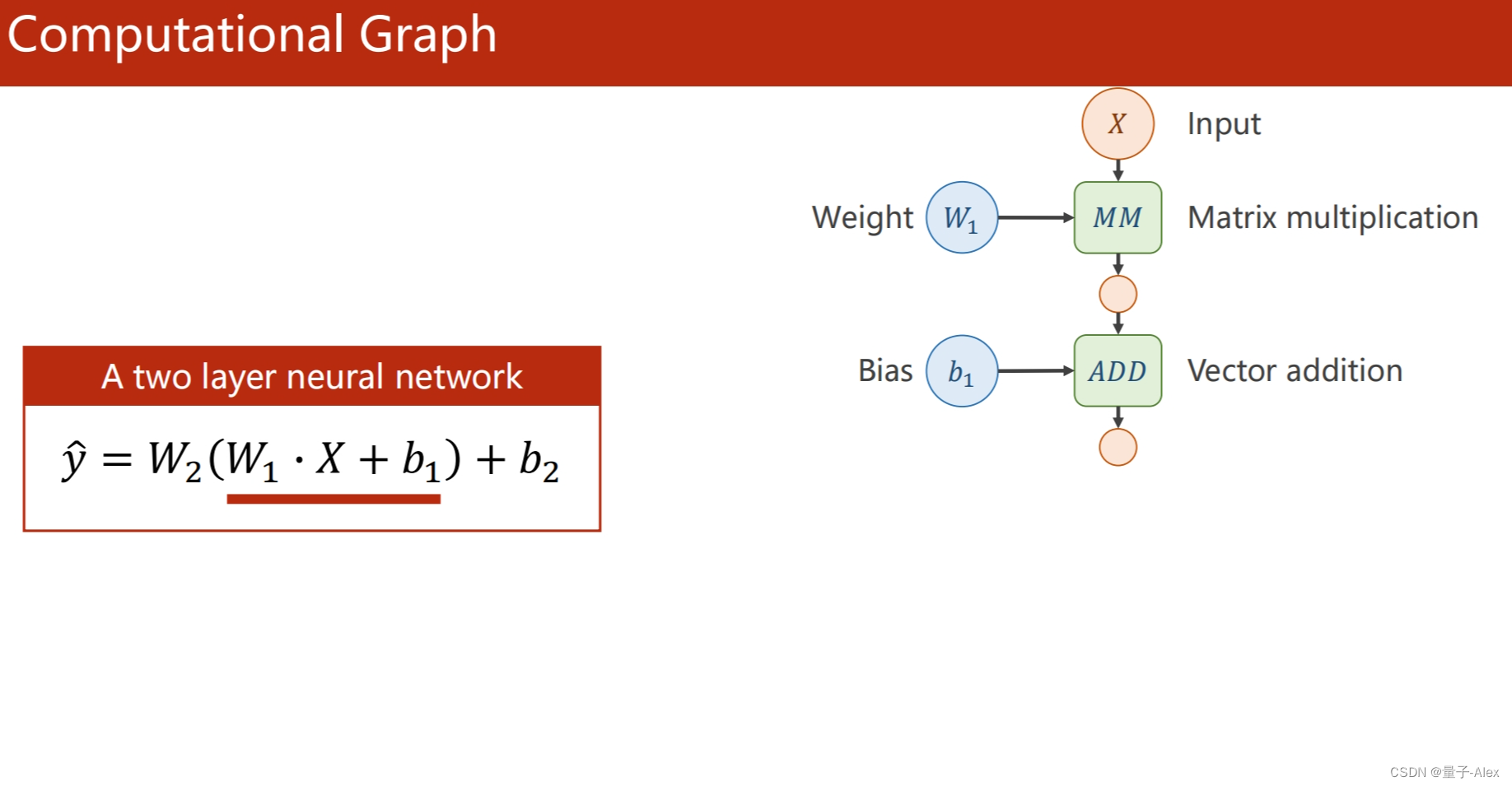

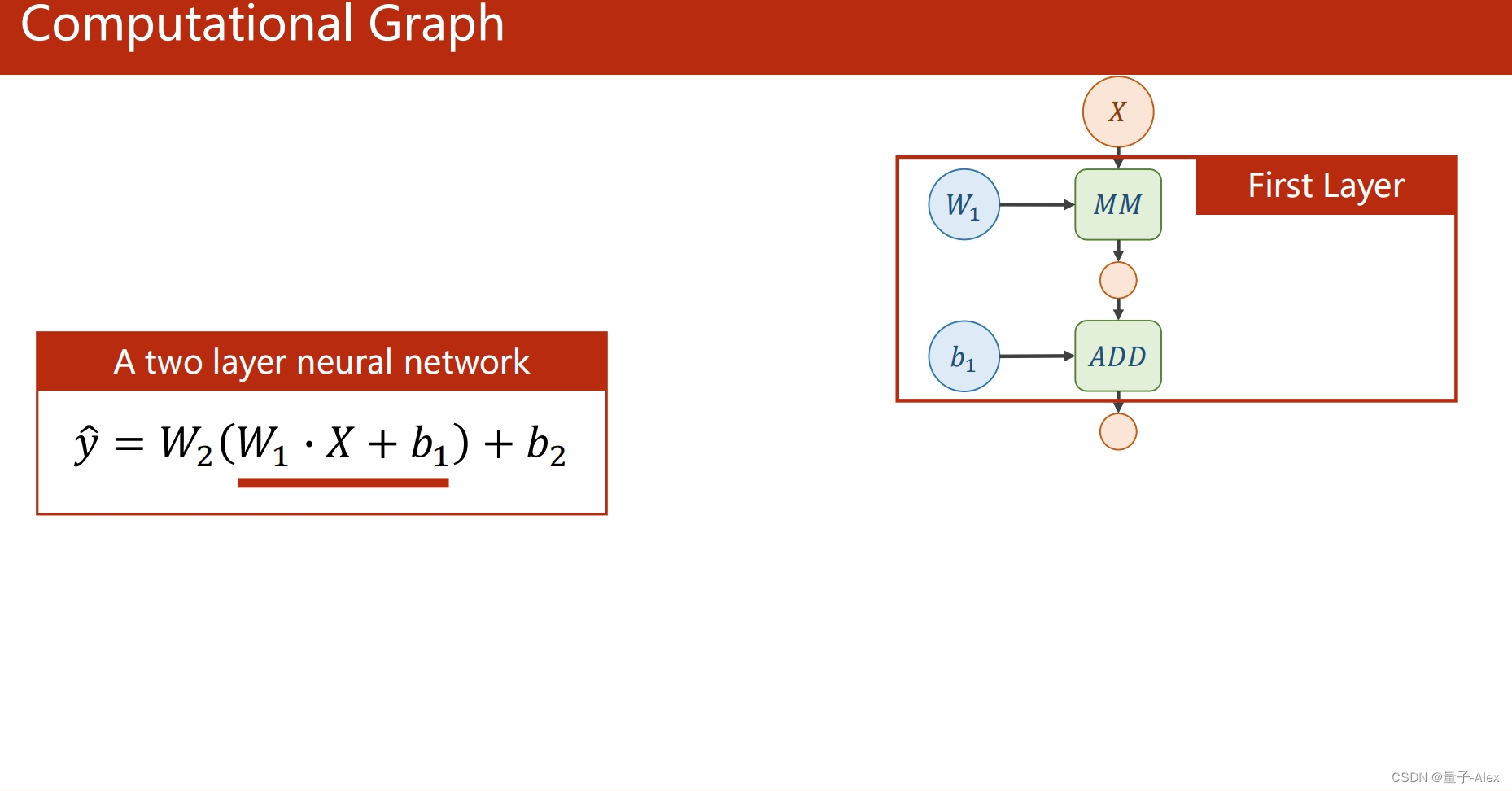

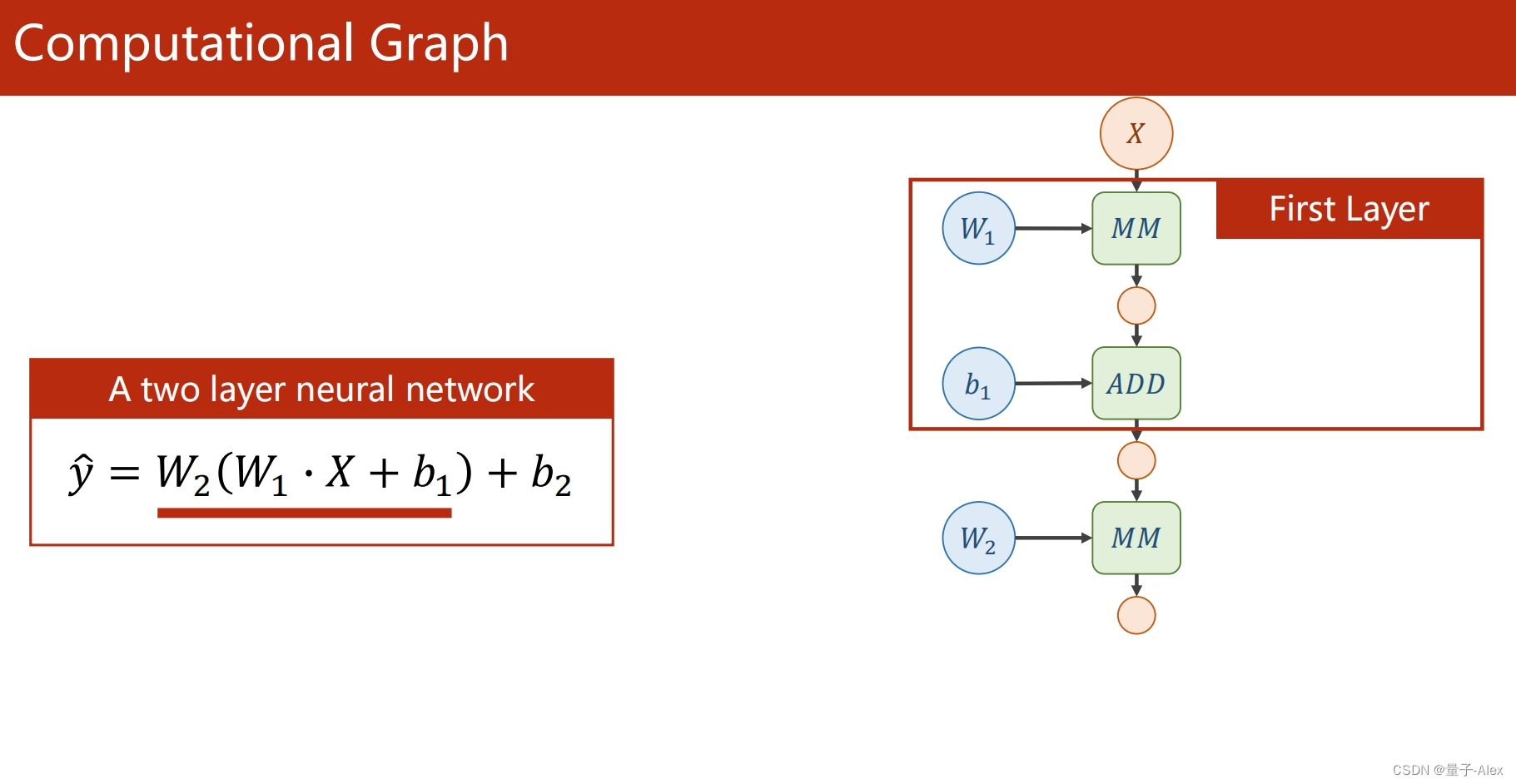

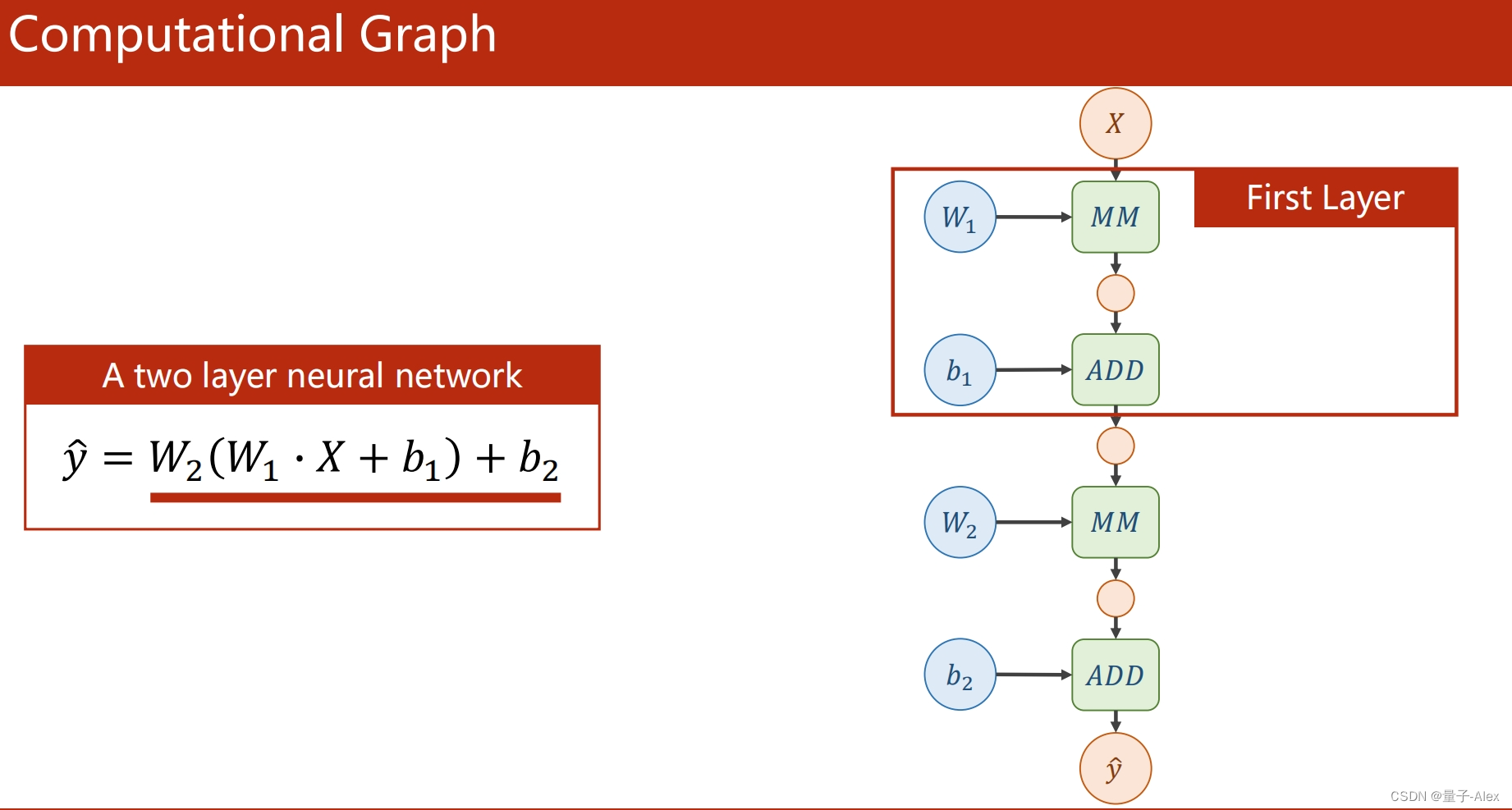

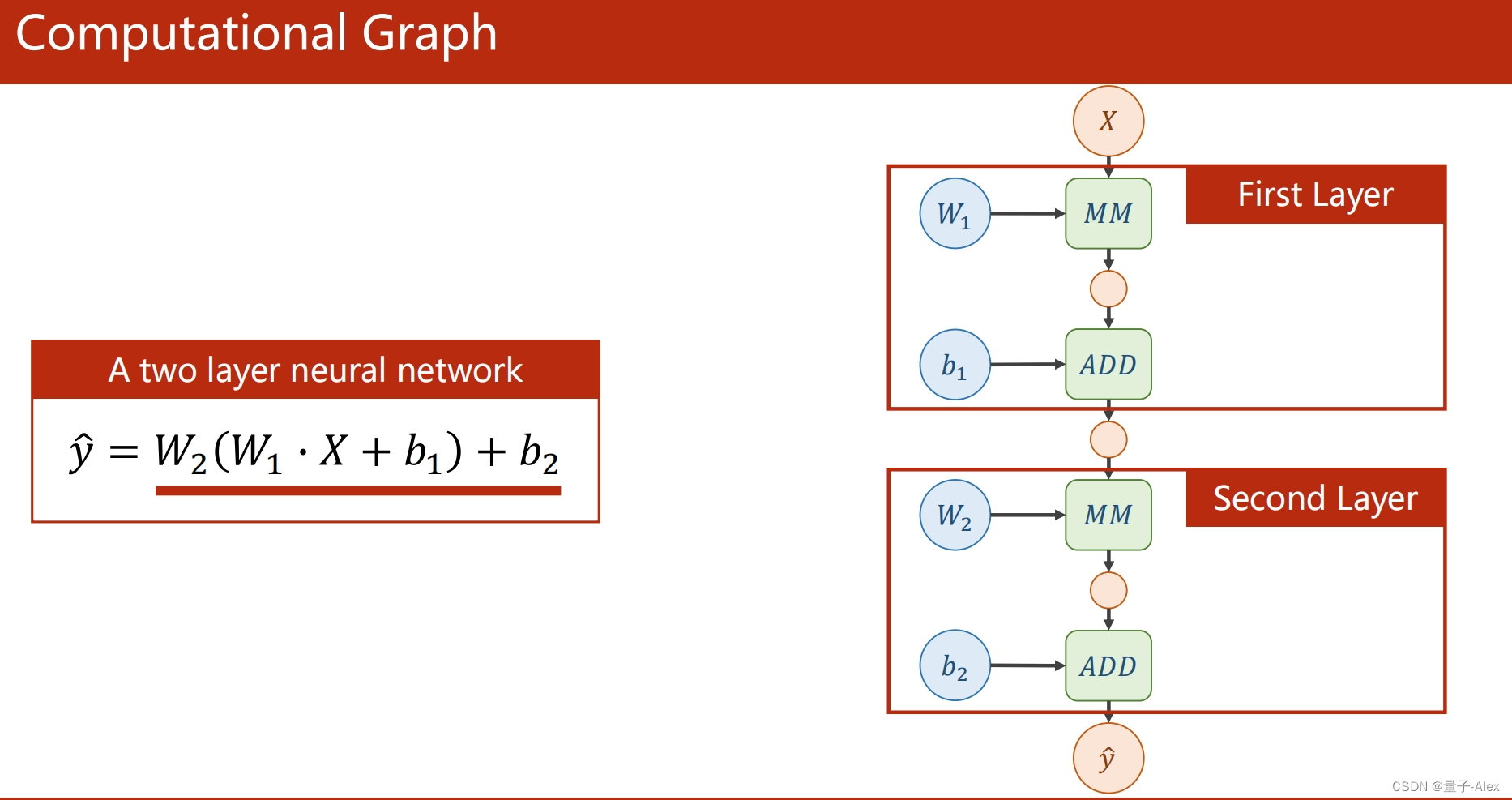

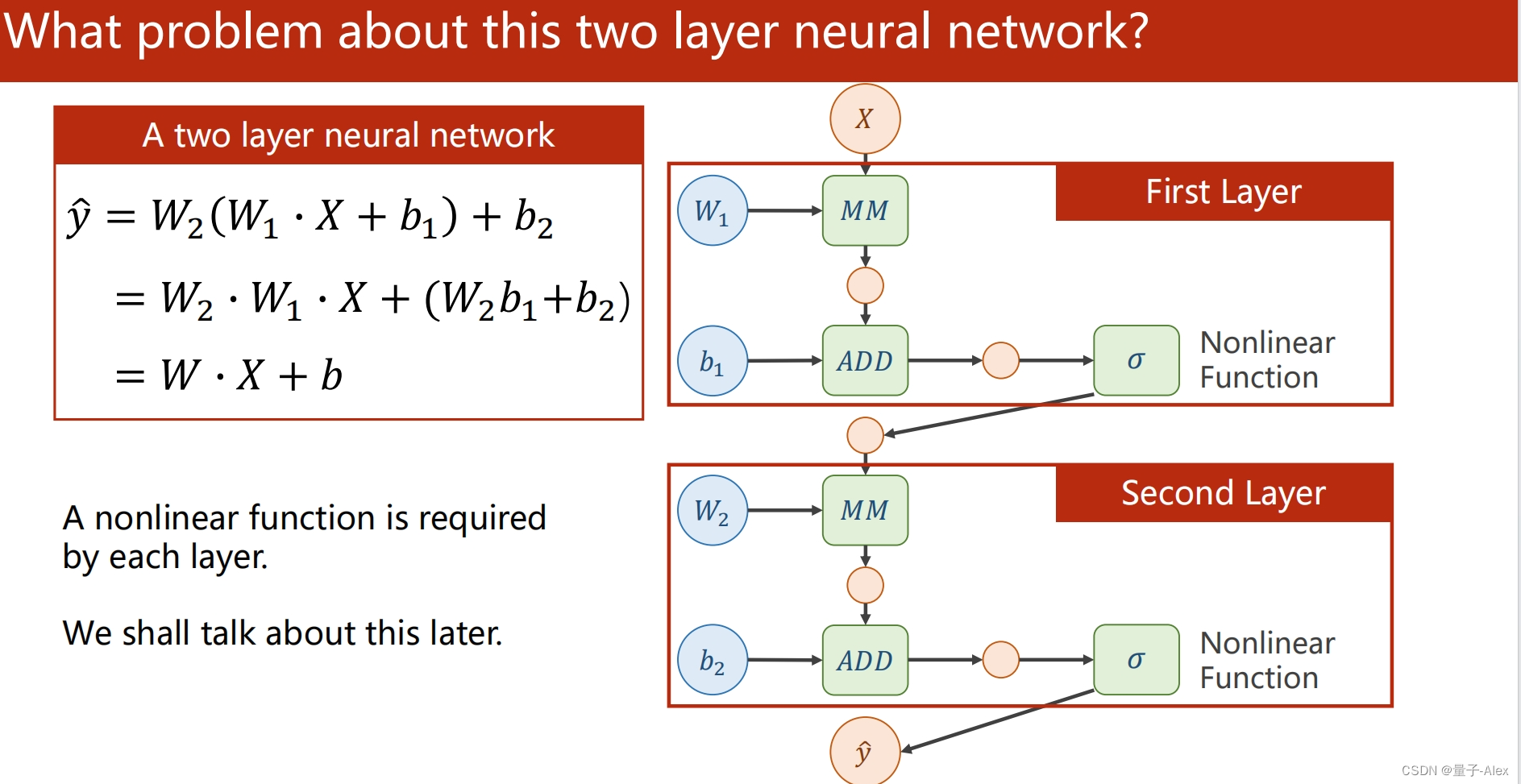

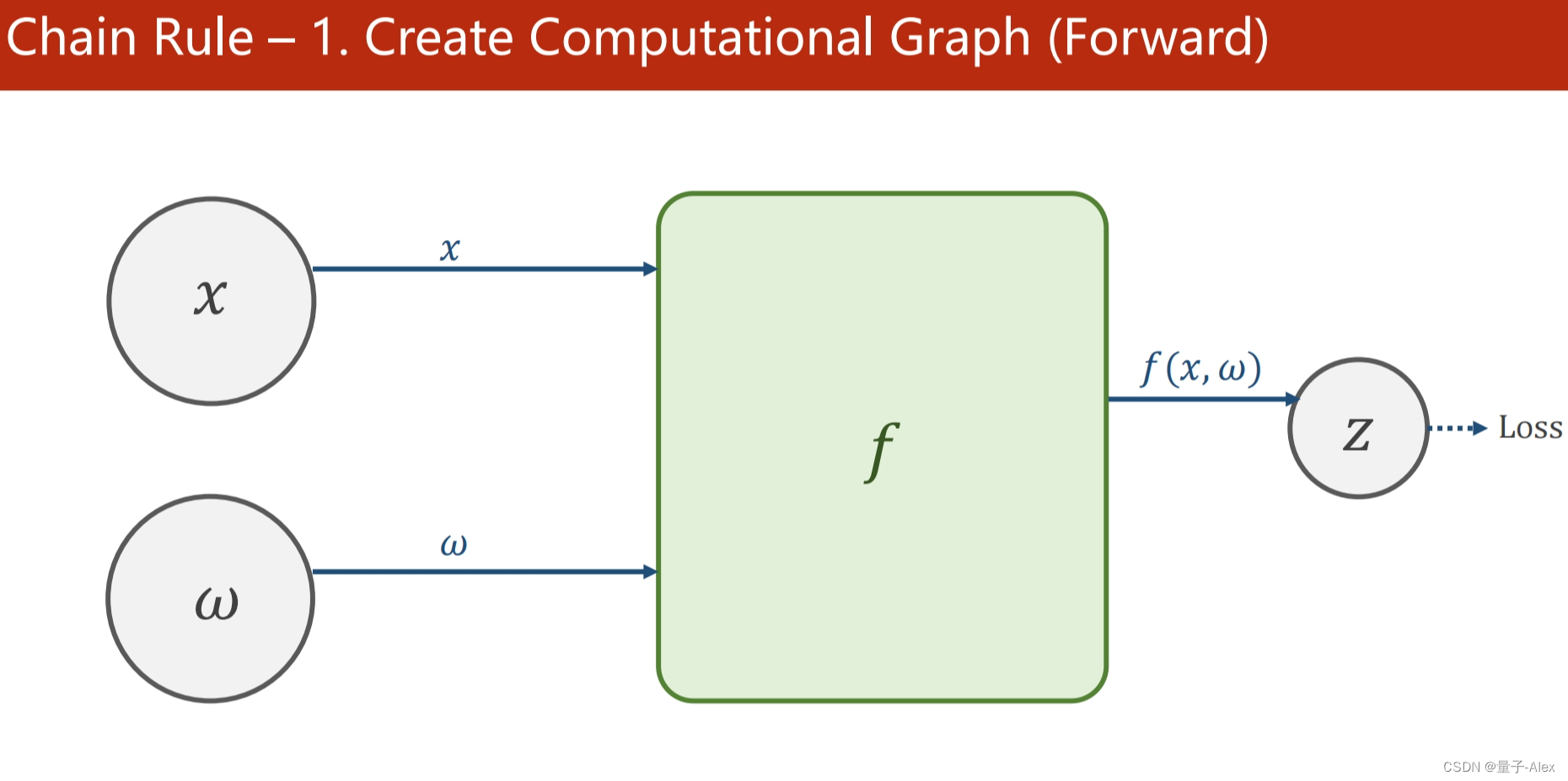

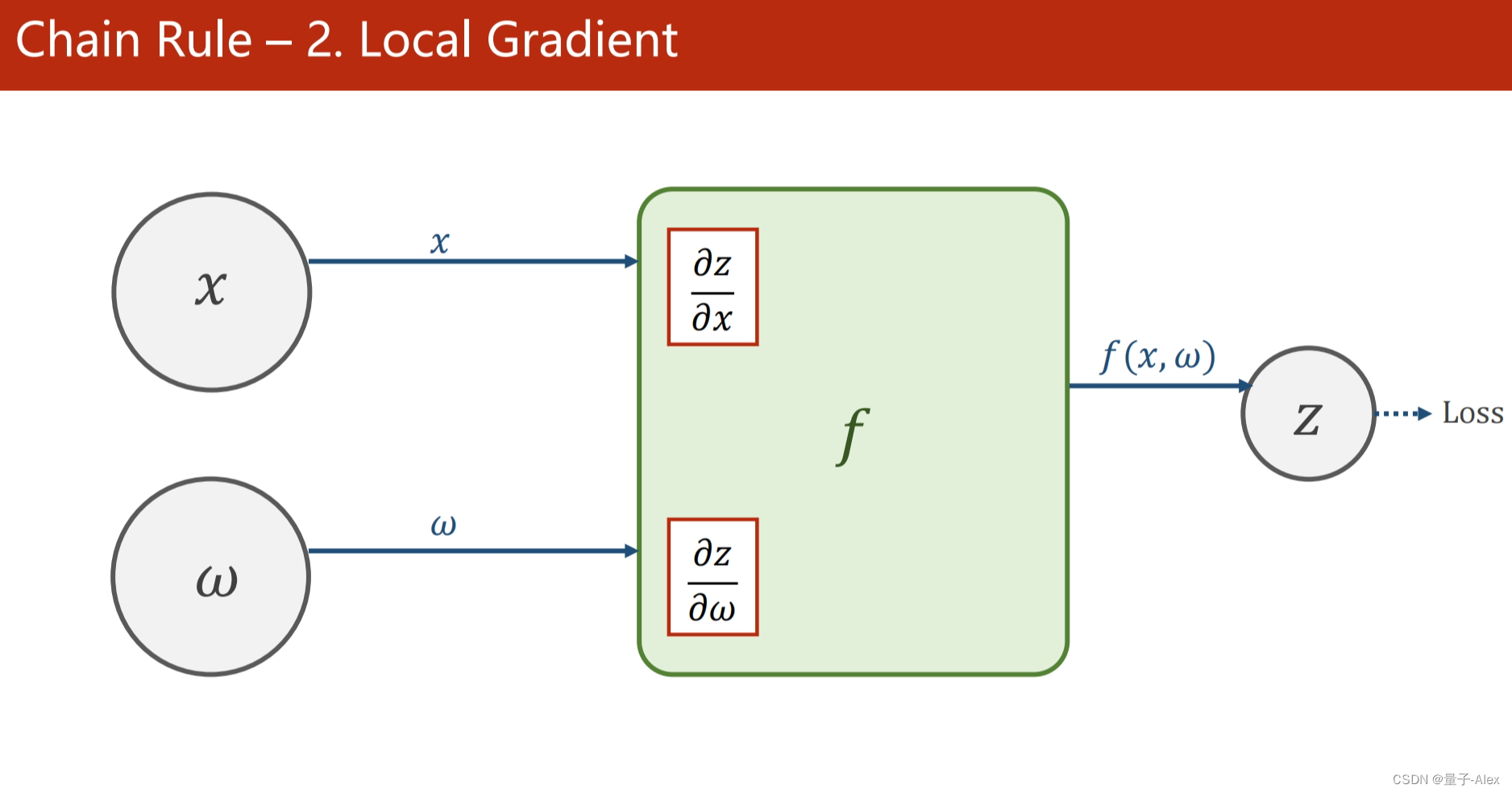

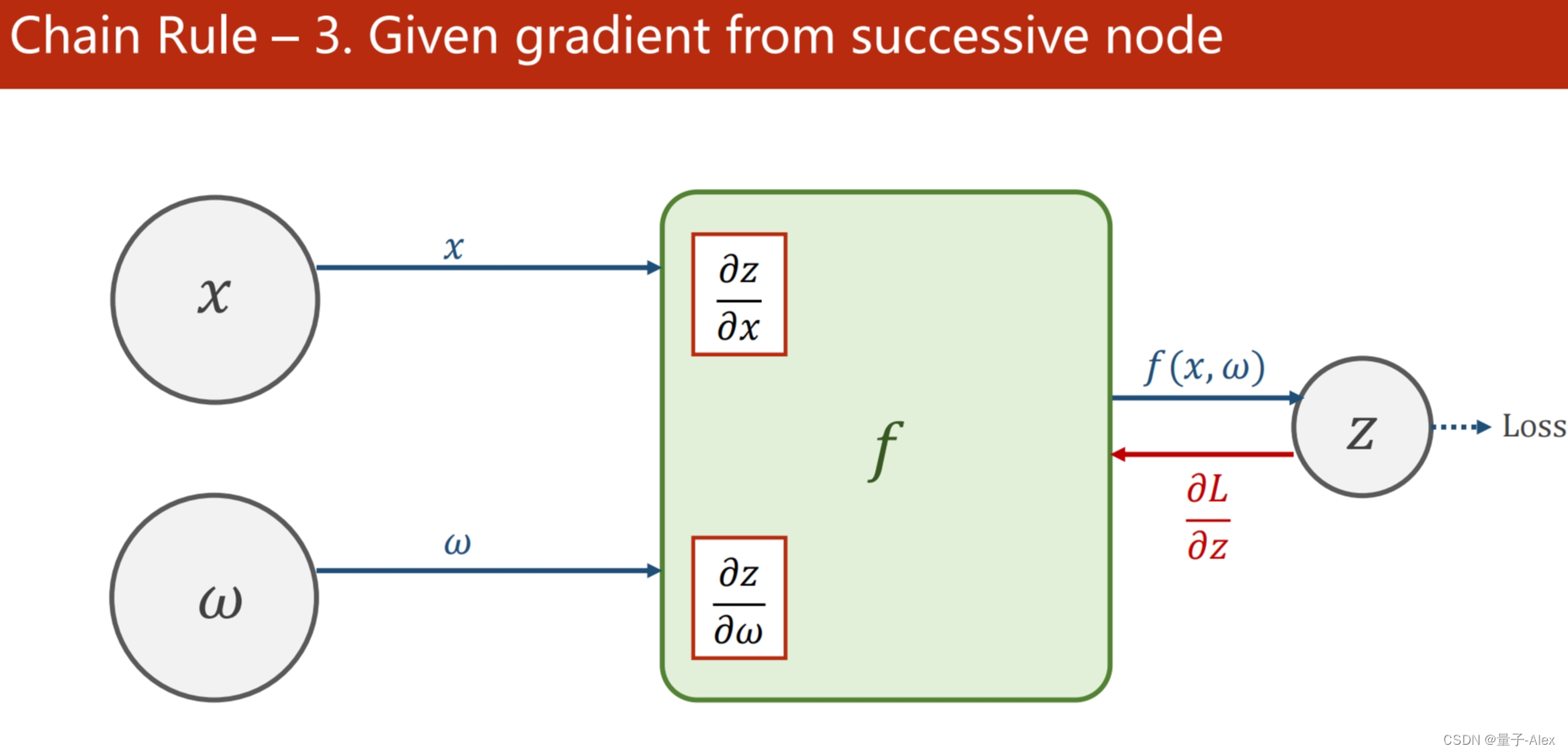

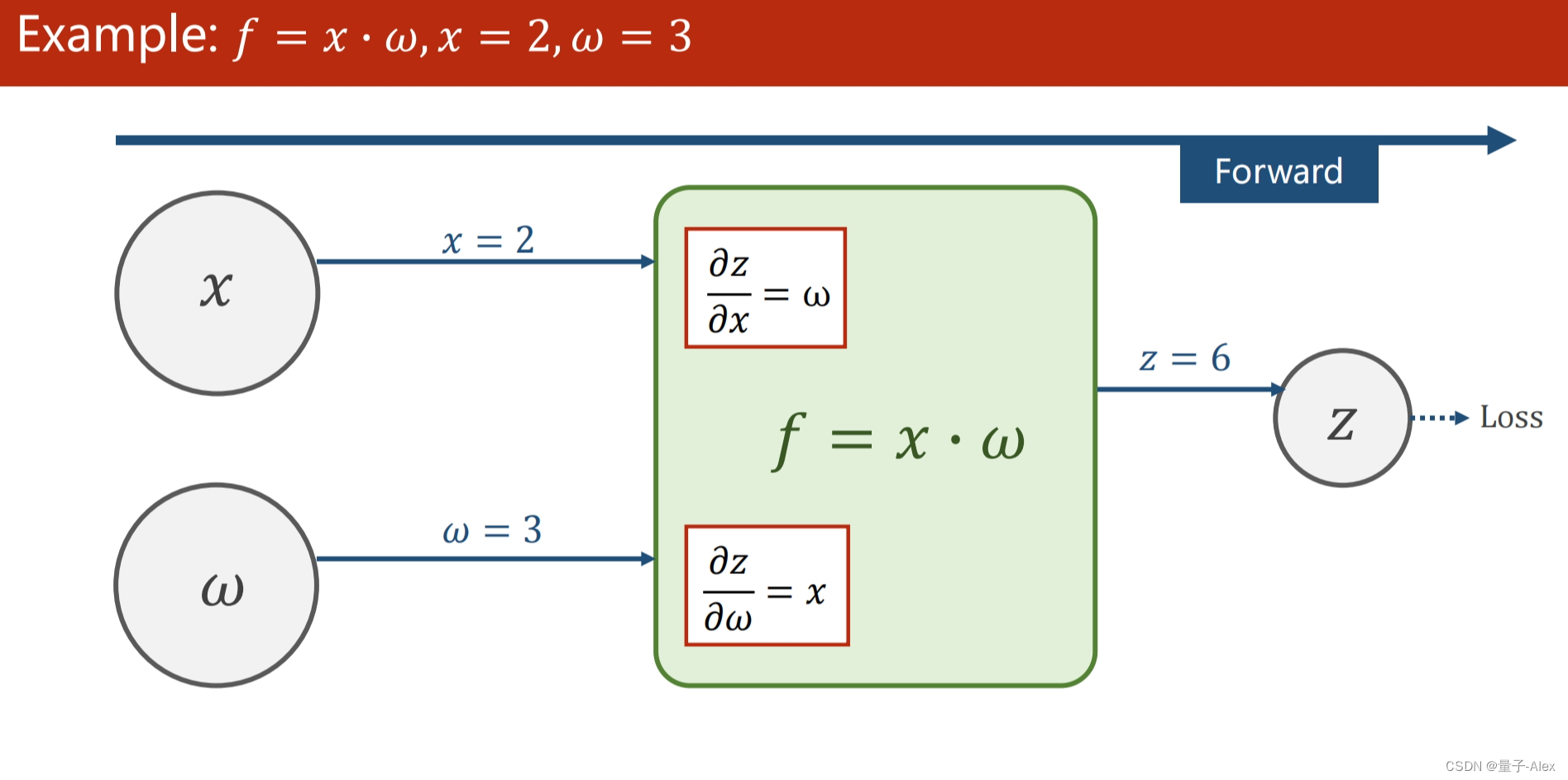

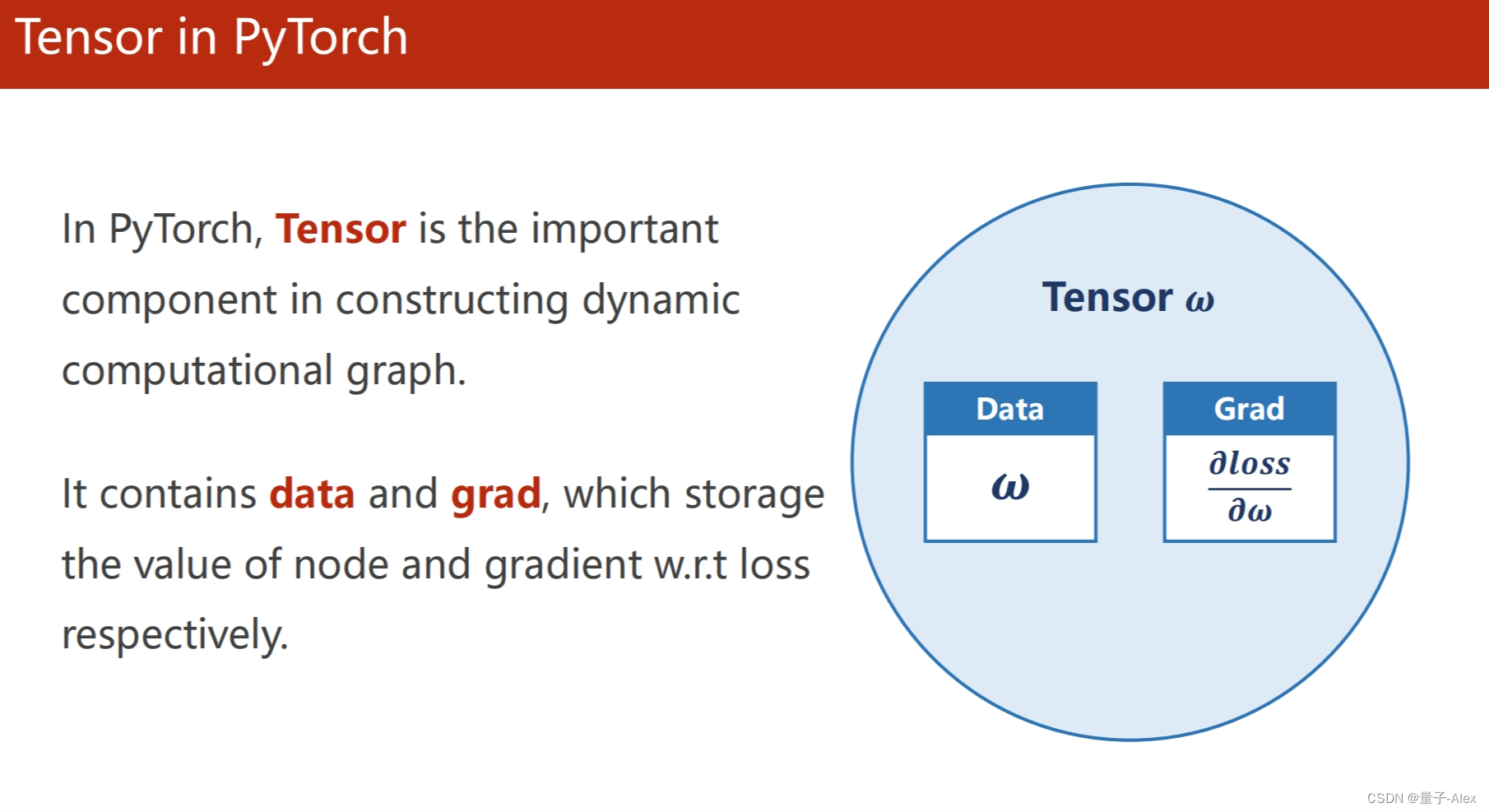

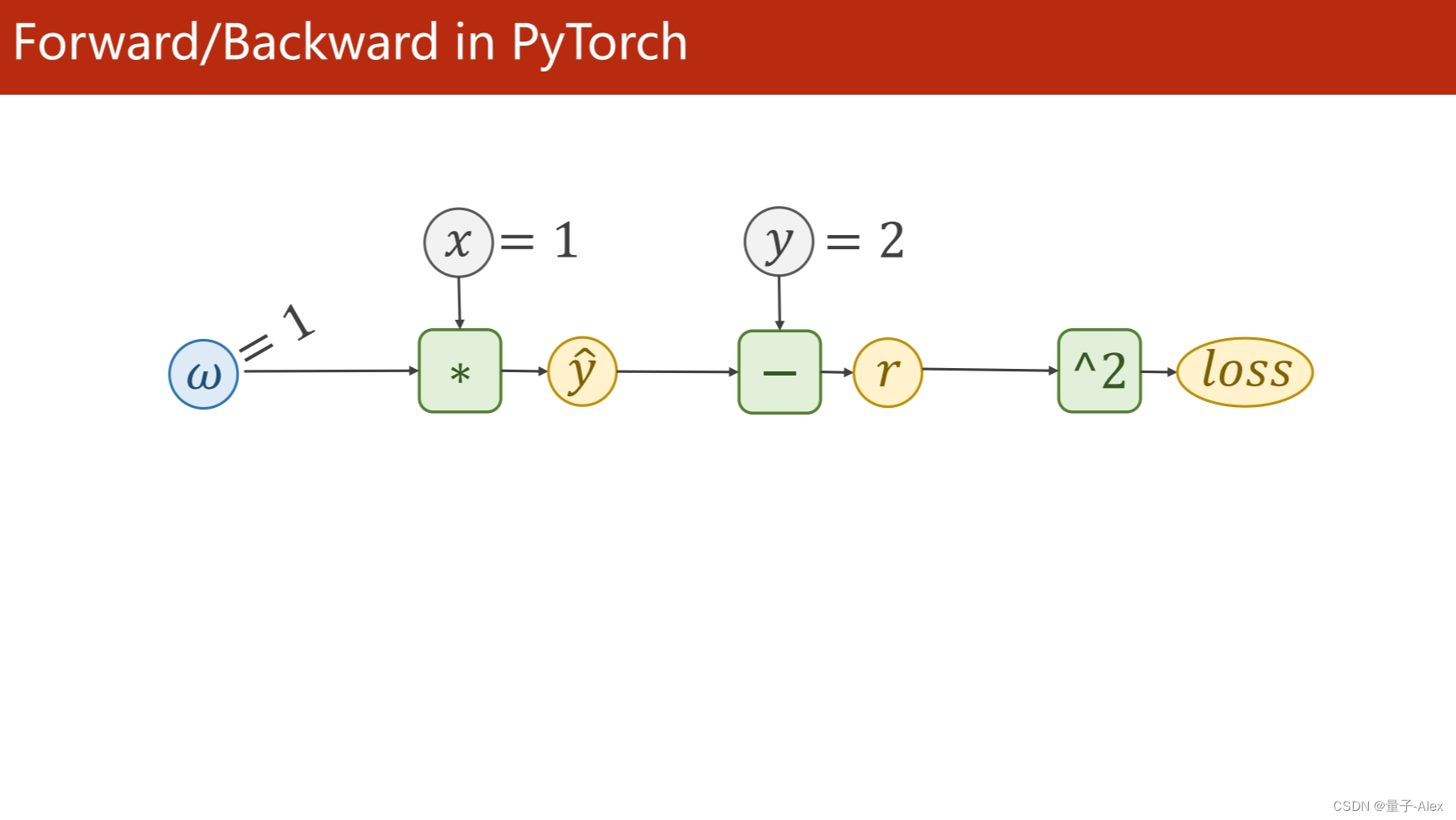

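PyTorch builds its computational graph dynamically. The graph is recorded while the forward pass runs, so the training loop builds a new graph each time it computes the loss.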
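One consequence of the dynamic graph is that by default `backward()` frees the graph once it has run. For that reason the loss has to be recomputed before every backward pass. The sketch below shows a second `backward()` on the same graph failing, and then succeeding when `retain_graph=True` is passed to the first call. The loss expression is illustrative and uses the sample (2.0, 4.0) from the training data.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)

# The graph is recorded while this expression is evaluated
l = (2.0 * w - 4.0) ** 2
print(l.grad_fn)               # the last recorded op, e.g. <PowBackward0 object at ...>

l.backward()                   # by default the graph's saved tensors are freed here
try:
    l.backward()               # try to reuse the freed graph
    second_ok = True
except RuntimeError as err:
    second_ok = False
    print('second backward failed:', err)

# Asking autograd to keep the graph makes a second pass possible
l2 = (2.0 * w - 4.0) ** 2
l2.backward(retain_graph=True)
l2.backward()                  # succeeds because the graph was retained
retained_ok = True
```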

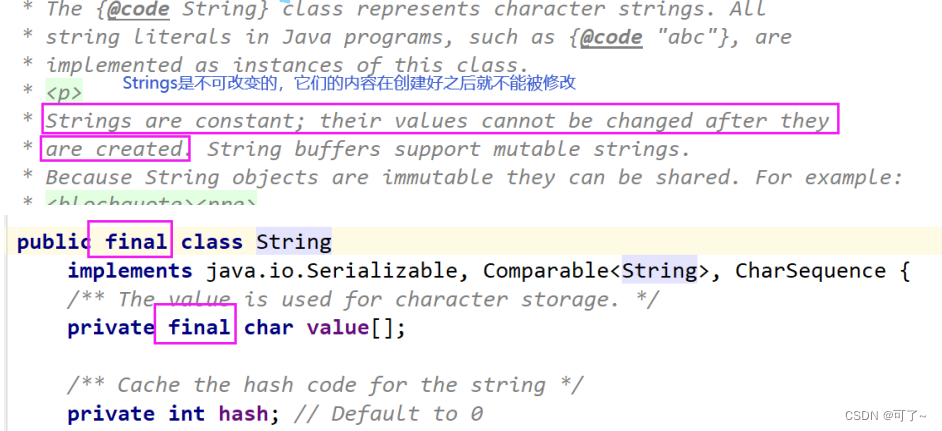

A tensor holds both its data (`.data`) and its gradient (`.grad`).
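The sketch below looks at those two parts of a tensor that requires grad. `.data` holds the values. `.grad` starts as `None` and is filled by `backward()`. The sketch also shows that gradients accumulate across `backward()` calls, which is why the training loop calls `w.grad.zero_()` after every update. The list `grads` is added here only to record the values.

```python
import torch

w = torch.tensor([1.0], requires_grad=True)
print(w.data)    # tensor([1.])
print(w.grad)    # None: nothing has been backpropagated yet

x, y = 2.0, 4.0
grads = []
for step in range(2):
    l = (x * w - y) ** 2         # a fresh graph each time
    l.backward()                 # adds dl/dw = -8 into w.grad
    grads.append(w.grad.item())

print(grads)     # [-8.0, -16.0]: the second call added to the first

w.grad.zero_()   # reset before the next update
print(w.grad.item())   # 0.0
```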