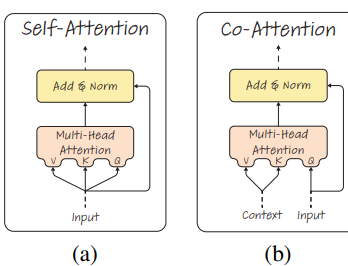

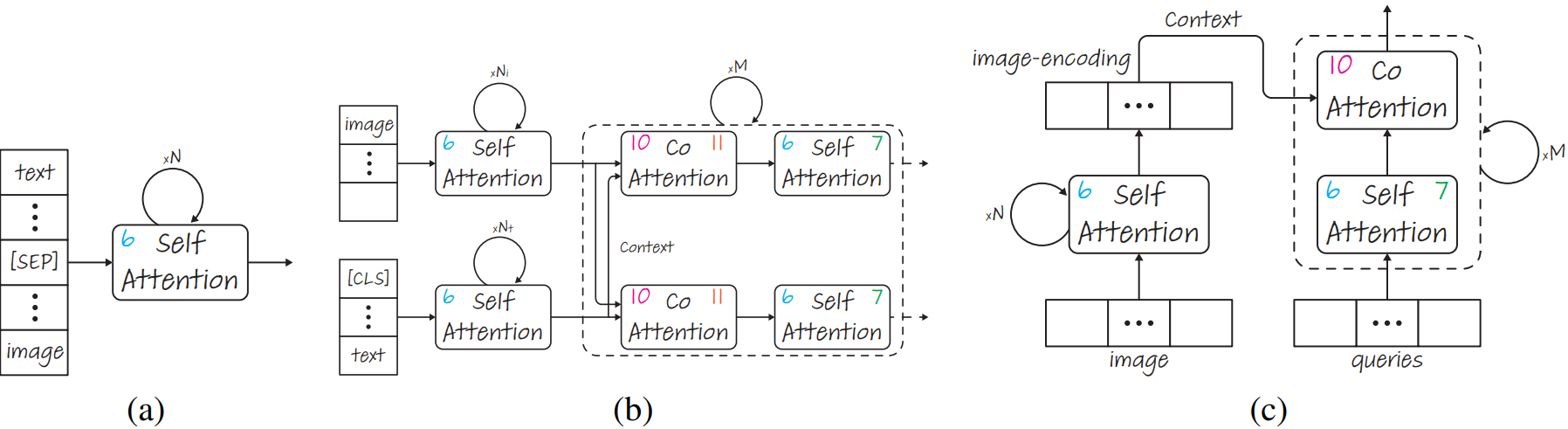

The two modalities are separated by the [SEP] token; the number in each attention module refers to the corresponding equation number.

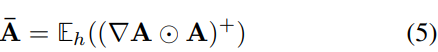

$\mathbb{E}_h$ is the mean across attention heads, $\nabla A := \frac{\partial y_t}{\partial A}$, where $y_t$ is the model's output, and $\odot$ is the Hadamard product; negative contributions are removed before averaging.
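Read together, these definitions suggest a head-averaged map $\bar{A} = \mathbb{E}_h\big((\nabla A \odot A)^{+}\big)$. Below is a minimal pure-Python sketch of that step under this assumption. The function name `head_averaged_relevance` and the `[heads][tokens][tokens]` list layout are hypothetical, and the gradients are passed in as plain numbers rather than computed by autograd.

```python
from typing import List

Matrix = List[List[float]]


def head_averaged_relevance(attn: List[Matrix], grad: List[Matrix]) -> Matrix:
    """Sketch (assumed formula): A_bar = E_h[(grad ⊙ attn)^+].

    attn[h][i][j] is the attention weight of head h, and grad[h][i][j]
    is the gradient of the model output y_t w.r.t. that weight.
    """
    num_heads = len(attn)
    n_rows, n_cols = len(attn[0]), len(attn[0][0])
    out = [[0.0] * n_cols for _ in range(n_rows)]
    for h in range(num_heads):
        for i in range(n_rows):
            for j in range(n_cols):
                # Element-wise (Hadamard) product of gradient and attention
                weighted = grad[h][i][j] * attn[h][i][j]
                # Drop negative contributions before averaging
                out[i][j] += max(weighted, 0.0)
    # Mean over the heads dimension
    return [[v / num_heads for v in row] for row in out]


if __name__ == "__main__":
    # Two heads over two tokens (toy numbers)
    attn = [[[0.5, 0.5], [1.0, 0.0]], [[0.2, 0.8], [0.6, 0.4]]]
    grad = [[[1.0, -2.0], [0.5, 3.0]], [[2.0, 1.0], [-1.0, 0.5]]]
    print(head_averaged_relevance(attn, grad))
```

Clamping before the mean (rather than after) keeps a strongly negative head from cancelling a positive one in the average.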

$R^{qq}$ is the aggregated self-attention matrix, and the previous layers' mixture of context is embodied by $R^{qk}$.
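The following is a minimal sketch of how these two matrices could be carried through one self-attention layer over the $q$ tokens. It assumes the update rules $R^{qq} \leftarrow R^{qq} + \bar{A} R^{qq}$ and $R^{qk} \leftarrow R^{qk} + \bar{A} R^{qk}$, with $R^{qq}$ initialised to the identity and $R^{qk}$ to zeros. These rules and all helper names (`self_attention_update`, `matmul`, `add`, `identity`, `zeros`) are assumptions for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def identity(n: int) -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


def self_attention_update(a_bar: Matrix, r_qq: Matrix,
                          r_qk: Matrix) -> Tuple[Matrix, Matrix]:
    """Assumed rules for a self-attention layer over the q tokens:
    R^{qq} <- R^{qq} + A_bar @ R^{qq}
    R^{qk} <- R^{qk} + A_bar @ R^{qk}
    a_bar is n_q x n_q, r_qq is n_q x n_q, r_qk is n_q x n_k.
    """
    return add(r_qq, matmul(a_bar, r_qq)), add(r_qk, matmul(a_bar, r_qk))


if __name__ == "__main__":
    # 2 query-modality tokens, 3 tokens in the other modality
    r_qq, r_qk = identity(2), zeros(2, 3)
    a_bar = [[0.5, 0.5], [0.0, 1.0]]  # toy head-averaged relevance map
    for _ in range(2):  # two stacked self-attention layers
        r_qq, r_qk = self_attention_update(a_bar, r_qq, r_qk)
    print(r_qq, r_qk)
```

Under these rules a pure self-attention layer leaves an all-zero $R^{qk}$ untouched, so $R^{qk}$ only becomes non-zero once some other (e.g. bi-modal) attention layer writes into it; afterwards, self-attention layers keep mixing that context forward.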

Thoughts

The authors ran their experiments on the COCO and ImageNet validation sets, which makes them hard to follow.
