Table of Contents

- 5. Turing Machine

- 5.1 TM Configuration

- 5.2 TM Transitions

- 5.3 TM Computation

- 5.4 Language accepted by TM

- 5.5 Decider

- 5.6 Multi-tape TM

- 5.6.1 Multi-tape TM equivalent to 1-tape TM

- 5.7 Nondeterministic TM

- 5.7.1 Address

- 5.7.2 NTM equivalent to TM

- 5.8 Enumerable Language and Enumerator

- 5.9 Encoding

- 5.9.1 Encoding of Graph

- 5.9.2 TM to decide the connectedness of a Graph

- 6. Decidable Languages

- 6.1 Decidability

- 6.2 Unsolvable and Undecidable problems

- 6.3 Countable set

- 7. Reducibility

- 7.1 Computation histories

- 7.2 Computable functions

- 7.3 Mapping Reducibility

- 7.4 Algorithm and Information

- 7.4.1 Algorithm

- 7.4.2 Information

- 7.5 Recursively Enumerable Languages

- 7.5.1 Enumerability

- 7.5.2 Recursively Enumerable Languages

- 7.5.3 Decidability and Undecidability

- 7.5.4 The Language $L_{TM}$

- 7.5.5 Enumerator

- 7.6 Complexity Theory

- 7.6.1 Running time

- 7.6.2 Asymptotic Notation

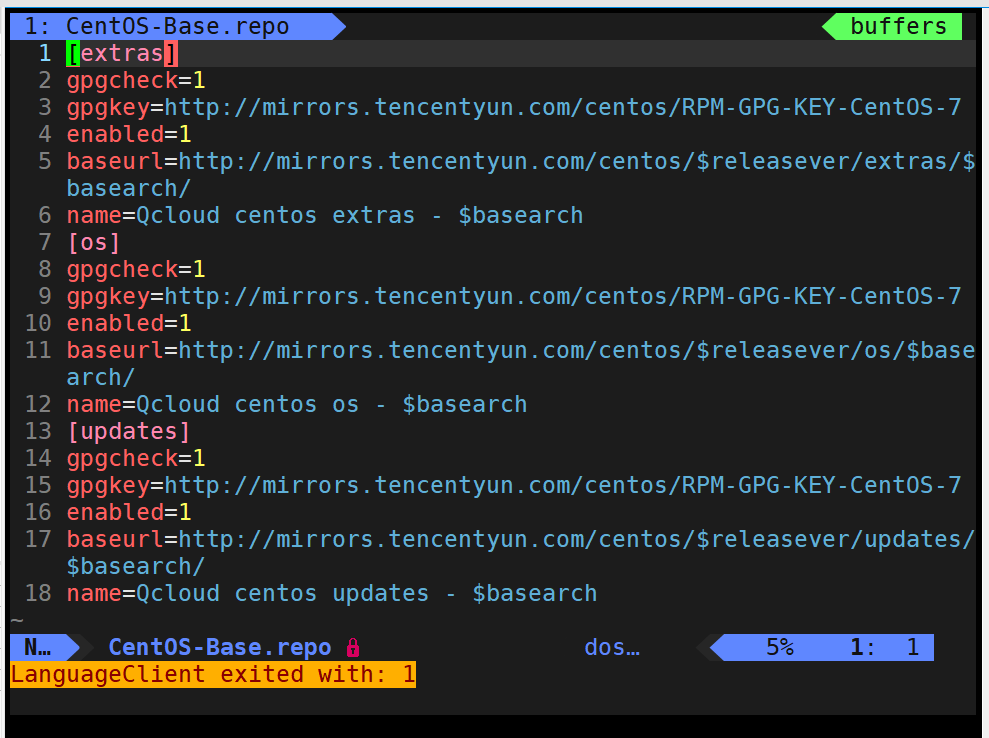

5. Turing Machine

- $k\ge1$ infinitely long tapes (each tape is infinite both to the left and to the right), divided into cells. Each cell stores a symbol belonging to $\Gamma$ (the tape alphabet).

- The tape head can move both right and left, one cell per move. It reads from or writes to a tape.

- The state control can be in any one of a finite number of states $Q$. Each step is based on the current state and the symbol read from the tape.

- Machine has one start state, one accept state and one reject state.

- Machine can run forever: infinite loop.

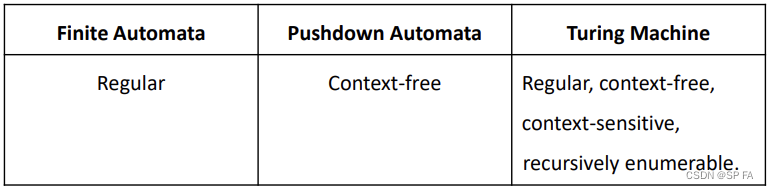

Properties of Turing Machine

- Turing machine can both read from tape and write on it.

- Tape head can move both right and left.

- Tape is infinite and can be used for storage.

- Accept and reject states take immediate effect.

A Turing machine (TM) is a 7-tuple $M=(\Sigma,\Gamma,Q,\delta,q,q_{accept},q_{reject})$, where

- $\Sigma$ is a finite set, called the input alphabet; the blank symbol is not contained in $\Sigma$

- $\Gamma$ is a finite set, called the tape alphabet; this alphabet contains the blank symbol, and $\Sigma\subseteq\Gamma$

- $Q$ is a finite set, whose elements are called states

- $q$ is an element of $Q$; it is called the start state

- $q_{accept}$ is an element of $Q$; it is called the accept state

- $q_{reject}$ is an element of $Q$; it is called the reject state, $q_{reject}\ne q_{accept}$

- $\delta$ is called the transition function, which is a function $\delta:Q\times\Gamma\rightarrow Q\times\Gamma\times\{L,R,N\}$

$L$: move left, $R$: move right, $N$: no move.

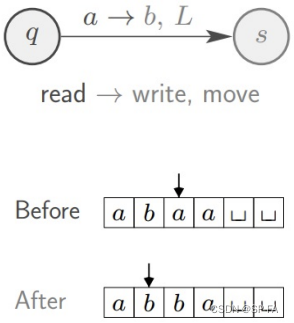

Transition function

$\delta(q,a)=(s,b,L)$

If TM

- in state $q\in Q$

- tape head reads tape symbol $a\in\Gamma$

Then TM

- moves to state $s\in Q$

- overwrites $a$ with $b\in\Gamma$

- moves head left

Computation steps

- Before the computation step, the Turing machine is in a state $r\in Q$, and the tape head is on a certain cell.

- TM $M$ proceeds according to the transition function: $\delta:Q\times\Gamma\rightarrow Q\times\Gamma\times\{L,R,N\}$

- Depending on $r$ and the $k$ symbols read from the tape, the machine:

  - switches to a state $r'\in Q$

  - writes a symbol of $\Gamma$ in the cell the tape head is currently scanning

  - moves the tape head one cell to the left or right, or leaves it at the current cell.

- Computation continues until $q_{reject}$ or $q_{accept}$ is entered.

- Otherwise, $M$ will run forever (the input string is neither accepted nor rejected)

Start configuration

The input is a string over the input alphabet $\Sigma$. Initially, this input string is stored on the first tape, and the head of this tape is on the leftmost symbol of the input string.

Computation and termination

Starting in the start configuration, the Turing machine performs a sequence of computation steps. The computation terminates at the moment when the Turing machine enters the accept state $q_{accept}$ or the reject state $q_{reject}$. (If the machine never enters $q_{accept}$ or $q_{reject}$, the computation does not terminate.)

Acceptance

The Turing machine $M$ accepts the input string $w\in\Sigma^*$ if the computation on this input terminates in the state $q_{accept}$.
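The transition function, start configuration, termination, and acceptance rules can be put together in a small simulator. This is a minimal sketch and not part of the original notes: the names `run_tm` and `EVEN_ONES`, the `_` spelling of the blank symbol, and the `max_steps` cutoff are assumptions made for illustration. The cutoff exists because a real TM may run forever, so the sketch reports "no verdict" instead of looping.

```python
from collections import defaultdict

BLANK = "_"  # assumed spelling of the blank symbol


def run_tm(delta, start, accept, reject, w, max_steps=10_000):
    """Simulate a one-tape TM on input w.

    delta maps (state, symbol) to (new_state, written_symbol, move),
    with move in {"L", "R", "N"}. Returns "accept", "reject", or
    "no verdict" when the step budget runs out.
    """
    tape = defaultdict(lambda: BLANK)  # unwritten cells read as blank
    for i, symbol in enumerate(w):
        tape[i] = symbol
    state, head, steps = start, 0, 0  # head starts on the leftmost input symbol
    while state not in (accept, reject):
        if steps == max_steps:
            return "no verdict"  # the real machine might still halt later
        state, written, move = delta[(state, tape[head])]
        tape[head] = written
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1
    return "accept" if state == accept else "reject"


# Hypothetical example machine: accepts binary strings with an even number of 1s.
EVEN_ONES = {
    ("even", "0"): ("even", "0", "R"),
    ("even", "1"): ("odd", "1", "R"),
    ("even", BLANK): ("acc", BLANK, "N"),
    ("odd", "0"): ("odd", "0", "R"),
    ("odd", "1"): ("even", "1", "R"),
    ("odd", BLANK): ("rej", BLANK, "N"),
}

print(run_tm(EVEN_ONES, "even", "acc", "rej", "1011"))  # input with three 1s
print(run_tm(EVEN_ONES, "even", "acc", "rej", "1001"))  # input with two 1s
```

Storing the tape in a `defaultdict` keyed by integer position is one simple way to model a tape that is infinite in both directions.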

5.1 TM Configuration

A configuration of a TM $M=(Q,\Sigma,\Gamma,\delta,q,q_{accept},q_{reject})$ is a string $uqv$ with $u,v\in\Gamma^*$ and $q\in Q$, and specifies that currently

- $M$ is in state $q$

- the tape contains $uv$

- the tape head is pointing to the cell containing the first symbol in $v$

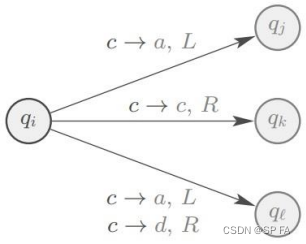

5.2 TM Transitions

Configuration $C_1$ yields configuration $C_2$ if the Turing machine can legally go from $C_1$ to $C_2$ in a single step. For TM $M=(Q,\Sigma,\Gamma,\delta,q,q_{accept},q_{reject})$, suppose

- $u,v\in\Gamma^*$

- $a,b,c\in\Gamma$

- $q_i,q_j\in Q$

- transition function $\delta:Q\times\Gamma\rightarrow Q\times\Gamma\times\{L,R\}$
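With these symbols, the "yields" relation is usually written as the two rules below. This is the standard textbook formulation, given here as an assumption about what the notes intend, since the rules themselves are not spelled out in the text:

$$ua\,q_i\,bv\ \text{ yields }\ u\,q_j\,acv\quad\text{if }\delta(q_i,b)=(q_j,c,L)$$

$$ua\,q_i\,bv\ \text{ yields }\ uac\,q_j\,v\quad\text{if }\delta(q_i,b)=(q_j,c,R)$$

In both cases the symbol $b$ under the head is overwritten with $c$; only the position of the state symbol in the string changes with the direction of the move.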

5.3 TM Computation

Given a TM $M=(Q,\Sigma,\Gamma,\delta,q,q_{accept},q_{reject})$ and an input string $w\in\Sigma^*$, $M$ accepts input $w$ if there is a finite sequence of configurations $C_1,C_2,\dots,C_k$ for some $k\ge1$ with

- $C_1$ is the starting configuration $q_0w$, where $q_0$ denotes the start state (written $q$ in the tuple)

- $C_i$ yields $C_{i+1}$ for all $i=1,\dots,k-1$ (the sequence of configurations obeys the transition function $\delta$)

- $C_k$ is an accepting configuration $uq_{accept}v$ for some $u,v\in\Gamma^*$

5.4 Language accepted by TM

The language $L(M)$ accepted by the Turing machine $M$ is the set of all strings in $\Sigma^*$ that are accepted by $M$.

Language $A$ is Turing-recognizable if there is a TM $M$ such that $A=L(M)$

- Also called recursively enumerable or enumerable language.

- On an input $w\notin L(M)$, the machine $M$ can either halt in a rejecting state, or it can loop indefinitely.

- Turing-recognizability alone is not practical, because we never know whether the TM will eventually halt.

5.5 Decider

A decider is a TM that halts on all inputs.

Language $A=L(M)$ is decided by TM $M$ if on each possible input $w\in\Sigma^*$, the TM finishes in a halting configuration

- $M$ ends in $q_{accept}$ for each $w\in A$

- $M$ ends in $q_{reject}$ for each $w\notin A$

$A$ is Turing-decidable if there exists a TM $M$ that decides $A$

- Also called recursive or decidable language.

- Differences to Turing-recognizable language:

  - A Turing-decidable language has a TM that halts on every string $w\in\Sigma^*$

  - A TM for a Turing-recognizable language may loop on strings $w$ that are not in this language

5.6 Multi-tape TM

- Each tape has its own head

- Transition determined by

- state

- the content read by all heads

- Reading and writing of each head are independent of others

A k-tape Turing machine (TM) is a 7-tuple $M=(\Sigma,\Gamma,Q,\delta,q,q_{accept},q_{reject})$ with $k$ different tapes and $k$ different read/write heads, where

- $\Sigma$ is a finite set, called the input alphabet; the blank symbol $\epsilon$ is not contained in $\Sigma$.

- $\Gamma$ is a finite set, called the tape alphabet; this alphabet contains the blank symbol $\epsilon$, and $\Sigma\subseteq\Gamma$

- $Q$ is a finite set, whose elements are called states

- $q$ is an element of $Q$; it is called the start state

- $q_{accept}$ is an element of $Q$; it is called the accept state

- $q_{reject}$ is an element of $Q$; it is called the reject state

- $\delta$ is called the transition function, which is a function $\delta:Q\times\Gamma^k\rightarrow Q\times\Gamma^k\times\{L,R,N\}^k$

$\Gamma^k=\Gamma\times\Gamma\times\cdots\times\Gamma$

Transition function

$\delta:Q\times\Gamma^k\rightarrow Q\times\Gamma^k\times\{L,R,N\}^k$

Given $\delta(q_i,a_1,a_2,\cdots,a_k)=(q_j,b_1,b_2,\cdots,b_k,L,R,\cdots,L)$: if the machine is in state $q_i$ and heads $1$ through $k$ read the symbols $a_1,\cdots,a_k$, then it moves to state $q_j$, writes $b_1,\cdots,b_k$, and moves each head in the direction listed for it.

5.6.1 Multi-tape TM equivalent to 1-tape TM

simulate k-tape TM using 1-tape TM

Proof

Let TM $M=(\Sigma,\Gamma,Q,\delta,q,q_{accept},q_{reject})$ be a k-tape TM.

$M$ has:

- input $w=w_1w_2\cdots w_n$ on the first tape

- other tapes contain only blanks $\epsilon$

- each head points to the first cell.

Construct a 1-tape TM $M^\prime$ by extending the tape alphabet to $\Gamma^\prime=\Gamma\cup\dot{\Gamma}\cup\{\#\}$, where $\dot{\Gamma}$ contains a dotted copy of each symbol of $\Gamma$. A dotted symbol marks the head position of one of the simulated tapes, and $\#$ separates the contents of the different tapes.

For each step of k-tape TM $M$, 1-tape $M^\prime$ operates its tape as:

- At the start of the simulation, the tape head of $M^\prime$ is on the leftmost $\#$

- Scans the tape from the first $\#$ to the $(k+1)$st $\#$ to read the symbols under the heads.

- Rescans to write the new symbols and move the heads.
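The single-tape layout can be sketched as follows. This is an illustration only: the function names `encode_tapes` and `read_heads` are hypothetical, and a trailing `.` after a symbol is an assumed ASCII stand-in for the dotted symbols (so tape symbols are assumed to be single characters other than `.`).

```python
def encode_tapes(tapes, heads):
    """Lay k tapes out on one tape as  # tape1 # tape2 # ... # tapek #.

    tapes: list of non-empty strings, one per simulated tape.
    heads: head position (index) on each simulated tape.
    """
    cells = ["#"]
    for tape, head in zip(tapes, heads):
        for i, symbol in enumerate(tape):
            # a dotted symbol marks where this tape's virtual head sits
            cells.append(symbol + "." if i == head else symbol)
        cells.append("#")
    return cells


def read_heads(cells):
    """One left-to-right scan that collects the symbol under each virtual head."""
    return [cell[0] for cell in cells if cell.endswith(".")]


cells = encode_tapes(["01", "_", "ab"], [0, 0, 1])
print(cells)
print(read_heads(cells))
```

For $k$ tapes the layout contains exactly $k+1$ separators, which is why the scan runs from the first `#` to the $(k+1)$st `#`.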

Turing recognizable & Multiple-tape TM

Language $L$ is TM-recognizable if and only if some multi-tape TM recognizes $L$.

5.7 Nondeterministic TM

A nondeterministic Turing machine (NTM) $M$ can have several options at every step. It is defined by the 7-tuple $M=(\Sigma,\Gamma,Q,\delta,q,q_{accept},q_{reject})$, where

- $\Sigma$ is the input alphabet (without the blank)

- $\Gamma$ is the tape alphabet with $\{\epsilon\}\cup\Sigma\subseteq\Gamma$

- $Q$ is a finite set, whose elements are called states

- $\delta$ is the transition function $\delta:Q\times\Gamma\rightarrow P(Q\times\Gamma\times\{L,R\})$, where $P$ denotes the power set

- $q\in Q$ is the start state

- $q_{accept}\in Q$ is the accept state

- $q_{reject}\in Q$ is the reject state

Transition

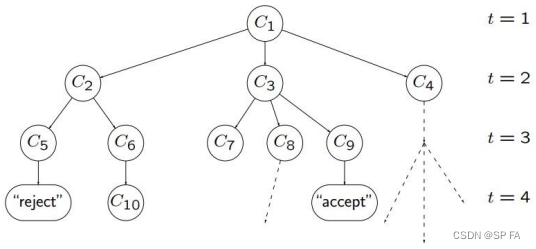

Computation

With any input $w$, the computation of an NTM is represented by a configuration tree.

If there exists at least one accepting leaf, then the NTM accepts.

5.7.1 Address

Every node in the tree has at most $b$ children, where $b$ is the size of the largest set of possible choices given by $N$'s transition function.

- Every node in the tree has an address that is a string over the alphabet $\Gamma_b=\{1,2,\cdots,b\}$

5.7.2 NTM equivalent to TM

Every nondeterministic TM has an equivalent deterministic TM.

Proof

- Build TM $D$ to simulate NTM $N$ on each input $w$. $D$ tries all possible branches of $N$'s tree of configurations.

- If $D$ finds any accepting configuration, then it accepts input $w$.

- If all branches reject, then $D$ rejects input $w$.

- If no branch accepts and at least one loops, then $D$ loops on $w$.

$D$ uses three tapes (an input tape, a simulation tape, and an address tape) and works in the following stages:

1. Initially, the input tape contains the input string $w$. The simulation and address tapes are initially empty.

2. Copy the input tape to the simulation tape.

3. Use the simulation tape to simulate NTM $N$ on input $w$ along the path in the tree from the root to the address on the address tape.

   - At each node, consult the next symbol on the address tape to determine which branch to take.

   - Accept if an accepting configuration is reached.

   - Skip to the next stage if

     - the symbols on the address tape are exhausted

     - the nondeterministic choice is invalid

     - a rejecting configuration is reached

4. Replace the string on the address tape with the next string in $\Gamma_b^*$ in string order, and go to Stage 2.
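The "next string in string order" step can be sketched as a generator. This is a minimal illustration, with the hypothetical name `addresses` and with addresses represented as tuples of integers rather than as strings on a tape:

```python
from itertools import count, islice, product


def addresses(b):
    """Yield every address over {1, ..., b}: shorter first, then lexicographic."""
    for length in count(0):
        for address in product(range(1, b + 1), repeat=length):
            yield address


# The root has the empty address; its children are (1,), (2,), and so on.
print(list(islice(addresses(2), 8)))
```

Because shorter addresses always come first, the tree is explored breadth-first, so an accepting node at finite depth is eventually reached even if some other branch never halts.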

Turing recognizable & Multiple-tape TM

Language $L$ is TM-recognizable if and only if some NTM recognizes it. Multi-tape TMs and NTMs are not more powerful than standard TMs.

Turing decidable & NTM decidable

A nondeterministic TM is a decider if all branches halt on all inputs. A language is decidable if and only if some nondeterministic TM decides it.

5.8 Enumerable Language and Enumerator

A language is enumerable if some TM recognizes it.

An enumerator is usually represented as a 2-tape Turing machine with one working tape and one print tape.

Language $A$ is Turing-recognizable if and only if some enumerator enumerates it.

5.9 Encoding

The input to a Turing machine is a string of symbols over an alphabet.

When we want TMs to work on other kinds of objects, we need to encode each object as a string of symbols over an alphabet.

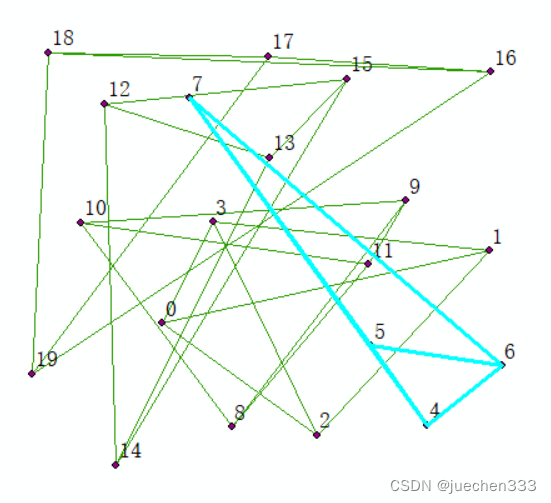

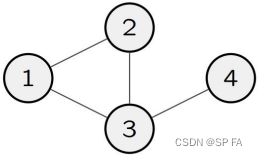

5.9.1 Encoding of Graph

Given an undirected graph $G$, the encoding $\langle G\rangle$ of $G$ is a string of symbols over some alphabet $\Sigma$, where the string starts with a list of nodes, followed by a list of edges.

5.9.2 TM to decide the connectedness of a Graph

An undirected graph is connected if every node can be reached from any other node by travelling along edges. Let $A$ be the language consisting of strings representing connected undirected graphs.

On input $\langle G\rangle\in\Omega$, where $G$ is an undirected graph

1. Check if $\langle G\rangle$ is a valid graph encoding. If not, reject.

2. Select the first node of $G$ and mark it.

3. Repeat until no new nodes are marked.

4. For each node in $G$, mark it if it is attached by an edge to a node already marked.

5. Scan all nodes of $G$ to see whether they all are marked. If they are, accept; otherwise, reject.

$\Omega$ denotes the universe of a decision problem, comprising all instances.

For TM $M$ that decides $A=\{\langle G\rangle\mid G\text{ is a connected undirected graph}\}$, the universe is $\Omega=\{\langle G\rangle\mid G\text{ is an undirected graph}\}$.

Step 1 checks that the input $\langle G\rangle\in\Omega$ is a valid encoding:

- Two lists

  - The first is a list of numbers

  - The second is a list of pairs of numbers

- The first list contains no duplicates

- Every node in the second list appears in the first list

Steps 2-5 check if $G$ is connected.
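The marking procedure can be sketched in ordinary code. This is an illustration under stated assumptions: the graph is passed as a Python list of nodes and a list of node pairs instead of a string encoding, the name `is_connected` is hypothetical, and treating the empty graph as connected is a choice made here, not something the notes specify.

```python
def is_connected(nodes, edges):
    """Decide connectedness of an undirected graph by repeated marking."""
    # Step 1: validate the encoding (no duplicate nodes, edges use known nodes).
    if len(set(nodes)) != len(nodes):
        return False
    if any(u not in nodes or v not in nodes for u, v in edges):
        return False
    if not nodes:
        return True  # assumption: the empty graph counts as connected
    # Step 2: mark the first node.
    marked = {nodes[0]}
    # Steps 3-4: keep marking neighbours of marked nodes until nothing changes.
    changed = True
    while changed:
        changed = False
        for u, v in edges:
            if (u in marked) != (v in marked):  # exactly one endpoint is marked
                marked.update((u, v))
                changed = True
    # Step 5: accept only if every node ended up marked.
    return marked == set(nodes)


print(is_connected([1, 2, 3, 4], [(1, 2), (2, 3), (3, 1), (1, 4)]))
print(is_connected([1, 2, 3], [(1, 2)]))
```

Each pass of the loop either marks at least one new node or stops, so the procedure halts after at most as many passes as there are nodes, which is what makes it a decider.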

6. Decidable Languages

6.1 Decidability

Let $\Sigma$ be an alphabet and let $L\subseteq\Sigma^*$ be a language. We say that $L$ is decidable, if there exists a Turing machine $M$, such that for every string $w\in\Sigma^*$, the following holds:

- If $w\in L$, then the computation of the Turing machine $M$, on the input string $w$, terminates in the accept state.

- If $w\notin L$, then the computation of the Turing machine $M$, on the input string $w$, terminates in the reject state.

Given a language $L_B$ whose elements are pairs of the form $(B,w)$, where

- $B$ is some computation model.

- $w$ is a string over the alphabet $\Sigma$

The pair $(B,w)\in L_B$ iff $B$ accepts $w$.

Since the input to a Turing machine is a string over an alphabet, we must encode the pair $(B,w)$ as a string.

6.1.1 The Language $L_{DFA}$ is decidable

Decision problem: Does a given DFA $B$ accept a given string $w$?

$$\begin{aligned}L_{DFA}&=\{\langle B,w\rangle\mid B\text{ is a DFA that accepts }w\}\subseteq\Omega\\\Omega&=\{\langle B,w\rangle\mid B\text{ is a DFA and }w\text{ is a string}\}\end{aligned}$$

To prove $L_{DFA}$ is decidable, we need to construct a TM $M$ that decides $L_{DFA}$.

For $M$ that decides $L_{DFA}$:

- take $\langle B,w\rangle\in\Omega$ as input

- halt and accept if $\langle B,w\rangle\in L_{DFA}$

- halt and reject if $\langle B,w\rangle\notin L_{DFA}$

Proof

On input $\langle B,w\rangle\in\Omega$, where

- $B=(\Sigma,Q,\delta,q_0,F)$ is a DFA.

- $w=w_1w_2\cdots w_n\in\Sigma^*$ is the input string to process on $B$

1. Check if $\langle B,w\rangle$ is a “proper” encoding. If not, reject.

2. Simulate $B$ on $w$ based on:

   - $q\in Q$, the current state of $B$

   - $i\in\{1,2,\cdots,|w|\}$, the pointer that indicates the current position in $w$.

   - $q$ changes in accordance with $w_i$ and the transition function $\delta(q,w_i)$.

3. If $B$ ends in $q\in F$, then $M$ accepts; otherwise, reject.
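The simulation step is short enough to write out directly. This is a minimal sketch: the DFA is passed as Python objects rather than as an encoded string, and the name `dfa_accepts` and the example automaton are hypothetical.

```python
def dfa_accepts(delta, start, accepting, w):
    """Run a DFA on w; delta maps (state, symbol) to the next state."""
    q = start
    for symbol in w:  # the loop variable plays the role of the pointer i
        if (q, symbol) not in delta:
            return False  # treated as an improper encoding: reject
        q = delta[(q, symbol)]
    return q in accepting


# Hypothetical example DFA: accepts binary strings that end in 1.
ENDS_IN_ONE = {
    ("a", "0"): "a", ("a", "1"): "b",
    ("b", "0"): "a", ("b", "1"): "b",
}

print(dfa_accepts(ENDS_IN_ONE, "a", {"b"}, "0101"))
print(dfa_accepts(ENDS_IN_ONE, "a", {"b"}, "10"))
```

The loop runs exactly $|w|$ times, so the procedure always halts, which is the point of the decidability proof.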

6.1.2 The Language $L_{NFA}$ is decidable

Proof

On input $\langle B,w\rangle\in\Omega$, where

- $B=(\Sigma,Q,\delta,q_0,F)$ is an NFA

- $w\in\Sigma^*$ is the input string to process on $B$.

1. Check if $\langle B,w\rangle$ is a “proper” encoding. If not, reject.

2. Transform NFA $B$ into an equivalent DFA $C$.

3. Run TM $M$ for $L_{DFA}$ on input $\langle C,w\rangle$
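A compact way to see why the NFA case also halts is to track the set of states the NFA could be in. Those sets are exactly the states of the subset-construction DFA, built lazily here instead of up front. This is a sketch under assumptions: no $\epsilon$-transitions, the NFA given as Python objects, and hypothetical names `nfa_accepts` and `SECOND_LAST_ONE`.

```python
def nfa_accepts(delta, start, accepting, w):
    """delta maps (state, symbol) to a set of possible next states."""
    current = {start}  # one state of the equivalent subset-construction DFA
    for symbol in w:
        current = {r for q in current for r in delta.get((q, symbol), set())}
    return bool(current & accepting)


# Hypothetical example NFA: accepts strings whose second-to-last symbol is 1.
SECOND_LAST_ONE = {
    ("s", "0"): {"s"},
    ("s", "1"): {"s", "t"},
    ("t", "0"): {"u"},
    ("t", "1"): {"u"},
}

print(nfa_accepts(SECOND_LAST_ONE, "s", {"u"}, "0110"))
print(nfa_accepts(SECOND_LAST_ONE, "s", {"u"}, "0101"))
```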

6.1.3 The Language $L_{REX}$ is decidable

- Check if $\langle R,w\rangle$ is a “proper” encoding. If not, reject.

- Transform regular expression $R$ into DFA $B$.

- Run TM $M$ for $L_{DFA}$ on input $\langle B,w\rangle$

6.1.4 CFGs are decidable

- Check if $\langle G,w\rangle$ is a proper encoding of a CFG and a string; if not, reject.

- Convert $G$ into an equivalent CFG $G^\prime$ in Chomsky normal form.

- If $w=\epsilon$, check if $S\rightarrow\epsilon$ is a rule of $G^\prime$. If so, accept; otherwise, reject.

- If $w\ne\epsilon$, list all derivations with $2n-1$ steps, where $n=|w|$

- If any generates $w$, accept; otherwise, reject.
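The bounded search over derivations can be sketched as below, assuming the grammar is already in Chomsky normal form. The representation (a dict from each variable to a list of rule bodies), the name `cnf_generates`, and the example grammar are assumptions for illustration. Pruning sentential forms longer than $n$ is an optimisation added here; it is safe because CNF rules never shrink a form.

```python
def cnf_generates(rules, start, w):
    """rules: variable -> list of bodies; a body is (A, B), (terminal,) or ()."""
    n = len(w)
    if n == 0:
        return () in rules.get(start, [])  # is S -> epsilon a rule?
    forms = {(start,)}
    for _ in range(2 * n - 1):  # a CNF derivation of w takes exactly 2n-1 steps
        next_forms = set()
        for form in forms:
            # index of the leftmost variable in this sentential form, if any
            i = next((j for j, s in enumerate(form) if s in rules), None)
            if i is None:
                continue  # all terminals already: cannot take another step
            for body in rules[form[i]]:
                new_form = form[:i] + body + form[i + 1:]
                if body and len(new_form) <= n:
                    next_forms.add(new_form)
        forms = next_forms
    return tuple(w) in forms


# Hypothetical CNF grammar for { a^k b^k : k >= 1 }.
ANBN = {
    "S": [("A", "B"), ("A", "T")],
    "T": [("S", "B")],
    "A": [("a",)],
    "B": [("b",)],
}

print(cnf_generates(ANBN, "S", "aabb"))
print(cnf_generates(ANBN, "S", "aab"))
```

Only leftmost derivations are explored, which is enough because every derivable string has a leftmost derivation of the same length.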

6.1.5 CFLs are decidable

- Let $L$ be a CFL

- $G^\prime$ be a CFG for language $L$.

- $S$ be a TM that decides $A_{CFG}=\{\langle G,w\rangle\mid G\text{ is a CFG that generates string }w\}$

- Construct TM $M_{G^\prime}$ for language $L$ having CFG $G^\prime$ as follows:

  - Run TM decider $S$ on input $\langle G^\prime,w\rangle$

  - If $S$ accepts, accept; otherwise, reject.

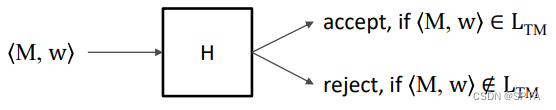

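6.1.6 The Language $L_{TM}$ is undecidable

The halting problem for Turing machines is undecidable: there is no Turing machine that can decide, for an arbitrary Turing machine and an arbitrary input, whether that machine halts.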

- Suppose $L_{TM}$ is decided by a TM $H$, with input $\langle M,w\rangle\in\Omega$

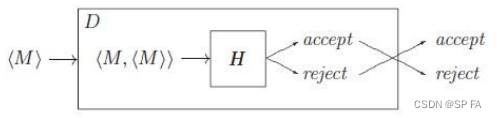

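- Use $H$ as a subroutine to construct a new TM $D$: on input $\langle M\rangle$, $D$ runs $H$ on $\langle M,\langle M\rangle\rangle$ and outputs the opposite of $H$'s answer.

- Now input the string $\langle D\rangle$ to $D$, that is, take $M=D$.

- If $D$ accepts $\langle D\rangle$, then $D$ rejects $\langle D\rangle$; if $D$ rejects $\langle D\rangle$, then $D$ accepts $\langle D\rangle$.

- Clearly a contradiction, so $H$ cannot exist.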

6.2 Unsolvable and Undecidable problems

Undecidable problem. The associated language of the problem cannot be recognized by a TM that halts for all inputs. (It is a problem that should give a “yes” or “no” answer, yet no algorithm exists that answers correctly on all inputs.)

Unsolvable problem. A computational problem that cannot be solved by a TM. Undecidable problems are a subcategory of unsolvable problems.

6.3 Countable set

Let $A$ and $B$ be two sets. We say that $A$ and $B$ have the same size, if there exists a bijection $f:A\rightarrow B$

Let $A$ be a set. We say that $A$ is countable, if $A$ is finite, or $A$ and $\mathbb{N}$ have the same size.

Uncountable set

A set is uncountable if it contains so many elements that there is no bijection between this set and the set of natural numbers ($\mathbb{N}$).

7. Reducibility

Reduction is a way of converting one problem to another problem, so that the solution to the second problem can be used to solve the first problem.

If $A$ reduces to $B$, then any solution of $B$ solves $A$ (reduction always involves two problems, $A$ and $B$).

- If $A$ is reducible to $B$, then $A$ cannot be harder than $B$.

- If $A$ is reducible to $B$ and $B$ is decidable, then $A$ is also decidable.

- If $A$ is reducible to $B$ and $A$ is undecidable, then $B$ is also undecidable.

A common strategy for proving that a language $L$ is undecidable is the reduction method. The typical approach to show that $L$ is undecidable via a reduction from $A$ to $L$ proceeds as follows:

- Find a problem $A$ known to be undecidable

- Suppose $L$ is decidable.

- Let $R$ be a TM that decides $L$.

- Use $R$ as a subroutine to construct another TM $S$ that decides $A$.

- But $A$ is not decidable.

- Conclusion: $L$ is not decidable.

7.1 Computation histories

An accepting computation history for a TM $M$ on a string $w$ is a sequence of configurations $C_1,C_2,\cdots,C_k$ for some $k\geq1$ such that the following properties hold:

- $C_1$ is the start configuration of $M$ on $w$.

- Each $C_j$ yields $C_{j+1}$

- $C_k$ is an accepting configuration.

A rejecting computation history for $M$ on $w$ is the same except that the last configuration $C_k$ is a rejecting configuration of $M$.

Accepting and rejecting computation histories are finite.

If $M$ does not halt on $w$, then no accepting or rejecting computation history exists.

Useful for both:

- deterministic TMs (one history).

- nondeterministic TMs (many histories).

$\langle M,w\rangle\notin A_{TM}$ is equivalent to

- there is no accepting computation history for $M$ on $w$

- all histories are non-accepting ones for $M$ on $w$

7.2 Computable functions

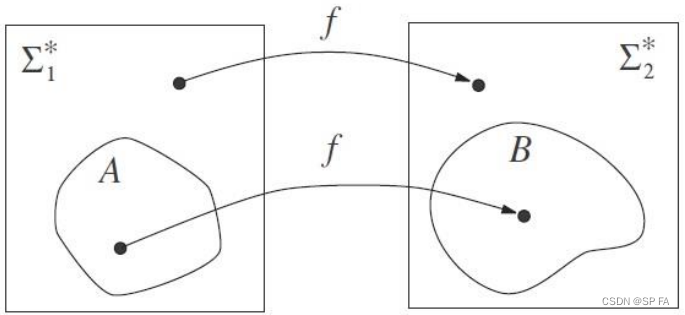

Suppose we have 2 languages $A$ and $B$, where

- $A$ is defined over alphabet $\Sigma_1$, so $A\subseteq\Sigma_1^*$

- $B\subseteq\Sigma_2^*$

Informally speaking, $A$ is reducible to $B$ if we can use a black box for $B$ to build an algorithm for $A$.

A function $f:\Sigma_1^*\rightarrow\Sigma_2^*$ is a computable function if some TM $M$, on every input $w\in\Sigma_1^*$, halts with just $f(w)\in\Sigma_2^*$ on its tape (that is, there exists a TM that computes this function).

One useful class of computable functions transforms one TM into another.

7.3 Mapping Reducibility

Suppose that $A$ and $B$ are two languages

- $A$ is defined over alphabet $\Sigma_1$, so $A\subseteq\Sigma_1^*$

- $B\subseteq\Sigma_2^*$

Then $A$ is mapping reducible to $B$, written $A\le_mB$, if there is a computable function $f:\Sigma_1^*\rightarrow\Sigma_2^*$ such that, for every $w\in\Sigma_1^*$, $$w\in A\Leftrightarrow f(w)\in B$$ The function $f$ is called a reduction of $A$ to $B$.

YES instance for problem $A$ $\Leftrightarrow$ YES instance for problem $B$.
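A toy instance may help make the definition concrete. Everything below is made up for illustration and is not from the notes: $A$ is the set of strings over $\{a,b\}$ with an even number of a's, $B$ is the set of binary strings of even length, and `f` is a reduction from $A$ to $B$.

```python
def decide_B(s):
    """Hypothetical decider for B = binary strings of even length."""
    return len(s) % 2 == 0


def f(w):
    """Computable reduction: output one '1' per occurrence of 'a' in w."""
    return "1" * w.count("a")


def decide_A(w):
    """Decider for A obtained by composing the reduction with the decider for B."""
    return decide_B(f(w))


print(decide_A("abba"))
print(decide_A("ab"))
```

Here $w\in A$ exactly when `f(w)` has even length, that is, exactly when $f(w)\in B$. Composing `f` with a decider for $B$ is all that is needed to decide $A$.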

Theorem

1. If $A\leq_mB$ and $B$ is decidable, then $A$ is decidable.

2. If $A\leq_mB$ and $B$ is Turing-recognizable, then $A$ is Turing-recognizable.

Corollary

3. If $A\leq_mB$ and $A$ is undecidable, then $B$ is undecidable.

4. If $A\leq_mB$ and $A$ is not Turing-recognizable, then $B$ is not Turing-recognizable.

7.4 Algorithm and Information

Algorithm is independent of computation model

All reasonable variants of TM models are equivalent to TM:

- k-tape TM

- nondeterministic TM

- enumerator

- random-access TM: head can jump to any cell in one step

Similarly, all “reasonable” programming languages are equivalent. The notion of an algorithm is independent of the computation model.

7.4.1 Algorithm

Informally

- a recipe

- a procedure

- a computer program

Historically

- algorithms have long history in mathematics

- but not precisely defined until 20th century

- informal notions rarely questioned, but insufficient to show a problem has no algorithm.

7.4.2 Information

We define the quantity of information contained in an object to be the size of that object’s smallest representation or description (a precise and unambiguous characterization of the object so that we may recreate it from the description alone).

Minimal length description

Many types of description language can be used to define information. Selecting which language to use affects the characteristics of the definition.

In this class, our description language is based on algorithms.

One way to use algorithms to describe strings is to construct a Turing machine that prints out the string when it is started on a blank tape and then represent that Turing machine itself as a string.

Drawback to this approach:

A Turing machine cannot represent a table of information concisely with its transition function. To represent a string of $n$ bits, you might use $n$ states and $n$ rows in the transition function table. That would result in a description that is excessively long for our purpose.

We describe a binary string $x$ with a Turing machine $M$ and a binary input $w$ to $M$. The length of the description is the combined length of representing $M$ and $w$.

- We write this description with our usual notation for encoding several objects into a single binary string $\langle M,w\rangle$.

- To produce a concise result, we define the string $\langle M,w\rangle$ to be $\langle M\rangle w$

However, we might run into trouble if we directly concatenate $w$ onto the end of $\langle M\rangle$. The point at which $\langle M\rangle$ ends and $w$ begins is not discernible from the description itself. We avoid this problem by ensuring that we can locate the separation between $\langle M\rangle$ and $w$ in $\langle M\rangle w$.
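One common way to make the separation locatable is to write every bit of the machine description twice and then mark its end with the pair `01`. The notes do not say which scheme they use, so treat this as an assumed example; the names `join` and `split` are hypothetical, and `split` assumes a well-formed description.

```python
def join(m_bits, w):
    """Encode <M>w: double each bit of <M>, append the delimiter 01, then w."""
    return "".join(bit * 2 for bit in m_bits) + "01" + w


def split(description):
    """Recover (<M>, w) by reading bit pairs until the 01 delimiter appears."""
    m_bits = []
    i = 0
    while description[i:i + 2] != "01":
        m_bits.append(description[i])  # pairs before the delimiter are 00 or 11
        i += 2
    return "".join(m_bits), description[i + 2:]


encoded = join("101", "0011")
print(encoded)
print(split(encoded))
```

Under this scheme the description has length $2|\langle M\rangle|+2+|w|$: the machine part costs double, while $w$ is stored as-is.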

Let $x$ be a binary string. The minimal description of $x$, written $d(x)$, is the shortest string $\langle M,w\rangle$ where TM $M$ on input $w$ halts with $x$ on its tape. The descriptive complexity of $x$, written $K(x)$, is $$K(x)=|d(x)|$$

- The definition of $K(x)$ is intended to capture our intuition for the amount of information in the string $x$.

Theorem 1

$$\exists c~\forall x~[K(x)\leq|x|+c]$$

This theorem says that the descriptive complexity of a string is at most a fixed constant more than its length. The constant is a universal one, not dependent on the string.

Theorem 2

$$\exists c~\forall x,y~[K(xy)\leq2K(x)+K(y)+c]$$

The cost of combining two descriptions leads to a bound that is greater than the sum of the individual complexities.

7.5 Recursively Enumerable Languages

7.5.1 Enumerability

Let $\Sigma$ be an alphabet and let $L\subseteq\Sigma^*$ be a language. We say that $L$ is enumerable, if there exists a Turing machine $M$, such that for every string $w\in\Sigma^*$, the following holds:

- if $w\in L$, then the computation of $M$, on the input string $w$, terminates in the accept state.

- if $w\notin L$, then either the computation terminates in the reject state or the computation does not terminate.

From the perspective of algorithm

The language $L$ is enumerable, if there exists an algorithm having the following property:

- if $w\in L$, then the algorithm terminates on the input string $w$ and tells us that $w\in L$

- if $w\notin L$, then either

  - the algorithm terminates on the input string $w$ and tells us that $w\notin L$

  - the algorithm does not terminate on the input string $w$, in which case it does not tell us that $w\notin L$

7.5.2 Recursively Enumerable Languages

A language $L$ is recursively enumerable if there exists a TM $M$ such that $L=L(M)$.

A language $L$ over $\Sigma$ is recursive if there exists a TM $M$ such that $L=L(M)$ and $M$ halts on every $w\in\Sigma^*$

There is only a slight difference between recursively enumerable and recursive languages. In the first case we do not require the Turing machine to terminate on every input word.

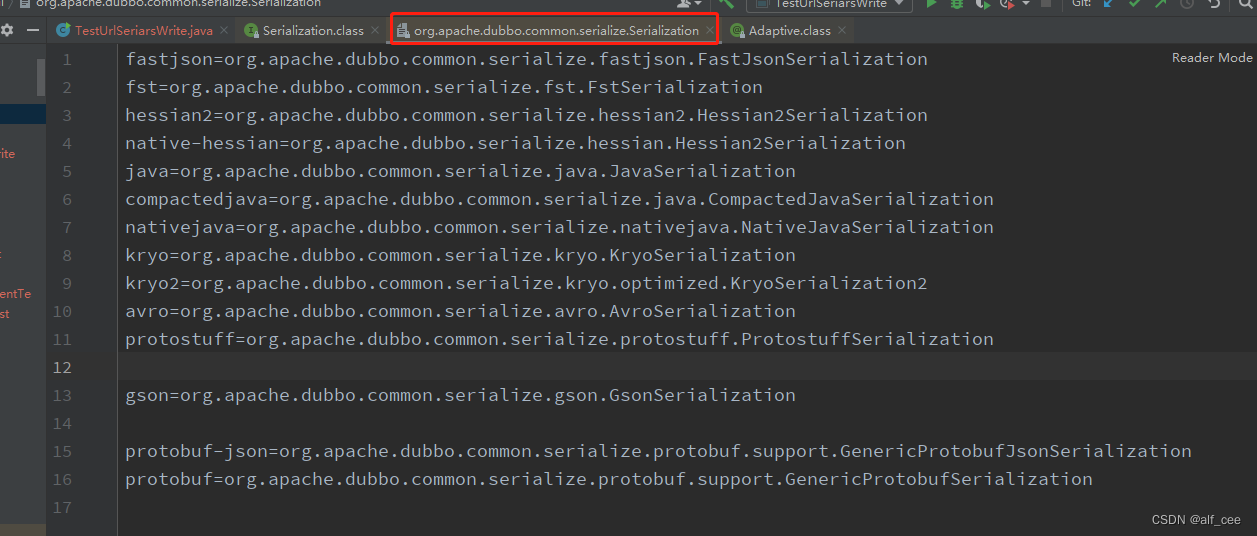

Recursive languages, recursively enumerable languages, and non-recursively-enumerable languages

Enumeration procedure for recursive languages

To enumerate all $w\in\Sigma^*$ in a recursive language $L$:

- Let $M$ be a TM that decides $L$, so $L=L(M)$ and $M$ halts on every input

- Construct a 2-tape TM $M^\prime$: tape 1 will enumerate the strings in $\Sigma^*$, tape 2 will enumerate the strings in $L$.

  - On tape 1 generate the next string $v\in\Sigma^*$

  - Simulate $M$ on $v$ (if $M$ accepts $v$, then write $v$ on tape 2).

Enumeration procedure for r.e languages

To enumerate all $w\in\Sigma^*$ in a recursively enumerable language $L$:

Repeat

- Generate the next string (suppose $k$ strings have been generated: $w_1,w_2,\cdots,w_k$)

- Run $M$ for one step on $w_k$, for two steps on $w_{k-1}$, and so on, up to $k$ steps on $w_1$. If any of the strings are accepted, then write them to tape 2.
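This interleaving (often called dovetailing) can be sketched as a generator. The sketch is illustrative only: `accepts_within(w, steps)` is a hypothetical stand-in for "$M$ accepts $w$ within `steps` computation steps" and must always return, even when $M$ would loop on $w$. The `printed` set avoids writing a string twice, which is a convenience added here.

```python
from itertools import count, islice, product


def all_strings(alphabet):
    """Yield the strings over the alphabet: shorter first, then lexicographic."""
    for length in count(0):
        for combo in product(alphabet, repeat=length):
            yield "".join(combo)


def enumerate_language(accepts_within, alphabet):
    """Yield every string that the step-bounded recognizer eventually accepts."""
    generated, printed = [], set()
    for w in all_strings(alphabet):
        generated.append(w)
        k = len(generated)
        # w_k gets 1 step, w_{k-1} gets 2 steps, ..., w_1 gets k steps
        for i, v in enumerate(generated):
            if v not in printed and accepts_within(v, k - i):
                printed.add(v)
                yield v


def toy(w, steps):
    """Hypothetical recognizer: accepts a, aa, aaa, ... after 3*len(w) steps."""
    return w != "" and set(w) == {"a"} and steps >= 3 * len(w)


print(list(islice(enumerate_language(toy, "ab"), 3)))
```

No single run is ever allowed to go on forever, so a string on which $M$ loops cannot block the strings that come after it.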

7.5.3 Decidability and Undecidability

“Decidable” is a synonym for “recursive.” We tend to refer to languages as “recursive” and problems as “decidable”.

If a language is not recursive, then we call the problem expressed by that language “undecidable”.

Theorem

Every decidable language is enumerable. (Converse is not correct)

7.5.4 The Language $L_{TM}$

The language $$L_{TM}=\{\langle M,w\rangle:M\text{ is a Turing machine that accepts the string }w\}$$ is undecidable

Theorem

The language $L_{TM}$ is enumerable

7.5.5 Enumerator

Let $\Sigma$ be an alphabet and let $L\subset\Sigma^*$ be a language. An enumerator for $L$ is a Turing machine $M$ having the following properties:

- $M$ has a print tape and a print state. During its computation, $M$ writes symbols of $\Sigma$ on the print tape. Each time $M$ enters the print state, the current string on the print tape is sent to the printer and the print tape is made empty.

- At the start of the computation, all tapes are empty and $M$ is in the start state.

- Every string $w$ in $L$ is sent to the printer at least once.

- Every string $w$ that is not in $L$ is never sent to the printer.

Theorem

A language is enumerable if and only if it has an enumerator.

7.6 Complexity Theory

If we can solve a problem $P$, how easy or hard is it to do so?

Counting Resources

We have two ways to measure the “hardness” of a problem:

- Time Complexity: how many time-steps are required in the computation of a problem?

- Space Complexity: how many bits of memory are required for the computation?

7.6.1 Running time

Let $M$ be a Turing machine, and let $w$ be an input string for $M$. We define the running time $f(|w|)$ of $M$ on input $w$ as the number of computation steps made by $M$ on input $w$.

As usual, we denote by $|w|$ the number of symbols in the string $w$.

- The exact running time of most algorithms is complex.

- For large problems, try to use an approximation instead.

- Sometimes, only focus on the “important” part of the running time.

Let $\Sigma$ be an alphabet, let $T:\mathbb{N}_0\rightarrow\mathbb{N}_0$ be a function, let $A\subseteq\Sigma^*$ be a decidable language, and let $F:\Sigma^*\rightarrow\Sigma^*$ be a computable function.

We say that the Turing machine $M$ decides the language $A$ in time $T$, if $$f(|w|)\leq T(|w|)$$ for all strings $w$ in $\Sigma^*$.

We say that the Turing machine $M$ computes the function $F$ in time $T$, if $$f(|w|)\leq T(|w|)$$ for all strings $w$ in $\Sigma^*$.

7.6.2 Asymptotic Notation

We typically measure the computational efficiency of an algorithm as the number of basic operations it performs as a function of its input length.

The computational efficiency can be captured by a function $T$ from the set of natural numbers $\mathbb{N}$ to itself such that $T(n)$ is equal to the maximum number of basic operations that the algorithm performs on inputs of length $n$.

However, this function is sometimes overly dependent on the low-level details of our definition of a basic operation.

Big-O Notation

Given functions $f$ and $g$, where $f,g:\mathbb{N}\rightarrow\mathbb{R}^+$, we say that $$f(n)=O(g(n))$$ if there are two positive constants $c$ and $n_0$ such that $$f(n)\leq c\cdot g(n)\text{ for all }n\geq n_0$$ where $n=|w|$.

We say that $g(n)$ is an asymptotic upper bound on $f(n)$.
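As a worked instance of the definition (the particular function is chosen here only for illustration), take $f(n)=5n^3+2n^2+22n+6$. For $n\geq10$ the lower-order terms satisfy

$$2n^2+22n+6\leq 0.2\,n^3+0.22\,n^3+0.006\,n^3\leq n^3$$

so

$$5n^3+2n^2+22n+6\leq 6n^3\quad\text{for all }n\geq10$$

Choosing $c=6$ and $n_0=10$ therefore shows $f(n)=O(n^3)$.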

Polynomials

Let $$p(n)=a_1n^{k_1}+a_2n^{k_2}+\cdots+a_dn^{k_d}$$ where $k_1>k_2>\cdots>k_d\geq0$. Then

- $p(n)=O(n^{k_1})$

- Also, $p(n)=O(n^r)$ for all $r\geq k_1$

Exponential

Exponential functions like $2^n$ always eventually “overpower” polynomials.

- For all constants $a$ and $k$, the polynomial $f(n)=a\cdot n^k+\cdots$ obeys: $f(n)=O(2^n)$

- For functions in $n$, we have $n^k=O(b^n)$ for all positive constants $k$ and $b>1$

Logarithms

$$f(n)=O(\log n)$$

Little-o Notation

Given two functions $f$ and $g$, where $f,g:\mathbb{N}\rightarrow\mathbb{R}^+$, we say that $$f(n)=o(g(n))$$ if $$\lim\limits_{n\rightarrow\infty}\frac{f(n)}{g(n)}=0$$

Big-O notation is about “asymptotically less than or equal to”. Little-o is about “asymptotically much smaller than”.