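For 3D vision, you need a clear picture of how the world coordinate system maps to the pixel coordinate system. This post works through that mapping with experiments in Python.

For the mathematical derivation of the camera intrinsic and extrinsic parameters, see my earlier posts 《【AI数学】相机成像之内参数》 and 《【AI数学】相机成像之外参数》.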

Starting the experiment

First, fix the camera's intrinsic and extrinsic parameters:

intri = [[1111.0, 0.0, 400.0],
         [0.0, 1111.0, 400.0],
         [0.0, 0.0, 1.0]]

extri = [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
         [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
         [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
         [0.0, 0.0, 0.0, 1.0]]

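Let's pick a point in the world coordinate system, say p1=[0, 0, 0], project it into the pixel coordinate system, and look at where it lands.

First, we transform it into the camera coordinate system (the extrinsic matrix extri alone is enough for this step). Note that the code treats extri as the camera pose, i.e. a camera-to-world matrix [R | T], so the world-to-camera transform is R^T (p - T):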

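import numpy as np

# 4x4 camera pose; the code below treats it as camera-to-world
extri = np.array(
    [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
     [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
     [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
     [0.0, 0.0, 0.0, 1.0]])

p1 = np.array([0, 0, 0])  # point in world coordinates
R = extri[:3, :3]  # rotation
T = extri[:3, -1]  # translation (camera position in world coordinates)
cam_p1 = np.dot(p1 - T, R)  # row-vector form of R^T @ (p1 - T)
print(cam_p1)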
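The same step can also be written with a single 4x4 matrix and homogeneous coordinates. The sketch below is only an illustration, assuming (as the code above does) that the matrix is a camera-to-world pose; the names `c2w`, `w2c`, `cam_h` and `cam_direct` are mine and not part of the original experiment.

```python
import numpy as np

# Camera pose from the experiment above (assumed camera-to-world).
c2w = np.array(
    [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
     [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
     [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
     [0.0, 0.0, 0.0, 1.0]])
R, T = c2w[:3, :3], c2w[:3, -1]

# Closed-form world-to-camera matrix for a rigid pose: [R^T | -R^T T].
w2c = np.eye(4)
w2c[:3, :3] = R.T
w2c[:3, -1] = -R.T @ T

p1_h = np.array([0.0, 0.0, 0.0, 1.0])   # world origin in homogeneous form
cam_h = (w2c @ p1_h)[:3]                # 4x4 matrix route
cam_direct = np.dot(p1_h[:3] - T, R)    # route used in the experiment

print(cam_h)
print(np.allclose(cam_h, cam_direct))
```

Both routes give the same camera-space point. Its z component is negative (about -4.03), which is a hint that this camera looks along its own -z axis.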

Next, we convert from the camera coordinate system to the pixel coordinate system:

intri = [[1111.0, 0.0, 400.0],
         [0.0, 1111.0, 400.0],
         [0.0, 0.0, 1.0]]

# Formula (this camera looks along -z, so z is negative for visible points
# and the x term carries a minus sign):
#   x_im = -f_x * x / z + offset_x
#   y_im =  f_y * y / z + offset_y
screen_p1 = np.array([-cam_p1[0] * intri[0][0] / cam_p1[2] + intri[0][2],
                      cam_p1[1] * intri[1][1] / cam_p1[2] + intri[1][2]])
print(screen_p1)
# [400.00057322 400.00211168]

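So after passing through this set of intrinsic and extrinsic matrices, the world origin [0, 0, 0] is projected to the screen position [400, 400]. Our image size is [800, 800], so this is exactly the center of the image.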

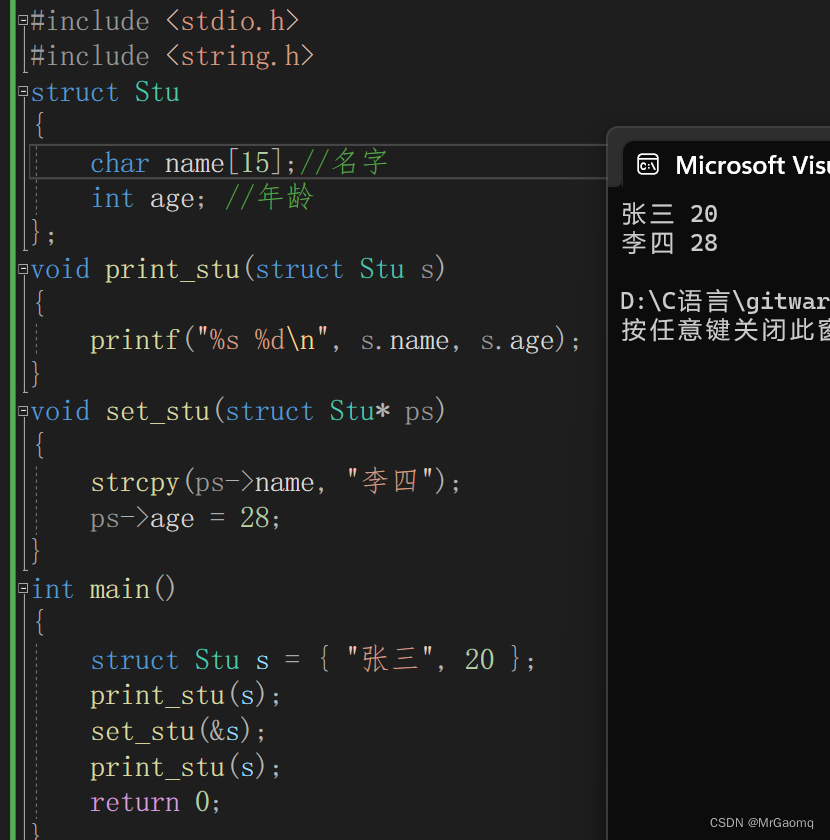
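The minus sign on the x term may look odd next to the textbook pinhole formula. One way to read it: flip the y and z axes of the camera-space point (from -z forward, y up to +z forward, y down) and then apply the intrinsic matrix in the usual way. The sketch below compares the two routes; the function names and the test point are hypothetical and only serve the illustration.

```python
import numpy as np

K = np.array([[1111.0, 0.0, 400.0],
              [0.0, 1111.0, 400.0],
              [0.0, 0.0, 1.0]])

def project_with_matrix(p_cam, K):
    """Flip y and z, then apply K and divide by depth."""
    p_flipped = p_cam * np.array([1.0, -1.0, -1.0])
    uvw = K @ p_flipped
    return uvw[:2] / uvw[2]

def project_with_formula(p_cam, K):
    """Per-component formula used in the experiment above."""
    x, y, z = p_cam
    return np.array([-x * K[0, 0] / z + K[0, 2],
                     y * K[1, 1] / z + K[1, 2]])

# Hypothetical camera-space point in front of the camera (z < 0).
p_cam = np.array([0.5, -0.25, -4.0])
uv_matrix = project_with_matrix(p_cam, K)
uv_formula = project_with_formula(p_cam, K)
print(uv_matrix, uv_formula)
```

The two results agree, so the signs in the formula are just the axis flip folded into the arithmetic.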

Here is the same experiment rewritten in an object-oriented style, which is easier to call:

import numpy as np


class CamTrans:
    def __init__(self, intri, extri, h=None, w=None):
        self.intri = intri
        self.extri = np.asarray(extri)
        # default image size: principal point assumed to be the image center
        self.h = int(2 * intri[1][2]) if h is None else h
        self.w = int(2 * intri[0][2]) if w is None else w
        self.R = self.extri[:3, :3]
        self.T = self.extri[:3, -1]
        self.focal_x = intri[0][0]
        self.focal_y = intri[1][1]
        self.offset_x = intri[0][2]
        self.offset_y = intri[1][2]

    def world2cam(self, p):
        # row-vector form of R^T @ (p - T)
        return np.dot(np.asarray(p) - self.T, self.R)

    def cam2screen(self, p):
        """
        x_im = -f_x * x / z + offset_x
        y_im =  f_y * y / z + offset_y
        (the camera looks along -z, so z < 0 for visible points)
        """
        x, y, z = p
        return [-x * self.focal_x / z + self.offset_x, y * self.focal_y / z + self.offset_y]

    def world2screen(self, p):
        return self.cam2screen(self.world2cam(p))


if __name__ == "__main__":
    p = [0, 0, 0]  # world origin
    intri = [[1111.0, 0.0, 400.0],
             [0.0, 1111.0, 400.0],
             [0.0, 0.0, 1.0]]
    extri = np.array(
        [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
         [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
         [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
         [0.0, 0.0, 0.0, 1.0]])
    # another camera
    extri1 = np.array(
        [[-3.0373e-01, -8.6047e-01, 4.0907e-01, 1.6490e+00],
         [9.5276e-01, -2.7431e-01, 1.3041e-01, 5.2569e-01],
         [-7.4506e-09, 4.2936e-01, 9.0313e-01, 3.6407e+00],
         [0.0, 0.0, 0.0, 1.0]])

    ct = CamTrans(intri, extri)
    print(ct.world2screen(p))
    ct1 = CamTrans(intri, extri1)
    print(ct1.world2screen(p))

The experiment shows that the world origin lands on the center pixel (400, 400) in both cameras, which confirms that our coordinate projection is correct~

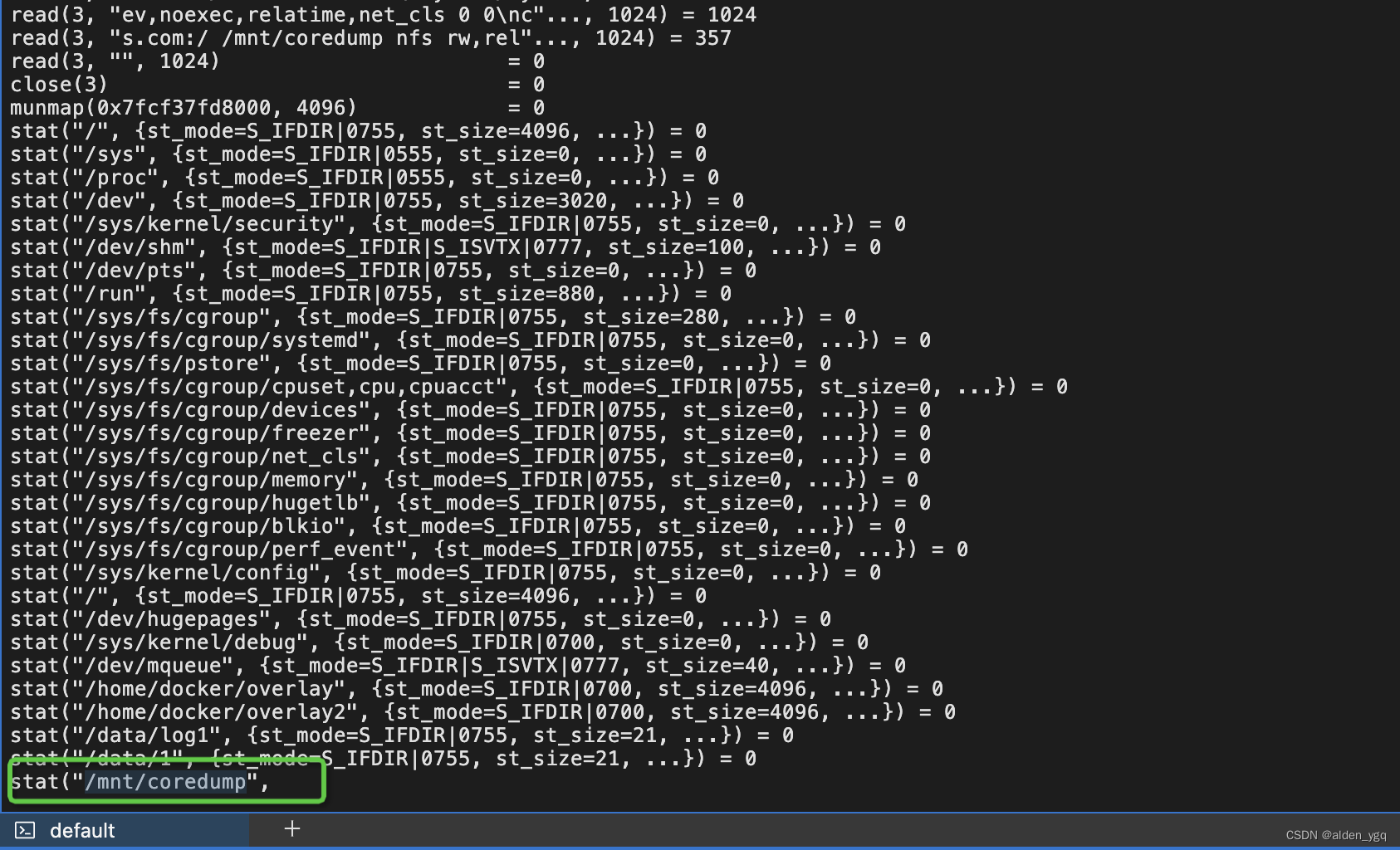
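Why does the origin land on the center for both cameras? A plausible reading is that both cameras are aimed at the world origin, so the origin sits on each camera's viewing axis. The sketch below checks this directly from the two pose matrices, assuming the -z-forward convention used in the projection code; `axis_distance_to_origin` and `depth_of_origin` are helper names I made up for the check.

```python
import numpy as np

pose0 = np.array(
    [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
     [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
     [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
     [0.0, 0.0, 0.0, 1.0]])
pose1 = np.array(
    [[-3.0373e-01, -8.6047e-01, 4.0907e-01, 1.6490e+00],
     [9.5276e-01, -2.7431e-01, 1.3041e-01, 5.2569e-01],
     [-7.4506e-09, 4.2936e-01, 9.0313e-01, 3.6407e+00],
     [0.0, 0.0, 0.0, 1.0]])

def forward_axis(pose):
    """Unit viewing direction in world space (camera looks along its -z)."""
    f = -pose[:3, 2]
    return f / np.linalg.norm(f)

def axis_distance_to_origin(pose):
    """Distance from the world origin to the line T + s * forward."""
    T = pose[:3, -1]
    return np.linalg.norm(np.cross(-T, forward_axis(pose)))

def depth_of_origin(pose):
    """Signed distance of the origin along the viewing direction."""
    T = pose[:3, -1]
    return np.dot(-T, forward_axis(pose))

for pose in (pose0, pose1):
    print(axis_distance_to_origin(pose), depth_of_origin(pose))
```

For both poses the distance to the viewing axis is essentially zero and the depth is positive (about 4.03), so the origin lies straight ahead of each camera and has to project onto the principal point.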

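Ray projection experiment

With the two matrices intri and extri, we can also go the other way and cast rays from pixel coordinates into world coordinates, as shown in the figure:

Connecting the optical center to a specific pixel determines a ray. This experiment verifies that sample points taken along the same ray also project back to the same pixel.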

import numpy as np
import torch


def get_rays(h, w, K, c2w):
    i, j = torch.meshgrid(torch.linspace(0, w - 1, w),
                          torch.linspace(0, h - 1, h))
    # pytorch's meshgrid has indexing='ij', so transpose to get (row, col) layout
    i = i.t()
    j = j.t()
    # directions in the camera frame (camera looks along -z, y points up)
    dirs = torch.stack([(i - K[0][2]) / K[0][0],
                        -(j - K[1][2]) / K[1][1],
                        -torch.ones_like(i)],
                       -1)
    # camera to world
    # Rotate ray directions from camera frame to the world frame
    # rays_d = torch.sum(dirs[..., np.newaxis, :] * c2w[:3, :3], -1)
    rays_d = torch.sum(dirs[..., None, :] * c2w[:3, :3], -1)
    # dot product, equals to: [c2w.dot(dir) for dir in dirs]
    # Translate camera frame's origin to the world frame. It is the origin of all rays.
    rays_o = c2w[:3, -1].expand(rays_d.shape)
    return rays_o, rays_d


def _main():
    intri = [[1111.0, 0.0, 400.0],
             [0.0, 1111.0, 400.0],
             [0.0, 0.0, 1.0]]
    extri = np.array(
        [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
         [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
         [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
         [0.0, 0.0, 0.0, 1.0]])
    h, w = 800, 800
    pose = torch.tensor(extri, dtype=torch.float32)  # get_rays expects a tensor
    rays_o, rays_d = get_rays(h, w, intri, pose)  # all rays
    # rays_o means origins, rays_d means directions
    ray_o = rays_o[400][400]  # choose the ray through pixel (400, 400)
    ray_d = rays_d[400][400]
    z_vals = torch.tensor([2., 6.])  # pick 2 z-values
    # sampling 2 points from the ray
    pts = ray_o[..., None, :] + ray_d[..., None, :] * z_vals[..., :, None]
    print(pts)

    # CamTrans is the class defined in the previous listing
    ct = CamTrans(intri, extri)
    print(ct.world2screen(pts[0].numpy()))
    print(ct.world2screen(pts[1].numpy()))
    # [399.99682432604334, 400.010187052666]
    # [399.99608371858295, 399.992518381194]


if __name__ == "__main__":
    _main()

The experiment shows that sample points on the same ray project back onto the same 2D point.
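The check above uses the center pixel, where almost any sign mistake would go unnoticed. Below is a small NumPy-only sketch of the same round trip for an off-center pixel. It assumes the same conventions as `get_rays` (pixel column i, row j, camera looking along -z); `pixel_ray`, `world2screen` and the pixel (123, 456) are my own choices for illustration.

```python
import numpy as np

K = np.array([[1111.0, 0.0, 400.0],
              [0.0, 1111.0, 400.0],
              [0.0, 0.0, 1.0]])
pose = np.array(
    [[-9.9990e-01, 4.1922e-03, -1.3346e-02, -5.3798e-02],
     [-1.3989e-02, -2.9966e-01, 9.5394e-01, 3.8455e+00],
     [-4.6566e-10, 9.5404e-01, 2.9969e-01, 1.2081e+00],
     [0.0, 0.0, 0.0, 1.0]])
R, T = pose[:3, :3], pose[:3, -1]

def pixel_ray(i, j):
    """World-space origin and direction of the ray through column i, row j."""
    d_cam = np.array([(i - K[0, 2]) / K[0, 0],
                      -(j - K[1, 2]) / K[1, 1],
                      -1.0])
    return T, R @ d_cam

def world2screen(p):
    """World point -> camera frame -> pixel, as in the experiments above."""
    x, y, z = np.dot(p - T, R)
    return np.array([-x * K[0, 0] / z + K[0, 2],
                     y * K[1, 1] / z + K[1, 2]])

i, j = 123, 456  # hypothetical off-center pixel
ray_o, ray_d = pixel_ray(i, j)
# Sample two points at different distances along the ray and project them back.
reprojected = [world2screen(ray_o + t * ray_d) for t in (2.0, 6.0)]
for uv in reprojected:
    print(uv)
```

Both samples should come back close to (123, 456), up to the small error caused by the rounded entries of the pose matrix.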
