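Most articles online that analyze LLM parameters are fairly coarse-grained, which is not much help when you need to deploy an LLM precisely. Here I record my own process of working through the parameters of an LLM.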

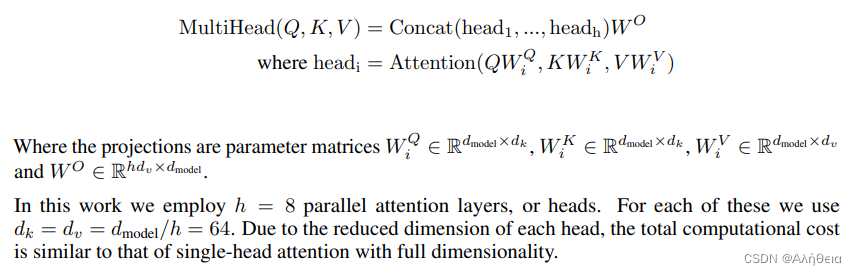

Let's start with Q, K, and V, beginning with the original Transformer paper.

In other words, when h (heads) = 1, by default (that is, with $d_k = d_v = d_{model}/h$) $W_i^Q$, $W_i^K$, and $W_i^V$ are all 2-D square matrices of dimension $d_{model} \times d_{model}$.
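For reference, the multi-head attention definitions from the Transformer paper that this statement relies on can be written out as follows. This is a transcription in the paper's own symbols; setting $h = 1$ in the last line gives $d_k = d_v = d_{model}$, which is where the square matrices come from.

```latex
\begin{aligned}
\mathrm{MultiHead}(Q, K, V) &= \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^O \\
\mathrm{head}_i &= \mathrm{Attention}(Q W_i^Q,\; K W_i^K,\; V W_i^V) \\
\mathrm{Attention}(Q, K, V) &= \mathrm{softmax}\!\left(\frac{Q K^T}{\sqrt{d_k}}\right) V \\
W_i^Q \in \mathbb{R}^{d_{model} \times d_k},\quad
W_i^K &\in \mathbb{R}^{d_{model} \times d_k},\quad
W_i^V \in \mathbb{R}^{d_{model} \times d_v},\quad
W^O \in \mathbb{R}^{h d_v \times d_{model}} \\
d_k &= d_v = d_{model} / h
\end{aligned}
```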

Now compare this with the llama source code (https://github.com/facebookresearch/llama/blob/main/llama/model.py):

```python
class ModelArgs:
    dim: int = 4096
    n_layers: int = 32
    n_heads: int = 32
    n_kv_heads: Optional[int] = None
    vocab_size: int = -1  # defined later by tokenizer
    multiple_of: int = 256  # make SwiGLU hidden layer size multiple of large power of 2
    ffn_dim_multiplier: Optional[float] = None
    norm_eps: float = 1e-5

    max_batch_size: int = 32
    max_seq_len: int = 2048

# ...

class Attention(nn.Module):
    """Multi-head attention module."""
    def __init__(self, args: ModelArgs):
        """
        Initialize the Attention module.

        Args:
            args (ModelArgs): Model configuration parameters.

        Attributes:
            n_kv_heads (int): Number of key and value heads.
            n_local_heads (int): Number of local query heads.
            n_local_kv_heads (int): Number of local key and value heads.
            n_rep (int): Number of repetitions for local heads.
            head_dim (int): Dimension size of each attention head.
            wq (ColumnParallelLinear): Linear transformation for queries.
            wk (ColumnParallelLinear): Linear transformation for keys.
            wv (ColumnParallelLinear): Linear transformation for values.
            wo (RowParallelLinear): Linear transformation for output.
            cache_k (torch.Tensor): Cached keys for attention.
            cache_v (torch.Tensor): Cached values for attention.

        """
        super().__init__()
        self.n_kv_heads = args.n_heads if args.n_kv_heads is None else args.n_kv_heads
        model_parallel_size = fs_init.get_model_parallel_world_size()
        self.n_local_heads = args.n_heads // model_parallel_size
        self.n_local_kv_heads = self.n_kv_heads // model_parallel_size
        self.n_rep = self.n_local_heads // self.n_local_kv_heads
        self.head_dim = args.dim // args.n_heads
```

Plugging in the defaults gives:

`self.n_kv_heads = h = 32`

`self.head_dim = 4096 / 32 = 128`
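The same arithmetic can be checked without torch or fairscale. The sketch below just mirrors the integer computations in `Attention.__init__`; the function name `attention_head_dims` is my own, and `model_parallel_size=1` (a single device) is an assumption standing in for `fs_init.get_model_parallel_world_size()`.

```python
from typing import Optional


def attention_head_dims(
    dim: int,
    n_heads: int,
    n_kv_heads: Optional[int] = None,
    model_parallel_size: int = 1,  # assumption: single device, no model parallelism
) -> dict:
    """Reproduce the integer arithmetic from Attention.__init__ (hypothetical helper)."""
    n_kv = n_heads if n_kv_heads is None else n_kv_heads
    n_local_heads = n_heads // model_parallel_size
    n_local_kv_heads = n_kv // model_parallel_size
    return {
        "n_kv_heads": n_kv,
        "n_local_heads": n_local_heads,
        "n_local_kv_heads": n_local_kv_heads,
        "n_rep": n_local_heads // n_local_kv_heads,
        "head_dim": dim // n_heads,
    }


# Defaults from ModelArgs: dim=4096, n_heads=32, n_kv_heads=None
dims = attention_head_dims(dim=4096, n_heads=32)
print(dims["n_kv_heads"], dims["head_dim"])  # 32 128
```

With `n_kv_heads` left as `None`, every query head has its own key/value head, so `n_rep` comes out as 1.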

所以

W

i

Q

W_i^Q

WiQ、

W

i

K

W_i^K

WiK、

W

i

V

W_i^V

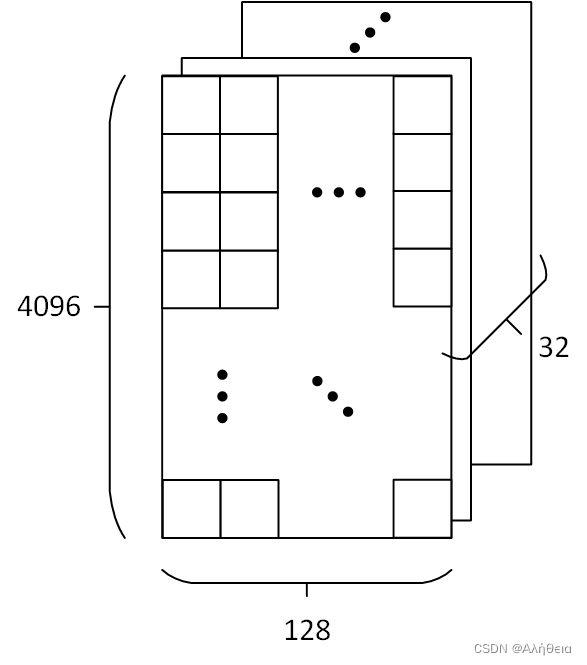

WiV 大小都为(4096, 128).
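Since deployment is mostly about counting weights, it helps to turn these per-head sizes into a per-layer total. The sketch below assumes that `wq`, `wk`, and `wv` each stack all heads' (4096, 128) matrices side by side, that `wo` maps the concatenated heads back to `dim`, and that none of them has a bias term. Those layer definitions are not part of the excerpt above, so treat them as my assumption about the rest of the file. Shapes are written as (in, out); the helper names are my own.

```python
from typing import Optional


def attention_weight_shapes(dim: int, n_heads: int, n_kv_heads: Optional[int] = None) -> dict:
    """(in, out) shapes of the four attention projections, assuming no bias."""
    n_kv = n_heads if n_kv_heads is None else n_kv_heads
    head_dim = dim // n_heads
    return {
        "wq": (dim, n_heads * head_dim),  # n_heads matrices of (dim, head_dim)
        "wk": (dim, n_kv * head_dim),
        "wv": (dim, n_kv * head_dim),
        "wo": (n_heads * head_dim, dim),
    }


def count_params(shapes: dict) -> int:
    """Total number of weights across all listed matrices."""
    return sum(rows * cols for rows, cols in shapes.values())


shapes = attention_weight_shapes(dim=4096, n_heads=32)
per_head = 4096 * 128
print(per_head)              # 524288 weights in one W_i^Q
print(shapes["wq"])          # (4096, 4096)
print(count_params(shapes))  # 67108864 attention weights per layer
```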

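For an input of n tokens, each head's Q, K, and V have size (n, 128). $Q \times K^T$ therefore has size (n, n); dividing by the scale factor and applying softmax leaves that unchanged, and multiplying by $V$ brings the size back to (n, 128). With n = 4096 these are (4096, 4096) and (4096, 128). Attention does not change the size (in the default $d_k = d_v$ case).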

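After Concat, the separate heads are merged again, giving size (n, 4096) for n tokens, which is a (4096, 4096) square matrix when n = 4096. The $W^O$ fully connected layer, whose weight is itself (4096, 4096), leaves that size unchanged.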
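The (4096, 4096) figures for the scores and the output correspond to an input of exactly 4096 tokens; the score matrix is really (sequence length, sequence length). A minimal shape trace in plain Python, with the sequence length as a free parameter, makes that visible. `matmul_shape`, `attention_shape_trace`, and `seq_len` are names of my own, and only shapes are tracked, not values.

```python
def matmul_shape(a: tuple, b: tuple) -> tuple:
    """Shape of A @ B for 2-D shapes a = (m, k) and b = (k, n)."""
    assert a[1] == b[0], f"inner dimensions differ: {a} vs {b}"
    return (a[0], b[1])


def attention_shape_trace(seq_len: int, d_model: int, n_heads: int) -> dict:
    """Follow tensor shapes through one multi-head attention layer (single sequence)."""
    head_dim = d_model // n_heads
    x = (seq_len, d_model)                   # input activations
    w_head = (d_model, head_dim)             # one W_i^Q / W_i^K / W_i^V
    q = matmul_shape(x, w_head)
    k = matmul_shape(x, w_head)
    v = matmul_shape(x, w_head)
    scores = matmul_shape(q, (k[1], k[0]))   # Q @ K^T; scale and softmax keep the shape
    head_out = matmul_shape(scores, v)
    concat = (head_out[0], head_out[1] * n_heads)    # heads joined along the last axis
    out = matmul_shape(concat, (d_model, d_model))   # multiply by W^O
    return {"q": q, "scores": scores, "head_out": head_out, "concat": concat, "out": out}


trace = attention_shape_trace(seq_len=4096, d_model=4096, n_heads=32)
print(trace["scores"], trace["head_out"], trace["out"])
# (4096, 4096) (4096, 128) (4096, 4096)

shorter = attention_shape_trace(seq_len=2048, d_model=4096, n_heads=32)
print(shorter["scores"], shorter["out"])
# (2048, 2048) (2048, 4096)
```

The second call uses `max_seq_len = 2048` from `ModelArgs`: the weight matrices stay the same, but the activations shrink with the sequence length.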

Next, the feed-forward layer. According to the source code:

```python
class TransformerBlock(nn.Module):
    def __init__(self, layer_id: int, args: ModelArgs):
        """
        Initialize a TransformerBlock.

        Args:
            layer_id (int): Identifier for the layer.
            args (ModelArgs): Model configuration parameters.

        Attributes:
            n_heads (int): Number of attention heads.
            dim (int): Dimension size of the model.
            head_dim (int): Dimension size of each attention head.
            attention (Attention): Attention module.
            feed_forward (FeedForward): FeedForward module.
            layer_id (int): Identifier for the layer.
            attention_norm (RMSNorm): Layer normalization for attention output.
            ffn_norm (RMSNorm): Layer normalization for feedforward output.

        """
        super().__init__()
        self.n_heads = args.n_heads
        self.dim = args.dim
        self.head_dim = args.dim // args.n_heads
        self.attention = Attention(args)
        self.feed_forward = FeedForward(
            dim=args.dim,
            hidden_dim=4 * args.dim,
            multiple_of=args.multiple_of,
            ffn_dim_multiplier=args.ffn_dim_multiplier,
        )
        self.layer_id = layer_id
        self.attention_norm = RMSNorm(args.dim, eps=args.norm_eps)
        self.ffn_norm = RMSNorm(args.dim, eps=args.norm_eps)

    def forward(
        self,
        x: torch.Tensor,
        start_pos: int,
        freqs_cis: torch.Tensor,
        mask: Optional[torch.Tensor],
    ):
        """
        Perform a forward pass through the TransformerBlock.

        Args:
            x (torch.Tensor): Input tensor.
            start_pos (int): Starting position for attention caching.
            freqs_cis (torch.Tensor): Precomputed cosine and sine frequencies.
            mask (torch.Tensor, optional): Masking tensor for attention. Defaults to None.

        Returns:
            torch.Tensor: Output tensor after applying attention and feedforward layers.

        """
        h = x + self.attention.forward(
            self.attention_norm(x), start_pos, freqs_cis, mask
        )
        out = h + self.feed_forward.forward(self.ffn_norm(h))
        return out
```

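After the multi-head attention layer come the residual addition and the norm (RMSNorm), and then the feed_forward fully connected layers. The first dimension of the fully connected layer is args.dim = 4096, and the second (hidden_dim) is 4 * args.dim = 4 * 4096 = 16384 (this part is still an open question for me).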
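One likely source of the open question: 16384 is only the value passed into `FeedForward`. As far as I can tell from the same model.py, `FeedForward.__init__` then scales it to two thirds (for SwiGLU), applies `ffn_dim_multiplier` if set, and rounds up to a multiple of `multiple_of`. That class is not part of the excerpt above, so the sketch below is my reconstruction of that arithmetic rather than a quote, and the three-matrix, no-bias layout used for the parameter count is likewise an assumption. The function names are my own.

```python
from typing import Optional


def ffn_hidden_dim(
    dim: int,
    multiple_of: int = 256,
    ffn_dim_multiplier: Optional[float] = None,
) -> int:
    """Effective FFN hidden size, assuming the 2/3 scaling and round-up described above."""
    hidden_dim = 4 * dim                  # value passed in by TransformerBlock
    hidden_dim = int(2 * hidden_dim / 3)  # SwiGLU adjustment (assumed from FeedForward)
    if ffn_dim_multiplier is not None:
        hidden_dim = int(ffn_dim_multiplier * hidden_dim)
    # round up to the next multiple of `multiple_of`
    return multiple_of * ((hidden_dim + multiple_of - 1) // multiple_of)


def ffn_param_count(dim: int, hidden_dim: int) -> int:
    """Weights in a SwiGLU FFN with three bias-free matrices (assumed layout)."""
    return 3 * dim * hidden_dim


hidden = ffn_hidden_dim(dim=4096)
print(hidden)                         # 11008
print(ffn_param_count(4096, hidden))  # 135266304
```

Under these assumptions the second dimension of the feed-forward layer is 11008 rather than 16384 for `dim = 4096`.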
